
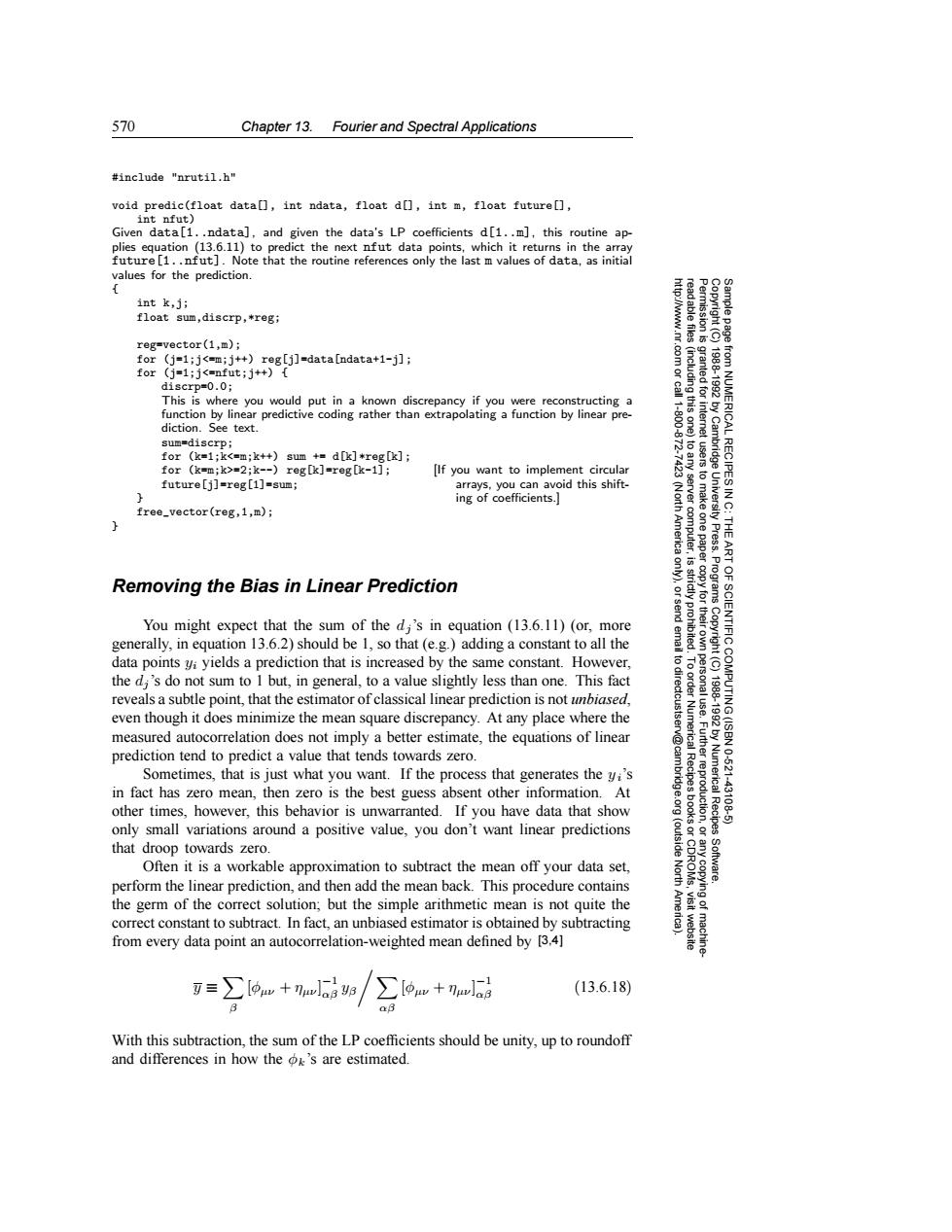

570 Chapter 13. Fourier and Spectral Applications

Sample page from NUMERICAL RECIPES IN C: THE ART OF SCIENTIFIC COMPUTING (ISBN 0-521-43108-5). Copyright (C) 1988-1992 by Cambridge University Press. Programs Copyright (C) 1988-1992 by Numerical Recipes Software. Permission is granted for internet users to make one paper copy for their own personal use. Further reproduction, or any copying of machine-readable files (including this one) to any server computer, is strictly prohibited. To order Numerical Recipes books or CDROMs, visit website http://www.nr.com or call 1-800-872-7423 (North America only), or send email to directcustserv@cambridge.org (outside North America).

```c
#include "nrutil.h"

void predic(float data[], int ndata, float d[], int m, float future[], int nfut)
/* Given data[1..ndata], and given the data's LP coefficients d[1..m], this routine
   applies equation (13.6.11) to predict the next nfut data points, which it returns
   in the array future[1..nfut]. Note that the routine references only the last m
   values of data, as initial values for the prediction. */
{
	int k,j;
	float sum,discrp,*reg;

	reg=vector(1,m);
	for (j=1;j<=m;j++) reg[j]=data[ndata+1-j];
	for (j=1;j<=nfut;j++) {
		discrp=0.0;
		/* This is where you would put in a known discrepancy if you were
		   reconstructing a function by linear predictive coding rather than
		   extrapolating a function by linear prediction. See text. */
		sum=discrp;
		for (k=1;k<=m;k++) sum += d[k]*reg[k];
		/* If you want to implement circular arrays, you can avoid this
		   shifting of coefficients. */
		for (k=m;k>=2;k--) reg[k]=reg[k-1];
		future[j]=reg[1]=sum;
	}
	free_vector(reg,1,m);
}
```

Removing the Bias in Linear Prediction

You might expect that the sum of the $d_j$'s in equation (13.6.11) (or, more generally, in equation 13.6.2) should be 1, so that (e.g.) adding a constant to all the data points $y_i$ yields a prediction that is increased by the same constant. However, the $d_j$'s do not sum to 1 but, in general, to a value slightly less than one. This fact reveals a subtle point: the estimator of classical linear prediction is not unbiased, even though it does minimize the mean square discrepancy. At any place where the measured autocorrelation does not imply a better estimate, the equations of linear prediction yield a prediction that tends towards zero.

Sometimes, that is just what you want. If the process that generates the $y_i$'s in fact has zero mean, then zero is the best guess absent other information. At other times, however, this behavior is unwarranted. If you have data that show only small variations around a positive value, you don't want linear predictions that droop towards zero.

Often it is a workable approximation to subtract the mean off your data set, perform the linear prediction, and then add the mean back. This procedure contains the germ of the correct solution; but the simple arithmetic mean is not quite the correct constant to subtract.
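The subtract, predict, and restore approximation can be sketched in C as follows. This is a minimal illustration, not part of the book's library: the names `lp_predict` and `lp_predict_demeaned` are hypothetical, the arrays are zero-based (unlike `predic`), and the coefficients `d` are taken as given. In practice they would be fitted to the mean-subtracted data rather than to the raw data.

```c
#include <assert.h>
#include <stdlib.h>

/* Zero-based restatement of the predic recurrence (hypothetical helper):
   each new point is sum_k d[k] * (k-th most recent value).
   Returns 0 on success, -1 if memory allocation fails. */
int lp_predict(const double *data, int ndata, const double *d, int m,
               double *future, int nfut)
{
    assert(m >= 1 && ndata >= m && nfut >= 0);
    double *reg = malloc((size_t)m * sizeof *reg);
    if (reg == NULL) return -1;

    /* reg[0] holds the most recent value, reg[m-1] the oldest one used. */
    for (int k = 0; k < m; k++) reg[k] = data[ndata - 1 - k];

    for (int j = 0; j < nfut; j++) {
        double sum = 0.0;
        for (int k = 0; k < m; k++) sum += d[k] * reg[k];
        for (int k = m - 1; k >= 1; k--) reg[k] = reg[k - 1];
        future[j] = reg[0] = sum;
    }
    free(reg);
    return 0;
}

/* Subtract the arithmetic mean, predict on the residuals, add the mean back.
   Assumption: d was fitted to the mean-subtracted series. */
int lp_predict_demeaned(const double *data, int ndata, const double *d, int m,
                        double *future, int nfut)
{
    assert(ndata >= 1);
    double mean = 0.0;
    for (int i = 0; i < ndata; i++) mean += data[i];
    mean /= ndata;

    double *shifted = malloc((size_t)ndata * sizeof *shifted);
    if (shifted == NULL) return -1;
    for (int i = 0; i < ndata; i++) shifted[i] = data[i] - mean;

    int status = lp_predict(shifted, ndata, d, m, future, nfut);
    free(shifted);
    if (status != 0) return status;

    for (int j = 0; j < nfut; j++) future[j] += mean;
    return 0;
}
```

With a single coefficient `d[0] = 0.9` and constant data equal to 10, `lp_predict` produces a sequence that decays towards zero, while `lp_predict_demeaned` stays at 10, which is the drooping behavior and its approximate cure described above.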
In fact, an unbiased estimator is obtained by subtracting from every data point an autocorrelation-weighted mean defined by [3,4]

$$
\bar{y} \equiv \frac{\sum_{\beta} \left[\phi_{\mu\nu} + \eta_{\mu\nu}\right]^{-1}_{\alpha\beta}\, y_\beta}{\sum_{\alpha\beta} \left[\phi_{\mu\nu} + \eta_{\mu\nu}\right]^{-1}_{\alpha\beta}} \tag{13.6.18}
$$

With this subtraction, the sum of the LP coefficients should be unity, up to roundoff and differences in how the $\phi_k$'s are estimated.
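One way to evaluate a weighted mean of this form without building the inverse matrix is to solve a single linear system. The sketch below is an illustration under stated assumptions, not the book's routine: the function names are hypothetical, the caller supplies the already-summed matrix $\phi + \eta$ in row-major order, the matrix is assumed symmetric, and the numerator is assumed to be summed over $\alpha$ as well as $\beta$. Under those assumptions, if $w$ solves $(\phi + \eta)\,w = \mathbf{1}$, then $\bar{y} = \sum_\beta w_\beta y_\beta / \sum_\alpha w_\alpha$.

```c
#include <assert.h>
#include <stdlib.h>

static double absval(double x) { return x < 0.0 ? -x : x; }

/* Solve a*x = b by Gaussian elimination with partial pivoting.
   a is n*n row-major and is destroyed; b is overwritten with x.
   Returns 0 on success, -1 if a zero pivot is encountered. */
static int solve_linear(double *a, double *b, int n)
{
    for (int col = 0; col < n; col++) {
        int piv = col;
        for (int r = col + 1; r < n; r++)
            if (absval(a[r * n + col]) > absval(a[piv * n + col])) piv = r;
        if (a[piv * n + col] == 0.0) return -1;
        if (piv != col) {
            for (int c = 0; c < n; c++) {
                double t = a[col * n + c];
                a[col * n + c] = a[piv * n + c];
                a[piv * n + c] = t;
            }
            double t = b[col]; b[col] = b[piv]; b[piv] = t;
        }
        for (int r = col + 1; r < n; r++) {
            double f = a[r * n + col] / a[col * n + col];
            for (int c = col; c < n; c++) a[r * n + c] -= f * a[col * n + c];
            b[r] -= f * b[col];
        }
    }
    for (int r = n - 1; r >= 0; r--) {           /* back substitution */
        double s = b[r];
        for (int c = r + 1; c < n; c++) s -= a[r * n + c] * b[c];
        b[r] = s / a[r * n + r];
    }
    return 0;
}

/* Weighted mean of y[0..n-1] with weights w solving (phi+eta) w = 1.
   phi_plus_eta: symmetric n*n matrix, row-major (assumption).
   Returns 0 on success, -1 on allocation failure or a degenerate system. */
int lp_weighted_mean(const double *phi_plus_eta, const double *y, int n,
                     double *ybar)
{
    assert(n >= 1);
    double *a = malloc((size_t)n * (size_t)n * sizeof *a);
    double *w = malloc((size_t)n * sizeof *w);
    if (a == NULL || w == NULL) { free(a); free(w); return -1; }

    for (int i = 0; i < n * n; i++) a[i] = phi_plus_eta[i];
    for (int i = 0; i < n; i++) w[i] = 1.0;

    int status = solve_linear(a, w, n);
    if (status == 0) {
        double num = 0.0, den = 0.0;
        for (int i = 0; i < n; i++) { num += w[i] * y[i]; den += w[i]; }
        if (den == 0.0) status = -1; else *ybar = num / den;
    }
    free(a);
    free(w);
    return status;
}
```

When the matrix is a multiple of the identity, all weights are equal and the result reduces to the simple arithmetic mean, which is consistent with the arithmetic mean being a workable approximation.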