
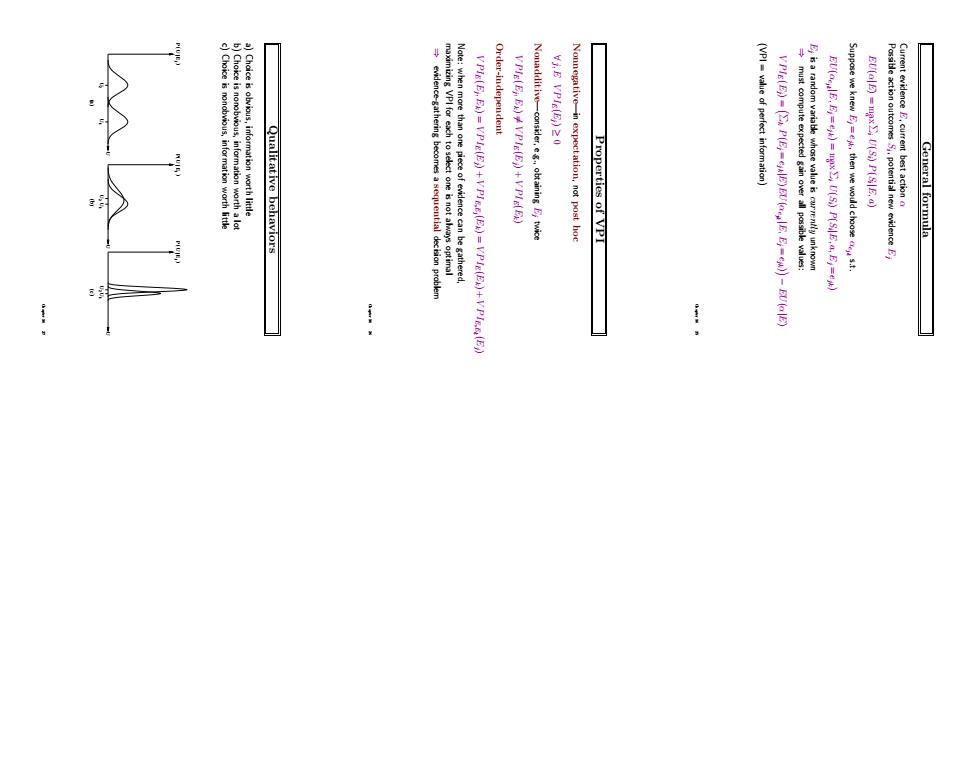

**General formula**

Current evidence $E$, current best action $\alpha$.
Possible action outcomes $S_i$, potential new evidence $E_j$.

$$EU(\alpha \mid E) = \max_a \sum_i U(S_i)\, P(S_i \mid E, a)$$

Suppose we knew $E_j = e_{jk}$. Then we would choose $\alpha_{e_{jk}}$ such that

$$EU(\alpha_{e_{jk}} \mid E, E_j = e_{jk}) = \max_a \sum_i U(S_i)\, P(S_i \mid E, a, E_j = e_{jk})$$

$E_j$ is a random variable whose value is currently unknown ⇒ we must compute the expected gain over all of its possible values:

$$VPI_E(E_j) = \left( \sum_k P(E_j = e_{jk} \mid E)\, EU(\alpha_{e_{jk}} \mid E, E_j = e_{jk}) \right) - EU(\alpha \mid E)$$

(VPI = value of perfect information)

*Chapter 16*

**Properties of VPI**

- Nonnegative in expectation, though not post hoc:
  $$\forall\, j, E \quad VPI_E(E_j) \ge 0$$
- Nonadditive. Consider, e.g., obtaining $E_j$ twice: the second observation adds nothing, so $VPI_E(E_j, E_j) = VPI_E(E_j)$ rather than $2\,VPI_E(E_j)$. In general,
  $$VPI_E(E_j, E_k) \ne VPI_E(E_j) + VPI_E(E_k)$$
- Order-independent:
  $$VPI_E(E_j, E_k) = VPI_E(E_j) + VPI_{E,E_j}(E_k) = VPI_E(E_k) + VPI_{E,E_k}(E_j)$$

Note: when more than one piece of evidence can be gathered, choosing each piece greedily by maximizing its individual VPI is not always optimal ⇒ evidence-gathering becomes a sequential decision problem.

**Qualitative behaviors**

- (a) Choice is obvious, information worth little
- (b) Choice is nonobvious, information worth a lot
- (c) Choice is nonobvious, information worth little

*[Figure: panels (a), (b), (c) each plot the distributions $P(U \mid E_j)$ of the utilities $U_1$ and $U_2$ of two actions against $U$.]*
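To make the general formula and the properties concrete, here is a minimal Python sketch. It rests on assumptions that are not in the slides. The outcome $S_i$ is fully determined by the chosen action and a hidden binary state $X$. Evidence variables are binary sensor readings that are conditionally independent given $X$. The helpers `posterior`, `best_eu`, `vpi` and `vpi_after`, along with the "buy"/"pass" utilities and the sensor names `j` and `k`, are all hypothetical and exist only for illustration. `vpi_after` reads $VPI_{E,E_j}(E_k)$ as an expectation over the still-unknown value of $E_j$, which is what makes the order-independence identity hold.

```python
from itertools import product

# Hypothetical toy model (not from the slides): a hidden binary state X,
# two actions whose utility depends on (action, X), and binary sensors
# that are conditionally independent given X.
PRIOR = {1: 0.5, 0: 0.5}                       # P(X | E) for current evidence E
UTILITY = {"buy": {1: 100.0, 0: -60.0},        # U(outcome) indexed by action, X
           "pass": {1: 0.0, 0: 0.0}}
SENSORS = {"j": {1: 0.8, 0: 0.3},              # P(E_j = 1 | X = x)
           "k": {1: 0.7, 0: 0.1}}              # P(E_k = 1 | X = x)


def posterior(belief, sensors, obs):
    """Return (P(X | evidence, obs), P(obs | evidence)) by Bayes' rule."""
    unnorm = {}
    for x, px in belief.items():
        p = px
        for name, value in obs.items():
            p_one = sensors[name][x]
            p *= p_one if value == 1 else 1.0 - p_one
        unnorm[x] = p
    z = sum(unnorm.values())
    return {x: p / z for x, p in unnorm.items()}, z


def best_eu(belief, utility):
    """EU(alpha | E) = max_a sum_x U(a, x) P(x | E)."""
    return max(sum(utility[a][x] * p for x, p in belief.items()) for a in utility)


def vpi(belief, utility, sensors, names):
    """VPI_E(E_names): expected best EU after observing, minus best EU now."""
    # Observing the same variable twice adds nothing, so duplicates are dropped.
    names = list(dict.fromkeys(names))
    expected = 0.0
    for values in product((0, 1), repeat=len(names)):
        post, p_obs = posterior(belief, sensors, dict(zip(names, values)))
        expected += p_obs * best_eu(post, utility)
    return expected - best_eu(belief, utility)


def vpi_after(belief, utility, sensors, first, second):
    """VPI_{E,E_first}(E_second), averaged over the unknown value of E_first."""
    total = 0.0
    for value in (0, 1):
        post, p_value = posterior(belief, sensors, {first: value})
        total += p_value * vpi(post, utility, sensors, [second])
    return total


if __name__ == "__main__":
    v_j = vpi(PRIOR, UTILITY, SENSORS, ["j"])
    v_k = vpi(PRIOR, UTILITY, SENSORS, ["k"])
    v_jk = vpi(PRIOR, UTILITY, SENSORS, ["j", "k"])
    print(f"VPI(j)={v_j:.2f}  VPI(k)={v_k:.2f}  VPI(j,k)={v_jk:.2f}")
    # Order independence: both chain-rule decompositions agree with VPI(j,k).
    print(v_j + vpi_after(PRIOR, UTILITY, SENSORS, "j", "k"))
    print(v_k + vpi_after(PRIOR, UTILITY, SENSORS, "k", "j"))
```

With these made-up numbers, $VPI_E(E_j) = 11$ and $VPI_E(E_k) = 12$, but $VPI_E(E_j, E_k) = 15.9$. That is less than their sum of 23, so the nonadditivity property shows up directly. Both chain-rule decompositions still reproduce 15.9. The "not post hoc" caveat is also visible. After a reading $E_j = 0$, the best expected utility drops from 20 to 0, even though the expected VPI of $E_j$ is positive.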