正在加载图片...

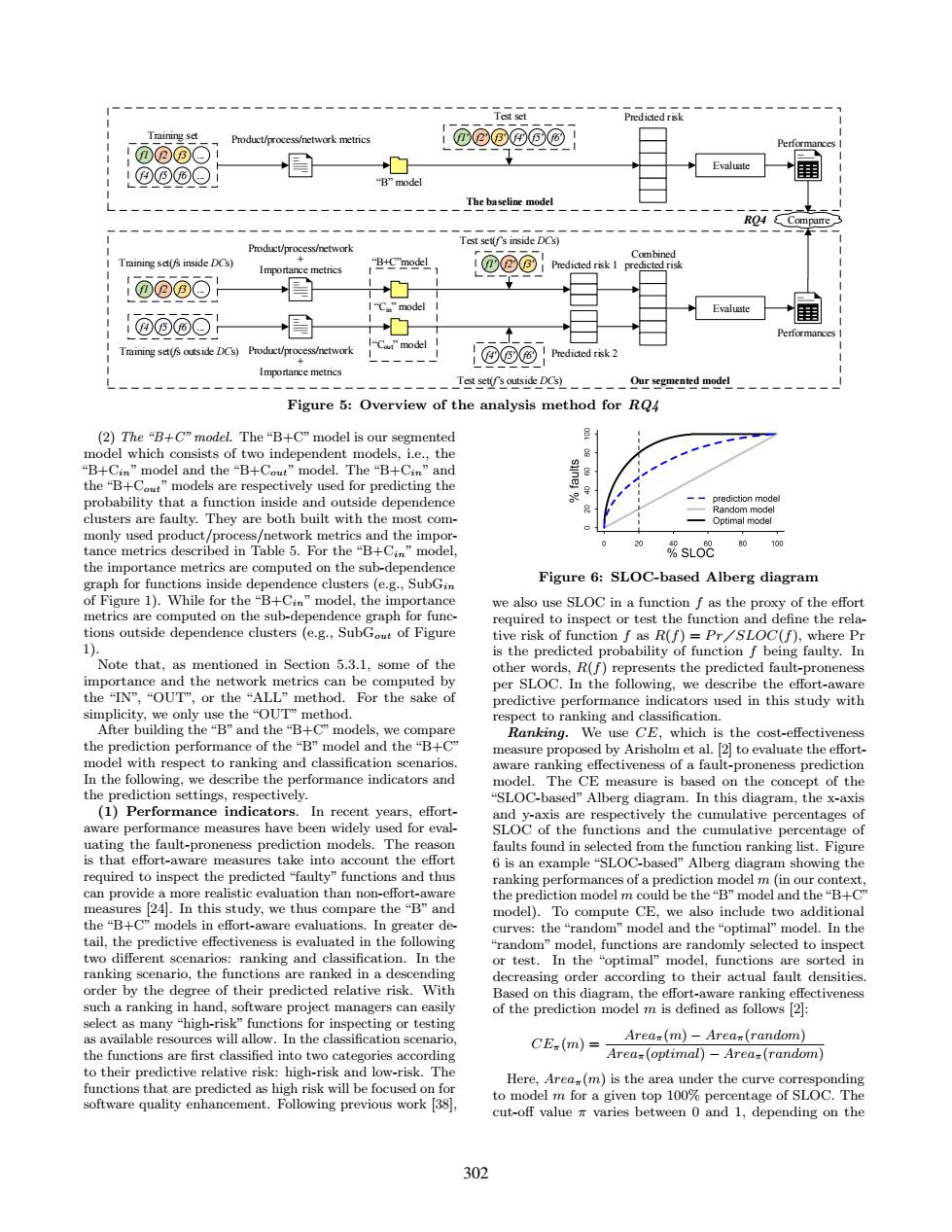

T Predicted risk Training set Product/process/network metrics ⑦@@08而】 Pertormances @@@01 Evaluate 1@⊙@▣1 B”model The baseline model Tet ssinside DCs) Product/process/network Combined Training set(fs inside DCs) Importance metrics "B+C"model L@0@ Predicted risk 1 predicte 1o@05! “C.”model Evaluate !可⑥面可 Performances Training set(fs outside DCs)Product/process/network @⑤⑥Pdidedk2 Importance metrics Test set(fs outside DCs) Our segmented model Figure 5:Overview of the analysis method for RQ4 (2)The "B+C"model.The"B+C"model is our segmented g1 model which consists of two independent models,i.e.,the B+Cin”model and the“B+Cout”nodel..The“B+Cin”and the "B+Cot"models are respectively used for predicting the probability that a function inside and outside dependence o -prediction model Random model clusters are faulty.They are both built with the most com- Optimal model monly used product/process/network metrics and the impor- tance metrics described in Table 5.For the "B+Cin"model. %sL08 the importance metrics are computed on the sub-dependence graph for functions inside dependence clusters (e.g.,SubGin Figure 6:SLOC-based Alberg diagram of Figure 1).While for the "B+Cin"model,the importance we also use SLOC in a function f as the proxy of the effort metrics are computed on the sub-dependence graph for func- required to inspect or test the function and define the rela- tions outside dependence clusters (e.g.,SubGout of Figure tive risk of function f as R(f)=PrSLOC(f),where Pr 1 is the predicted probability of function f being faulty.In Note that,as mentioned in Section 5.3.1,some of the other words,R(f)represents the predicted fault-proneness importance and the network metrics can be computed by per SLOC.In the following,we describe the effort-aware the“TN",“OUT,or the“ALL”nethod.For the sake of predictive performance indicators used in this study with simplicity,we only use the“OUT”nethod. respect to ranking and classification. After building the“B”and the“B+C"models,we compare Ranking.We use CE,which is the cost-effectiveness the prediction performance of the“B”model and the“B+C measure proposed by Arisholm et al.[2]to evaluate the effort- model with respect to ranking and classification scenarios. aware ranking effectiveness of a fault-proneness prediction In the following,we describe the performance indicators and model.The CE measure is based on the concept of the the prediction settings,respectively. "SLOC-based"Alberg diagram.In this diagram,the x-axis (1)Performance indicators.In recent years,effort- and y-axis are respectively the cumulative percentages of aware performance measures have been widely used for eval- SLOC of the functions and the cumulative percentage of uating the fault-proneness prediction models.The reason faults found in selected from the function ranking list.Figure is that effort-aware measures take into account the effort 6 is an example "SLOC-based"Alberg diagram showing the required to inspect the predicted "faulty"functions and thus ranking performances of a prediction model m(in our context can provide a more realistic evaluation than non-effort-aware the prediction model m could be the "B"model and the"B+C" measures 24.In this study,we thus compare the "B"and model).To compute CE,we also include two additional the "B+C"models in effort-aware evaluations.In greater de- curves:the“random”nodel and the“optimal'”nodel.In the tail,the predictive effectiveness is evaluated in the following "random"model,functions are randomly selected to inspect two different scenarios:ranking and classification.In the or test.In the "optimal"model,functions are sorted in ranking scenario,the functions are ranked in a descending decreasing order according to their actual fault densities. order by the degree of their predicted relative risk.With Based on this diagram,the effort-aware ranking effectiveness such a ranking in hand,software project managers can easily of the prediction model m is defined as follows 2: select as many"high-risk"functions for inspecting or testing as available resources will allow.In the classification scenario, CE(m)= Areax(m)-Arear(random) the functions are first classified into two categories according Area=(optimal)-Area(random) to their predictive relative risk:high-risk and low-risk.The functions that are predicted as high risk will be focused on for Here,Area(m)is the area under the curve corresponding software quality enhancement.Following previous work [38], to model m for a given top 100%percentage of SLOC.The cut-off value varies between 0 and 1,depending on the 302Product/process/network + Importance metrics Product/process/network + Importance metrics Cin model Cout model Predicted risk 2 Evaluate Combined predicted risk Performances Test set(f s inside DCs) Training set(fs inside DCs) Training set(fs outside DCs) Test set(f s outside DCs) B+C model Our segmented model RQ4 Comparre Predicted risk 1 Product/process/network metrics Predicted risk Evaluate Test set Training set B model Performances The baseline model f1' f2' f3' f4' f5' f6' f1 f2 f3 ... f4 f5 f6 ... f1 f2 f3 ... f4 f5 f6 ... f1' f2' f3' f4' f5' f6' Figure 5: Overview of the analysis method for RQ4 (2) The “B+C” model. The “B+C” model is our segmented model which consists of two independent models, i.e., the “B+Cin” model and the “B+Cout” model. The “B+Cin” and the “B+Cout” models are respectively used for predicting the probability that a function inside and outside dependence clusters are faulty. They are both built with the most commonly used product/process/network metrics and the importance metrics described in Table 5. For the “B+Cin” model, the importance metrics are computed on the sub-dependence graph for functions inside dependence clusters (e.g., SubGin of Figure 1). While for the “B+Cin” model, the importance metrics are computed on the sub-dependence graph for functions outside dependence clusters (e.g., SubGout of Figure 1). Note that, as mentioned in Section 5.3.1, some of the importance and the network metrics can be computed by the “IN”, “OUT”, or the “ALL” method. For the sake of simplicity, we only use the “OUT” method. After building the “B” and the “B+C” models, we compare the prediction performance of the “B” model and the “B+C” model with respect to ranking and classification scenarios. In the following, we describe the performance indicators and the prediction settings, respectively. (1) Performance indicators. In recent years, effortaware performance measures have been widely used for evaluating the fault-proneness prediction models. The reason is that effort-aware measures take into account the effort required to inspect the predicted “faulty” functions and thus can provide a more realistic evaluation than non-effort-aware measures [24]. In this study, we thus compare the “B” and the “B+C” models in effort-aware evaluations. In greater detail, the predictive effectiveness is evaluated in the following two different scenarios: ranking and classification. In the ranking scenario, the functions are ranked in a descending order by the degree of their predicted relative risk. With such a ranking in hand, software project managers can easily select as many “high-risk” functions for inspecting or testing as available resources will allow. In the classification scenario, the functions are first classified into two categories according to their predictive relative risk: high-risk and low-risk. The functions that are predicted as high risk will be focused on for software quality enhancement. Following previous work [38], Figure 6: SLOC-based Alberg diagram we also use SLOC in a function f as the proxy of the effort required to inspect or test the function and define the relative risk of function f as R(f) = P r

SLOC(f), where Pr is the predicted probability of function f being faulty. In other words, R(f) represents the predicted fault-proneness per SLOC. In the following, we describe the effort-aware predictive performance indicators used in this study with respect to ranking and classification. Ranking. We use CE, which is the cost-effectiveness measure proposed by Arisholm et al. [2] to evaluate the effortaware ranking effectiveness of a fault-proneness prediction model. The CE measure is based on the concept of the “SLOC-based” Alberg diagram. In this diagram, the x-axis and y-axis are respectively the cumulative percentages of SLOC of the functions and the cumulative percentage of faults found in selected from the function ranking list. Figure 6 is an example “SLOC-based” Alberg diagram showing the ranking performances of a prediction model m (in our context, the prediction model m could be the “B”model and the “B+C” model). To compute CE, we also include two additional curves: the “random” model and the “optimal” model. In the “random” model, functions are randomly selected to inspect or test. In the “optimal” model, functions are sorted in decreasing order according to their actual fault densities. Based on this diagram, the effort-aware ranking effectiveness of the prediction model m is defined as follows [2]: CEπ(m) = Areaπ(m) − Areaπ(random) Areaπ(optimal) − Areaπ(random) Here, Areaπ(m) is the area under the curve corresponding to model m for a given top 100% percentage of SLOC. The cut-off value π varies between 0 and 1, depending on the 302