正在加载图片...

R the resource locally shared by Ci and C2,the new rely condition (Expr)E ::=xX n E+E E-E... RVG2 for Cl is then too weak to be useful.The variation of the rule in Sec.2.3 has the same problem. (Bexp)B ::true l falseE=EE E .. (Stmts)C ::=x:=E:=[E]E]:=E I skip 3.Our Approach x:=cons(E,....E)|dispose(E)C:C As in SAGL and RGSep,we also split program states into thread- I if B then C else C|while B do C I C1 ll C2 private and shared parts.Each thread has exclusive access of its atomic(B)(C) own private resources.Rely/guarantee conditions only specify the shared part.But we try to borrow more ideas from Separation Logic Figure 3.The Language to address the remaining compositionality problems. We first introduce the separating conjunction over actions,i.e., binary relations of states specifying state transitions.Rely and guar- (Store)s∈Par一imlu antee conditions are all actions.Similar to the separating conjunc- tion p*p'in Separation Logic,R+R'(or G*G')means the two (LvMap)i∈LVar一fin Int sub-actions R and R'(or G and G')occur over disjoint parts of (Heap)h∈Nat一iaIt states.A formal definition will be given in Sec.5. (State)o E Storex LvMapx Heap We can now extend the frame rule in Separation Logic to sup- (Trans)R,G∈P(State x State) port local rely and guarantee conditions: R.G((p,r)C(q,r)m stable with respect to R' Figure 4.Program States R*R',G*G'(p,r*m)C[(g.r'sm) Following SAGL and RGSep,here we specify private and shared The hiding rule is particularly interesting when C is a parallel resources separately in pre-and post-conditions (but not in our composition (CIl C2).It allows us to derive a more general rule formal development).We use R,G(p.r))C(g.r)]to represent for parallel composition such that the children threads may share the old judgment C sat ((p,r).R,G,(g.r))described in Sec.2.3 resources that appear to the outside world as private resources The frame rule says we can verify C with the local specification of the parent thread.This also solves the last problem described ((p,r),R,G,(g.r)).When C is executed in different contexts with in Sec.2.4.Sharing of dynamically allocated resources usually the extra shared resource specified by m,we know C satisfies follows the pattern of Co:(CI II C2).like the example in Sec.2.4 the "bigger"specification without redoing the proof.The stability New resources are allocated by Co and then shared by CI and C2. check that causes compositionality problems in Rely-Guarantee Since they are unknown at the beginning of Co,we cannot specify reasoning,as explained in Sec.2.1,is no longer an issue here them in the global R and G.Our new rule allows us to leave them because we can prove r*m is stable with respect to R*R'if r unspecified in the R and G outside of Cil C2. is stable with respect to R and m is stable with respect to R'(we actually need some subtle constraints to prove this,which will be Note that the rules shown in this section are used to illustrate our explained in Sec.5). basic ideas in a semi-formal way.The actual ones in the LRG logic are presented in Sec.6 and are in different forms.In particular,we The simpler frame rule for private resources,as shown below,is do not need the pairs(p,r)as pre-and post-conditions. supported in SAGL and RGSep and is sound in our logic too. R,G{p,r)C(4,)} 4. The Language R,G上{(p*m,r)lC(q*m,r)刃 The syntax of the language is defined in Fig.3.We use x and X Since the rely/guarantee conditions specify only the shared re- to represent program variables (PVar)and logical variables (LVar) sources.they do not need to be changed with the extension of the respectively.The expressions E and B are pure.The statement private predicates. x:=[E]([E]:=E')loads values from (stores the value E'into) To allow the hiding of the local sharing of resources by a subset memory at the location E.x:=cons(E1,...,En)allocates n consec- of threads,we introduce a new hiding rule: utive fresh memory cells and initializes them with E.....En.The starting address is picked nondeterministically and assigned to x. R*R',G*G'((p.r*m))C((g.r'*m')][side-conditions omitted] dispose(E)frees the memory cell at the location E. R.GF(p*m,r)C((g*m',r') The atomic block atomic(B)(C)executes C atomically if B This rule says if the resource specified by m and m'is shared locally holds.Otherwise the current process is blocked until B becomes inside C.and transitions over the resource is specified by R'and G. true.As pointed out by Vafeiadis and Parkinson(2007).it can be we can treat it as private and hide R'and G'in the rely/guarantee used to model synchronizations at different levels,such as a system- conditions so that it is invisible from the outside world.Although wide lock or atomicity guaranteed by transactional memory.The this rule only converts part of the shared resource into private use of atomic(true)(C)can also be viewed as annotations of atomic and does not hide its existence in the pre-and post-conditions,it machine instructions for fine-grained concurrency (Parkinson et al. does hide the resource from other threads because rely/guarantee 2007).The other statements in Fig.3 have standard meanings. conditions are the interface between threads. Figure 4 presents the model of program states.The store s is At first glance,this rule seems to allow a thread to arbitrarily a finite partial mapping from program variables to integers;the hide any shared resources so that it can cheat its environment.The logical variable mapping i maps logical variables to integers;and thread,however,cannot abuse this freedom because the inappropri- the heap h maps memory locations (natural numbers)to integers ate hiding will be detected at the point of parallel composition.We The program state or is a triple of (s,i,h).State transitions R and G will explain this in detail in Sec.6. are binary relations of states.R the resource locally shared by C1 and C2, the new rely condition R ∨ G2 for C1 is then too weak to be useful. The variation of the rule in Sec. 2.3 has the same problem. 3. Our Approach As in SAGL and RGSep, we also split program states into threadprivate and shared parts. Each thread has exclusive access of its own private resources. Rely/guarantee conditions only specify the shared part. But we try to borrow more ideas from Separation Logic to address the remaining compositionality problems. We first introduce the separating conjunction over actions, i.e., binary relations of states specifying state transitions. Rely and guarantee conditions are all actions. Similar to the separating conjunction p ∗ p in Separation Logic, R ∗ R (or G ∗ G ) means the two sub-actions R and R (or G and G ) occur over disjoint parts of states. A formal definition will be given in Sec. 5. We can now extend the frame rule in Separation Logic to support local rely and guarantee conditions: R,G

{(p,r)}C{(q,r )} m stable with respect to R ... R∗R ,G ∗G

{(p,r ∗m)}C{(q,r ∗m)} Following SAGL and RGSep, here we specify private and shared resources separately in pre- and post-conditions (but not in our formal development). We use R,G

{(p,r)}C{(q,r )} to represent the old judgment C sat ((p,r),R,G,(q,r )) described in Sec. 2.3. The frame rule says we can verify C with the local specification ((p,r),R,G,(q,r )). When C is executed in different contexts with the extra shared resource specified by m, we know C satisfies the “bigger” specification without redoing the proof. The stability check that causes compositionality problems in Rely-Guarantee reasoning, as explained in Sec. 2.1, is no longer an issue here because we can prove r ∗ m is stable with respect to R ∗ R if r is stable with respect to R and m is stable with respect to R (we actually need some subtle constraints to prove this, which will be explained in Sec. 5). The simpler frame rule for private resources, as shown below, is supported in SAGL and RGSep and is sound in our logic too. R,G

{(p,r)}C{(q,r )} R,G

{(p ∗m,r)}C{(q ∗m,r )} Since the rely/guarantee conditions specify only the shared resources, they do not need to be changed with the extension of the private predicates. To allow the hiding of the local sharing of resources by a subset of threads, we introduce a new hiding rule: R∗R ,G ∗G

{(p,r ∗m)}C{(q,r ∗m )} [side-conditions omitted] R,G

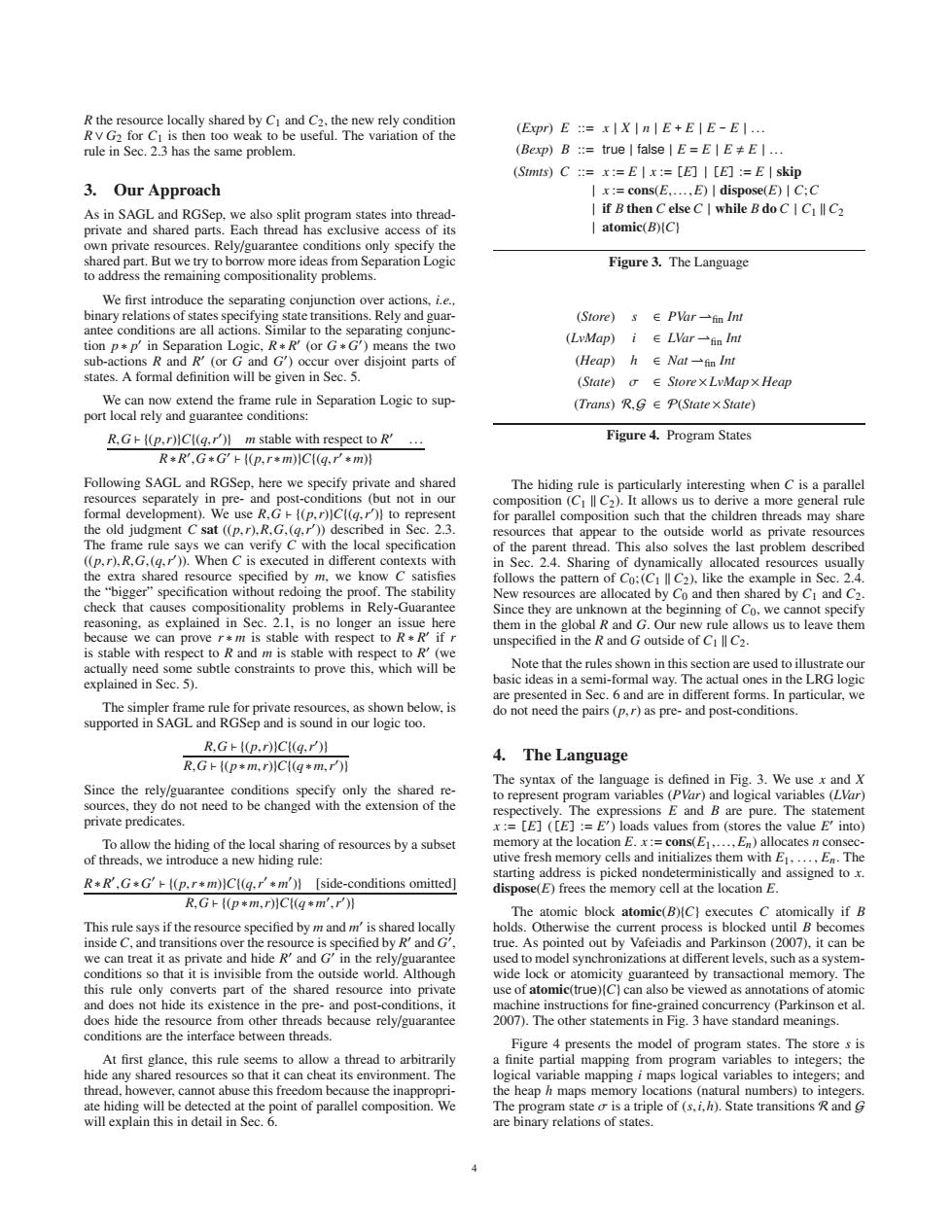

{(p ∗m,r)}C{(q ∗m ,r )} This rule says if the resource specified by m and m is shared locally inside C, and transitions over the resource is specified by R and G , we can treat it as private and hide R and G in the rely/guarantee conditions so that it is invisible from the outside world. Although this rule only converts part of the shared resource into private and does not hide its existence in the pre- and post-conditions, it does hide the resource from other threads because rely/guarantee conditions are the interface between threads. At first glance, this rule seems to allow a thread to arbitrarily hide any shared resources so that it can cheat its environment. The thread, however, cannot abuse this freedom because the inappropriate hiding will be detected at the point of parallel composition. We will explain this in detail in Sec. 6. (Expr) E ::= x | X | n | E + E | E - E | ... (Bexp) B ::= true | false | E = E | E E | ... (Stmts) C ::= x := E | x := [E] | [E] := E | skip | x := cons(E,...,E) | dispose(E) | C;C | if B then C else C | while B do C | C1 C2 | atomic(B){C} Figure 3. The Language (Store) s ∈ PVar fin Int (LvMap) i ∈ LVar fin Int (Heap) h ∈ Nat fin Int (State) σ ∈ Store×LvMap×Heap (Trans) R,G∈P(State×State) Figure 4. Program States The hiding rule is particularly interesting when C is a parallel composition (C1 C2). It allows us to derive a more general rule for parallel composition such that the children threads may share resources that appear to the outside world as private resources of the parent thread. This also solves the last problem described in Sec. 2.4. Sharing of dynamically allocated resources usually follows the pattern of C0; (C1 C2), like the example in Sec. 2.4. New resources are allocated by C0 and then shared by C1 and C2. Since they are unknown at the beginning of C0, we cannot specify them in the global R and G. Our new rule allows us to leave them unspecified in the R and G outside of C1 C2. Note that the rules shown in this section are used to illustrate our basic ideas in a semi-formal way. The actual ones in the LRG logic are presented in Sec. 6 and are in different forms. In particular, we do not need the pairs (p,r) as pre- and post-conditions. 4. The Language The syntax of the language is defined in Fig. 3. We use x and X to represent program variables (PVar) and logical variables (LVar) respectively. The expressions E and B are pure. The statement x := [E] ([E] := E ) loads values from (stores the value E into) memory at the location E. x := cons(E1,...,En) allocates n consecutive fresh memory cells and initializes them with E1,..., En. The starting address is picked nondeterministically and assigned to x. dispose(E) frees the memory cell at the location E. The atomic block atomic(B){C} executes C atomically if B holds. Otherwise the current process is blocked until B becomes true. As pointed out by Vafeiadis and Parkinson (2007), it can be used to model synchronizations at different levels, such as a systemwide lock or atomicity guaranteed by transactional memory. The use of atomic(true){C} can also be viewed as annotations of atomic machine instructions for fine-grained concurrency (Parkinson et al. 2007). The other statements in Fig. 3 have standard meanings. Figure 4 presents the model of program states. The store s is a finite partial mapping from program variables to integers; the logical variable mapping i maps logical variables to integers; and the heap h maps memory locations (natural numbers) to integers. The program state σ is a triple of (s,i,h). State transitions R and G are binary relations of states. 4��������