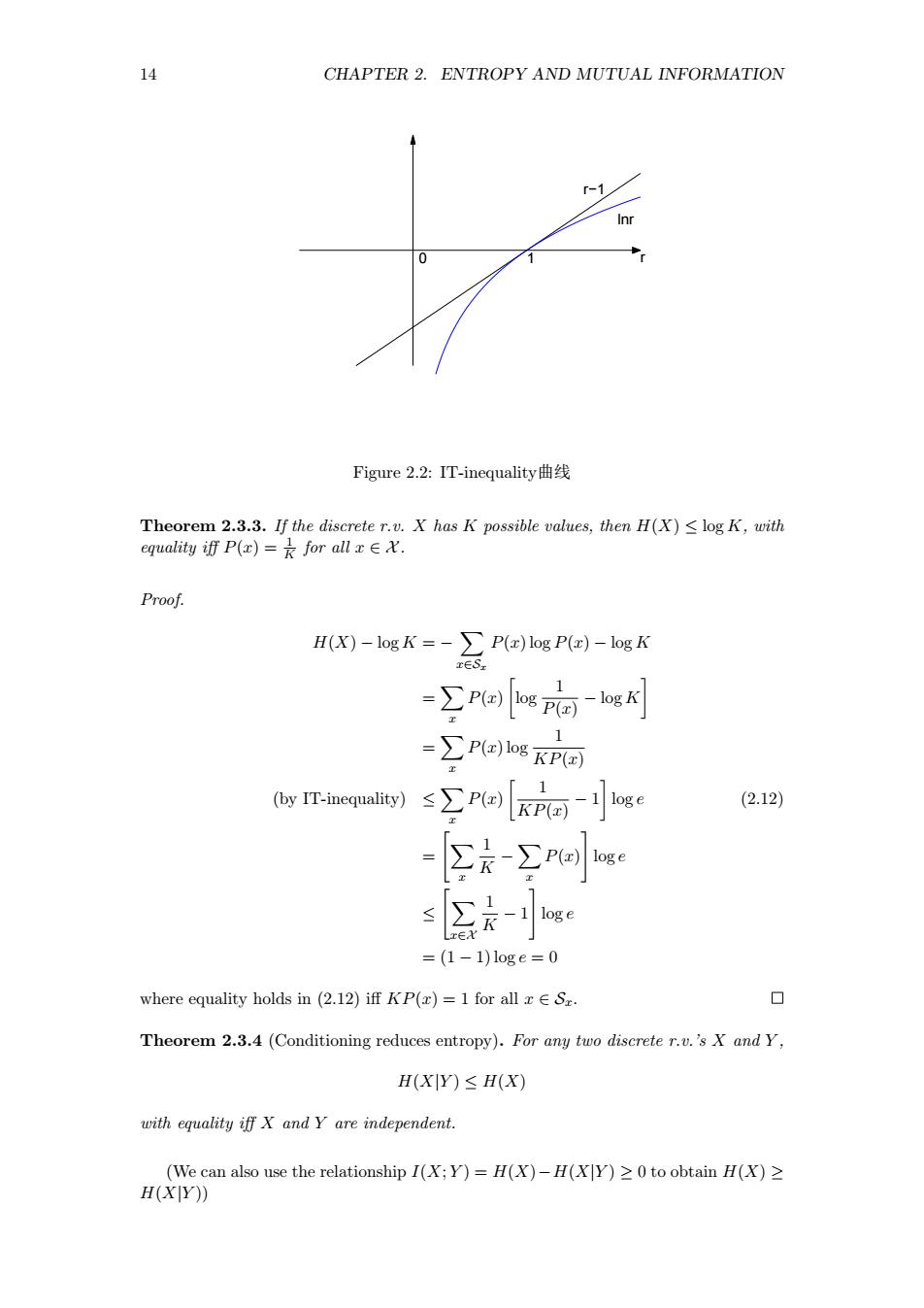

14 CHAPTER 2. ENTROPY AND MUTUAL INFORMATION

Figure 2.2: IT-inequality curves ($r - 1$ and $\ln r$ plotted against $r$)

Theorem 2.3.3. If the discrete r.v. $X$ has $K$ possible values, then $H(X) \le \log K$, with equality iff $P(x) = \frac{1}{K}$ for all $x \in \mathcal{X}$.

Proof.

$$
\begin{aligned}
H(X) - \log K &= -\sum_{x \in S_X} P(x) \log P(x) - \log K \\
&= \sum_{x} P(x) \left[ \log \frac{1}{P(x)} - \log K \right] \\
&= \sum_{x} P(x) \log \frac{1}{K P(x)} \\
&\le \sum_{x} P(x) \left[ \frac{1}{K P(x)} - 1 \right] \log e \qquad \text{(by the IT-inequality)} \qquad (2.12) \\
&= \left[ \sum_{x} \frac{1}{K} - \sum_{x} P(x) \right] \log e \\
&\le \left[ \sum_{x \in \mathcal{X}} \frac{1}{K} - 1 \right] \log e \\
&= (1 - 1) \log e = 0
\end{aligned}
$$

where equality holds in (2.12) iff $K P(x) = 1$ for all $x \in S_X$. ◇

Theorem 2.3.4 (Conditioning reduces entropy). For any two discrete r.v.'s $X$ and $Y$,

$$
H(X|Y) \le H(X)
$$

with equality iff $X$ and $Y$ are independent.

(We can also use the relationship $I(X;Y) = H(X) - H(X|Y) \ge 0$ to obtain $H(X) \ge H(X|Y)$.)
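As a quick numerical sanity check of the two bounds above, the following Python sketch evaluates $H(X) \le \log K$ and $H(X|Y) \le H(X)$ on a few small distributions. The helper names (`entropy`, `conditional_entropy`, `marginal_x`) and the example probabilities are illustrative assumptions, not part of the text; entropies are computed in bits (base-2 logarithms).

```python
import math

def entropy(p):
    """Shannon entropy in bits of a probability vector p (zero terms are skipped)."""
    return -sum(q * math.log2(q) for q in p if q > 0)

def marginal_x(joint):
    """Marginal P(x) from a joint table where joint[x][y] = P(X=x, Y=y)."""
    return [sum(row) for row in joint]

def conditional_entropy(joint):
    """H(X|Y) in bits, computed as -sum_{x,y} P(x,y) log2( P(x,y) / P(y) )."""
    n_y = len(joint[0])
    p_y = [sum(row[y] for row in joint) for y in range(n_y)]
    h = 0.0
    for row in joint:
        for y, p_xy in enumerate(row):
            if p_xy > 0:
                h -= p_xy * math.log2(p_xy / p_y[y])
    return h

# Theorem 2.3.3: H(X) <= log K, with equality for the uniform distribution.
K = 4
uniform = [1 / K] * K            # hypothetical example distribution
skewed = [0.7, 0.1, 0.1, 0.1]    # hypothetical example distribution
h_uniform = entropy(uniform)
h_skewed = entropy(skewed)
print("log2 K      =", math.log2(K))
print("H(uniform)  =", h_uniform)
print("H(skewed)   =", h_skewed)

# Theorem 2.3.4: H(X|Y) <= H(X), with equality when X and Y are independent.
dependent = [[0.4, 0.1],
             [0.1, 0.4]]         # X and Y are correlated
p_x, p_y = [0.3, 0.7], [0.6, 0.4]
independent = [[px * py for py in p_y] for px in p_x]  # product distribution

for name, joint in [("dependent", dependent), ("independent", independent)]:
    h_x = entropy(marginal_x(joint))
    h_x_given_y = conditional_entropy(joint)
    print(f"{name}: H(X) = {h_x:.4f}, H(X|Y) = {h_x_given_y:.4f}")
```

In the dependent case the conditional entropy comes out strictly smaller than $H(X)$, while for the product distribution the two agree up to floating-point rounding, matching the equality condition in Theorem 2.3.4.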