正在加载图片...

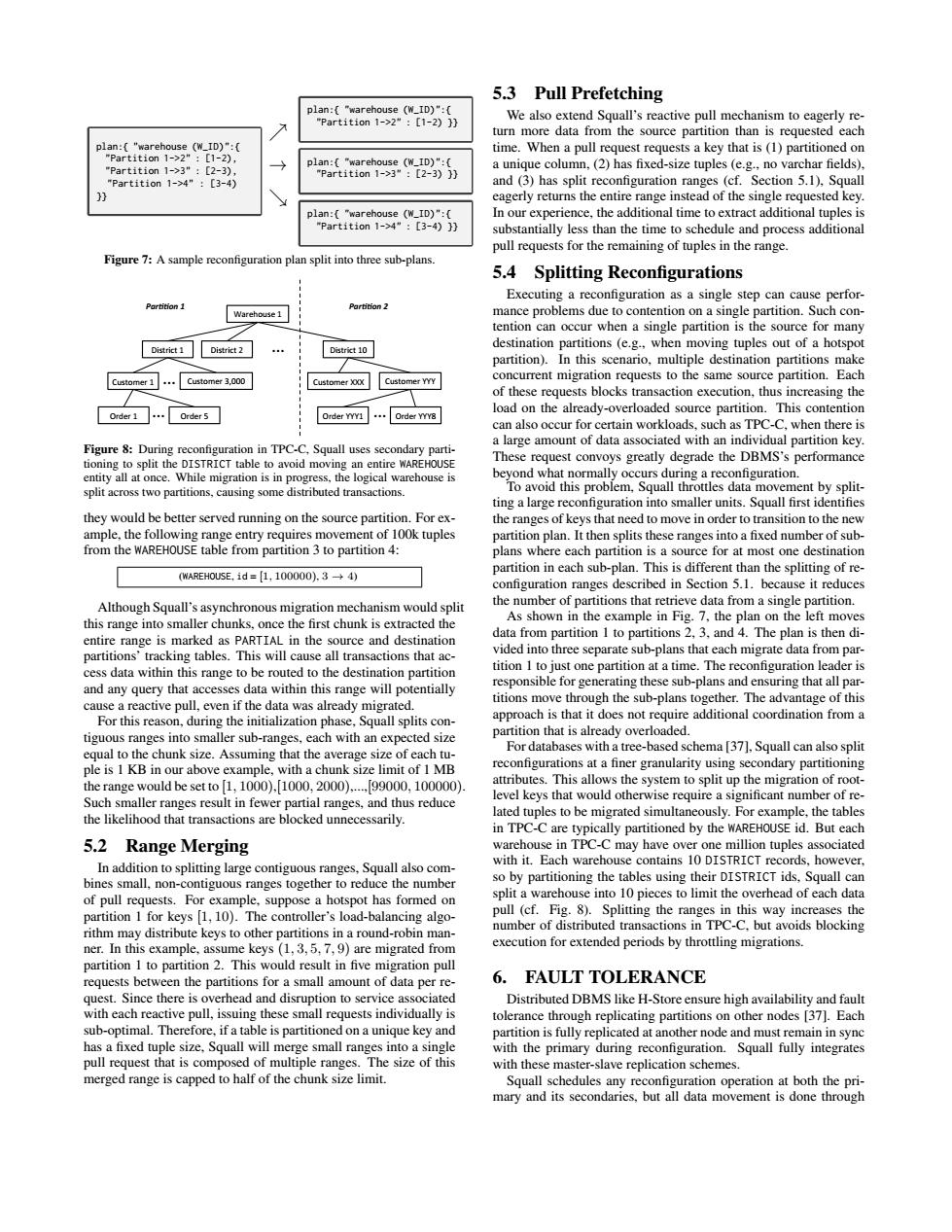

5.3 Pull Prefetching a pull request requests a key that is (parti and ()has split re s (ef.Section 5.D).Squal plan split into three sub-pans. 5.4 Splitting Reconfigurations Executing a reconfiguration as a single step can cause perfor tention an ccur when a single partition is the sour same s on the large amount of al part ting a large r d be better served running on the so rition plan It th 0NA3H0U5E.id=[L.100000).3→4) rtition in each sub-plan.This Although Squall'ssyronous would split entire range is marked as PARTIA in the source and destination sub-plan that ach mig range will pot respo ring that all p within this entially or this rea hema 1371 Sauall can als of cnecomlaeeoL.100100.20j-00.100m0. el keys tha in TPC-C are typ y partitioned by the w ac 5.2 Range Merging with it.Eac ea hotspot has formed execution for extended periods by throttling migrations. 6 FAULT TOLERANCE buted DBMS like H-Stor ailabil n each ests in ugh replic ge small rang merged range is capped to half of the chunk size limit. ion plan:{ "warehouse (W_ID)":{ "Partition 1->2" : [1-2), "Partition 1->3" : [2-3), "Partition 1->4" : [3-4) }} % → & plan:{ "warehouse (W_ID)":{ "Partition 1->2" : [1-2) }} plan:{ "warehouse (W_ID)":{ "Partition 1->3" : [2-3) }} plan:{ "warehouse (W_ID)":{ "Partition 1->4" : [3-4) }} Figure 7: A sample reconfiguration plan split into three sub-plans. District'1' District'10' Warehouse'1' Order'1' Customer'1' Customer'3,000' Order'5' …' …' …' Par$$on'1' Order'YYY1' Customer'XXX' …' Order'YYY8'' District'2' Customer'YYY' Par$$on'2' Figure 8: During reconfiguration in TPC-C, Squall uses secondary partitioning to split the DISTRICT table to avoid moving an entire WAREHOUSE entity all at once. While migration is in progress, the logical warehouse is split across two partitions, causing some distributed transactions. they would be better served running on the source partition. For example, the following range entry requires movement of 100k tuples from the WAREHOUSE table from partition 3 to partition 4: (WAREHOUSE, id = [1, 100000), 3 → 4) Although Squall’s asynchronous migration mechanism would split this range into smaller chunks, once the first chunk is extracted the entire range is marked as PARTIAL in the source and destination partitions’ tracking tables. This will cause all transactions that access data within this range to be routed to the destination partition and any query that accesses data within this range will potentially cause a reactive pull, even if the data was already migrated. For this reason, during the initialization phase, Squall splits contiguous ranges into smaller sub-ranges, each with an expected size equal to the chunk size. Assuming that the average size of each tuple is 1 KB in our above example, with a chunk size limit of 1 MB the range would be set to [1, 1000),[1000, 2000),...,[99000, 100000). Such smaller ranges result in fewer partial ranges, and thus reduce the likelihood that transactions are blocked unnecessarily. 5.2 Range Merging In addition to splitting large contiguous ranges, Squall also combines small, non-contiguous ranges together to reduce the number of pull requests. For example, suppose a hotspot has formed on partition 1 for keys [1, 10). The controller’s load-balancing algorithm may distribute keys to other partitions in a round-robin manner. In this example, assume keys (1, 3, 5, 7, 9) are migrated from partition 1 to partition 2. This would result in five migration pull requests between the partitions for a small amount of data per request. Since there is overhead and disruption to service associated with each reactive pull, issuing these small requests individually is sub-optimal. Therefore, if a table is partitioned on a unique key and has a fixed tuple size, Squall will merge small ranges into a single pull request that is composed of multiple ranges. The size of this merged range is capped to half of the chunk size limit. 5.3 Pull Prefetching We also extend Squall’s reactive pull mechanism to eagerly return more data from the source partition than is requested each time. When a pull request requests a key that is (1) partitioned on a unique column, (2) has fixed-size tuples (e.g., no varchar fields), and (3) has split reconfiguration ranges (cf. Section 5.1), Squall eagerly returns the entire range instead of the single requested key. In our experience, the additional time to extract additional tuples is substantially less than the time to schedule and process additional pull requests for the remaining of tuples in the range. 5.4 Splitting Reconfigurations Executing a reconfiguration as a single step can cause performance problems due to contention on a single partition. Such contention can occur when a single partition is the source for many destination partitions (e.g., when moving tuples out of a hotspot partition). In this scenario, multiple destination partitions make concurrent migration requests to the same source partition. Each of these requests blocks transaction execution, thus increasing the load on the already-overloaded source partition. This contention can also occur for certain workloads, such as TPC-C, when there is a large amount of data associated with an individual partition key. These request convoys greatly degrade the DBMS’s performance beyond what normally occurs during a reconfiguration. To avoid this problem, Squall throttles data movement by splitting a large reconfiguration into smaller units. Squall first identifies the ranges of keys that need to move in order to transition to the new partition plan. It then splits these ranges into a fixed number of subplans where each partition is a source for at most one destination partition in each sub-plan. This is different than the splitting of reconfiguration ranges described in Section 5.1. because it reduces the number of partitions that retrieve data from a single partition. As shown in the example in Fig. 7, the plan on the left moves data from partition 1 to partitions 2, 3, and 4. The plan is then divided into three separate sub-plans that each migrate data from partition 1 to just one partition at a time. The reconfiguration leader is responsible for generating these sub-plans and ensuring that all partitions move through the sub-plans together. The advantage of this approach is that it does not require additional coordination from a partition that is already overloaded. For databases with a tree-based schema [37], Squall can also split reconfigurations at a finer granularity using secondary partitioning attributes. This allows the system to split up the migration of rootlevel keys that would otherwise require a significant number of related tuples to be migrated simultaneously. For example, the tables in TPC-C are typically partitioned by the WAREHOUSE id. But each warehouse in TPC-C may have over one million tuples associated with it. Each warehouse contains 10 DISTRICT records, however, so by partitioning the tables using their DISTRICT ids, Squall can split a warehouse into 10 pieces to limit the overhead of each data pull (cf. Fig. 8). Splitting the ranges in this way increases the number of distributed transactions in TPC-C, but avoids blocking execution for extended periods by throttling migrations. 6. FAULT TOLERANCE Distributed DBMS like H-Store ensure high availability and fault tolerance through replicating partitions on other nodes [37]. Each partition is fully replicated at another node and must remain in sync with the primary during reconfiguration. Squall fully integrates with these master-slave replication schemes. Squall schedules any reconfiguration operation at both the primary and its secondaries, but all data movement is done through