
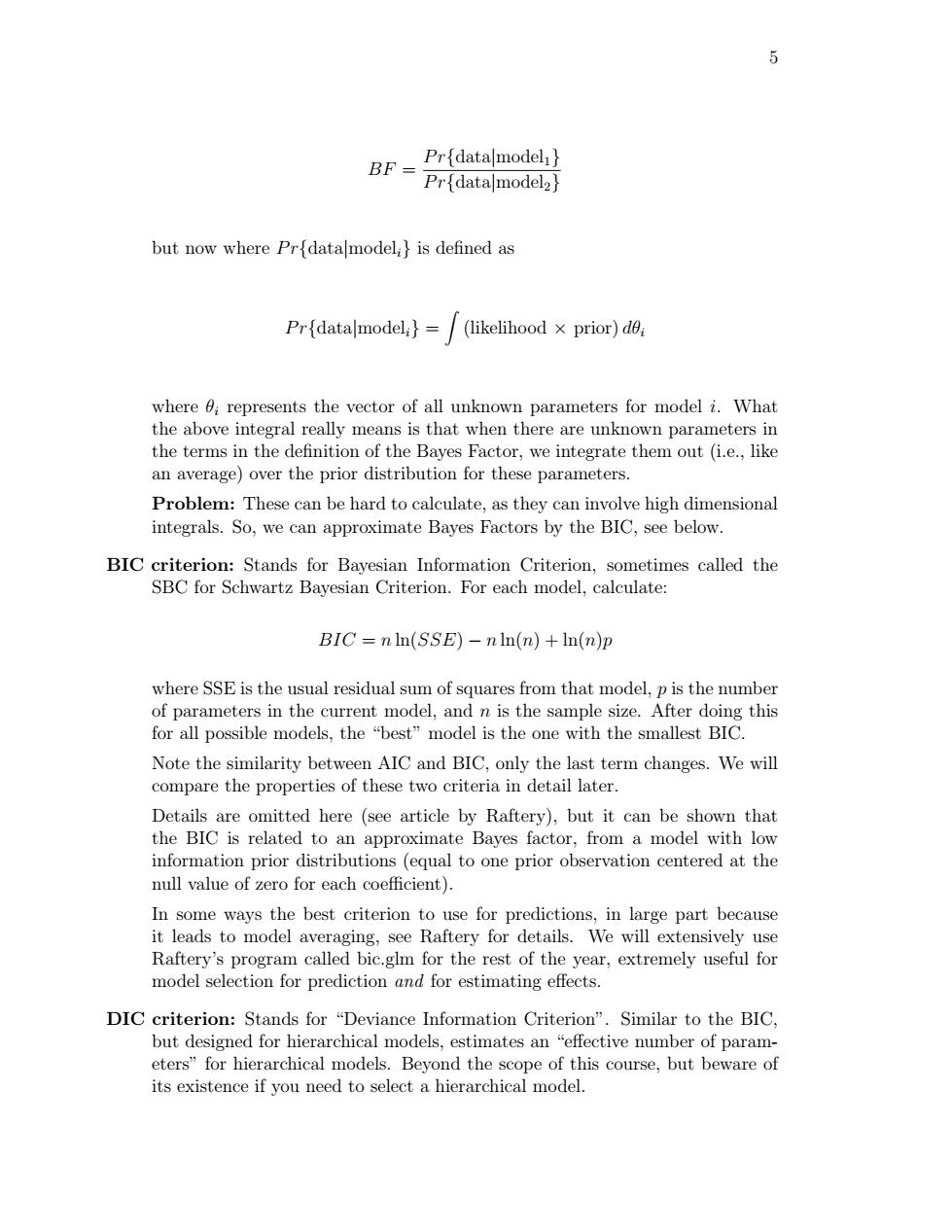

$$\mathrm{BF} = \frac{\Pr\{\text{data} \mid \text{model}_1\}}{\Pr\{\text{data} \mid \text{model}_2\}}$$

but now where $\Pr\{\text{data} \mid \text{model}_i\}$ is defined as

$$\Pr\{\text{data} \mid \text{model}_i\} = \int (\text{likelihood} \times \text{prior}) \, d\theta_i$$

where $\theta_i$ represents the vector of all unknown parameters for model $i$. What the above integral really means is that when there are unknown parameters in the terms that define the Bayes Factor, we integrate them out (i.e., like an average) over the prior distribution for these parameters.

Problem: These can be hard to calculate, as they can involve high-dimensional integrals. So, we can approximate Bayes Factors by the BIC, see below.

BIC criterion: Stands for Bayesian Information Criterion, sometimes called the SBC for Schwarz Bayesian Criterion. For each model, calculate:

$$\mathrm{BIC} = n \ln(\mathrm{SSE}) - n \ln(n) + \ln(n)\, p$$

where SSE is the usual residual sum of squares from that model, $p$ is the number of parameters in the current model, and $n$ is the sample size. After doing this for all possible models, the “best” model is the one with the smallest BIC.

Note the similarity between AIC and BIC: only the last term changes. We will compare the properties of these two criteria in detail later.

Details are omitted here (see article by Raftery), but it can be shown that the BIC is related to an approximate Bayes factor, from a model with low-information prior distributions (equal to one prior observation centered at the null value of zero for each coefficient).

In some ways the BIC is the best criterion to use for predictions, in large part because it leads to model averaging; see Raftery for details. We will extensively use Raftery’s program called bic.glm for the rest of the year. It is extremely useful for model selection for prediction and for estimating effects.

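
As a minimal sketch of the BIC calculation described above, the Python snippet below computes the BIC from SSE, $n$, and $p$ for two candidate models and picks the one with the smallest value. The data are made up for illustration, the helper names (`bic`, `sse_intercept_only`, `sse_simple_regression`) are hypothetical, and counting the intercept as one of the $p$ parameters is an assumption. This is not the bic.glm program mentioned in the notes.

```python
import math


def bic(sse, n, p):
    """BIC = n ln(SSE) - n ln(n) + ln(n) p, the formula given in the notes."""
    return n * math.log(sse) - n * math.log(n) + math.log(n) * p


def sse_intercept_only(y):
    """Residual sum of squares when the only parameter is the mean of y."""
    mean_y = sum(y) / len(y)
    return sum((yi - mean_y) ** 2 for yi in y)


def sse_simple_regression(x, y):
    """Residual sum of squares from a least-squares line y = a + b x."""
    n = len(y)
    mean_x = sum(x) / n
    mean_y = sum(y) / n
    sxx = sum((xi - mean_x) ** 2 for xi in x)
    sxy = sum((xi - mean_x) * (yi - mean_y) for xi, yi in zip(x, y))
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    return sum((yi - intercept - slope * xi) ** 2 for xi, yi in zip(x, y))


# Made-up illustrative data (not from the notes).
x = [1.0, 2.0, 3.0, 4.0, 5.0, 6.0, 7.0, 8.0]
y = [2.1, 3.9, 6.2, 7.8, 10.1, 12.2, 13.8, 16.1]
n = len(y)

# Each candidate model maps to (SSE, p); assumption: the intercept counts in p.
candidates = {
    "intercept only": (sse_intercept_only(y), 1),
    "intercept + slope": (sse_simple_regression(x, y), 2),
}

bic_values = {name: bic(sse, n, p) for name, (sse, p) in candidates.items()}
best_model = min(bic_values, key=bic_values.get)

for name, value in bic_values.items():
    print(f"{name}: BIC = {value:.2f}")
print("smallest BIC:", best_model)
```

Because $y$ rises almost linearly with $x$ in this toy data set, the drop in SSE from adding the slope far outweighs the extra $\ln(n)$ penalty, so the model with the slope has the smaller BIC.
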
DIC criterion: Stands for “Deviance Information Criterion”. It is similar to the BIC but designed for hierarchical models, for which it estimates an “effective number of parameters”. It is beyond the scope of this course, but be aware of its existence if you need to select a hierarchical model.