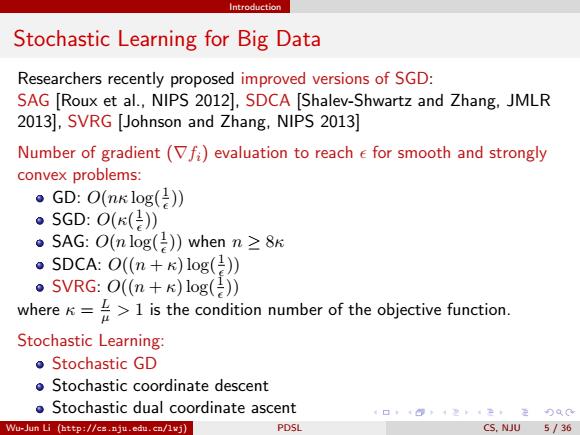

Introduction: Stochastic Learning for Big Data

Researchers recently proposed improved versions of SGD: SAG [Roux et al., NIPS 2012], SDCA [Shalev-Shwartz and Zhang, JMLR 2013], and SVRG [Johnson and Zhang, NIPS 2013].

Number of gradient ($\nabla f_i$) evaluations needed to reach accuracy $\epsilon$ for smooth and strongly convex problems:

- GD: $O(n\kappa \log(1/\epsilon))$
- SGD: $O(\kappa/\epsilon)$
- SAG: $O(n \log(1/\epsilon))$ when $n \ge 8\kappa$
- SDCA: $O((n + \kappa) \log(1/\epsilon))$
- SVRG: $O((n + \kappa) \log(1/\epsilon))$

where $\kappa = L/\mu > 1$ is the condition number of the objective function.

Stochastic learning:

- Stochastic GD
- Stochastic coordinate descent
- Stochastic dual coordinate ascent

Wu-Jun Li (http://cs.nju.edu.cn/lwj) PDSL CS, NJU 5 / 36
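To make the comparison of gradient-evaluation counts concrete, the sketch below plugs illustrative numbers into the bounds listed above. It assumes every constant hidden by the $O(\cdot)$ notation equals 1, which is not true in general (each method has its own constants, and the SGD bound is usually stated for expected error). The values $n = 10^6$, $\kappa = 10^3$, $\epsilon = 10^{-6}$ and the function name `gradient_evals` are hypothetical.

```python
import math


def gradient_evals(n, kappa, eps):
    """Gradient-evaluation counts from the slide's bounds, with every hidden constant set to 1."""
    log_term = math.log(1.0 / eps)
    return {
        "GD": n * kappa * log_term,
        "SGD": kappa / eps,
        "SAG": n * log_term,  # the slide states this bound for n >= 8 * kappa
        "SDCA": (n + kappa) * log_term,
        "SVRG": (n + kappa) * log_term,
    }


# Hypothetical problem size, condition number, and target accuracy.
n, kappa, eps = 10**6, 10**3, 1e-6
assert n >= 8 * kappa  # the SAG condition holds for these values
counts = gradient_evals(n, kappa, eps)
for method, count in sorted(counts.items(), key=lambda kv: kv[1]):
    print(f"{method:<5} ~ {count:.2e} gradient evaluations")
```

With these numbers SAG, SDCA, and SVRG each need about $1.4 \times 10^7$ evaluations, versus $10^9$ for SGD and about $1.4 \times 10^{10}$ for GD. When $n \gg \kappa$ the variance-reduced methods save roughly a factor of $\kappa$ over GD, and their $\log(1/\epsilon)$ dependence is what separates them from SGD's $1/\epsilon$.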
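A minimal SVRG sketch may help show where the $(n + \kappa)$ factor comes from. Each epoch spends $n$ gradient evaluations on a full gradient at a snapshot point, then takes $m$ cheap inner steps (2 evaluations each) along a variance-reduced direction; with $m$ on the order of $\kappa$, an epoch costs $O(n + \kappa)$ and $O(\log(1/\epsilon))$ epochs suffice. Everything concrete here is an assumption for illustration, not taken from the slide: the test problem (ridge-regularized least squares on synthetic data), the step size $\eta = 0.1/L$, the inner-loop length $m = 2n$, and the helper names `grad_i`, `full_grad`, and `svrg`.

```python
import random


# Hypothetical test problem: F(w) = (1/n) * sum_i f_i(w), where
# f_i(w) = 0.5 * (a_i . w - y_i)^2 + 0.5 * lam * ||w||^2 (smooth and strongly convex).
def grad_i(w, a, b, lam):
    """Gradient of one component f_i at w (one "gradient evaluation")."""
    r = sum(aj * wj for aj, wj in zip(a, w)) - b
    return [r * aj + lam * wj for aj, wj in zip(a, w)]


def full_grad(w, A, y, lam):
    """Average of all n component gradients, i.e. the gradient of F at w."""
    n = len(A)
    g = [0.0] * len(w)
    for a, b in zip(A, y):
        g = [gj + gij / n for gj, gij in zip(g, grad_i(w, a, b, lam))]
    return g


def svrg(A, y, lam, eta, m, epochs, seed=0):
    rng = random.Random(seed)
    n, d = len(A), len(A[0])
    w_snap = [0.0] * d
    for _ in range(epochs):
        g_full = full_grad(w_snap, A, y, lam)  # n gradient evaluations per epoch
        w = list(w_snap)
        for _ in range(m):
            i = rng.randrange(n)
            g_w = grad_i(w, A[i], y[i], lam)
            g_s = grad_i(w_snap, A[i], y[i], lam)
            # g_w - g_s + g_full is an unbiased estimate of the full gradient at w,
            # and its variance shrinks as w and w_snap approach the optimum.
            w = [wj - eta * (gw - gs + gf) for wj, gw, gs, gf in zip(w, g_w, g_s, g_full)]
        w_snap = w  # last inner iterate becomes the next snapshot
    return w_snap


# Usage on synthetic, noise-free data (values chosen for illustration only).
data_rng = random.Random(1)
w_true = [1.0, -2.0, 0.5]
A = [[data_rng.uniform(-1.0, 1.0) for _ in range(3)] for _ in range(50)]
y = [sum(aj * tj for aj, tj in zip(a, w_true)) for a in A]
lam = 1e-3
L = max(sum(aj * aj for aj in a) for a in A) + lam  # smoothness constant of every f_i
w_hat = svrg(A, y, lam, eta=0.1 / L, m=2 * len(A), epochs=60)
grad_norm = sum(g * g for g in full_grad(w_hat, A, y, lam)) ** 0.5
print(w_hat, grad_norm)
```

Using the last inner iterate as the next snapshot is a common practical choice; the convergence analysis of Johnson and Zhang instead picks a random inner iterate.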
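SAG reaches a similar rate by a different route: it stores the most recent gradient of every $f_i$ in a table and steps along the average of the stored gradients, so each iteration evaluates only one new gradient. The minimal sketch below assumes the same kind of hypothetical ridge-regression problem; the zero-initialized table, the conservative step size $1/(16L)$, the iteration count, and the helper names `grad_i`, `full_grad`, and `sag` are illustrative assumptions (larger steps such as $1/L$ are often used in practice).

```python
import random


# Hypothetical test problem: f_i(w) = 0.5 * (a_i . w - y_i)^2 + 0.5 * lam * ||w||^2.
def grad_i(w, a, b, lam):
    """Gradient of one component f_i at w."""
    r = sum(aj * wj for aj, wj in zip(a, w)) - b
    return [r * aj + lam * wj for aj, wj in zip(a, w)]


def full_grad(w, A, y, lam):
    """Gradient of F = (1/n) * sum_i f_i, used here only to check convergence."""
    n = len(A)
    g = [0.0] * len(w)
    for a, b in zip(A, y):
        g = [gj + gij / n for gj, gij in zip(g, grad_i(w, a, b, lam))]
    return g


def sag(A, y, lam, step, iters, seed=0):
    rng = random.Random(seed)
    n, d = len(A), len(A[0])
    w = [0.0] * d
    table = [[0.0] * d for _ in range(n)]  # most recent gradient stored for each f_i
    total = [0.0] * d                      # running sum of the stored gradients
    for _ in range(iters):
        i = rng.randrange(n)
        g = grad_i(w, A[i], y[i], lam)     # the only gradient evaluated this iteration
        total = [t - old + new for t, old, new in zip(total, table[i], g)]
        table[i] = g
        # Step along the average of the stored (possibly stale) gradients.
        w = [wj - step * tj / n for wj, tj in zip(w, total)]
    return w


# Usage on synthetic, noise-free data (values chosen for illustration only).
data_rng = random.Random(1)
w_true = [1.0, -2.0, 0.5]
A = [[data_rng.uniform(-1.0, 1.0) for _ in range(3)] for _ in range(50)]
y = [sum(aj * tj for aj, tj in zip(a, w_true)) for a in A]
lam = 1e-3
L = max(sum(aj * aj for aj in a) for a in A) + lam  # smoothness constant of every f_i
w_hat = sag(A, y, lam, step=1.0 / (16 * L), iters=20000)
grad_norm = sum(g * g for g in full_grad(w_hat, A, y, lam)) ** 0.5
print(w_hat, grad_norm)
```

The gradient table costs $O(nd)$ memory, which is the price SAG pays for its cheap iterations; SVRG avoids the table by recomputing a full gradient at each snapshot instead.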