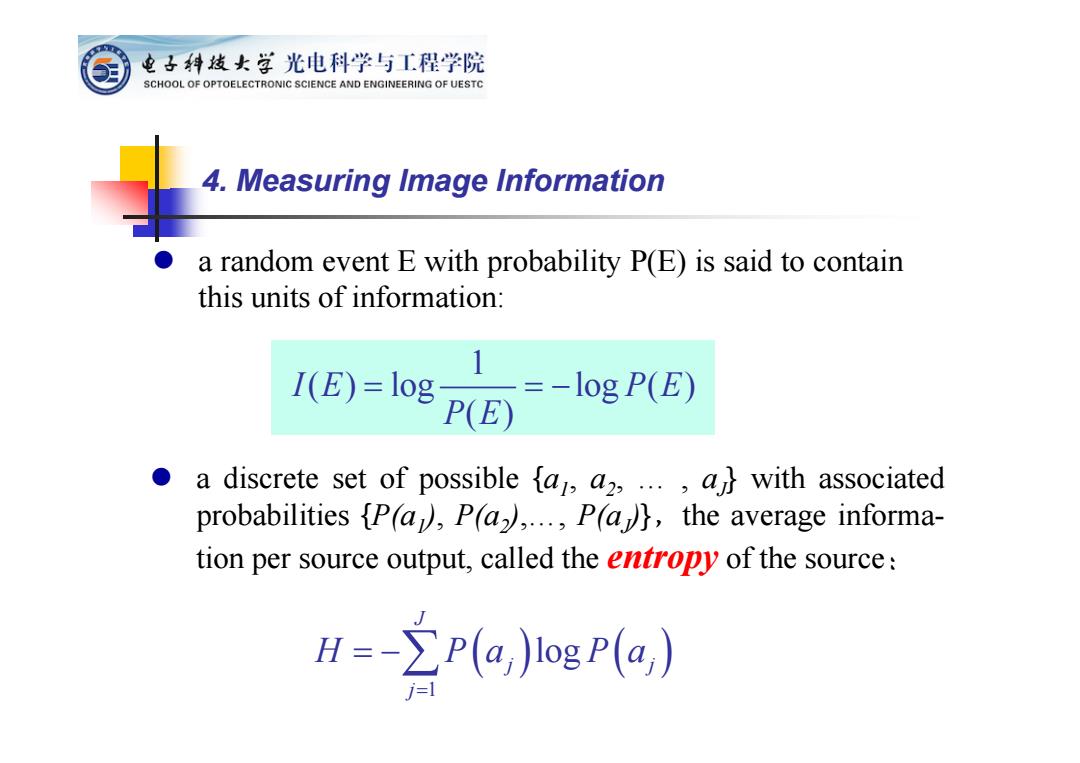

School of Optoelectronic Science and Engineering, University of Electronic Science and Technology of China (UESTC)

4. Measuring Image Information

A random event $E$ with probability $P(E)$ is said to contain the following number of units of information:

$$I(E) = \log \frac{1}{P(E)} = -\log P(E)$$

Given a discrete set of possible events $\{a_1, a_2, \ldots, a_J\}$ with associated probabilities $\{P(a_1), P(a_2), \ldots, P(a_J)\}$, the average information per source output, called the entropy of the source, is:

$$H = -\sum_{j=1}^{J} P(a_j) \log P(a_j)$$
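As a rough illustration of the two definitions, the sketch below computes the self-information of an event and the entropy of a source, then estimates the entropy of an image from the relative frequencies of its intensity values. The function names (`self_information`, `entropy`, `image_entropy`) are hypothetical, the base-2 logarithm (units of bits) is an assumption since the slide does not fix the base, and estimating $P(a_j)$ from a pixel histogram is one common choice rather than something the slide prescribes.

```python
import math
from collections import Counter


def self_information(p, base=2.0):
    """I(E) = log(1 / P(E)) = -log P(E). Base 2 (bits) is an assumed default."""
    if not 0.0 < p <= 1.0:
        raise ValueError("probability must lie in (0, 1]")
    return math.log(1.0 / p, base)


def entropy(probabilities, base=2.0):
    """H = -sum_j P(a_j) log P(a_j), written as sum_j P(a_j) * I(a_j).

    Zero-probability terms are skipped, using the convention 0 * log 0 = 0.
    """
    return sum(p * self_information(p, base) for p in probabilities if p > 0.0)


def image_entropy(pixels, base=2.0):
    """Estimate entropy from a flat sequence of intensity values.

    Assumption: each P(a_j) is approximated by the relative frequency
    of intensity a_j among the given pixels.
    """
    pixels = list(pixels)
    if not pixels:
        raise ValueError("pixels must be non-empty")
    counts = Counter(pixels)
    total = len(pixels)
    return entropy((c / total for c in counts.values()), base)


if __name__ == "__main__":
    # Hypothetical 4-pixel image with two equally likely intensities.
    print(image_entropy([0, 0, 255, 255]))
```

For the hypothetical four-pixel image above, both intensities have estimated probability 1/2, so the estimate is 1 bit per pixel. A constant image has entropy 0, since its single intensity occurs with probability 1.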