正在加载图片...

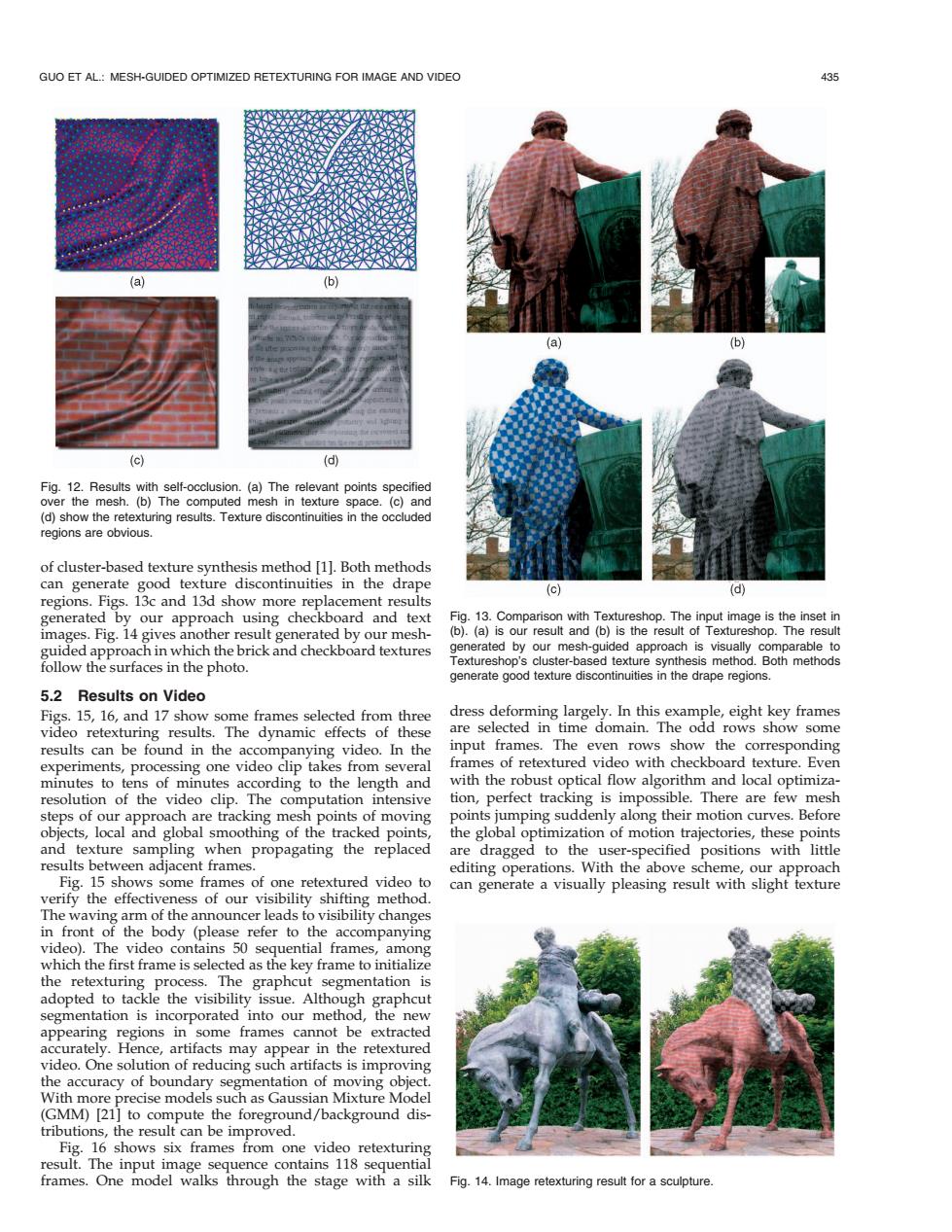

GUO ET AL.:MESH-GUIDED OPTIMIZED RETEXTURING FOR IMAGE AND VIDEO 435 (a) (b) (a) (b (c) (d) Fig.12.Results with self-occlusion.(a)The relevant points specified over the mesh.(b)The computed mesh in texture space.(c)and (d)show the retexturing results.Texture discontinuities in the occluded regions are obvious. of cluster-based texture synthesis method [1].Both methods can generate good texture discontinuities in the drape (c) (d) regions.Figs.13c and 13d show more replacement results generated by our approach using checkboard and text Fig.13.Comparison with Textureshop.The input image is the inset in images.Fig.14 gives another result generated by our mesh- (b).(a)is our result and (b)is the result of Textureshop.The result guided approach in which the brick and checkboard textures generated by our mesh-guided approach is visually comparable to follow the surfaces in the photo. Textureshop's cluster-based texture synthesis method.Both methods generate good texture discontinuities in the drape regions. 5.2 Results on Video Figs.15,16,and 17 show some frames selected from three dress deforming largely.In this example,eight key frames video retexturing results.The dynamic effects of these are selected in time domain.The odd rows show some results can be found in the accompanying video.In the input frames.The even rows show the corresponding experiments,processing one video clip takes from several frames of retextured video with checkboard texture.Even minutes to tens of minutes according to the length and with the robust optical flow algorithm and local optimiza- resolution of the video clip.The computation intensive tion,perfect tracking is impossible.There are few mesh steps of our approach are tracking mesh points of moving points jumping suddenly along their motion curves.Before objects,local and global smoothing of the tracked points, the global optimization of motion trajectories,these points and texture sampling when propagating the replaced are dragged to the user-specified positions with little results between adjacent frames. editing operations.With the above scheme,our approach Fig.15 shows some frames of one retextured video to can generate a visually pleasing result with slight texture verify the effectiveness of our visibility shifting method. The waving arm of the announcer leads to visibility changes in front of the body (please refer to the accompanying video).The video contains 50 sequential frames,among which the first frame is selected as the key frame to initialize the retexturing process.The graphcut segmentation is adopted to tackle the visibility issue.Although graphcut segmentation is incorporated into our method,the new appearing regions in some frames cannot be extracted accurately.Hence,artifacts may appear in the retextured video.One solution of reducing such artifacts is improving the accuracy of boundary segmentation of moving object. With more precise models such as Gaussian Mixture Model (GMM)[21]to compute the foreground/background dis- tributions,the result can be improved. Fig.16 shows six frames from one video retexturing result.The input image sequence contains 118 sequential frames.One model walks through the stage with a silk Fig.14.Image retexturing result for a sculpture.of cluster-based texture synthesis method [1]. Both methods can generate good texture discontinuities in the drape regions. Figs. 13c and 13d show more replacement results generated by our approach using checkboard and text images. Fig. 14 gives another result generated by our meshguided approach in which the brick and checkboard textures follow the surfaces in the photo. 5.2 Results on Video Figs. 15, 16, and 17 show some frames selected from three video retexturing results. The dynamic effects of these results can be found in the accompanying video. In the experiments, processing one video clip takes from several minutes to tens of minutes according to the length and resolution of the video clip. The computation intensive steps of our approach are tracking mesh points of moving objects, local and global smoothing of the tracked points, and texture sampling when propagating the replaced results between adjacent frames. Fig. 15 shows some frames of one retextured video to verify the effectiveness of our visibility shifting method. The waving arm of the announcer leads to visibility changes in front of the body (please refer to the accompanying video). The video contains 50 sequential frames, among which the first frame is selected as the key frame to initialize the retexturing process. The graphcut segmentation is adopted to tackle the visibility issue. Although graphcut segmentation is incorporated into our method, the new appearing regions in some frames cannot be extracted accurately. Hence, artifacts may appear in the retextured video. One solution of reducing such artifacts is improving the accuracy of boundary segmentation of moving object. With more precise models such as Gaussian Mixture Model (GMM) [21] to compute the foreground/background distributions, the result can be improved. Fig. 16 shows six frames from one video retexturing result. The input image sequence contains 118 sequential frames. One model walks through the stage with a silk dress deforming largely. In this example, eight key frames are selected in time domain. The odd rows show some input frames. The even rows show the corresponding frames of retextured video with checkboard texture. Even with the robust optical flow algorithm and local optimization, perfect tracking is impossible. There are few mesh points jumping suddenly along their motion curves. Before the global optimization of motion trajectories, these points are dragged to the user-specified positions with little editing operations. With the above scheme, our approach can generate a visually pleasing result with slight texture GUO ET AL.: MESH-GUIDED OPTIMIZED RETEXTURING FOR IMAGE AND VIDEO 435 Fig. 12. Results with self-occlusion. (a) The relevant points specified over the mesh. (b) The computed mesh in texture space. (c) and (d) show the retexturing results. Texture discontinuities in the occluded regions are obvious. Fig. 13. Comparison with Textureshop. The input image is the inset in (b). (a) is our result and (b) is the result of Textureshop. The result generated by our mesh-guided approach is visually comparable to Textureshop’s cluster-based texture synthesis method. Both methods generate good texture discontinuities in the drape regions. Fig. 14. Image retexturing result for a sculpture