
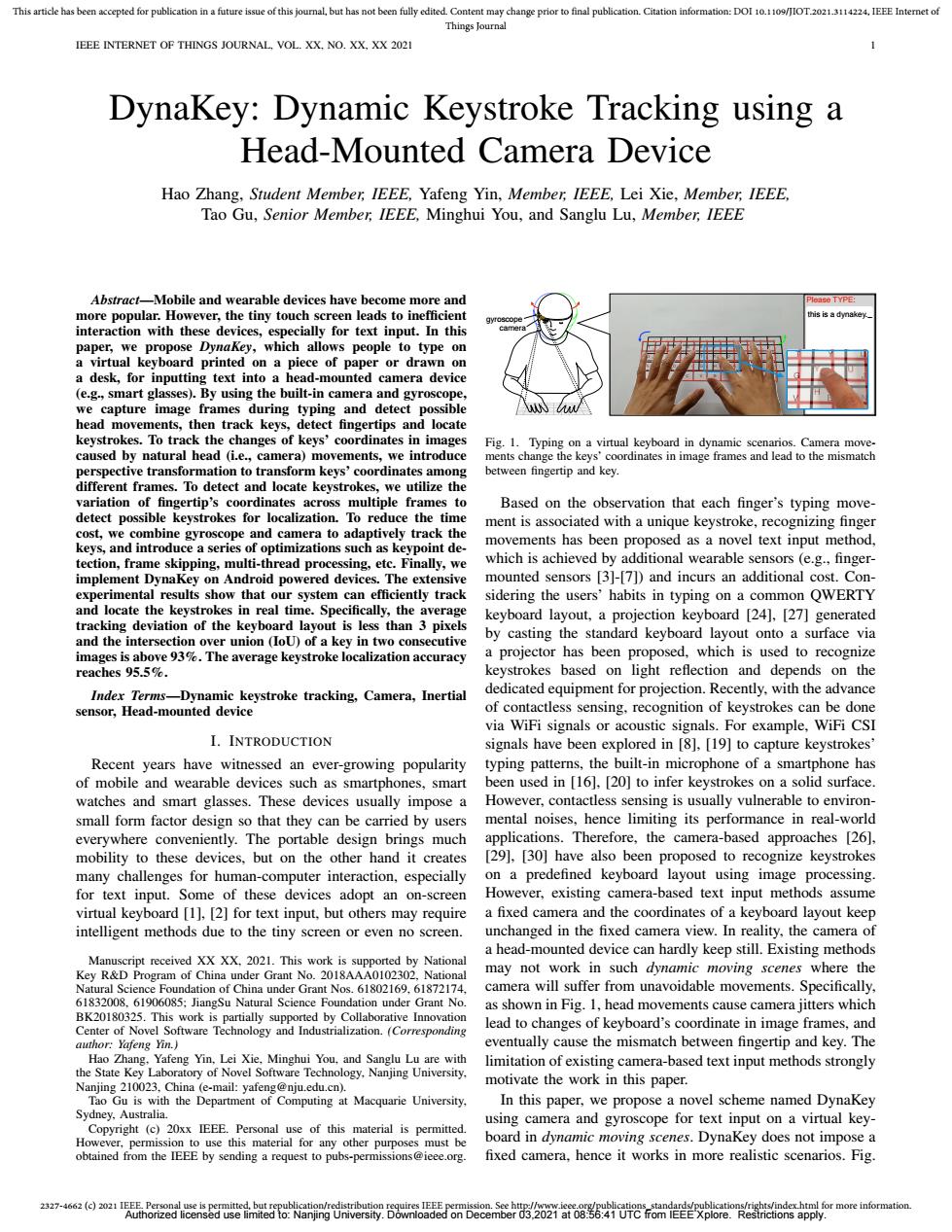

This article has been accepted for publication in a future issue of this journal, but has not been fully edited. Content may change prior to final publication. Citation information: DOI 10.1109/JIOT.2021.3114224, IEEE Internet of Things Journal

2327-4662 (c) 2021 IEEE. Personal use is permitted, but republication/redistribution requires IEEE permission. See http://www.ieee.org/publications_standards/publications/rights/index.html for more information.

IEEE INTERNET OF THINGS JOURNAL, VOL. XX, NO. XX, XX 2021

DynaKey: Dynamic Keystroke Tracking using a Head-Mounted Camera Device

Hao Zhang, Student Member, IEEE, Yafeng Yin, Member, IEEE, Lei Xie, Member, IEEE, Tao Gu, Senior Member, IEEE, Minghui You, and Sanglu Lu, Member, IEEE

Abstract—Mobile and wearable devices have become increasingly popular. However, their tiny touch screens make interaction inefficient, especially for text input. In this paper, we propose DynaKey, which allows people to type on a virtual keyboard printed on a piece of paper or drawn on a desk to input text into a head-mounted camera device (e.g., smart glasses). Using the built-in camera and gyroscope, we capture image frames during typing and detect possible head movements, then track keys, detect fingertips and locate keystrokes. To track the changes in keys’ coordinates in images caused by natural head (i.e., camera) movements, we introduce perspective transformation to map keys’ coordinates between frames. To detect and locate keystrokes, we use the variation of the fingertip’s coordinates across multiple frames to detect candidate keystrokes for localization. To reduce the time cost, we combine the gyroscope and camera to adaptively track the keys, and introduce a series of optimizations such as keypoint detection, frame skipping and multi-thread processing. Finally, we implement DynaKey on Android-powered devices. Extensive experimental results show that our system can efficiently track and locate keystrokes in real time. Specifically, the average tracking deviation of the keyboard layout is less than 3 pixels, the intersection over union (IoU) of a key in two consecutive images is above 93%, and the average keystroke localization accuracy reaches 95.5%.

Index Terms—Dynamic keystroke tracking, Camera, Inertial sensor, Head-mounted device

I. INTRODUCTION

Recent years have witnessed the ever-growing popularity of mobile and wearable devices such as smartphones, smart watches and smart glasses. These devices usually adopt a small form factor so that users can conveniently carry them everywhere. This portability brings much mobility, but it also creates many challenges for human-computer interaction, especially for text input. Some of these devices adopt an on-screen virtual keyboard [1], [2] for text input, but others may require more intelligent input methods because their screens are tiny or absent.

Manuscript received XX XX, 2021. This work is supported by National Key R&D Program of China under Grant No. 2018AAA0102302, National Natural Science Foundation of China under Grant Nos. 61802169, 61872174, 61832008, 61906085; JiangSu Natural Science Foundation under Grant No. BK20180325. This work is partially supported by Collaborative Innovation Center of Novel Software Technology and Industrialization. (Corresponding author: Yafeng Yin.)

Hao Zhang, Yafeng Yin, Lei Xie, Minghui You, and Sanglu Lu are with the State Key Laboratory of Novel Software Technology, Nanjing University, Nanjing 210023, China (e-mail: yafeng@nju.edu.cn).

Tao Gu is with the Department of Computing at Macquarie University, Sydney, Australia.

Copyright (c) 20xx IEEE. Personal use of this material is permitted. However, permission to use this material for any other purposes must be obtained from the IEEE by sending a request to pubs-permissions@ieee.org.

[Figure: on-screen prompt "Please TYPE: this is a dynakey."; labels "camera", "gyroscope"]

Fig. 1. Typing on a virtual keyboard in dynamic scenarios. Camera movements change the keys’ coordinates in image frames and lead to a mismatch between fingertip and key.
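The abstract states that DynaKey uses a perspective transformation to map keys’ coordinates from one frame to another. The sketch below shows that step. It assumes that the four outer corners of the printed keyboard have already been detected in both a reference frame and the current frame. It is not the authors' implementation, and the helper names `solve_linear`, `homography` and `transform` are hypothetical.

From these four correspondences, the code estimates a 3x3 homography H with h33 fixed to 1. It then applies H to any key coordinate through homogeneous coordinates.

```python
"""Sketch: map key coordinates between frames with a 4-point perspective transform.

Assumption (not from the paper): the four outer corners of the printed
keyboard are already detected in both frames. All names are hypothetical.
"""
from typing import List, Sequence, Tuple

Point = Tuple[float, float]
Matrix = List[List[float]]


def solve_linear(a: Matrix, b: List[float]) -> List[float]:
    """Solve a @ x = b with Gaussian elimination and partial pivoting."""
    n = len(b)
    m = [list(row) + [b[i]] for i, row in enumerate(a)]  # augmented matrix
    for col in range(n):
        # Swap in the row with the largest pivot for numerical stability.
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        if abs(m[pivot][col]) < 1e-12:
            raise ValueError("degenerate correspondences (three points collinear?)")
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= factor * m[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):  # back substitution
        s = sum(m[r][c] * x[c] for c in range(r + 1, n))
        x[r] = (m[r][n] - s) / m[r][r]
    return x


def homography(src: Sequence[Point], dst: Sequence[Point]) -> Matrix:
    """Estimate H (h33 fixed to 1) such that dst ~ H @ src for 4 point pairs.

    Each pair (x, y) -> (u, v) yields two linear equations from
    u = (h11 x + h12 y + h13) / (h31 x + h32 y + 1) and the analogue for v.
    """
    if len(src) != 4 or len(dst) != 4:
        raise ValueError("exactly four correspondences are required")
    a: Matrix = []
    b: List[float] = []
    for (x, y), (u, v) in zip(src, dst):
        a.append([x, y, 1.0, 0.0, 0.0, 0.0, -u * x, -u * y])
        b.append(u)
        a.append([0.0, 0.0, 0.0, x, y, 1.0, -v * x, -v * y])
        b.append(v)
    h = solve_linear(a, b)
    return [h[0:3], h[3:6], h[6:8] + [1.0]]


def transform(h: Matrix, p: Point) -> Point:
    """Apply H to a 2D point via homogeneous coordinates, then dehomogenize."""
    x, y = p
    w = h[2][0] * x + h[2][1] * y + h[2][2]
    return ((h[0][0] * x + h[0][1] * y + h[0][2]) / w,
            (h[1][0] * x + h[1][1] * y + h[1][2]) / w)


if __name__ == "__main__":
    # Hypothetical keyboard corners (pixels) in a reference frame, and in a later
    # frame after the head (camera) moved so the image shifted by +12 px, -5 px.
    ref = [(100, 200), (500, 200), (500, 350), (100, 350)]
    cur = [(112, 195), (512, 195), (512, 345), (112, 345)]
    H = homography(ref, cur)
    print(transform(H, (300, 275)))  # approximately (312, 270)
```

A pure translation is the simplest case. The same four-point estimate also covers the rotation and perspective change caused by tilting the head. In practice, OpenCV's `cv2.getPerspectiveTransform` and `cv2.perspectiveTransform` provide these two steps directly.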
Based on the observation that each finger’s typing movement is associated with a unique keystroke, recognizing finger movements has been proposed as a novel text input method. However, this approach relies on additional wearable sensors (e.g., finger-mounted sensors [3]-[7]) and thus incurs additional cost. Considering users’ habit of typing on a common QWERTY keyboard layout, a projection keyboard [24], [27] has also been proposed, generated by casting the standard keyboard layout onto a surface via a projector. It recognizes keystrokes based on light reflection and depends on dedicated projection equipment. More recently, advances in contactless sensing have made it possible to recognize keystrokes via WiFi or acoustic signals. For example, WiFi CSI signals have been explored in [8], [19] to capture keystrokes’ typing patterns, and the built-in microphone of a smartphone has been used in [16], [20] to infer keystrokes on a solid surface. However, contactless sensing is usually vulnerable to environmental noise, which limits its performance in real-world applications. Camera-based approaches [26], [29], [30] have therefore been proposed to recognize keystrokes on a predefined keyboard layout using image processing. However, existing camera-based text input methods assume a fixed camera, so the coordinates of the keyboard layout remain unchanged in the camera view. In reality, the camera of a head-mounted device can hardly stay still. Existing methods may therefore fail in such dynamic scenes, where camera movements are unavoidable. Specifically, as shown in Fig. 1, head movements cause camera jitter. This jitter changes the keyboard’s coordinates in image frames and eventually causes a mismatch between fingertip and key. This limitation of existing camera-based text input methods strongly motivates the work in this paper.
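The fingertip-key mismatch described above can be reproduced with a toy example. The example models keys as axis-aligned boxes in pixels and treats camera jitter as a pure image translation. Real head movements also rotate the view and change its perspective. The layout, key sizes and the helpers `iou`, `key_at` and `shift` are all hypothetical.

The example also computes the intersection over union (IoU) of one key's box across two frames, which is the tracking metric reported in the abstract.

```python
"""Sketch: why camera jitter breaks a fixed key layout, and how IoU measures it.

Hypothetical setup (not from the paper): keys are axis-aligned boxes in
pixels, and camera jitter is modelled as a pure image translation.
"""
from typing import Dict, Optional, Tuple

Box = Tuple[float, float, float, float]  # (x_min, y_min, x_max, y_max)
Layout = Dict[str, Box]


def iou(a: Box, b: Box) -> float:
    """Intersection over union of two axis-aligned boxes."""
    ix = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    iy = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = ix * iy
    union = ((a[2] - a[0]) * (a[3] - a[1])
             + (b[2] - b[0]) * (b[3] - b[1]) - inter)
    return inter / union if union > 0 else 0.0


def key_at(layout: Layout, tip: Tuple[float, float]) -> Optional[str]:
    """Return the key whose box contains the fingertip, or None if no key is hit."""
    x, y = tip
    for name, (x0, y0, x1, y1) in layout.items():
        if x0 <= x < x1 and y0 <= y < y1:
            return name
    return None


def shift(layout: Layout, dx: float, dy: float) -> Layout:
    """Translate every key box by (dx, dy) pixels to mimic a small camera jitter."""
    return {k: (x0 + dx, y0 + dy, x1 + dx, y1 + dy)
            for k, (x0, y0, x1, y1) in layout.items()}


if __name__ == "__main__":
    layout = {"Q": (0, 0, 40, 40), "W": (40, 0, 80, 40), "E": (80, 0, 120, 40)}
    moved = shift(layout, 25, 0)  # keyboard now appears 25 px further right
    tip = (85, 20)                # fingertip pressing the center of the moved "W"
    print(key_at(layout, tip))    # E: stale coordinates give the wrong key
    print(key_at(moved, tip))     # W: tracked coordinates give the right key
    print(round(iou(layout["W"], moved["W"]), 3))  # 0.231
```

With these hypothetical 40-pixel keys, a residual offset of only 2 pixels already lowers a key's IoU to about 0.90 (1520/1680). IoU is therefore a strict measure of tracking quality.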
In this paper, we propose a novel scheme named DynaKey, which uses the camera and gyroscope for text input on a virtual keyboard in dynamic moving scenes. DynaKey does not require a fixed camera, so it works in more realistic scenarios. Fig.

Authorized licensed use limited to: Nanjing University. Downloaded on December 03,2021 at 08:56:41 UTC from IEEE Xplore. Restrictions apply