正在加载图片...

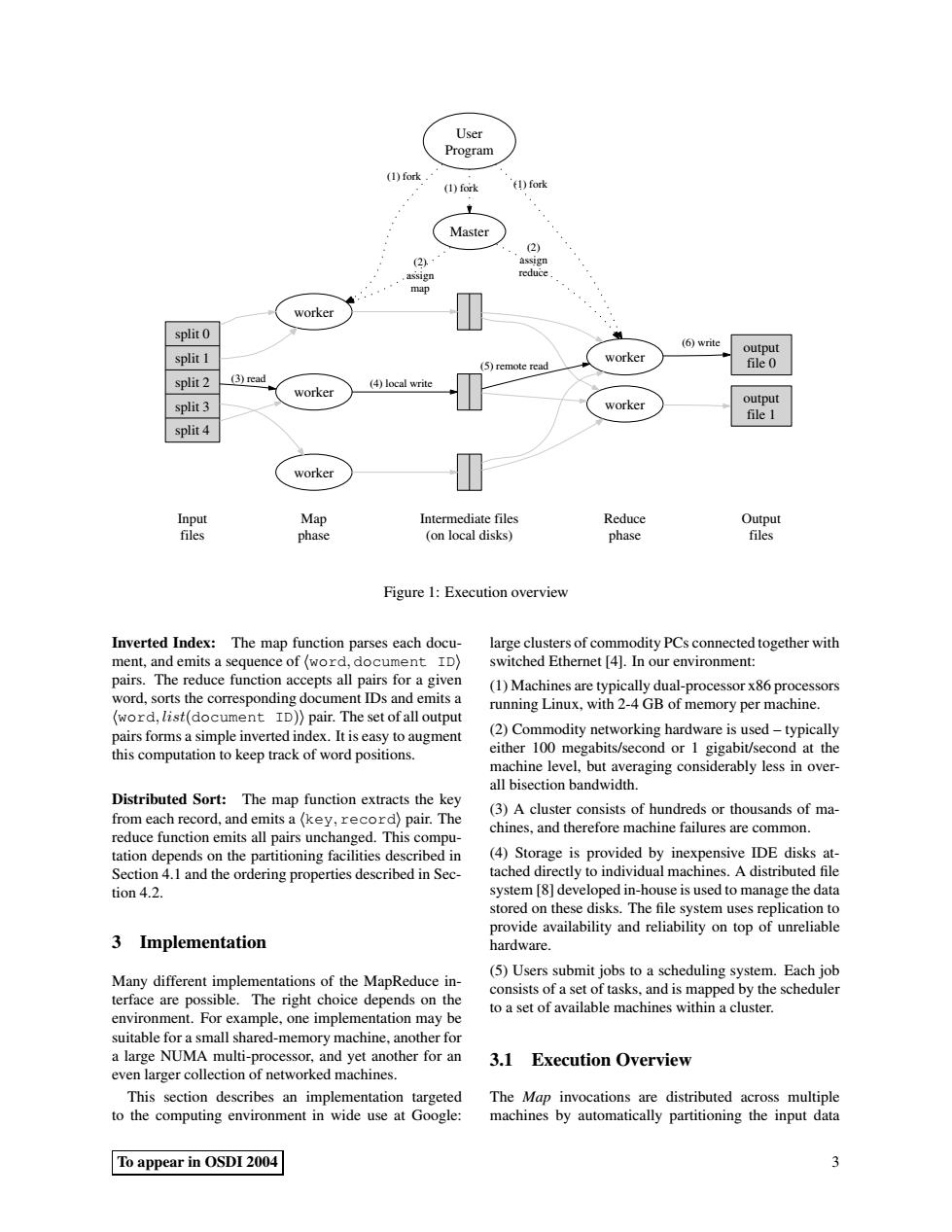

User Program (1)fork (1)fork (1)fork Master 2) (2) assign assign reduce. 、 map worker split 0 (6)write output split 1 worker (5)remote read file 0 split 2 (3)read (4)local write worker split 3 worker output file 1 split 4 worker Input Map Intermediate files Reduce Output files phase (on local disks) phase files Figure 1:Execution overview Inverted Index:The map function parses each docu- large clusters of commodity PCs connected together with ment,and emits a sequence of (word,document ID) switched Ethernet [4].In our environment: pairs.The reduce function accepts all pairs for a given (1)Machines are typically dual-processor x86 processors word,sorts the corresponding document IDs and emits a running Linux,with 2-4 GB of memory per machine. (word,list(document ID))pair.The set of all output pairs forms a simple inverted index.It is easy to augment (2)Commodity networking hardware is used-typically this computation to keep track of word positions. either 100 megabits/second or 1 gigabit/second at the machine level,but averaging considerably less in over- all bisection bandwidth. Distributed Sort:The map function extracts the key (3)A cluster consists of hundreds or thousands of ma- from each record,and emits a(key,record)pair.The chines.and therefore machine failures are common. reduce function emits all pairs unchanged.This compu- tation depends on the partitioning facilities described in (4)Storage is provided by inexpensive IDE disks at- Section 4.1 and the ordering properties described in Sec- tached directly to individual machines.A distributed file tion 4.2 system [8]developed in-house is used to manage the data stored on these disks.The file system uses replication to provide availability and reliability on top of unreliable 3 Implementation hardware. (5)Users submit jobs to a scheduling system.Each job Many different implementations of the MapReduce in- terface are possible.The right choice depends on the consists of a set of tasks,and is mapped by the scheduler to a set of available machines within a cluster. environment.For example,one implementation may be suitable for a small shared-memory machine,another for a large NUMA multi-processor,and yet another for an 3.1 Execution Overview even larger collection of networked machines This section describes an implementation targeted The Map invocations are distributed across multiple to the computing environment in wide use at Google: machines by automatically partitioning the input data To appear in OSDI 2004 3User Program Master (1) fork worker (1) fork worker (1) fork (2) assign map (2) assign reduce split 0 split 1 split 2 split 3 split 4 output file 0 (6) write worker (3) read worker (4) local write Map phase Intermediate files (on local disks) worker output file 1 Input files (5) remote read Reduce phase Output files Figure 1: Execution overview Inverted Index: The map function parses each document, and emits a sequence of hword, document IDi pairs. The reduce function accepts all pairs for a given word, sorts the corresponding document IDs and emits a hword, list(document ID)i pair. The set of all output pairs forms a simple inverted index. It is easy to augment this computation to keep track of word positions. Distributed Sort: The map function extracts the key from each record, and emits a hkey, recordi pair. The reduce function emits all pairs unchanged. This computation depends on the partitioning facilities described in Section 4.1 and the ordering properties described in Section 4.2. 3 Implementation Many different implementations of the MapReduce interface are possible. The right choice depends on the environment. For example, one implementation may be suitable for a small shared-memory machine, another for a large NUMA multi-processor, and yet another for an even larger collection of networked machines. This section describes an implementation targeted to the computing environment in wide use at Google: large clusters of commodity PCs connected together with switched Ethernet [4]. In our environment: (1) Machines are typically dual-processor x86 processors running Linux, with 2-4 GB of memory per machine. (2) Commodity networking hardware is used – typically either 100 megabits/second or 1 gigabit/second at the machine level, but averaging considerably less in overall bisection bandwidth. (3) A cluster consists of hundreds or thousands of machines, and therefore machine failures are common. (4) Storage is provided by inexpensive IDE disks attached directly to individual machines. A distributed file system [8] developed in-house is used to manage the data stored on these disks. The file system uses replication to provide availability and reliability on top of unreliable hardware. (5) Users submit jobs to a scheduling system. Each job consists of a set of tasks, and is mapped by the scheduler to a set of available machines within a cluster. 3.1 Execution Overview The Map invocations are distributed across multiple machines by automatically partitioning the input data To appear in OSDI 2004 3