
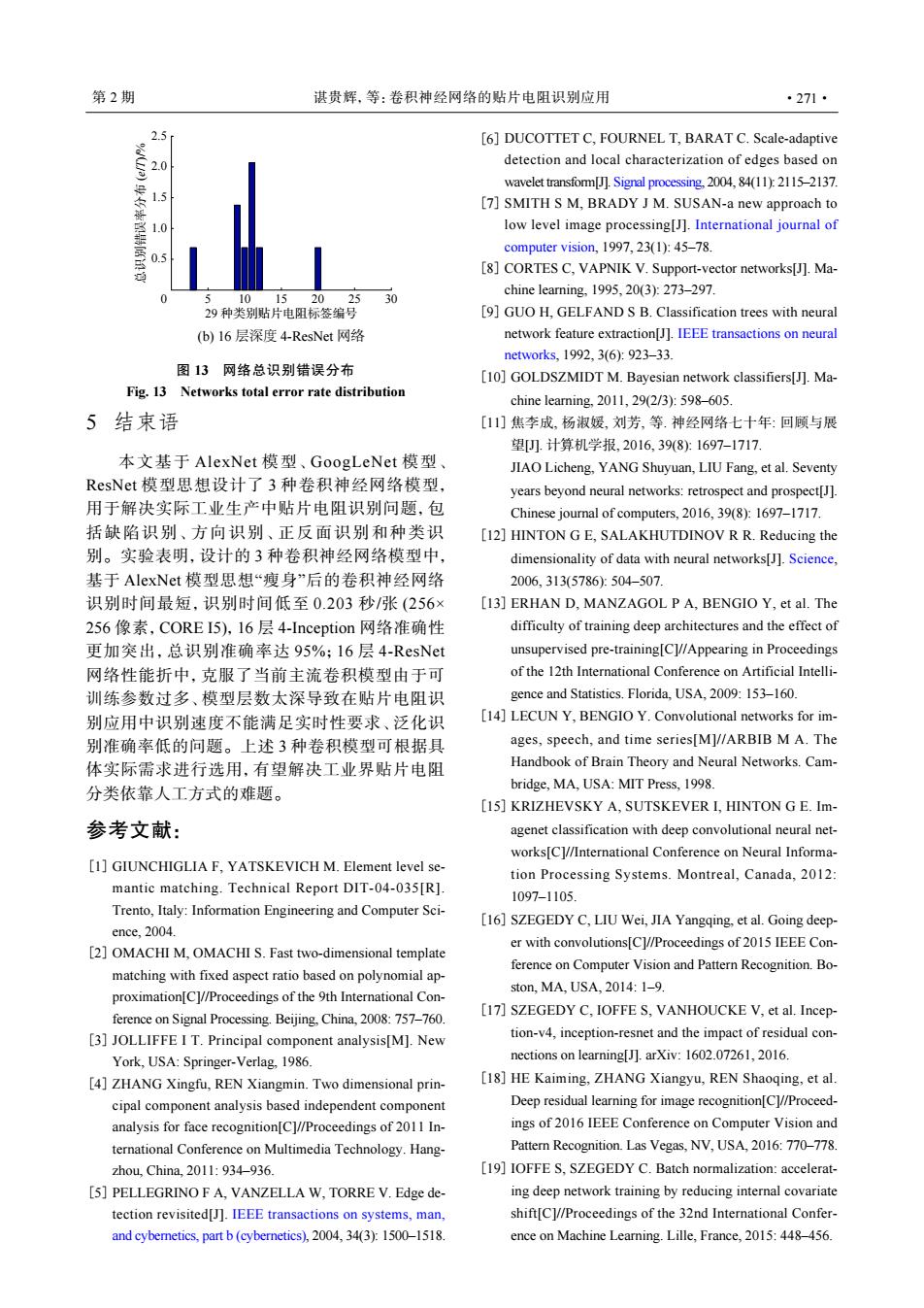

No. 2  CHEN Guihui, et al.: Application of convolutional neural networks to chip resistor recognition  ·271·

5 Conclusion

This paper designs three convolutional neural network (CNN) models based on the ideas behind the AlexNet, GoogLeNet, and ResNet models. The models address chip resistor recognition in real industrial production and cover four tasks: defect recognition, orientation recognition, front/back side recognition, and type recognition. Experiments show the following results for the three models. The "slimmed-down" CNN derived from AlexNet is the fastest, taking as little as 0.203 s per image (256 × 256 pixels, Core i5 CPU). The 16-layer 4-Inception network is the most accurate, with an overall recognition accuracy of 95%. The 16-layer 4-ResNet network offers a compromise between speed and accuracy. Current mainstream convolutional models have too many trainable parameters and too many layers. As a result, they are too slow to meet real-time requirements in chip resistor recognition and generalize poorly. The three proposed models overcome these problems. Any of the three can be chosen according to the needs of a specific application, and they are expected to help end the industry's reliance on manual classification of chip resistors.

References:

[1] GIUNCHIGLIA F, YATSKEVICH M. Element level semantic matching. Technical Report DIT-04-035[R]. Trento, Italy: Information Engineering and Computer Science, 2004.
[2] OMACHI M, OMACHI S. Fast two-dimensional template matching with fixed aspect ratio based on polynomial approximation[C]//Proceedings of the 9th International Conference on Signal Processing. Beijing, China, 2008: 757–760.
[3] JOLLIFFE I T. Principal component analysis[M]. New York, USA: Springer-Verlag, 1986.
[4] ZHANG Xingfu, REN Xiangmin. Two dimensional principal component analysis based independent component analysis for face recognition[C]//Proceedings of 2011 International Conference on Multimedia Technology. Hangzhou, China, 2011: 934–936.
[5] PELLEGRINO F A, VANZELLA W, TORRE V. Edge detection revisited[J]. IEEE transactions on systems, man, and cybernetics, part b (cybernetics), 2004, 34(3): 1500–1518.
[6] DUCOTTET C, FOURNEL T, BARAT C. Scale-adaptive detection and local characterization of edges based on wavelet transform[J]. Signal processing, 2004, 84(11): 2115–2137.
[7] SMITH S M, BRADY J M. SUSAN-a new approach to low level image processing[J]. International journal of computer vision, 1997, 23(1): 45–78.
[8] CORTES C, VAPNIK V. Support-vector networks[J]. Machine learning, 1995, 20(3): 273–297.
[9] GUO H, GELFAND S B. Classification trees with neural network feature extraction[J]. IEEE transactions on neural networks, 1992, 3(6): 923–933.
[10] GOLDSZMIDT M. Bayesian network classifiers[J]. Machine learning, 2011, 29(2/3): 598–605.
[11] JIAO Licheng, YANG Shuyuan, LIU Fang, et al. Seventy years beyond neural networks: retrospect and prospect[J]. Chinese journal of computers, 2016, 39(8): 1697–1717. (in Chinese)
[12] HINTON G E, SALAKHUTDINOV R R. Reducing the dimensionality of data with neural networks[J]. Science, 2006, 313(5786): 504–507.
[13] ERHAN D, MANZAGOL P A, BENGIO Y, et al. The difficulty of training deep architectures and the effect of unsupervised pre-training[C]//Proceedings of the 12th International Conference on Artificial Intelligence and Statistics. Florida, USA, 2009: 153–160.
[14] LECUN Y, BENGIO Y. Convolutional networks for images, speech, and time series[M]//ARBIB M A. The Handbook of Brain Theory and Neural Networks. Cambridge, MA, USA: MIT Press, 1998.
[15] KRIZHEVSKY A, SUTSKEVER I, HINTON G E. ImageNet classification with deep convolutional neural networks[C]//International Conference on Neural Information Processing Systems. Montreal, Canada, 2012: 1097–1105.
[16] SZEGEDY C, LIU Wei, JIA Yangqing, et al. Going deeper with convolutions[C]//Proceedings of 2015 IEEE Conference on Computer Vision and Pattern Recognition. Boston, MA, USA, 2015: 1–9.
[17] SZEGEDY C, IOFFE S, VANHOUCKE V, et al. Inception-v4, Inception-ResNet and the impact of residual connections on learning[J]. arXiv: 1602.07261, 2016.
[18] HE Kaiming, ZHANG Xiangyu, REN Shaoqing, et al. Deep residual learning for image recognition[C]//Proceedings of 2016 IEEE Conference on Computer Vision and Pattern Recognition. Las Vegas, NV, USA, 2016: 770–778.
[19] IOFFE S, SZEGEDY C. Batch normalization: accelerating deep network training by reducing internal covariate shift[C]//Proceedings of the 32nd International Conference on Machine Learning. Lille, France, 2015: 448–456.

Fig. 13 Total recognition error rate distribution of the networks. Panel (b): 16-layer 4-ResNet network. Horizontal axis: label index of the 29 chip resistor categories. Vertical axis: total recognition error rate, (e/T)/%.
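Fig. 13 reports a total error rate for each chip resistor category, labelled (e/T)/%. The conclusion quotes two further figures: an overall accuracy (95% for the 16-layer 4-Inception network) and a recognition time of 0.203 s per image. The Python sketch below shows one plausible way to compute such numbers from test-set labels. It is not the authors' evaluation code. The extracted text does not define e and T, so the sketch assumes that e is the number of misrecognized test images in a category and T is the total number of test images in that category. The function names `per_class_error_rate`, `overall_accuracy` and `images_per_hour` are hypothetical, as are the toy labels. The hourly figure assumes that images are processed one at a time, without batching.

```python
"""Evaluation arithmetic for Fig. 13 and the conclusion (illustrative sketch).

Assumptions (not stated in the paper): e = misrecognized test images of a
category, T = all test images of that category. Function names are hypothetical.
"""
from collections import Counter


def per_class_error_rate(true_labels, predicted_labels):
    """Return {category: 100 * e / T} for every category present in true_labels."""
    if len(true_labels) != len(predicted_labels):
        raise ValueError("label sequences must have the same length")
    totals = Counter(true_labels)  # T for each category
    # e for each category: samples of that true category that were mislabelled
    errors = Counter(t for t, p in zip(true_labels, predicted_labels) if t != p)
    return {label: 100.0 * errors[label] / totals[label] for label in sorted(totals)}


def overall_accuracy(true_labels, predicted_labels):
    """Percentage of all test images whose predicted category is correct."""
    if not true_labels or len(true_labels) != len(predicted_labels):
        raise ValueError("need two non-empty label sequences of equal length")
    correct = sum(t == p for t, p in zip(true_labels, predicted_labels))
    return 100.0 * correct / len(true_labels)


def images_per_hour(seconds_per_image):
    """Whole images one sequential worker finishes in an hour (no batching assumed)."""
    return int(3600 / seconds_per_image)


# Invented toy labels for three categories, for illustration only.
y_true = [1, 1, 1, 1, 2, 2, 3, 3, 3, 3]
y_pred = [1, 1, 2, 1, 2, 2, 3, 1, 3, 3]
print(per_class_error_rate(y_true, y_pred))  # {1: 25.0, 2: 0.0, 3: 25.0}
print(overall_accuracy(y_true, y_pred))  # 80.0
print(images_per_hour(0.203))  # 17733
```

On the toy labels, categories 1 and 3 each have one error in four images (25%), and the overall accuracy is 80%. At 0.203 s per image, a single sequential worker would handle about 17,700 images per hour. A per-category rate like the one in Fig. 13 shows which of the 29 categories drive the total error, which a single accuracy number hides.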