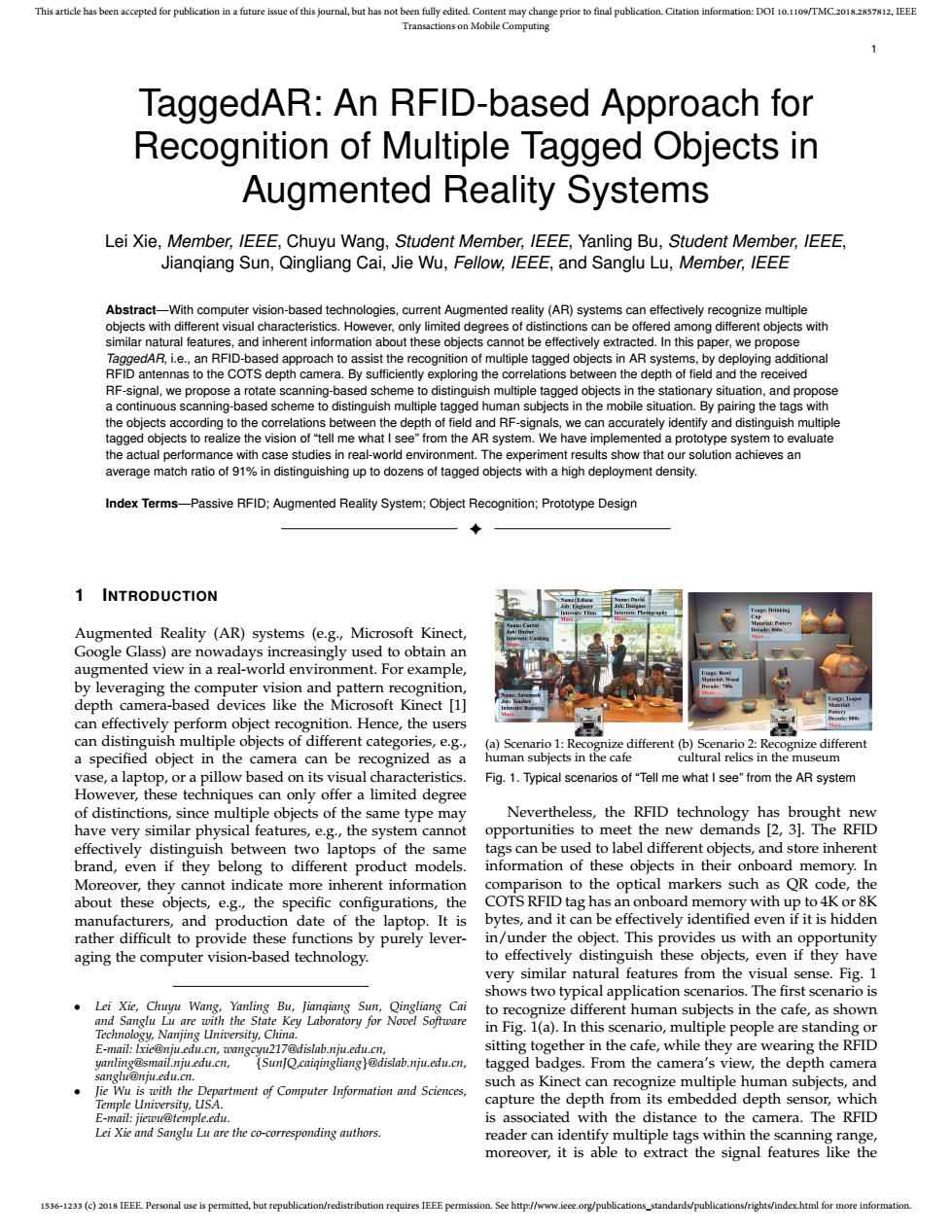

This article has been accepted for publication in a future issue of this journal, but has not been fully edited. Content may change prior to final publication. Citation information: DOI 10.1109/TMC.2018.2857812, IEEE Transactions on Mobile Computing.
1536-1233 (c) 2018 IEEE. Personal use is permitted, but republication/redistribution requires IEEE permission. See http://www.ieee.org/publications_standards/publications/rights/index.html for more information.
TaggedAR: An RFID-based Approach for Recognition of Multiple Tagged Objects in Augmented Reality Systems

Lei Xie, Member, IEEE, Chuyu Wang, Student Member, IEEE, Yanling Bu, Student Member, IEEE, Jianqiang Sun, Qingliang Cai, Jie Wu, Fellow, IEEE, and Sanglu Lu, Member, IEEE

Abstract—With computer vision-based technologies, current Augmented Reality (AR) systems can effectively recognize multiple objects with different visual characteristics. However, they offer only a limited degree of distinction among objects with similar natural features, and they cannot effectively extract inherent information about these objects. In this paper, we propose TaggedAR, an RFID-based approach that assists the recognition of multiple tagged objects in AR systems by attaching additional RFID antennas to a COTS depth camera. By exploring the correlations between the depth of field and the received RF-signal, we propose a rotate scanning-based scheme to distinguish multiple tagged objects in the stationary situation, and a continuous scanning-based scheme to distinguish multiple tagged human subjects in the mobile situation. By pairing the tags with the objects according to the correlations between the depth of field and the RF-signals, we can accurately identify and distinguish multiple tagged objects, realizing the vision of “tell me what I see” from the AR system. We have implemented a prototype system and evaluated its performance with case studies in a real-world environment. The experimental results show that our solution achieves an average match ratio of 91% in distinguishing up to dozens of tagged objects with a high deployment density.
Index Terms—Passive RFID; Augmented Reality System; Object Recognition; Prototype Design

1 INTRODUCTION

Augmented Reality (AR) systems (e.g., Microsoft Kinect, Google Glass) are increasingly used to obtain an augmented view of a real-world environment. For example, by leveraging computer vision and pattern recognition, depth camera-based devices like the Microsoft Kinect [1] can effectively perform object recognition. Hence, users can distinguish multiple objects of different categories, e.g., a specified object in the camera view can be recognized as a vase, a laptop, or a pillow based on its visual characteristics. However, these techniques offer only a limited degree of distinction, since multiple objects of the same type may have very similar physical features; e.g., the system cannot effectively distinguish between two laptops of the same brand, even if they belong to different product models. Moreover, they cannot indicate more inherent information about these objects, e.g., the specific configuration, manufacturer, and production date of a laptop. It is rather difficult to provide these functions by relying purely on computer vision-based technology.

• Lei Xie, Chuyu Wang, Yanling Bu, Jianqiang Sun, Qingliang Cai and Sanglu Lu are with the State Key Laboratory for Novel Software Technology, Nanjing University, China. E-mail: lxie@nju.edu.cn, wangcyu217@dislab.nju.edu.cn, yanling@smail.nju.edu.cn, {SunJQ,caiqingliang}@dislab.nju.edu.cn, sanglu@nju.edu.cn.
• Jie Wu is with the Department of Computer Information and Sciences, Temple University, USA. E-mail: jiewu@temple.edu.
Lei Xie and Sanglu Lu are the co-corresponding authors.

Fig. 1. Typical scenarios of “Tell me what I see” from the AR system. (a) Scenario 1: Recognize different human subjects in the cafe. (b) Scenario 2: Recognize different cultural relics in the museum.

Nevertheless, RFID technology has brought new opportunities to meet these demands [2, 3].
RFID tags can be used to label different objects and to store inherent information about these objects in their onboard memory. In comparison to optical markers such as QR codes, a COTS RFID tag has an onboard memory of up to 4K or 8K bytes, and it can be effectively identified even if it is hidden in or under the object. This gives us an opportunity to effectively distinguish these objects, even if they have very similar natural features from the visual sense. Fig. 1 shows two typical application scenarios. The first scenario is to recognize different human subjects in the cafe, as shown in Fig. 1(a). In this scenario, multiple people are standing or sitting together in the cafe while wearing RFID-tagged badges. From the camera’s view, a depth camera such as the Kinect can recognize multiple human subjects and capture the depth from its embedded depth sensor, which is associated with the distance to the camera. The RFID reader can identify multiple tags within the scanning range; moreover, it is able to extract the signal features like the