正在加载图片...

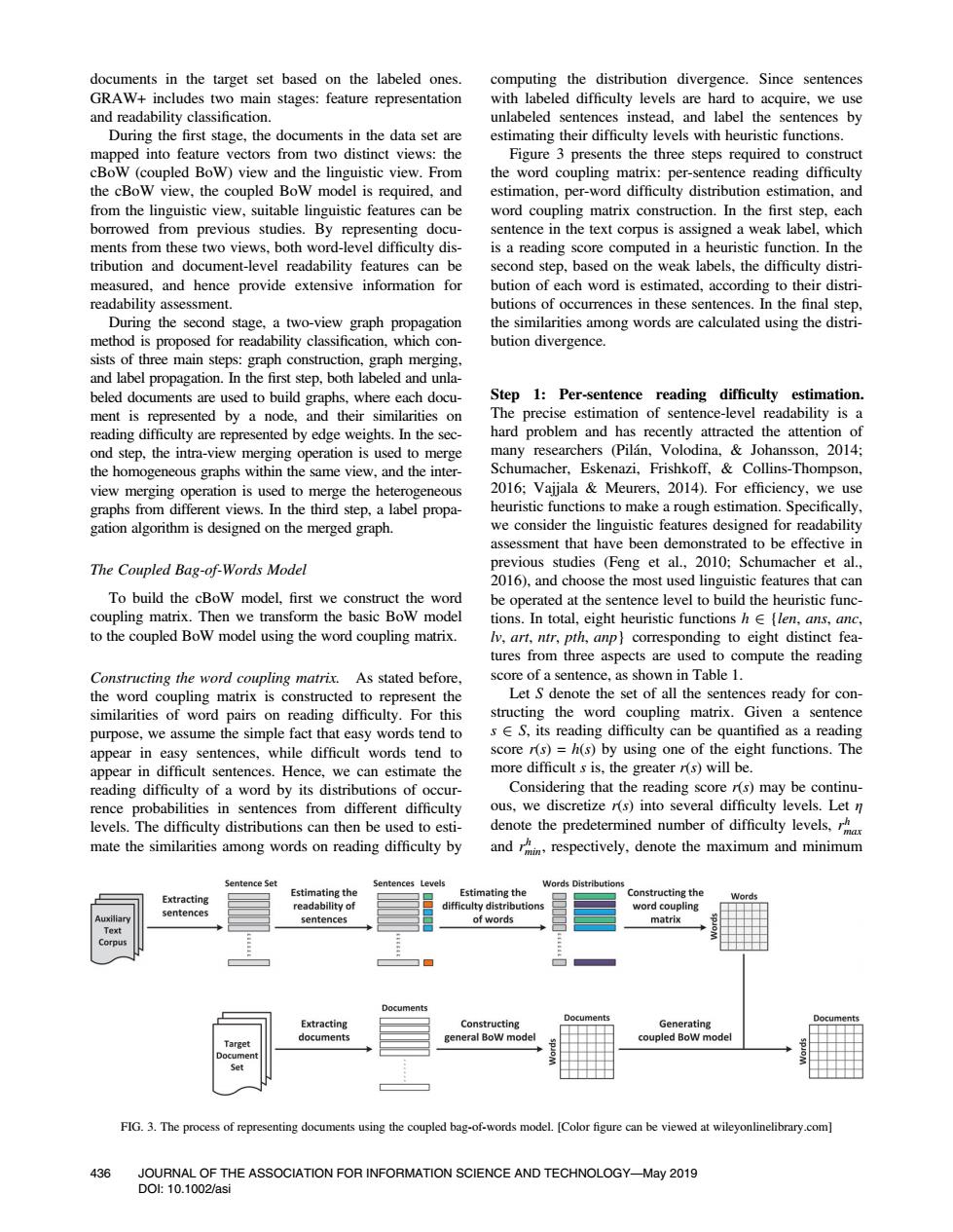

documents in the target set based on the labeled ones. computing the distribution divergence.Since sentences GRAW+includes two main stages:feature representation with labeled difficulty levels are hard to acquire,we use and readability classification. unlabeled sentences instead,and label the sentences by During the first stage,the documents in the data set are estimating their difficulty levels with heuristic functions mapped into feature vectors from two distinct views:the Figure 3 presents the three steps required to construct cBow (coupled Bow)view and the linguistic view.From the word coupling matrix:per-sentence reading difficulty the cBow view,the coupled Bow model is required,and estimation,per-word difficulty distribution estimation,and from the linguistic view,suitable linguistic features can be word coupling matrix construction.In the first step,each borrowed from previous studies.By representing docu- sentence in the text corpus is assigned a weak label,which ments from these two views,both word-level difficulty dis- is a reading score computed in a heuristic function.In the tribution and document-level readability features can be second step,based on the weak labels,the difficulty distri- measured,and hence provide extensive information for bution of each word is estimated,according to their distri- readability assessment. butions of occurrences in these sentences.In the final step, During the second stage,a two-view graph propagation the similarities among words are calculated using the distri- method is proposed for readability classification,which con- bution divergence. sists of three main steps:graph construction,graph merging, and label propagation.In the first step,both labeled and unla- beled documents are used to build graphs,where each docu- Step 1:Per-sentence reading difficulty estimation. ment is represented by a node,and their similarities on The precise estimation of sentence-level readability is a reading difficulty are represented by edge weights.In the sec- hard problem and has recently attracted the attention of ond step,the intra-view merging operation is used to merge many researchers (Pilan,Volodina,Johansson,2014; the homogeneous graphs within the same view,and the inter- Schumacher,Eskenazi,Frishkoff,&Collins-Thompson, view merging operation is used to merge the heterogeneous 2016;Vajjala Meurers,2014).For efficiency,we use graphs from different views.In the third step,a label propa- heuristic functions to make a rough estimation.Specifically, gation algorithm is designed on the merged graph. we consider the linguistic features designed for readability assessment that have been demonstrated to be effective in The Coupled Bag-of-Words Model previous studies (Feng et al.,2010;Schumacher et al., 2016),and choose the most used linguistic features that can To build the cBow model,first we construct the word be operated at the sentence level to build the heuristic func- coupling matrix.Then we transform the basic Bow model tions.In total,eight heuristic functions h E(len,ans,anc, to the coupled BoW model using the word coupling matrix. l,art,ntr,pth,anp}corresponding to eight distinct fea- tures from three aspects are used to compute the reading Constructing the word coupling matrix.As stated before, score of a sentence,as shown in Table 1. the word coupling matrix is constructed to represent the Let S denote the set of all the sentences ready for con- similarities of word pairs on reading difficulty.For this structing the word coupling matrix.Given a sentence purpose,we assume the simple fact that easy words tend to s E S,its reading difficulty can be quantified as a reading appear in easy sentences,while difficult words tend to score r(s)=h(s)by using one of the eight functions.The appear in difficult sentences.Hence,we can estimate the more difficult s is,the greater r(s)will be. reading difficulty of a word by its distributions of occur- Considering that the reading score r(s)may be continu- rence probabilities in sentences from different difficulty ous,we discretize r(s)into several difficulty levels.Let n levels.The difficulty distributions can then be used to esti- denote the predetermined number of difficulty levels, mate the similarities among words on reading difficulty by andrespectively,denote the maximum and minimum Sentence set Sentences Levels Words Distributions Extracting Estimating the Estimating the Constructing the readability of difficulty distributions word coupling sentences sentences of words matrix Documents Documents Extracting Constructing Generating documents general BoW model coupled BoW model FIG.3.The process of representing documents using the coupled bag-of-words model.[Color figure can be viewed at wileyonlinelibrary.com] 436 JOURNAL OF THE ASSOCIATION FOR INFORMATION SCIENCE AND TECHNOLOGY-May 2019 Dol:10.1002/asidocuments in the target set based on the labeled ones. GRAW+ includes two main stages: feature representation and readability classification. During the first stage, the documents in the data set are mapped into feature vectors from two distinct views: the cBoW (coupled BoW) view and the linguistic view. From the cBoW view, the coupled BoW model is required, and from the linguistic view, suitable linguistic features can be borrowed from previous studies. By representing documents from these two views, both word-level difficulty distribution and document-level readability features can be measured, and hence provide extensive information for readability assessment. During the second stage, a two-view graph propagation method is proposed for readability classification, which consists of three main steps: graph construction, graph merging, and label propagation. In the first step, both labeled and unlabeled documents are used to build graphs, where each document is represented by a node, and their similarities on reading difficulty are represented by edge weights. In the second step, the intra-view merging operation is used to merge the homogeneous graphs within the same view, and the interview merging operation is used to merge the heterogeneous graphs from different views. In the third step, a label propagation algorithm is designed on the merged graph. The Coupled Bag-of-Words Model To build the cBoW model, first we construct the word coupling matrix. Then we transform the basic BoW model to the coupled BoW model using the word coupling matrix. Constructing the word coupling matrix. As stated before, the word coupling matrix is constructed to represent the similarities of word pairs on reading difficulty. For this purpose, we assume the simple fact that easy words tend to appear in easy sentences, while difficult words tend to appear in difficult sentences. Hence, we can estimate the reading difficulty of a word by its distributions of occurrence probabilities in sentences from different difficulty levels. The difficulty distributions can then be used to estimate the similarities among words on reading difficulty by computing the distribution divergence. Since sentences with labeled difficulty levels are hard to acquire, we use unlabeled sentences instead, and label the sentences by estimating their difficulty levels with heuristic functions. Figure 3 presents the three steps required to construct the word coupling matrix: per-sentence reading difficulty estimation, per-word difficulty distribution estimation, and word coupling matrix construction. In the first step, each sentence in the text corpus is assigned a weak label, which is a reading score computed in a heuristic function. In the second step, based on the weak labels, the difficulty distribution of each word is estimated, according to their distributions of occurrences in these sentences. In the final step, the similarities among words are calculated using the distribution divergence. Step 1: Per-sentence reading difficulty estimation. The precise estimation of sentence-level readability is a hard problem and has recently attracted the attention of many researchers (Pilán, Volodina, & Johansson, 2014; Schumacher, Eskenazi, Frishkoff, & Collins-Thompson, 2016; Vajjala & Meurers, 2014). For efficiency, we use heuristic functions to make a rough estimation. Specifically, we consider the linguistic features designed for readability assessment that have been demonstrated to be effective in previous studies (Feng et al., 2010; Schumacher et al., 2016), and choose the most used linguistic features that can be operated at the sentence level to build the heuristic functions. In total, eight heuristic functions h 2 {len, ans, anc, lv, art, ntr, pth, anp} corresponding to eight distinct features from three aspects are used to compute the reading score of a sentence, as shown in Table 1. Let S denote the set of all the sentences ready for constructing the word coupling matrix. Given a sentence s 2 S, its reading difficulty can be quantified as a reading score r(s) = h(s) by using one of the eight functions. The more difficult s is, the greater r(s) will be. Considering that the reading score r(s) may be continuous, we discretize r(s) into several difficulty levels. Let η denote the predetermined number of difficulty levels, rh max and rh min, respectively, denote the maximum and minimum FIG. 3. The process of representing documents using the coupled bag-of-words model. [Color figure can be viewed at wileyonlinelibrary.com] 436 JOURNAL OF THE ASSOCIATION FOR INFORMATION SCIENCE AND TECHNOLOGY—May 2019 DOI: 10.1002/asi