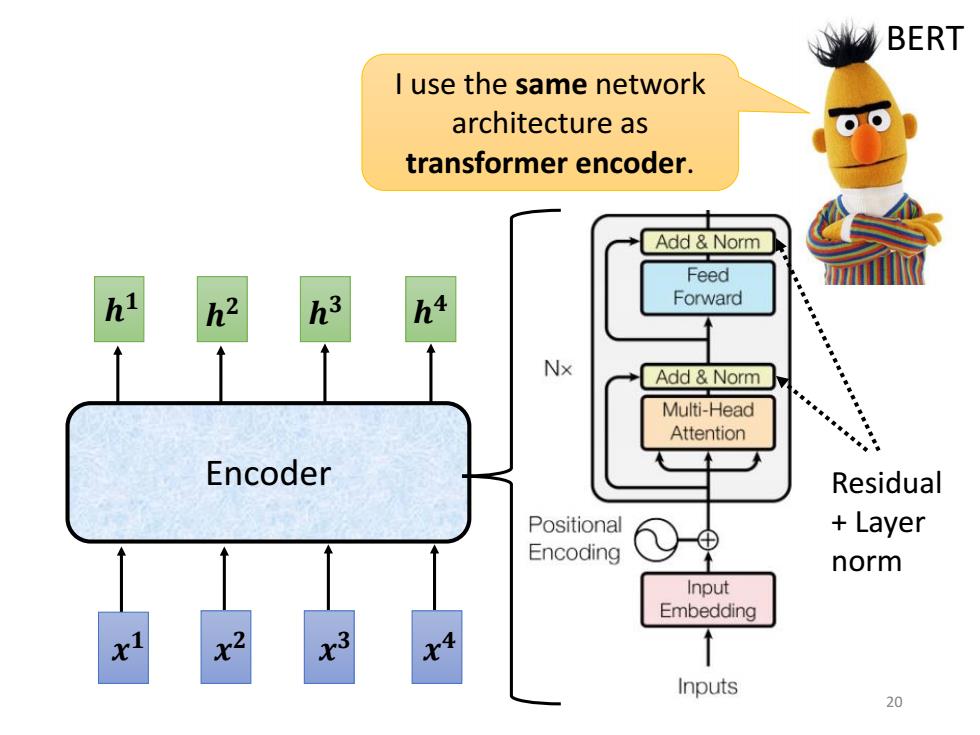

BERT uses the same network architecture as the Transformer encoder.

Figure: the Transformer encoder. Inputs x1, x2, x3, x4 pass through the input embedding and positional encoding, then through N stacked layers. Each layer applies multi-head attention followed by a feed-forward network, with a residual connection and layer normalization (Add & Norm) after each of the two. The encoder outputs one hidden vector per input: h1, h2, h3, h4.
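To make the encoder structure concrete, here is a minimal NumPy sketch of the stack: multi-head attention, then residual plus layer norm, then feed-forward, then residual plus layer norm, repeated N times. It is an illustration under stated assumptions, not BERT's actual implementation: the weights are random, the sizes (`d_model`, `d_ff`, `num_heads`, `num_layers`) are arbitrary toy values, biases and the learnable layer-norm scale and shift are omitted, and the feed-forward activation is ReLU. The helper names are hypothetical.

```python
import numpy as np

def layer_norm(x, eps=1e-5):
    # Normalize each position's feature vector (no learnable scale/shift here).
    mean = x.mean(axis=-1, keepdims=True)
    var = x.var(axis=-1, keepdims=True)
    return (x - mean) / np.sqrt(var + eps)

def softmax(z):
    # Numerically stable softmax over the last axis.
    z = z - z.max(axis=-1, keepdims=True)
    e = np.exp(z)
    return e / e.sum(axis=-1, keepdims=True)

def multi_head_attention(x, wq, wk, wv, wo, num_heads):
    seq_len, d_model = x.shape
    d_head = d_model // num_heads

    def split(t):
        # (seq, d_model) -> (heads, seq, d_head)
        return t.reshape(seq_len, num_heads, d_head).transpose(1, 0, 2)

    q, k, v = split(x @ wq), split(x @ wk), split(x @ wv)
    scores = q @ k.transpose(0, 2, 1) / np.sqrt(d_head)  # (heads, seq, seq)
    context = softmax(scores) @ v                        # (heads, seq, d_head)
    merged = context.transpose(1, 0, 2).reshape(seq_len, d_model)
    return merged @ wo

def encoder_layer(x, p, num_heads):
    # Sub-layer 1: multi-head self-attention, then residual + layer norm.
    attn = multi_head_attention(x, p["wq"], p["wk"], p["wv"], p["wo"], num_heads)
    x = layer_norm(x + attn)
    # Sub-layer 2: position-wise feed-forward, then residual + layer norm.
    ff = np.maximum(0.0, x @ p["w1"]) @ p["w2"]
    return layer_norm(x + ff)

def init_params(rng, d_model, d_ff):
    # Random toy weights; a trained model would load learned values instead.
    shapes = {
        "wq": (d_model, d_model), "wk": (d_model, d_model),
        "wv": (d_model, d_model), "wo": (d_model, d_model),
        "w1": (d_model, d_ff), "w2": (d_ff, d_model),
    }
    return {name: rng.normal(0.0, 0.1, size=shape) for name, shape in shapes.items()}

rng = np.random.default_rng(0)
d_model, d_ff, num_heads, num_layers, seq_len = 8, 32, 2, 2, 4

# Stand-in for "input embedding + positional encoding" of x1..x4.
x = rng.normal(size=(seq_len, d_model))

h = x
for _ in range(num_layers):  # the "N x" repetition in the figure
    h = encoder_layer(h, init_params(rng, d_model, d_ff), num_heads)

print(h.shape)  # one hidden vector per input position: h1..h4
```

Each row of `h` corresponds to one of the hidden vectors h1 to h4 in the figure. Because the last operation in every layer is a layer norm, each output row has approximately zero mean across its features.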