
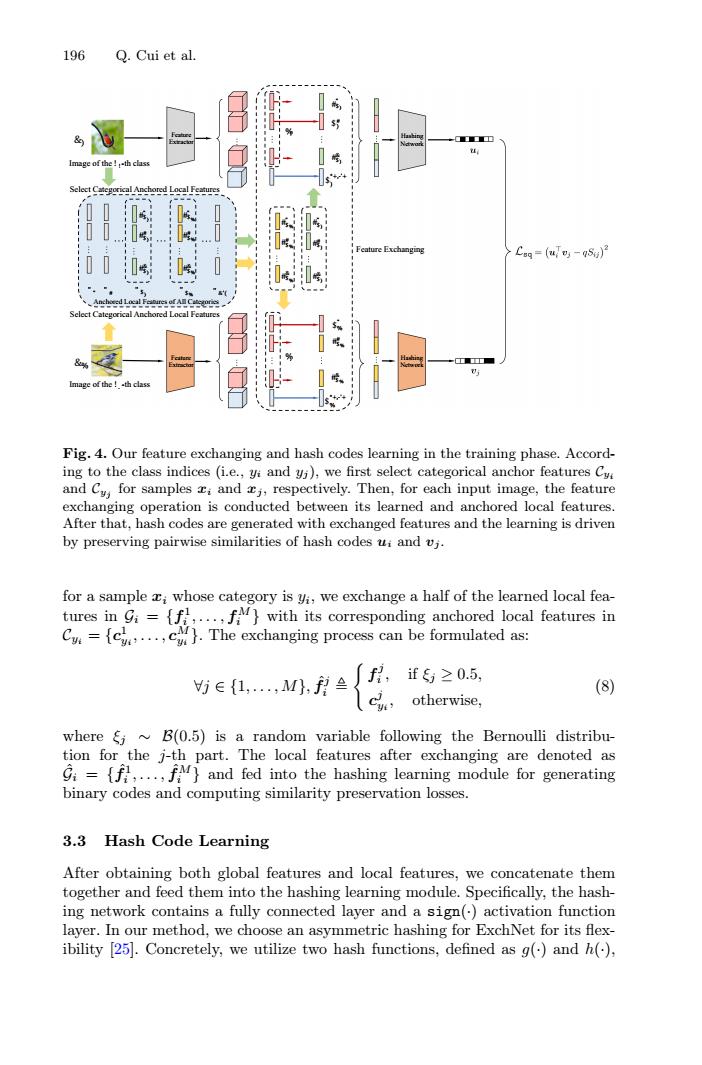

196 Q. Cui et al.

[Figure 4 diagram labels: Image of the $y_i$-th class, Image of the $y_j$-th class, Feature Extractor, Feature Exchanging, Hashing Network, Anchored Local Features of All Categories, Select Categorical Anchored Local Features]
Fig. 4. Our feature exchanging and hash codes learning in the training phase. According to the class indices (i.e., $y_i$ and $y_j$), we first select categorical anchor features $C_{y_i}$ and $C_{y_j}$ for samples $x_i$ and $x_j$, respectively. Then, for each input image, the feature exchanging operation is conducted between its learned and anchored local features. After that, hash codes are generated with exchanged features and the learning is driven by preserving pairwise similarities of hash codes $u_i$ and $v_j$.

for a sample $x_i$ whose category is $y_i$, we exchange a half of the learned local features in $G_i = \{f_i^1, \ldots, f_i^M\}$ with its corresponding anchored local features in $C_{y_i} = \{c_{y_i}^1, \ldots, c_{y_i}^M\}$. The exchanging process can be formulated as:

$$
\forall j \in \{1, \ldots, M\}, \quad \hat{f}_i^j =
\begin{cases}
f_i^j, & \text{if } \xi_j \ge 0.5, \\
c_{y_i}^j, & \text{otherwise},
\end{cases}
\tag{8}
$$

where $\xi_j \sim B(0.5)$ is a random variable following the Bernoulli distribution for the $j$-th part. The local features after exchanging are denoted as $\hat{G}_i = \{\hat{f}_i^1, \ldots, \hat{f}_i^M\}$ and fed into the hashing learning module for generating binary codes and computing similarity preservation losses.

3.3 Hash Code Learning

After obtaining both global features and local features, we concatenate them together and feed them into the hashing learning module. Specifically, the hashing network contains a fully connected layer and a $\mathrm{sign}(\cdot)$ activation function layer. In our method, we choose an asymmetric hashing for ExchNet for its flexibility [25]. Concretely, we utilize two hash functions, defined as $g(\cdot)$ and $h(\cdot)$,