
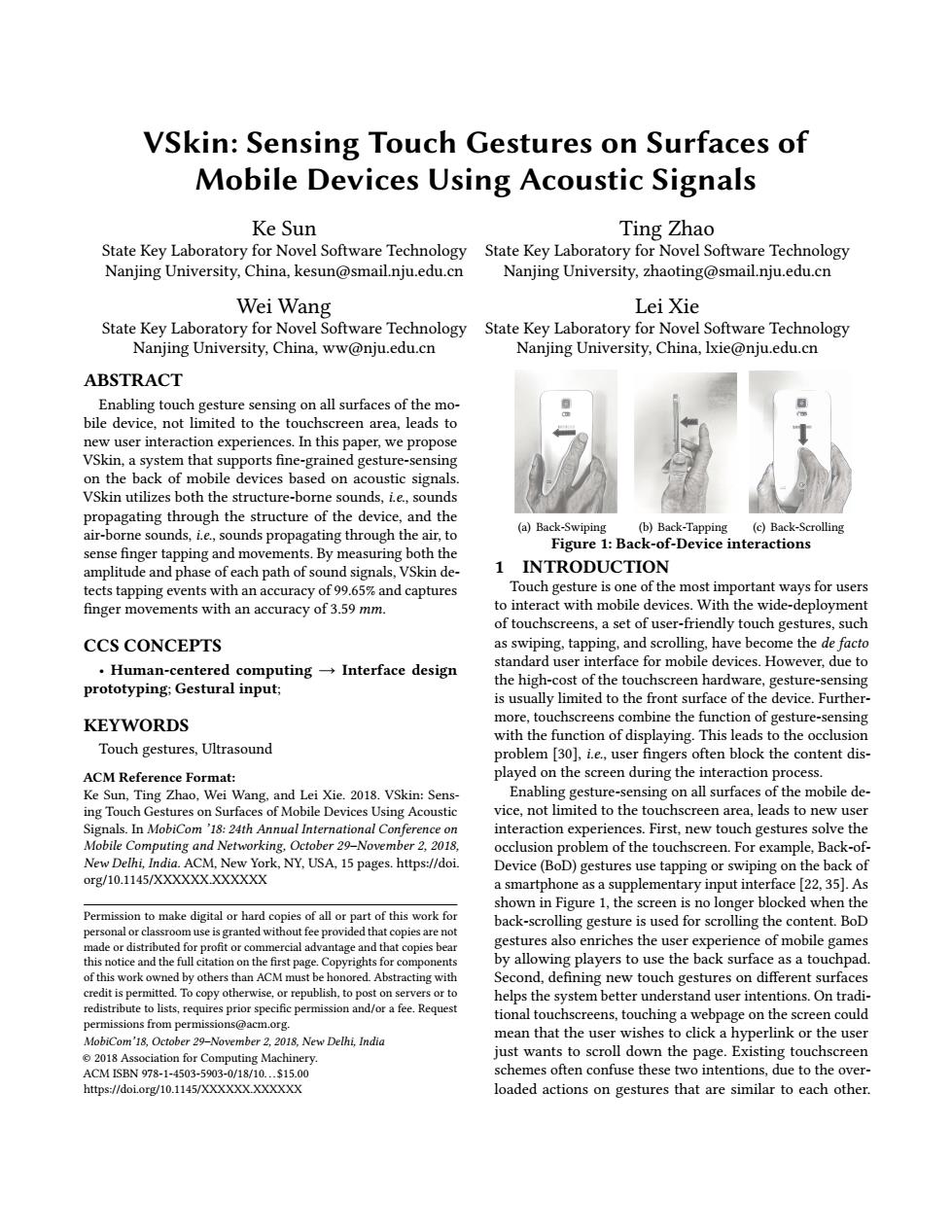

VSkin: Sensing Touch Gestures on Surfaces of Mobile Devices Using Acoustic Signals

Ke Sun, State Key Laboratory for Novel Software Technology, Nanjing University, China, kesun@smail.nju.edu.cn

Ting Zhao, State Key Laboratory for Novel Software Technology, Nanjing University, zhaoting@smail.nju.edu.cn

Wei Wang, State Key Laboratory for Novel Software Technology, Nanjing University, China, ww@nju.edu.cn

Lei Xie, State Key Laboratory for Novel Software Technology, Nanjing University, China, lxie@nju.edu.cn

ABSTRACT

Enabling touch gesture sensing on all surfaces of the mobile device, not limited to the touchscreen area, leads to new user interaction experiences. In this paper, we propose VSkin, a system that supports fine-grained gesture sensing on the back of mobile devices based on acoustic signals. VSkin utilizes both the structure-borne sounds, i.e., sounds propagating through the structure of the device, and the air-borne sounds, i.e., sounds propagating through the air, to sense finger tapping and movements.
By measuring both the amplitude and phase of each path of sound signals, VSkin detects tapping events with an accuracy of 99.65% and captures finger movements with an accuracy of 3.59 mm.

CCS CONCEPTS

• Human-centered computing → Interface design prototyping; Gestural input;

KEYWORDS

Touch gestures, Ultrasound

ACM Reference Format:

Ke Sun, Ting Zhao, Wei Wang, and Lei Xie. 2018. VSkin: Sensing Touch Gestures on Surfaces of Mobile Devices Using Acoustic Signals. In MobiCom ’18: 24th Annual International Conference on Mobile Computing and Networking, October 29–November 2, 2018, New Delhi, India. ACM, New York, NY, USA, 15 pages. https://doi.org/10.1145/XXXXXX.XXXXXX

Permission to make digital or hard copies of all or part of this work for personal or classroom use is granted without fee provided that copies are not made or distributed for profit or commercial advantage and that copies bear this notice and the full citation on the first page. Copyrights for components of this work owned by others than ACM must be honored. Abstracting with credit is permitted. To copy otherwise, or republish, to post on servers or to redistribute to lists, requires prior specific permission and/or a fee. Request permissions from permissions@acm.org.

MobiCom’18, October 29–November 2, 2018, New Delhi, India
© 2018 Association for Computing Machinery.
ACM ISBN 978-1-4503-5903-0/18/10...$15.00
https://doi.org/10.1145/XXXXXX.XXXXXX

Figure 1: Back-of-Device interactions: (a) Back-Swiping, (b) Back-Tapping, (c) Back-Scrolling.

1 INTRODUCTION

Touch gestures are one of the most important ways for users to interact with mobile devices. With the wide deployment of touchscreens, a set of user-friendly touch gestures, such as swiping, tapping, and scrolling, has become the de facto standard user interface for mobile devices. However, due to the high cost of touchscreen hardware, gesture sensing is usually limited to the front surface of the device.
Furthermore, touchscreens combine the function of gesture sensing with the function of displaying. This leads to the occlusion problem [30], i.e., users' fingers often block the content displayed on the screen during the interaction process.

Enabling gesture sensing on all surfaces of the mobile device, not limited to the touchscreen area, leads to new user interaction experiences. First, new touch gestures solve the occlusion problem of the touchscreen. For example, Back-of-Device (BoD) gestures use tapping or swiping on the back of a smartphone as a supplementary input interface [22, 35]. As shown in Figure 1, the screen is no longer blocked when the back-scrolling gesture is used for scrolling the content. BoD gestures also enrich the user experience of mobile games by allowing players to use the back surface as a touchpad.

Second, defining new touch gestures on different surfaces helps the system better understand user intentions. On traditional touchscreens, touching a webpage on the screen could mean that the user wishes to click a hyperlink or that the user just wants to scroll down the page. Existing touchscreen schemes often confuse these two intentions due to the overloaded actions on gestures that are similar to each other.
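The abstract states that VSkin captures finger movements by measuring the amplitude and phase of each sound path. The Python sketch below illustrates only the underlying physics for a single tone travelling over a single path. I/Q demodulation recovers the amplitude and phase of the tone in each frame. An unwrapped phase change Δφ then maps to a path-length change Δd = -λ·Δφ/(2π), where λ = c/f. This is not VSkin's implementation, which must also separate the structure-borne and air-borne paths. The 20 kHz tone, 48 kHz sampling rate, 480-sample frame, 343 m/s speed of sound, and the helper names `iq_demodulate`, `path_length_change`, and `synthetic_tone` are all hypothetical choices made for illustration.

```python
import math

# Assumed constants for illustration only (not taken from the paper).
SPEED_OF_SOUND = 343.0  # m/s, speed of sound in air at roughly 20 °C
TONE_HZ = 20_000.0      # hypothetical near-ultrasonic probe tone
SAMPLE_RATE = 48_000.0  # hypothetical audio sampling rate
FRAME = 480             # samples per frame (10 ms at 48 kHz; 200 tone periods)


def iq_demodulate(samples, freq, fs, start_index=0):
    """Estimate the amplitude and phase of a single-tone component in one frame.

    Mixing with cos / -sin of the carrier and averaging over the frame acts as a
    simple low-pass filter: the double-frequency term averages out (approximately)
    over a frame that spans many carrier periods.
    """
    i_sum = q_sum = 0.0
    for k, x in enumerate(samples):
        theta = 2 * math.pi * freq * (start_index + k) / fs
        i_sum += x * math.cos(theta)
        q_sum -= x * math.sin(theta)
    n = len(samples)
    i_val, q_val = i_sum / n, q_sum / n  # I = A/2 cos(phi), Q = A/2 sin(phi)
    return 2 * math.hypot(i_val, q_val), math.atan2(q_val, i_val)


def path_length_change(phases, freq, c=SPEED_OF_SOUND):
    """Convert per-frame phases (radians) into path-length change (metres).

    A path of length d delays a tone of wavelength lam = c / freq by 2*pi*d/lam
    radians, so a length change dd shifts the phase by -2*pi*dd/lam.
    Phases are unwrapped first so a jump across +/-pi is not read as motion;
    this assumes the path changes by less than lam/2 between frames.
    """
    lam = c / freq
    unwrapped = [phases[0]]
    for p in phases[1:]:
        # Wrap the frame-to-frame phase step into [-pi, pi).
        step = (p - unwrapped[-1] + math.pi) % (2 * math.pi) - math.pi
        unwrapped.append(unwrapped[-1] + step)
    return [-lam * (u - unwrapped[0]) / (2 * math.pi) for u in unwrapped]


def synthetic_tone(path_m, frame_index, amp=0.5):
    """One frame of a tone received over a single path of length path_m metres."""
    lam = SPEED_OF_SOUND / TONE_HZ
    start = frame_index * FRAME
    return [amp * math.cos(2 * math.pi * TONE_HZ * (start + k) / SAMPLE_RATE
                           - 2 * math.pi * path_m / lam)
            for k in range(FRAME)]


if __name__ == "__main__":
    # Simulate a reflecting path that lengthens by 1 mm per frame.
    phases = []
    for m in range(10):
        frame = synthetic_tone(0.05 + 0.001 * m, m)
        _, phase = iq_demodulate(frame, TONE_HZ, SAMPLE_RATE, start_index=m * FRAME)
        phases.append(phase)
    changes = path_length_change(phases, TONE_HZ)
    print([round(d * 1000, 3) for d in changes])  # path-length change in mm
```

Under these assumptions λ is about 17 mm. A phase error of a few degrees therefore corresponds to a sub-millimetre path-length error. This suggests why phase measurement can, in principle, resolve millimetre-scale finger movement.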