
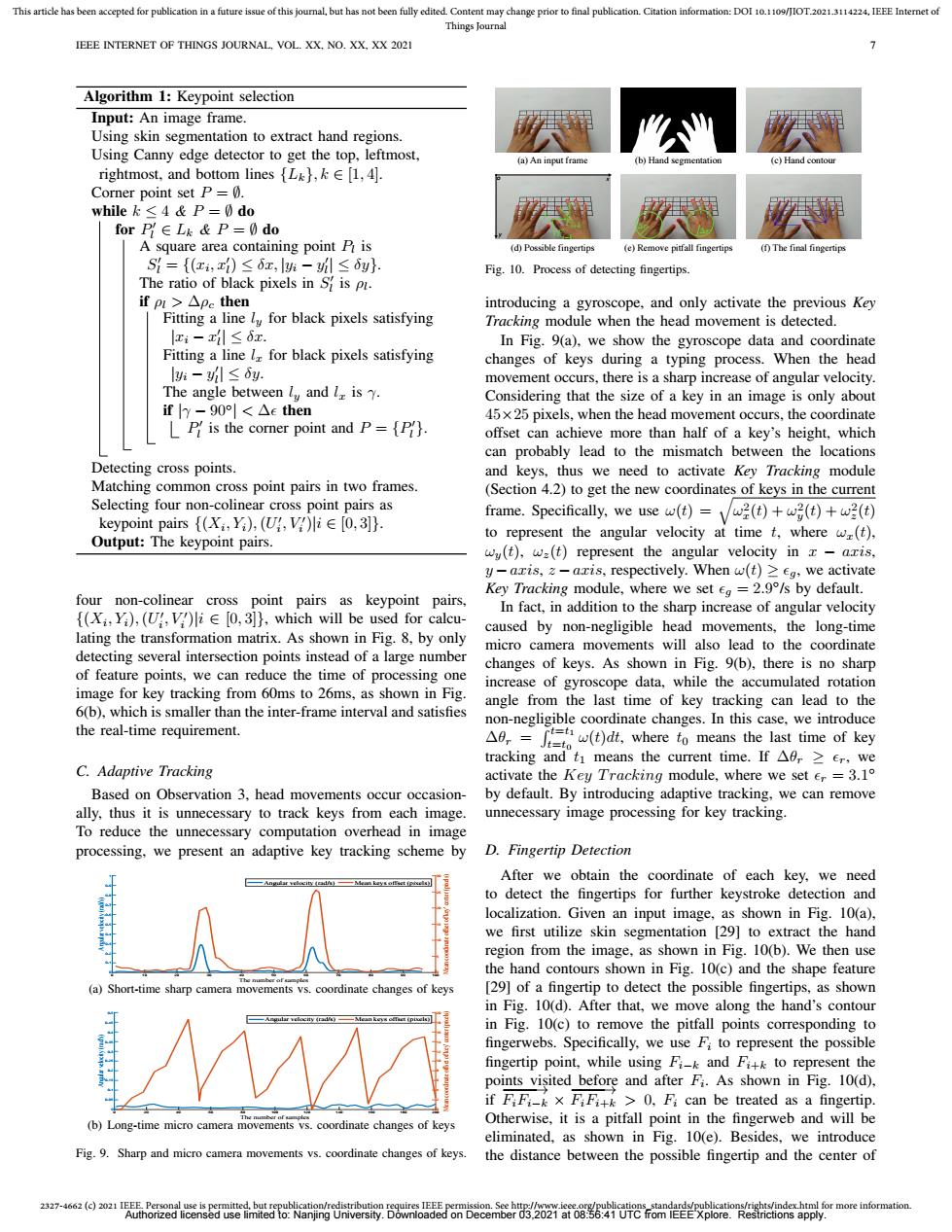

This article has been accepted for publication in a future issue of this journal, but has not been fully edited. Content may change prior to final publication. Citation information: DOI 10.1109/JIOT.2021.3114224, IEEE Internet of Things Journal.

IEEE INTERNET OF THINGS JOURNAL, VOL. XX, NO. XX, XX 2021
2327-4662 (c) 2021 IEEE. Personal use is permitted, but republication/redistribution requires IEEE permission. See http://www.ieee.org/publications_standards/publications/rights/index.html for more information.

Algorithm 1: Keypoint selection

- Input: An image frame.
- Use skin segmentation to extract hand regions.
- Use the Canny edge detector to get the top, leftmost, rightmost, and bottom lines $\{L_k\}$, $k \in [1, 4]$.
- Corner point set $P = \emptyset$.
- while $k \le 4$ and $P = \emptyset$ do
  - for $P'_l \in L_k$ and $P = \emptyset$ do
    - A square area containing point $P'_l$ is $S'_l = \{(x_i, y_i) : |x_i - x'_l| \le \delta x,\ |y_i - y'_l| \le \delta y\}$.
    - The ratio of black pixels in $S'_l$ is $\rho_l$.
    - if $\rho_l > \Delta\rho_c$ then
      - Fit a line $l_y$ to the black pixels satisfying $|x_i - x'_l| \le \delta x$.
      - Fit a line $l_x$ to the black pixels satisfying $|y_i - y'_l| \le \delta y$.
      - The angle between $l_y$ and $l_x$ is $\gamma$.
      - if $|\gamma - 90^\circ| < \Delta$ then
        - $P'_l$ is the corner point and $P = \{P'_l\}$.
- Detect cross points.
- Match common cross point pairs in two frames.
- Select four non-colinear cross point pairs as keypoint pairs $\{(X_i, Y_i), (U'_i, V'_i) \mid i \in [0, 3]\}$.
- Output: The keypoint pairs.

four non-colinear cross point pairs as keypoint pairs, $\{(X_i, Y_i), (U'_i, V'_i) \mid i \in [0, 3]\}$, which will be used for calculating the transformation matrix. As shown in Fig. 8, by detecting only several intersection points instead of a large number of feature points, we can reduce the time of processing one image for key tracking from 60 ms to 26 ms, as shown in Fig. 6(b), which is smaller than the inter-frame interval and satisfies the real-time requirement.

C. Adaptive Tracking

Based on Observation 3, head movements occur only occasionally, so it is unnecessary to track keys in every image. To reduce the unnecessary computation overhead in image processing, we present an adaptive key tracking scheme that introduces a gyroscope and activates the Key Tracking module described earlier only when head movement is detected. In Fig. 9(a), we show the gyroscope data and the coordinate changes of keys during a typing process. When head movement occurs, there is a sharp increase in angular velocity. Considering that a key in an image is only about 45×25 pixels, the coordinate offset during head movement can exceed half of a key's height, which can lead to a mismatch between locations and keys. We therefore need to activate the Key Tracking module (Section 4.2) to get the new coordinates of keys in the current frame. Specifically, we use $\omega(t) = \sqrt{\omega_x^2(t) + \omega_y^2(t) + \omega_z^2(t)}$ to represent the angular velocity at time $t$, where $\omega_x(t)$, $\omega_y(t)$, and $\omega_z(t)$ represent the angular velocity about the x-axis, y-axis, and z-axis, respectively. When $\omega(t) \ge \epsilon_g$, we activate the Key Tracking module, where we set $\epsilon_g = 2.9^\circ/\mathrm{s}$ by default.

[Fig. 9. Sharp and micro camera movements vs. coordinate changes of keys. (a) Short-time sharp camera movements vs. coordinate changes of keys. (b) Long-time micro camera movements vs. coordinate changes of keys. Axes: the number of samples (x), angular velocity in rad/s (left y), mean coordinate offset of key centers in pixels (right y).]

[Fig. 10. Process of detecting fingertips. (a) An input frame. (b) Hand segmentation. (c) Hand contour. (d) Possible fingertips. (e) Remove pitfall fingertips. (f) The final fingertips.]

In fact, in addition to the sharp increase of angular velocity caused by non-negligible head movements, long-time micro camera movements also lead to coordinate changes of keys. As shown in Fig. 9(b), there is no sharp increase in the gyroscope data, yet the rotation angle accumulated since the last key tracking can lead to non-negligible coordinate changes. In this case, we introduce $\Delta\theta_r = \int_{t_0}^{t_1} \omega(t)\,dt$, where $t_0$ is the last time of key tracking and $t_1$ is the current time. If $\Delta\theta_r \ge \epsilon_r$, we activate the Key Tracking module, where we set $\epsilon_r = 3.1^\circ$ by default. By introducing adaptive tracking, we can remove unnecessary image processing for key tracking.
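The two trigger conditions of adaptive tracking (instantaneous angular velocity and accumulated rotation angle) can be illustrated with a minimal Python sketch. It is not the authors' implementation. The class name `AdaptiveTrackingTrigger`, the `update` method, the fixed sampling interval `dt`, the rectangle-rule approximation of the integral, and resetting the accumulated angle whenever tracking fires are all assumptions made for illustration. Gyroscope samples are assumed to be in degrees per second so that they match the default thresholds of 2.9 °/s and 3.1°.

```python
import math


class AdaptiveTrackingTrigger:
    """Hypothetical helper that decides when key tracking should run."""

    def __init__(self, eps_g=2.9, eps_r=3.1):
        self.eps_g = eps_g            # angular velocity threshold (deg/s)
        self.eps_r = eps_r            # accumulated rotation threshold (deg)
        self.accumulated_deg = 0.0    # approximates the integral since t0

    def update(self, wx, wy, wz, dt):
        """Process one gyroscope sample; return True if tracking should run.

        wx, wy, wz: angular velocity about each axis (deg/s, assumed unit).
        dt: time since the previous sample in seconds (assumed known).
        """
        # Magnitude of the angular velocity vector, omega(t).
        omega = math.sqrt(wx * wx + wy * wy + wz * wz)
        # Rectangle-rule approximation of the integral of omega(t) dt.
        self.accumulated_deg += omega * dt
        if omega >= self.eps_g or self.accumulated_deg >= self.eps_r:
            # Assumption: t0 becomes "now" after any key tracking run.
            self.accumulated_deg = 0.0
            return True
        return False


# Slow drift of 1 deg/s sampled every 0.5 s: no single sample exceeds
# eps_g, but the accumulated angle reaches eps_r on the seventh sample.
drift = AdaptiveTrackingTrigger()
decisions = [drift.update(1.0, 0.0, 0.0, 0.5) for _ in range(7)]
```

In this sketch, a single sample of 3 °/s would trigger tracking immediately through the first condition, while the slow drift above triggers it only through the accumulated angle, mirroring the two cases in Fig. 9(a) and Fig. 9(b).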
D. Fingertip Detection

After we obtain the coordinates of each key, we need to detect the fingertips for further keystroke detection and localization. Given an input image, as shown in Fig. 10(a), we first use skin segmentation [29] to extract the hand region from the image, as shown in Fig. 10(b). We then use the hand contour shown in Fig. 10(c) and the shape feature [29] of a fingertip to detect the possible fingertips, as shown in Fig. 10(d). After that, we move along the hand's contour in Fig. 10(c) to remove the pitfall points corresponding to fingerwebs. Specifically, we use $F_i$ to represent a possible fingertip point, and $F_{i-k}$ and $F_{i+k}$ to represent the points visited before and after $F_i$. As shown in Fig. 10(d), if $\overrightarrow{F_i F_{i-k}} \times \overrightarrow{F_i F_{i+k}} > 0$, $F_i$ can be treated as a fingertip. Otherwise, it is a pitfall point in the fingerweb and is eliminated, as shown in Fig. 10(e). Besides, we introduce the distance between the possible fingertip and the center of