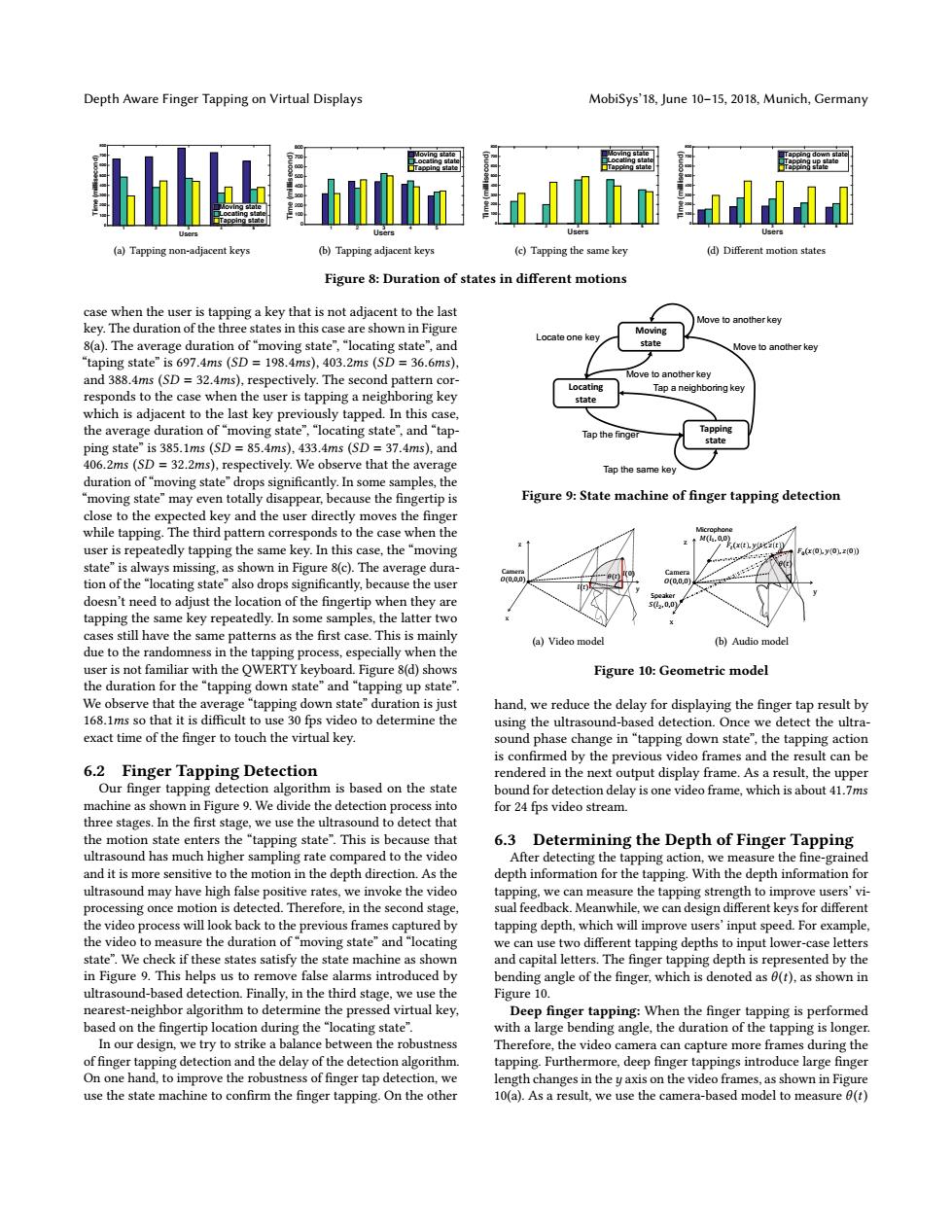

Depth Aware Finger Tapping on Virtual Displays
MobiSys’18, June 10–15, 2018, Munich, Germany

Figure 8: Duration of states in different motions. Panels: (a) Tapping non-adjacent keys, (b) Tapping adjacent keys, (c) Tapping the same key, (d) Different motion states. Axes: Users (1 to 5) and Time (millisecond, 0 to 800). Panels (a) to (c) show the moving state, locating state, and tapping state; panel (d) shows the tapping down state, tapping up state, and tapping state.

case when the user is tapping a key that is not adjacent to the last key. The durations of the three states in this case are shown in Figure 8(a). The average durations of the “moving state”, “locating state”, and “tapping state” are 697.4ms (SD = 198.4ms), 403.2ms (SD = 36.6ms), and 388.4ms (SD = 32.4ms), respectively.
The second pattern corresponds to the case when the user is tapping a key adjacent to the key previously tapped. In this case, the average durations of the “moving state”, “locating state”, and “tapping state” are 385.1ms (SD = 85.4ms), 433.4ms (SD = 37.4ms), and 406.2ms (SD = 32.2ms), respectively. We observe that the average duration of the “moving state” drops significantly. In some samples, the “moving state” may even disappear entirely, because the fingertip is already close to the expected key and the user moves the finger while tapping.

The third pattern corresponds to the case when the user is repeatedly tapping the same key. In this case, the “moving state” is always missing, as shown in Figure 8(c). The average duration of the “locating state” also drops significantly, because the user does not need to adjust the location of the fingertip when tapping the same key repeatedly.

In some samples, the latter two cases still show the same pattern as the first case. This is mainly due to the randomness in the tapping process, especially when the user is not familiar with the QWERTY keyboard.

Figure 8(d) shows the durations of the “tapping down state” and “tapping up state”. We observe that the average “tapping down state” duration is just 168.1ms, so it is difficult to use 30 fps video to determine the exact time at which the finger touches the virtual key.

6.2 Finger Tapping Detection

Our finger tapping detection algorithm is based on the state machine shown in Figure 9. We divide the detection process into three stages. In the first stage, we use the ultrasound to detect that the motion state enters the “tapping state”. This is because ultrasound has a much higher sampling rate than the video and is more sensitive to motion in the depth direction. As the ultrasound may have high false positive rates, we invoke the video processing once motion is detected.
Therefore, in the second stage, the video processing looks back at the previously captured frames to measure the durations of the “moving state” and “locating state”. We check whether these states satisfy the state machine shown in Figure 9. This helps us remove false alarms introduced by the ultrasound-based detection. Finally, in the third stage, we use the nearest-neighbor algorithm to determine the pressed virtual key, based on the fingertip location during the “locating state”.

In our design, we try to strike a balance between the robustness of finger tapping detection and the delay of the detection algorithm. On one hand, to improve the robustness of finger tap detection, we use the state machine to confirm the finger tapping. On the other hand, we reduce the delay for displaying the finger tap result by using the ultrasound-based detection. Once we detect the ultrasound phase change in the “tapping down state”, the tapping action is confirmed by the previous video frames and the result can be rendered in the next output display frame. As a result, the upper bound for detection delay is one video frame, which is about 41.7ms for a 24 fps video stream.

Figure 9: State machine of finger tapping detection. States: moving state, locating state, tapping state. Transition labels: locate one key, tap the finger, tap a neighboring key, tap the same key, move to another key.

Figure 10: Geometric model. (a) Video model. (b) Audio model. Labeled elements: camera, microphone, speaker, and the x, y, z axes.

6.3 Determining the Depth of Finger Tapping

After detecting the tapping action, we measure the fine-grained depth information for the tapping. With the depth information, we can measure the tapping strength to improve users’ visual feedback. Meanwhile, we can assign different keys to different tapping depths, which will improve users’ input speed.
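As a minimal sketch of assigning different keys to different tapping depths, the snippet below maps a tapped letter to its lower-case or capital form depending on the measured tapping depth, expressed here as a bending angle in degrees. The function name `key_for_tap`, the 30-degree threshold, and the choice that the deeper tap yields the capital letter are all assumptions made for illustration; the text does not specify them.

```python
# Hypothetical threshold (in degrees) separating a shallow tap from a deep tap.
# The value is an assumption for illustration, not taken from the paper.
DEEP_TAP_THRESHOLD_DEG = 30.0


def key_for_tap(letter: str, bending_angle_deg: float,
                threshold_deg: float = DEEP_TAP_THRESHOLD_DEG) -> str:
    """Return the character to emit for a tap on `letter`.

    Assumption: a tap whose bending angle reaches the threshold is treated
    as a deep tap and produces the capital letter; a shallower tap produces
    the lower-case letter.
    """
    if len(letter) != 1 or not letter.isalpha():
        # Non-letter keys are left unchanged in this sketch.
        return letter
    if bending_angle_deg >= threshold_deg:
        return letter.upper()
    return letter.lower()
```

For instance, `key_for_tap("a", 10.0)` yields the lower-case letter and `key_for_tap("a", 45.0)` yields the capital; in practice the threshold would have to be calibrated per user.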
For example, we can use two different tapping depths to input lower-case letters and capital letters. The finger tapping depth is represented by the bending angle of the finger, denoted as θ(t), as shown in Figure 10.

Deep finger tapping: When the finger tapping is performed with a large bending angle, the duration of the tapping is longer. Therefore, the video camera can capture more frames during the tapping. Furthermore, deep finger taps introduce large finger length changes along the y axis in the video frames, as shown in Figure 10(a). As a result, we use the camera-based model to measure θ(t)