正在加载图片...

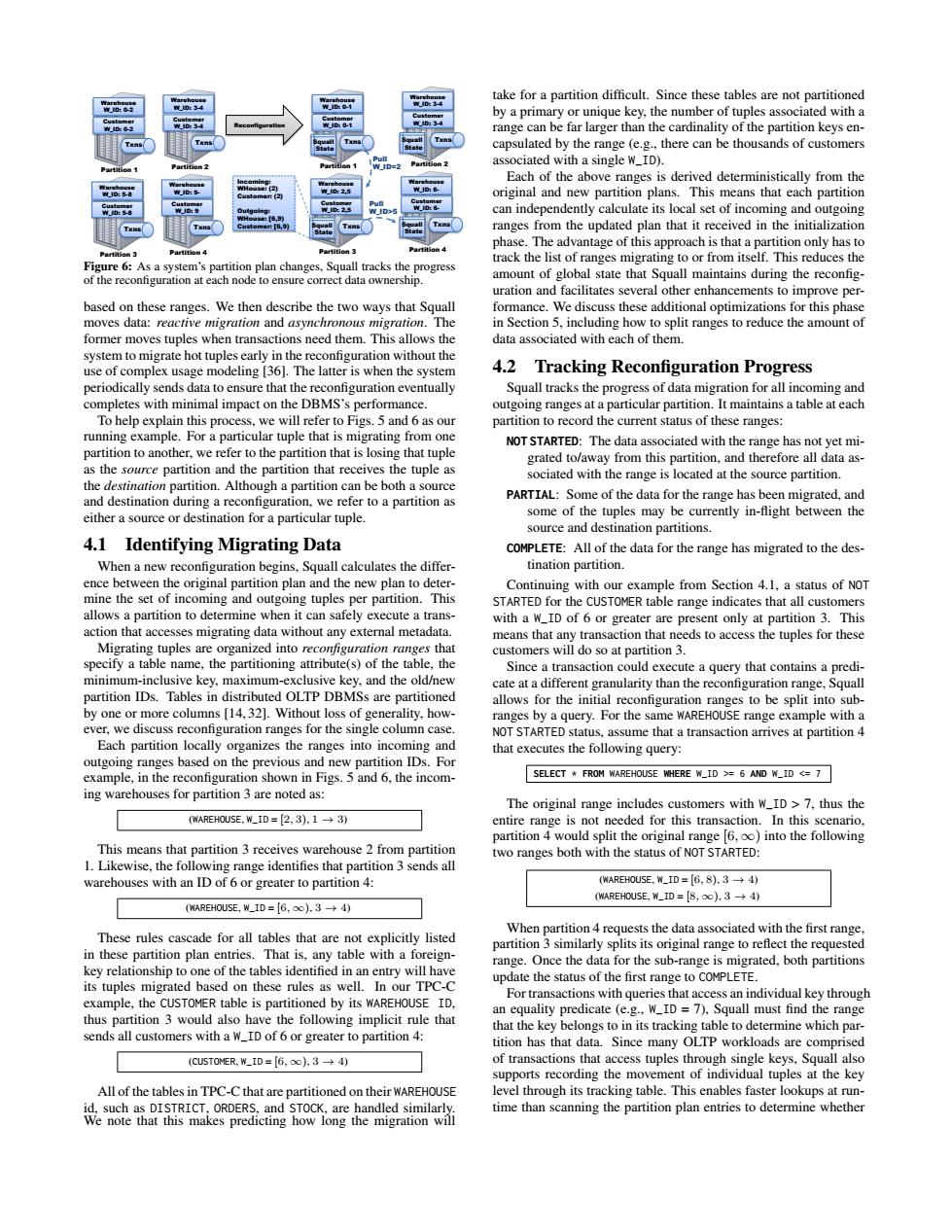

take for a partition difficult Since these tables are not partitic 是→ f cus tracke The pproach is that artniononyhd amount of s al state that Squall mai ntains during the recon d on these We then de ribe the two ways that Squal data 1L This alows the Section5.including how to split rangesmoun o e the am 4.2 Tracking Reconfiguration Progress are th o help explain this pro partition to record the curent status of these range that tupl NOT STARTED:The data as ociated with the range has n that rec PARTIAL:Son ome of tween the 4.1 Identifying Migrating Data COMPLETE:All of the data for the range has migrated to the des- When a new reconfiguration begins.Squall calculates the differ tination partition and ou This Each partition locally anizes the ranges into incor SELECT FROM MAREHOUSE WHERE W_ID 6 AND W_ID 7 ing warehouses for partition3 are noted as edfor this tra ers with wI WAREHOUSE,W_ID [2.3).13) )into the following ve areh E,1D=6,o.3→4 in these That is.an mple.the sends all customers with greater to partition4: the key which p C5 CHER.W_ID=6,6o.3→ of transacti ions that All of the tables in TPC-C that are partitioned on their WAREHOUSE time than scanning the partition plan entres to de Reconfiguration Pull W_ID=2 Pull W_ID>5 Warehouse W_ID: 3-4 Customer W_ID: 3-4 Txns Partition 2 Warehouse W_ID: 0-2 Customer W_ID: 0-2 Txns Partition 1 Warehouse W_ID: 5-8 Customer W_ID: 5-8 Txns Partition 3 Warehouse W_ID: 9- Customer W_ID: 9 Txns Partition 4 Warehouse W_ID: 3-4 Customer W_ID: 3-4 Txns Partition 2 Warehouse W_ID: 0-1 Customer W_ID: 0-1 Txns Partition 1 Warehouse W_ID: 2,5 Customer W_ID: 2,5 Txns Partition 3 Warehouse W_ID: 6- Customer W_ID: 6- Txns Partition 4 Squall State Squall State Squall State Squall State Incoming: WHouse: (2) Customer: (2) Outgoing: WHouse: [6,9) Customer: [6,9) Figure 6: As a system’s partition plan changes, Squall tracks the progress of the reconfiguration at each node to ensure correct data ownership. based on these ranges. We then describe the two ways that Squall moves data: reactive migration and asynchronous migration. The former moves tuples when transactions need them. This allows the system to migrate hot tuples early in the reconfiguration without the use of complex usage modeling [36]. The latter is when the system periodically sends data to ensure that the reconfiguration eventually completes with minimal impact on the DBMS’s performance. To help explain this process, we will refer to Figs. 5 and 6 as our running example. For a particular tuple that is migrating from one partition to another, we refer to the partition that is losing that tuple as the source partition and the partition that receives the tuple as the destination partition. Although a partition can be both a source and destination during a reconfiguration, we refer to a partition as either a source or destination for a particular tuple. 4.1 Identifying Migrating Data When a new reconfiguration begins, Squall calculates the difference between the original partition plan and the new plan to determine the set of incoming and outgoing tuples per partition. This allows a partition to determine when it can safely execute a transaction that accesses migrating data without any external metadata. Migrating tuples are organized into reconfiguration ranges that specify a table name, the partitioning attribute(s) of the table, the minimum-inclusive key, maximum-exclusive key, and the old/new partition IDs. Tables in distributed OLTP DBMSs are partitioned by one or more columns [14, 32]. Without loss of generality, however, we discuss reconfiguration ranges for the single column case. Each partition locally organizes the ranges into incoming and outgoing ranges based on the previous and new partition IDs. For example, in the reconfiguration shown in Figs. 5 and 6, the incoming warehouses for partition 3 are noted as: (WAREHOUSE, W_ID = [2, 3), 1 → 3) This means that partition 3 receives warehouse 2 from partition 1. Likewise, the following range identifies that partition 3 sends all warehouses with an ID of 6 or greater to partition 4: (WAREHOUSE, W_ID = [6, ∞), 3 → 4) These rules cascade for all tables that are not explicitly listed in these partition plan entries. That is, any table with a foreignkey relationship to one of the tables identified in an entry will have its tuples migrated based on these rules as well. In our TPC-C example, the CUSTOMER table is partitioned by its WAREHOUSE ID, thus partition 3 would also have the following implicit rule that sends all customers with a W_ID of 6 or greater to partition 4: (CUSTOMER, W_ID = [6, ∞), 3 → 4) All of the tables in TPC-C that are partitioned on their WAREHOUSE id, such as DISTRICT, ORDERS, and STOCK, are handled similarly. We note that this makes predicting how long the migration will take for a partition difficult. Since these tables are not partitioned by a primary or unique key, the number of tuples associated with a range can be far larger than the cardinality of the partition keys encapsulated by the range (e.g., there can be thousands of customers associated with a single W_ID). Each of the above ranges is derived deterministically from the original and new partition plans. This means that each partition can independently calculate its local set of incoming and outgoing ranges from the updated plan that it received in the initialization phase. The advantage of this approach is that a partition only has to track the list of ranges migrating to or from itself. This reduces the amount of global state that Squall maintains during the reconfiguration and facilitates several other enhancements to improve performance. We discuss these additional optimizations for this phase in Section 5, including how to split ranges to reduce the amount of data associated with each of them. 4.2 Tracking Reconfiguration Progress Squall tracks the progress of data migration for all incoming and outgoing ranges at a particular partition. It maintains a table at each partition to record the current status of these ranges: NOT STARTED: The data associated with the range has not yet migrated to/away from this partition, and therefore all data associated with the range is located at the source partition. PARTIAL: Some of the data for the range has been migrated, and some of the tuples may be currently in-flight between the source and destination partitions. COMPLETE: All of the data for the range has migrated to the destination partition. Continuing with our example from Section 4.1, a status of NOT STARTED for the CUSTOMER table range indicates that all customers with a W_ID of 6 or greater are present only at partition 3. This means that any transaction that needs to access the tuples for these customers will do so at partition 3. Since a transaction could execute a query that contains a predicate at a different granularity than the reconfiguration range, Squall allows for the initial reconfiguration ranges to be split into subranges by a query. For the same WAREHOUSE range example with a NOT STARTED status, assume that a transaction arrives at partition 4 that executes the following query: SELECT * FROM WAREHOUSE WHERE W_ID >= 6 AND W_ID <= 7 The original range includes customers with W_ID > 7, thus the entire range is not needed for this transaction. In this scenario, partition 4 would split the original range [6, ∞) into the following two ranges both with the status of NOT STARTED: (WAREHOUSE, W_ID = [6, 8), 3 → 4) (WAREHOUSE, W_ID = [8, ∞), 3 → 4) When partition 4 requests the data associated with the first range, partition 3 similarly splits its original range to reflect the requested range. Once the data for the sub-range is migrated, both partitions update the status of the first range to COMPLETE. For transactions with queries that access an individual key through an equality predicate (e.g., W_ID = 7), Squall must find the range that the key belongs to in its tracking table to determine which partition has that data. Since many OLTP workloads are comprised of transactions that access tuples through single keys, Squall also supports recording the movement of individual tuples at the key level through its tracking table. This enables faster lookups at runtime than scanning the partition plan entries to determine whether