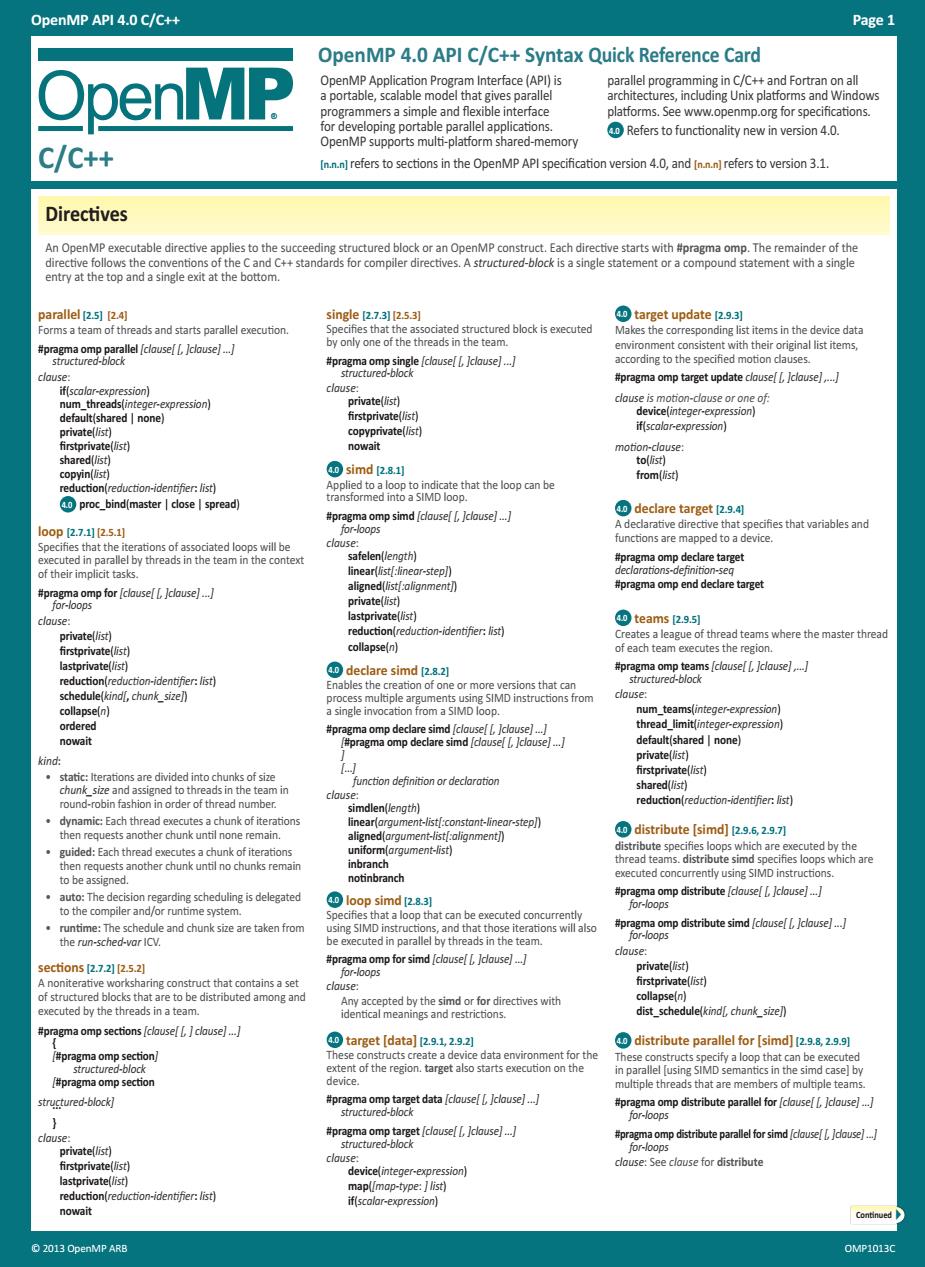

OpenMP 4.0 API C/C++ Syntax Quick Reference Card

© 2013 OpenMP ARB OMP1013C

OpenMP Application Program Interface (API) is a portable, scalable model that gives parallel programmers a simple and flexible interface for developing portable parallel applications. OpenMP supports multi-platform shared-memory parallel programming in C/C++ and Fortran on all architectures, including Unix platforms and Windows platforms. See www.openmp.org for specifications.

4.0 marks functionality new in version 4.0. The bracketed numbers after a directive name are section references: the first [n.n.n] refers to the OpenMP API specification version 4.0 and the second [n.n.n], where present, refers to version 3.1.

Directives

An OpenMP executable directive applies to the succeeding structured block or an OpenMP construct. Each directive starts with #pragma omp. The remainder of the directive follows the conventions of the C and C++ standards for compiler directives. A structured-block is a single statement or a compound statement with a single entry at the top and a single exit at the bottom.

parallel [2.5] [2.4]
Forms a team of threads and starts parallel execution.
#pragma omp parallel [clause[ [, ]clause] ...]
structured-block
clause:
if(scalar-expression)
num_threads(integer-expression)
default(shared | none)
private(list)
firstprivate(list)
shared(list)
copyin(list)
reduction(reduction-identifier: list)
4.0 proc_bind(master | close | spread)

loop [2.7.1] [2.5.1]
Specifies that the iterations of associated loops will be executed in parallel by threads in the team in the context of their implicit tasks.
#pragma omp for [clause[ [, ]clause] ...]
for-loops
clause:
private(list)
firstprivate(list)
lastprivate(list)
reduction(reduction-identifier: list)
schedule(kind[, chunk_size])
collapse(n)
ordered
nowait
kind:
• static: Iterations are divided into chunks of size chunk_size and assigned to threads in the team in round-robin fashion in order of thread number.
• dynamic: Each thread executes a chunk of iterations then requests another chunk until none remain.
• guided: Each thread executes a chunk of iterations then requests another chunk until no chunks remain to be assigned.
• auto: The decision regarding scheduling is delegated to the compiler and/or runtime system.
• runtime: The schedule and chunk size are taken from the run-sched-var ICV.

sections [2.7.2] [2.5.2]
A noniterative worksharing construct that contains a set of structured blocks that are to be distributed among and executed by the threads in a team.
#pragma omp sections [clause[ [, ] clause] ...]
{
[#pragma omp section]
structured-block
[#pragma omp section
structured-block]
...
}
clause:
private(list)
firstprivate(list)
lastprivate(list)
reduction(reduction-identifier: list)
nowait

single [2.7.3] [2.5.3]
Specifies that the associated structured block is executed by only one of the threads in the team.
#pragma omp single [clause[ [, ]clause] ...]
structured-block
clause:
private(list)
firstprivate(list)
copyprivate(list)
nowait

4.0 simd [2.8.1]
Applied to a loop to indicate that the loop can be transformed into a SIMD loop.
#pragma omp simd [clause[ [, ]clause] ...]
for-loops
clause:
safelen(length)
linear(list[:linear-step])
aligned(list[:alignment])
private(list)
lastprivate(list)
reduction(reduction-identifier: list)
collapse(n)

4.0 declare simd [2.8.2]
Enables the creation of one or more versions that can process multiple arguments using SIMD instructions from a single invocation from a SIMD loop.
#pragma omp declare simd [clause[ [, ]clause] ...]
[#pragma omp declare simd [clause[ [, ]clause] ...] ]
[...]
function definition or declaration
clause:
simdlen(length)
linear(argument-list[:constant-linear-step])
aligned(argument-list[:alignment])
uniform(argument-list)
inbranch
notinbranch

4.0 loop simd [2.8.3]
Specifies a loop that can be executed concurrently using SIMD instructions, and that those iterations will also be executed in parallel by threads in the team.
#pragma omp for simd [clause[ [, ]clause] ...]
for-loops
clause: Any accepted by the simd or for directives with identical meanings and restrictions.

4.0 target [data] [2.9.1, 2.9.2]
These constructs create a device data environment for the extent of the region. target also starts execution on the device.
#pragma omp target data [clause[ [, ]clause] ...]
structured-block
#pragma omp target [clause[ [, ]clause] ...]
structured-block
clause:
device(integer-expression)
map([map-type: ] list)
if(scalar-expression)

4.0 target update [2.9.3]
Makes the corresponding list items in the device data environment consistent with their original list items, according to the specified motion clauses.
#pragma omp target update clause[ [ [, ]clause] ...]
clause is motion-clause or one of:
device(integer-expression)
if(scalar-expression)
motion-clause:
to(list)
from(list)

4.0 declare target [2.9.4]
A declarative directive that specifies that variables and functions are mapped to a device.
#pragma omp declare target
declarations-definition-seq
#pragma omp end declare target

4.0 teams [2.9.5]
Creates a league of thread teams where the master thread of each team executes the region.
#pragma omp teams [clause[ [, ]clause] ...]
structured-block
clause:
num_teams(integer-expression)
thread_limit(integer-expression)
default(shared | none)
private(list)
firstprivate(list)
shared(list)
reduction(reduction-identifier: list)

4.0 distribute [simd] [2.9.6, 2.9.7]
distribute specifies loops which are executed by the thread teams. distribute simd specifies loops which are executed concurrently using SIMD instructions.
#pragma omp distribute [clause[ [, ]clause] ...]
for-loops
#pragma omp distribute simd [clause[ [, ]clause] ...]
for-loops
clause:
private(list)
firstprivate(list)
collapse(n)
dist_schedule(kind[, chunk_size])

4.0 distribute parallel for [simd] [2.9.8, 2.9.9]
These constructs specify a loop that can be executed in parallel [using SIMD semantics in the simd case] by multiple threads that are members of multiple teams.
#pragma omp distribute parallel for [clause[ [, ]clause] ...]
for-loops
#pragma omp distribute parallel for simd [clause[ [, ]clause] ...]
for-loops
clause: See clause for distribute