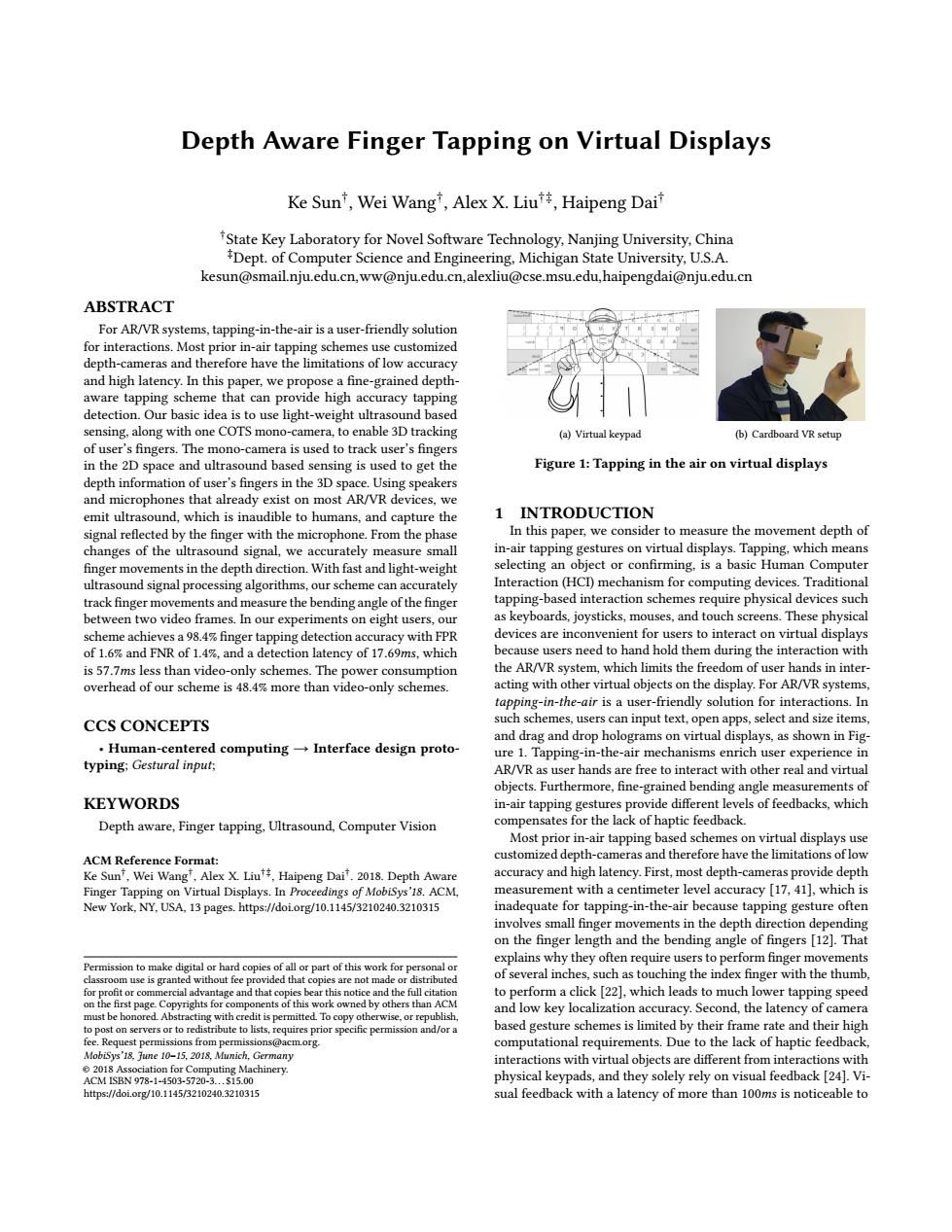

Depth Aware Finger Tapping on Virtual Displays

Ke Sun†, Wei Wang†, Alex X. Liu†‡, Haipeng Dai†
†State Key Laboratory for Novel Software Technology, Nanjing University, China
‡Dept. of Computer Science and Engineering, Michigan State University, U.S.A.
kesun@smail.nju.edu.cn, ww@nju.edu.cn, alexliu@cse.msu.edu, haipengdai@nju.edu.cn

ABSTRACT

For AR/VR systems, tapping-in-the-air is a user-friendly solution for interactions.
Most prior in-air tapping schemes use customized depth-cameras and therefore suffer from low accuracy and high latency. In this paper, we propose a fine-grained depth-aware tapping scheme that provides high-accuracy tapping detection. Our basic idea is to use light-weight ultrasound-based sensing, along with one COTS mono-camera, to enable 3D tracking of the user’s fingers. The mono-camera tracks the user’s fingers in the 2D space, and ultrasound-based sensing provides the depth information of the user’s fingers in the 3D space. Using speakers and microphones that already exist on most AR/VR devices, we emit ultrasound, which is inaudible to humans, and capture the signal reflected by the finger with the microphone. From the phase changes of the ultrasound signal, we accurately measure small finger movements in the depth direction. With fast and light-weight ultrasound signal processing algorithms, our scheme can accurately track finger movements and measure the bending angle of the finger between two video frames. In our experiments on eight users, our scheme achieves a 98.4% finger tapping detection accuracy with an FPR of 1.6% and an FNR of 1.4%, and a detection latency of 17.69ms, which is 57.7ms less than video-only schemes. The power consumption overhead of our scheme is 48.4% more than video-only schemes.

CCS CONCEPTS

• Human-centered computing → Interface design prototyping; Gestural input;

KEYWORDS

Depth aware, Finger tapping, Ultrasound, Computer Vision

ACM Reference Format:
Ke Sun†, Wei Wang†, Alex X. Liu†‡, Haipeng Dai†. 2018. Depth Aware Finger Tapping on Virtual Displays. In Proceedings of MobiSys’18. ACM, New York, NY, USA, 13 pages.
https://doi.org/10.1145/3210240.3210315

Permission to make digital or hard copies of all or part of this work for personal or classroom use is granted without fee provided that copies are not made or distributed for profit or commercial advantage and that copies bear this notice and the full citation on the first page. Copyrights for components of this work owned by others than ACM must be honored. Abstracting with credit is permitted. To copy otherwise, or republish, to post on servers or to redistribute to lists, requires prior specific permission and/or a fee. Request permissions from permissions@acm.org.
MobiSys’18, June 10–15, 2018, Munich, Germany
© 2018 Association for Computing Machinery.
ACM ISBN 978-1-4503-5720-3. . . $15.00
https://doi.org/10.1145/3210240.3210315

Figure 1: Tapping in the air on virtual displays. (a) Virtual keypad; (b) Cardboard VR setup.

1 INTRODUCTION

In this paper, we consider measuring the movement depth of in-air tapping gestures on virtual displays. Tapping, which means selecting an object or confirming an action, is a basic Human Computer Interaction (HCI) mechanism for computing devices. Traditional tapping-based interaction schemes require physical devices such as keyboards, joysticks, mice, and touch screens. These physical devices are inconvenient for interacting with virtual displays because users need to hold them during the interaction with the AR/VR system, which limits the freedom of the user’s hands to interact with other virtual objects on the display. For AR/VR systems, tapping-in-the-air is a user-friendly solution for interactions. In such schemes, users can input text, open apps, select and size items, and drag and drop holograms on virtual displays, as shown in Figure 1. Tapping-in-the-air mechanisms enrich the user experience in AR/VR because the user’s hands are free to interact with other real and virtual objects.
Furthermore, fine-grained bending angle measurements of in-air tapping gestures provide different levels of feedback, which compensates for the lack of haptic feedback.

Most prior in-air tapping schemes on virtual displays use customized depth-cameras and therefore suffer from low accuracy and high latency. First, most depth-cameras provide depth measurements with centimeter-level accuracy [17, 41], which is inadequate for tapping-in-the-air because tapping gestures often involve small finger movements in the depth direction, depending on the finger length and the bending angle of the fingers [12]. This explains why they often require users to perform finger movements of several inches, such as touching the index finger with the thumb, to perform a click [22], which leads to much lower tapping speed and low key localization accuracy. Second, the latency of camera-based gesture schemes is limited by their frame rate and their high computational requirements. Due to the lack of haptic feedback, interactions with virtual objects differ from interactions with physical keypads, and they rely solely on visual feedback [24]. Visual feedback with a latency of more than 100ms is noticeable to