
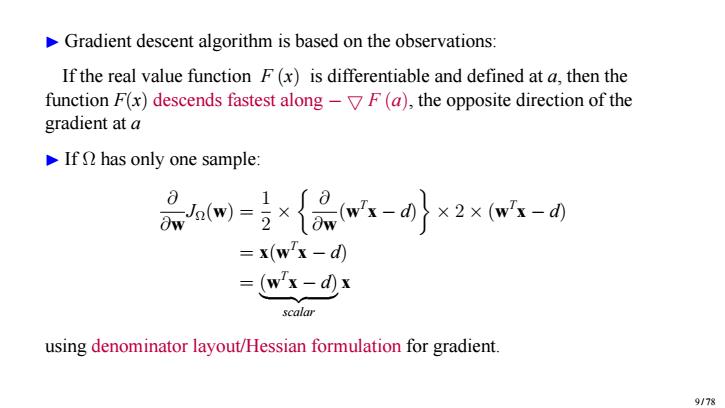

▶ The gradient descent algorithm is based on the following observation: if a real-valued function $F(x)$ is defined and differentiable at a point $a$, then $F(x)$ decreases fastest along $-\nabla F(a)$, the direction opposite to the gradient at $a$.

▶ If $\Omega$ has only one sample:

$$
\frac{\partial}{\partial w} J_\Omega(w)
= \frac{1}{2} \times \frac{\partial}{\partial w}\left(w^T x - d\right) \times 2 \times \left(w^T x - d\right)
= x\left(w^T x - d\right)
= \underbrace{\left(w^T x - d\right)}_{\text{scalar}}\, x
$$

using the denominator layout (Hessian formulation) for the gradient.
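The single-sample gradient above can be checked numerically. The following is a minimal sketch, not the slide's own code. It assumes the cost is $J_\Omega(w) = \frac{1}{2}(w^T x - d)^2$, which is inferred from the derivative shown rather than stated on the slide. The function names, the step size `eta`, and the sample values are hypothetical, chosen only for illustration.

```python
def dot(u, v):
    """Inner product of two equal-length lists."""
    return sum(ui * vi for ui, vi in zip(u, v))


def loss(w, x, d):
    # Assumed single-sample cost: J(w) = 1/2 * (w^T x - d)^2
    return 0.5 * (dot(w, x) - d) ** 2


def grad(w, x, d):
    # Analytic gradient from the derivation: (w^T x - d) * x
    err = dot(w, x) - d          # scalar error term
    return [err * xi for xi in x]


def numeric_grad(w, x, d, h=1e-6):
    # Central finite differences, used only to sanity-check grad()
    g = []
    for i in range(len(w)):
        w_plus = list(w)
        w_minus = list(w)
        w_plus[i] += h
        w_minus[i] -= h
        g.append((loss(w_plus, x, d) - loss(w_minus, x, d)) / (2 * h))
    return g


def gradient_descent(w, x, d, eta=0.1, steps=50):
    # Repeatedly step against the gradient: w <- w - eta * grad J(w)
    for _ in range(steps):
        g = grad(w, x, d)
        w = [wi - eta * gi for wi, gi in zip(w, g)]
    return w


# Hypothetical sample (x, d) and starting point
x = [1.0, 2.0]
d = 3.0
w_final = gradient_descent([0.0, 0.0], x, d)
print(loss(w_final, x, d))
```

With a single sample, each step scales the error $w^T x - d$ by $1 - \eta\,\lVert x \rVert^2$, so the iteration converges only when $0 < \eta\,\lVert x \rVert^2 < 2$. The values above give a factor of 0.5 per step.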