
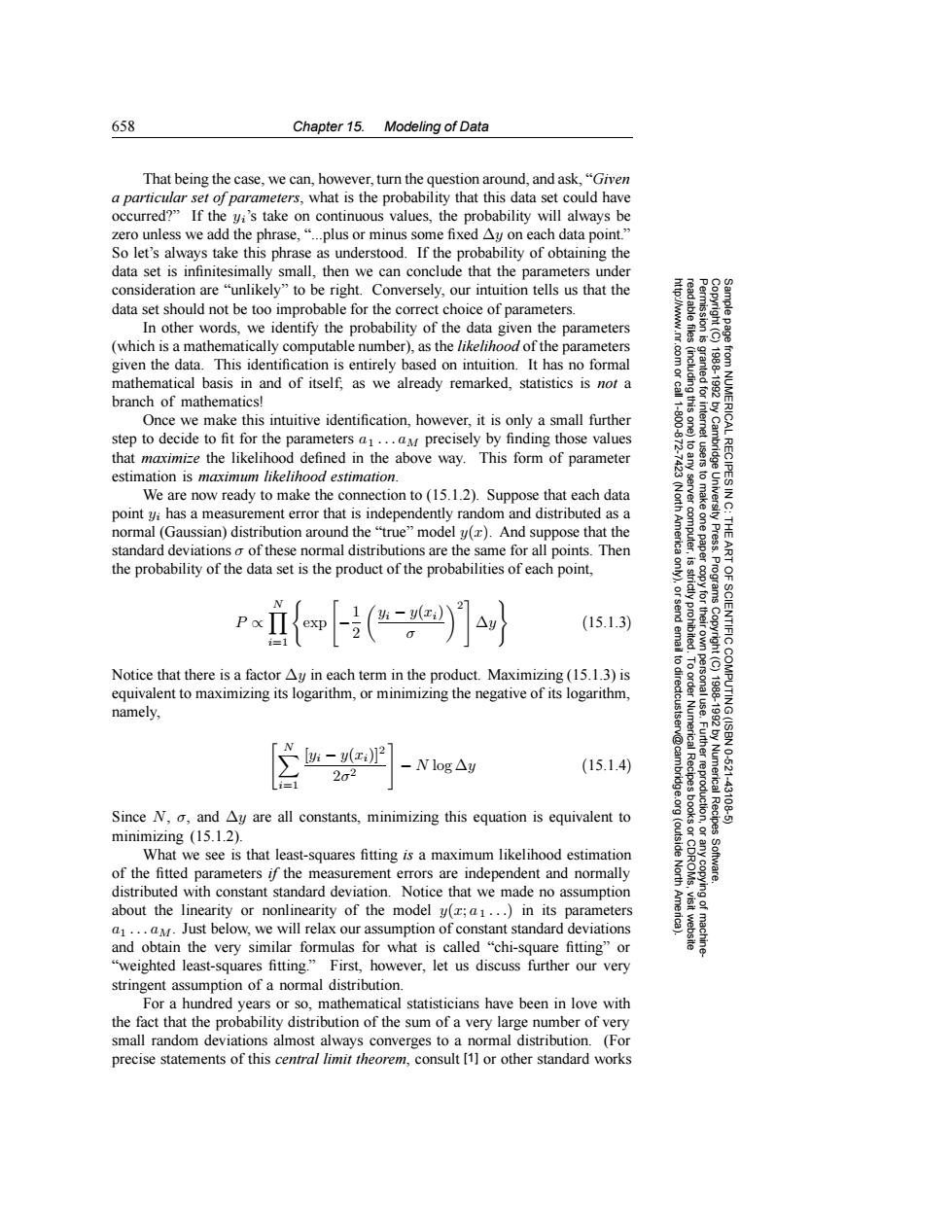

658 Chapter 15. Modeling of Data

Sample page from NUMERICAL RECIPES IN C: THE ART OF SCIENTIFIC COMPUTING (ISBN 0-521-43108-5). Copyright (C) 1988-1992 by Cambridge University Press. Programs Copyright (C) 1988-1992 by Numerical Recipes Software. Permission is granted for internet users to make one paper copy for their own personal use. Further reproduction, or any copying of machine-readable files (including this one) to any server computer, is strictly prohibited. To order Numerical Recipes books or CDROMs, visit website http://www.nr.com or call 1-800-872-7423 (North America only), or send email to directcustserv@cambridge.org (outside North America).
That being the case, we can, however, turn the question around, and ask, “Given a particular set of parameters, what is the probability that this data set could have occurred?” If the $y_i$’s take on continuous values, the probability will always be zero unless we add the phrase, “...plus or minus some fixed $\Delta y$ on each data point.” So let’s always take this phrase as understood. If the probability of obtaining the data set is infinitesimally small, then we can conclude that the parameters under consideration are “unlikely” to be right. Conversely, our intuition tells us that the data set should not be too improbable for the correct choice of parameters.

In other words, we identify the probability of the data given the parameters (which is a mathematically computable number), as the likelihood of the parameters given the data. This identification is entirely based on intuition. It has no formal mathematical basis in and of itself; as we already remarked, statistics is not a branch of mathematics! Once we make this intuitive identification, however, it is only a small further step to decide to fit for the parameters $a_1 \ldots a_M$ precisely by finding those values that maximize the likelihood defined in the above way. This form of parameter estimation is maximum likelihood estimation.

We are now ready to make the connection to (15.1.2). Suppose that each data point $y_i$ has a measurement error that is independently random and distributed as a normal (Gaussian) distribution around the “true” model $y(x)$. And suppose that the standard deviations $\sigma$ of these normal distributions are the same for all points. Then the probability of the data set is the product of the probabilities of each point,

$$
P \propto \prod_{i=1}^{N} \left\{ \exp\left[ -\frac{1}{2} \left( \frac{y_i - y(x_i)}{\sigma} \right)^2 \right] \Delta y \right\} \tag{15.1.3}
$$

Notice that there is a factor $\Delta y$ in each term in the product.
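To make (15.1.3) concrete, here is a minimal Python sketch, assuming a user-supplied trial model and the same σ and ∆y for every point. It evaluates the product directly and, separately, its negative logarithm written as a sum. The function names, the two trial models, and the data are hypothetical, and the constant Gaussian normalization hidden in the proportionality sign is dropped.

```python
import math


def likelihood(xs, ys, model, sigma, dy):
    """Product in (15.1.3), without the constant Gaussian normalization
    1/(sigma*sqrt(2*pi)) that the proportionality sign absorbs."""
    p = 1.0
    for x, y in zip(xs, ys):
        r = (y - model(x)) / sigma  # residual in units of sigma
        p *= math.exp(-0.5 * r * r) * dy  # one factor per data point
    return p


def neg_log_likelihood(xs, ys, model, sigma, dy):
    """Negative logarithm of likelihood(), accumulated as a sum."""
    ssr = sum((y - model(x)) ** 2 for x, y in zip(xs, ys))
    return ssr / (2.0 * sigma ** 2) - len(xs) * math.log(dy)


def good_model(x):  # hypothetical trial model y(x) = x
    return x


def poor_model(x):  # hypothetical trial model y(x) = 0.5 x
    return 0.5 * x


xs = [0.0, 1.0, 2.0, 3.0]
ys = [0.1, 0.9, 2.2, 2.9]  # made-up data for illustration
p_good = likelihood(xs, ys, good_model, sigma=0.5, dy=0.01)
p_poor = likelihood(xs, ys, poor_model, sigma=0.5, dy=0.01)
nll_good = neg_log_likelihood(xs, ys, good_model, sigma=0.5, dy=0.01)
print(p_good, p_poor, nll_good)
```

The trial model that tracks the data more closely gets the larger probability. Working with the logarithm is also the practical choice: for large N the raw product underflows to zero in floating point, while the sum stays well scaled.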
Maximizing (15.1.3) is equivalent to maximizing its logarithm, or minimizing the negative of its logarithm, namely,

$$
\left[ \sum_{i=1}^{N} \frac{[y_i - y(x_i)]^2}{2\sigma^2} \right] - N \log \Delta y \tag{15.1.4}
$$

Since $N$, $\sigma$, and $\Delta y$ are all constants, minimizing this equation is equivalent to minimizing (15.1.2).

What we see is that least-squares fitting is a maximum likelihood estimation of the fitted parameters if the measurement errors are independent and normally distributed with constant standard deviation. Notice that we made no assumption about the linearity or nonlinearity of the model $y(x; a_1 \ldots)$ in its parameters $a_1 \ldots a_M$. Just below, we will relax our assumption of constant standard deviations and obtain the very similar formulas for what is called “chi-square fitting” or “weighted least-squares fitting.” First, however, let us discuss further our very stringent assumption of a normal distribution.

For a hundred years or so, mathematical statisticians have been in love with the fact that the probability distribution of the sum of a very large number of very small random deviations almost always converges to a normal distribution. (For precise statements of this central limit theorem, consult [1] or other standard works
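The equivalence between maximum likelihood and least squares can be checked numerically. The sketch below assumes a straight-line model y(x) = a + bx purely for illustration (the argument in the text does not require linearity). It maximizes the likelihood by a brute-force grid search over (a, b) using (15.1.4), then compares the result with the closed-form least-squares line from the normal equations. All function names, the made-up data, and the grid bounds are hypothetical.

```python
import math


def neg_log_likelihood(a, b, xs, ys, sigma, dy):
    """(15.1.4) for the hypothetical straight-line model y(x) = a + b*x."""
    ssr = sum((y - (a + b * x)) ** 2 for x, y in zip(xs, ys))
    return ssr / (2.0 * sigma ** 2) - len(xs) * math.log(dy)


def least_squares_line(xs, ys):
    """Closed-form minimizer of the sum of squared residuals for a line."""
    n = len(xs)
    sx, sy = sum(xs), sum(ys)
    sxx = sum(x * x for x in xs)
    sxy = sum(x * y for x, y in zip(xs, ys))
    b = (n * sxy - sx * sy) / (n * sxx - sx * sx)
    a = (sy - b * sx) / n
    return a, b


def grid_mle(xs, ys, sigma, dy, lo=-2.0, hi=2.0, steps=201):
    """Brute-force maximum likelihood: scan a square grid of (a, b) values
    and return the pair with the smallest negative log-likelihood."""
    step = (hi - lo) / (steps - 1)
    grid = [lo + k * step for k in range(steps)]
    return min(((a, b) for a in grid for b in grid),
               key=lambda ab: neg_log_likelihood(ab[0], ab[1], xs, ys, sigma, dy))


xs = [0.0, 1.0, 2.0, 3.0, 4.0]
ys = [1.1, 2.9, 5.2, 7.1, 8.8]  # made-up data for illustration
a_ls, b_ls = least_squares_line(xs, ys)
a_ml, b_ml = grid_mle(xs, ys, sigma=0.3, dy=0.01)
a_ml2, b_ml2 = grid_mle(xs, ys, sigma=2.0, dy=0.5)
print((a_ls, b_ls), (a_ml, b_ml), (a_ml2, b_ml2))
```

Running the grid search with two different choices of σ and ∆y returns the same (a, b), since those constants only rescale and shift (15.1.4). The grid scan is used here only because it relies on nothing but likelihood comparisons; it is far too slow for real fits, where one would use the closed form or a proper optimizer.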