正在加载图片...

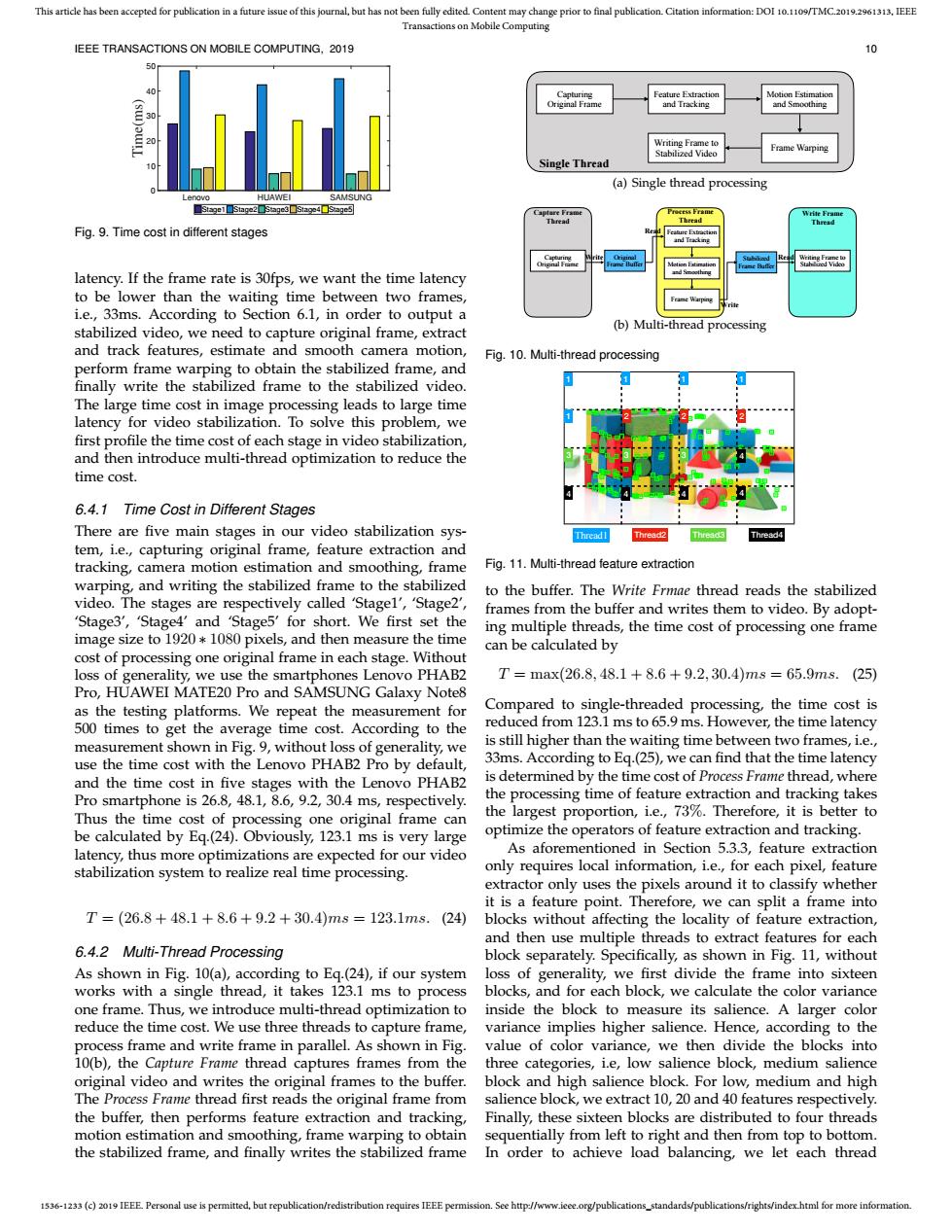

This article has been accepted for publication in a future issue of this journal,but has not been fully edited.Content may change prior to final publication.Citation information:DOI 10.1109/TMC.2019.2961313.IEEE Transactions on Mobile Computing IEEE TRANSACTIONS ON MOBILE COMPUTING,2019 10 山 eature Extracton and Tracking and Smoothing Writing Frame to Frame Warping Stabilized Video Single Thread (a)Single thread processing HUAWEI SAMSUNG ■3ta91Sta9e23a9e3■Sa24☐a9e☒ re Frame EssT室配 Thread Thread Fig.9.Time cost in different stages g latency.If the frame rate is 30fps,we want the time latency to be lower than the waiting time between two frames, i.e.,33ms.According to Section 6.1,in order to output a stabilized video,we need to capture original frame,extract (b)Multi-thread processing and track features,estimate and smooth camera motion, Fig.10.Multi-thread processing perform frame warping to obtain the stabilized frame,and finally write the stabilized frame to the stabilized video. The large time cost in image processing leads to large time latency for video stabilization.To solve this problem,we first profile the time cost of each stage in video stabilization, and then introduce multi-thread optimization to reduce the time cost. 6.4.1 Time Cost in Different Stages There are five main stages in our video stabilization sys- Threadl Thread2 Thread4 tem,i.e.,capturing original frame,feature extraction and tracking,camera motion estimation and smoothing,frame Fig.11.Multi-thread feature extraction warping,and writing the stabilized frame to the stabilized to the buffer.The Write Frmae thread reads the stabilized video.The stages are respectively called 'Stagel','Stage2', frames from the buffer and writes them to video.By adopt- 'Stage3','Stage4'and 'Stage5'for short.We first set the ing multiple threads,the time cost of processing one frame image size to 1920*1080 pixels,and then measure the time can be calculated by cost of processing one original frame in each stage.Without loss of generality,we use the smartphones Lenovo PHAB2 T=max(26.8,48.1+8.6+9.2,30.4)ms=65.9ms.(25) Pro,HUAWEI MATE20 Pro and SAMSUNG Galaxy Note8 as the testing platforms.We repeat the measurement for Compared to single-threaded processing,the time cost is 500 times to get the average time cost.According to the reduced from 123.1 ms to 65.9 ms.However,the time latency measurement shown in Fig.9,without loss of generality,we is still higher than the waiting time between two frames,i.e., use the time cost with the Lenovo PHAB2 Pro by default, 33ms.According to Eq.(25),we can find that the time latency and the time cost in five stages with the Lenovo PHAB2 is determined by the time cost of Process Frame thread,where Pro smartphone is 26.8,48.1,8.6,9.2,30.4 ms,respectively. the processing time of feature extraction and tracking takes Thus the time cost of processing one original frame can the largest proportion,i.e.,73%.Therefore,it is better to be calculated by Eq.(24).Obviously,123.1 ms is very large optimize the operators of feature extraction and tracking. latency,thus more optimizations are expected for our video As aforementioned in Section 5.3.3,feature extraction stabilization system to realize real time processing. only requires local information,i.e.,for each pixel,feature extractor only uses the pixels around it to classify whether it is a feature point.Therefore,we can split a frame into T=(26.8+48.1+8.6+9.2+30.4)ms=123.1ms.(24) blocks without affecting the locality of feature extraction, and then use multiple threads to extract features for each 6.4.2 Multi-Thread Processing block separately.Specifically,as shown in Fig.11,without As shown in Fig.10(a),according to Eq.(24),if our system loss of generality,we first divide the frame into sixteen works with a single thread,it takes 123.1 ms to process blocks,and for each block,we calculate the color variance one frame.Thus,we introduce multi-thread optimization to inside the block to measure its salience.A larger color reduce the time cost.We use three threads to capture frame, variance implies higher salience.Hence,according to the process frame and write frame in parallel.As shown in Fig. value of color variance,we then divide the blocks into 10(b),the Capture Frame thread captures frames from the three categories,i.e,low salience block,medium salience original video and writes the original frames to the buffer. block and high salience block.For low,medium and high The Process Frame thread first reads the original frame from salience block,we extract 10,20 and 40 features respectively. the buffer,then performs feature extraction and tracking, Finally,these sixteen blocks are distributed to four threads motion estimation and smoothing,frame warping to obtain sequentially from left to right and then from top to bottom. the stabilized frame,and finally writes the stabilized frame In order to achieve load balancing,we let each thread 1536-1233(c)2019 IEEE Personal use is permitted,but republication/redistribution requires IEEE permission.See http://www.ieee.org/publications_standards/publications/rights/index.html for more information.1536-1233 (c) 2019 IEEE. Personal use is permitted, but republication/redistribution requires IEEE permission. See http://www.ieee.org/publications_standards/publications/rights/index.html for more information. This article has been accepted for publication in a future issue of this journal, but has not been fully edited. Content may change prior to final publication. Citation information: DOI 10.1109/TMC.2019.2961313, IEEE Transactions on Mobile Computing IEEE TRANSACTIONS ON MOBILE COMPUTING, 2019 10 Lenovo HUAWEI SAMSUNG 0 10 20 30 40 50 Time(ms) Stage1 Stage2 Stage3 Stage4 Stage5 Fig. 9. Time cost in different stages latency. If the frame rate is 30fps, we want the time latency to be lower than the waiting time between two frames, i.e., 33ms. According to Section 6.1, in order to output a stabilized video, we need to capture original frame, extract and track features, estimate and smooth camera motion, perform frame warping to obtain the stabilized frame, and finally write the stabilized frame to the stabilized video. The large time cost in image processing leads to large time latency for video stabilization. To solve this problem, we first profile the time cost of each stage in video stabilization, and then introduce multi-thread optimization to reduce the time cost. 6.4.1 Time Cost in Different Stages There are five main stages in our video stabilization system, i.e., capturing original frame, feature extraction and tracking, camera motion estimation and smoothing, frame warping, and writing the stabilized frame to the stabilized video. The stages are respectively called ‘Stage1’, ‘Stage2’, ‘Stage3’, ‘Stage4’ and ‘Stage5’ for short. We first set the image size to 1920 ∗ 1080 pixels, and then measure the time cost of processing one original frame in each stage. Without loss of generality, we use the smartphones Lenovo PHAB2 Pro, HUAWEI MATE20 Pro and SAMSUNG Galaxy Note8 as the testing platforms. We repeat the measurement for 500 times to get the average time cost. According to the measurement shown in Fig. 9, without loss of generality, we use the time cost with the Lenovo PHAB2 Pro by default, and the time cost in five stages with the Lenovo PHAB2 Pro smartphone is 26.8, 48.1, 8.6, 9.2, 30.4 ms, respectively. Thus the time cost of processing one original frame can be calculated by Eq.(24). Obviously, 123.1 ms is very large latency, thus more optimizations are expected for our video stabilization system to realize real time processing. T = (26.8 + 48.1 + 8.6 + 9.2 + 30.4)ms = 123.1ms. (24) 6.4.2 Multi-Thread Processing As shown in Fig. 10(a), according to Eq.(24), if our system works with a single thread, it takes 123.1 ms to process one frame. Thus, we introduce multi-thread optimization to reduce the time cost. We use three threads to capture frame, process frame and write frame in parallel. As shown in Fig. 10(b), the Capture Frame thread captures frames from the original video and writes the original frames to the buffer. The Process Frame thread first reads the original frame from the buffer, then performs feature extraction and tracking, motion estimation and smoothing, frame warping to obtain the stabilized frame, and finally writes the stabilized frame Capturing Original Frame Feature Extraction and Tracking Motion Estimation and Smoothing Frame Warping Writing Frame to Stabilized Video Single Thread (a) Single thread processing Capturing Original Frame Capture Frame Thread Process Frame Thread Write Frame Thread Original Frame Buffer Feature Extraction and Tracking Motion Estimation and Smoothing Frame Warping Writing Frame to Stabilized Video Stabilized Frame Buffer Write Write Read Read (b) Multi-thread processing Fig. 10. Multi-thread processing 1 1 1 1 1 2 2 2 3 3 3 4 4 4 4 4 Thread1 Thread2 Thread3 Thread4 Fig. 11. Multi-thread feature extraction to the buffer. The Write Frmae thread reads the stabilized frames from the buffer and writes them to video. By adopting multiple threads, the time cost of processing one frame can be calculated by T = max(26.8, 48.1 + 8.6 + 9.2, 30.4)ms = 65.9ms. (25) Compared to single-threaded processing, the time cost is reduced from 123.1 ms to 65.9 ms. However, the time latency is still higher than the waiting time between two frames, i.e., 33ms. According to Eq.(25), we can find that the time latency is determined by the time cost of Process Frame thread, where the processing time of feature extraction and tracking takes the largest proportion, i.e., 73%. Therefore, it is better to optimize the operators of feature extraction and tracking. As aforementioned in Section 5.3.3, feature extraction only requires local information, i.e., for each pixel, feature extractor only uses the pixels around it to classify whether it is a feature point. Therefore, we can split a frame into blocks without affecting the locality of feature extraction, and then use multiple threads to extract features for each block separately. Specifically, as shown in Fig. 11, without loss of generality, we first divide the frame into sixteen blocks, and for each block, we calculate the color variance inside the block to measure its salience. A larger color variance implies higher salience. Hence, according to the value of color variance, we then divide the blocks into three categories, i.e, low salience block, medium salience block and high salience block. For low, medium and high salience block, we extract 10, 20 and 40 features respectively. Finally, these sixteen blocks are distributed to four threads sequentially from left to right and then from top to bottom. In order to achieve load balancing, we let each thread