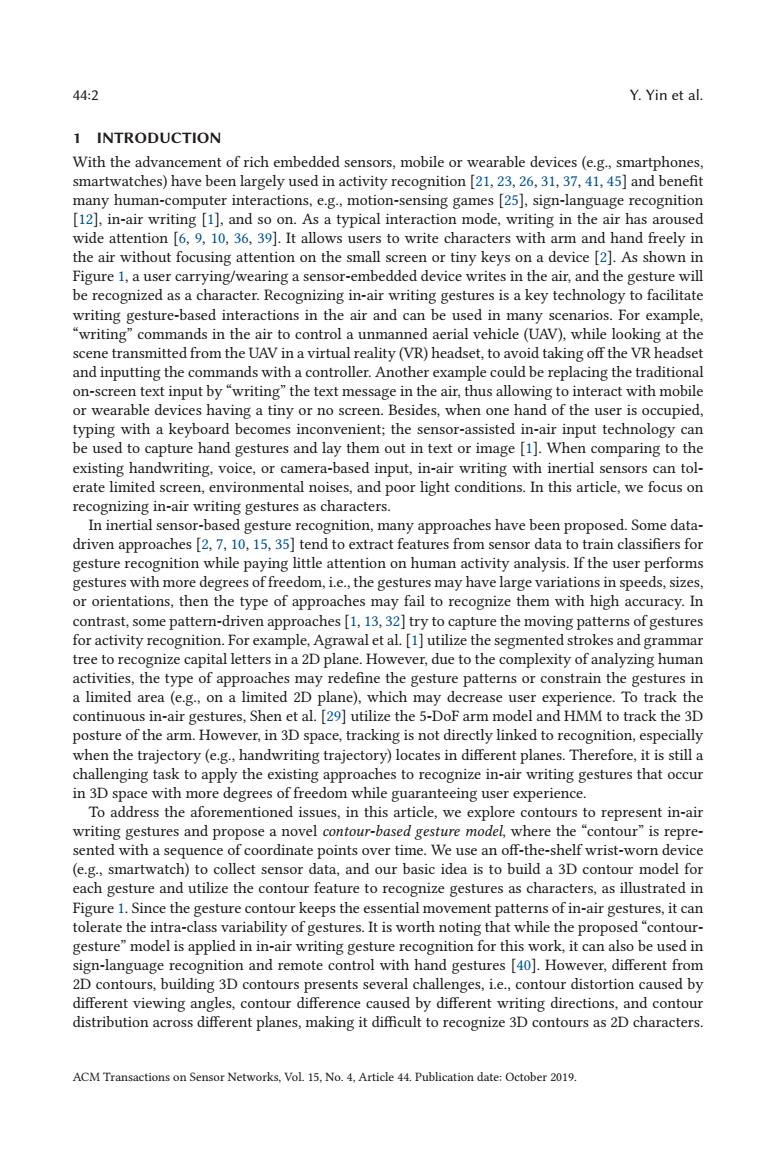

44:2 Y. Yin et al.

1 INTRODUCTION

With the advancement of rich embedded sensors, mobile and wearable devices (e.g., smartphones and smartwatches) have been widely used in activity recognition [21, 23, 26, 31, 37, 41, 45] and have benefited many human-computer interactions, e.g., motion-sensing games [25], sign-language recognition [12], and in-air writing [1]. As a typical interaction mode, writing in the air has attracted wide attention [6, 9, 10, 36, 39]. It allows users to write characters freely in the air with the arm and hand, without focusing on a small screen or tiny keys on a device [2]. As shown in Figure 1, a user carrying or wearing a sensor-embedded device writes in the air, and the gesture is recognized as a character. Recognizing in-air writing gestures is a key technology for writing-gesture-based interaction in the air and can be used in many scenarios. For example, a user watching the scene transmitted from an unmanned aerial vehicle (UAV) in a virtual reality (VR) headset can “write” commands in the air to control the UAV, instead of taking off the headset to enter the commands with a controller. Another example is replacing traditional on-screen text input by “writing” a text message in the air, which allows users to interact with mobile or wearable devices that have a tiny screen or no screen at all. In addition, when one of the user's hands is occupied, typing on a keyboard becomes inconvenient; sensor-assisted in-air input can capture hand gestures and render them as text or images [1]. Compared with existing handwriting, voice, or camera-based input, in-air writing with inertial sensors tolerates limited screen size, environmental noise, and poor lighting conditions. In this article, we focus on recognizing in-air writing gestures as characters.
In inertial sensor-based gesture recognition, many approaches have been proposed. Some data-driven approaches [2, 7, 10, 15, 35] extract features from sensor data to train classifiers for gesture recognition while paying little attention to the analysis of human activity. If users perform gestures with more degrees of freedom, i.e., with large variations in speed, size, or orientation, these approaches may fail to recognize the gestures accurately. In contrast, some pattern-driven approaches [1, 13, 32] try to capture the movement patterns of gestures for activity recognition. For example, Agrawal et al. [1] use segmented strokes and a grammar tree to recognize capital letters written in a 2D plane. However, because human activities are complex to analyze, these approaches may redefine the gesture patterns or constrain the gestures to a limited area (e.g., a limited 2D plane), which may degrade the user experience. To track continuous in-air gestures, Shen et al. [29] use a 5-DoF arm model and a hidden Markov model (HMM) to track the 3D posture of the arm. However, in 3D space, tracking is not directly linked to recognition, especially when the trajectory (e.g., a handwriting trajectory) lies in different planes. Therefore, it remains challenging to apply existing approaches to recognizing in-air writing gestures that occur in 3D space with more degrees of freedom while guaranteeing a good user experience.
To address these issues, in this article we explore contours to represent in-air writing gestures and propose a novel contour-based gesture model, where a “contour” is represented as a sequence of coordinate points over time. We use an off-the-shelf wrist-worn device (e.g., a smartwatch) to collect sensor data. Our basic idea is to build a 3D contour model for each gesture and use contour features to recognize gestures as characters, as illustrated in Figure 1. Since the gesture contour preserves the essential movement patterns of in-air gestures, it can tolerate the intra-class variability of gestures. Note that although the proposed “contour-gesture” model is applied to in-air writing gesture recognition in this work, it can also be used in sign-language recognition and remote control with hand gestures [40]. However, unlike 2D contours, 3D contours pose several challenges: contour distortion caused by different viewing angles, contour differences caused by different writing directions, and contours distributed across different planes. These challenges make it difficult to recognize 3D contours as 2D characters.

ACM Transactions on Sensor Networks, Vol. 15, No. 4, Article 44. Publication date: October 2019.