正在加载图片...

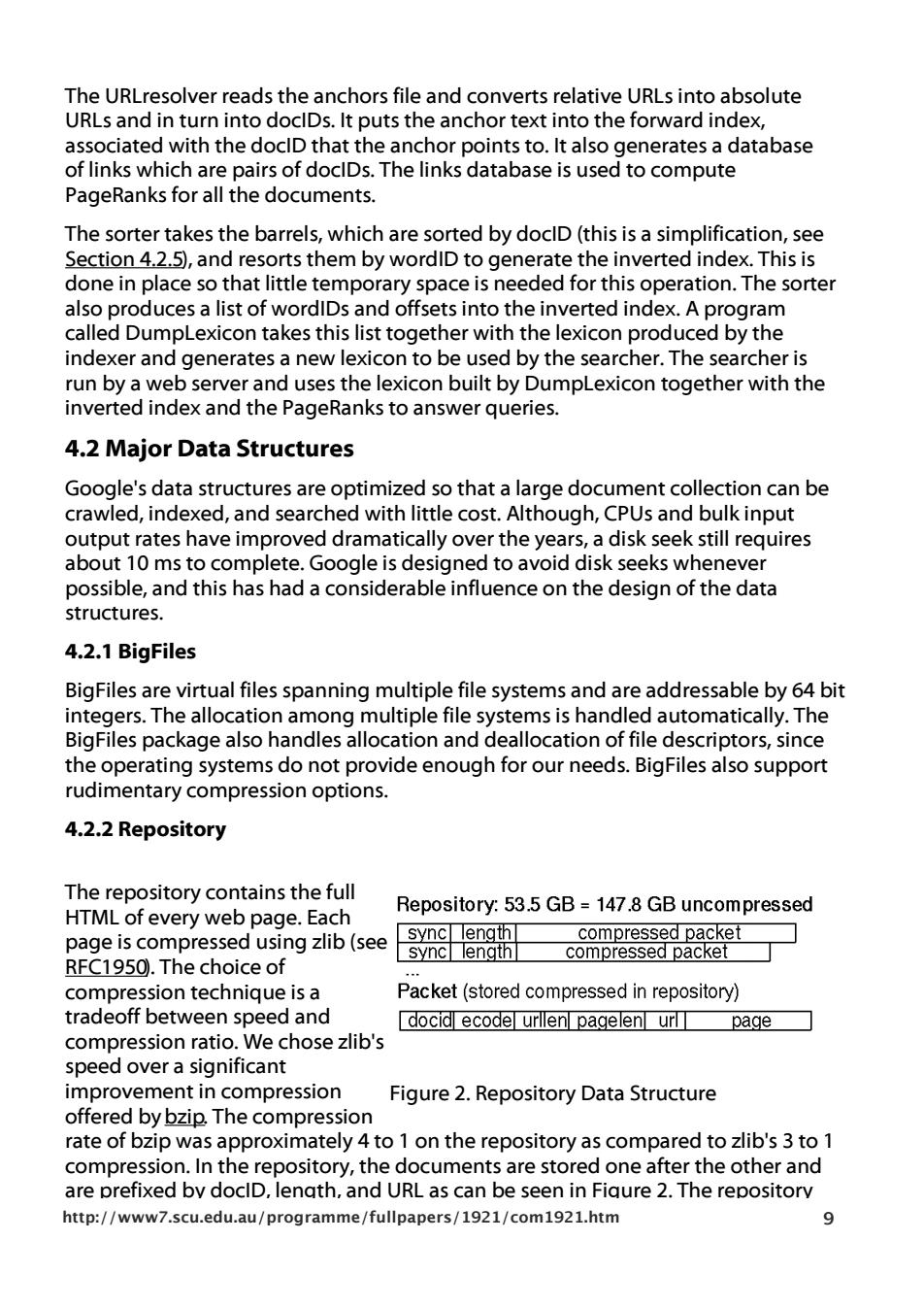

The URLresolver reads the anchors file and converts relative URLs into absolute URLs and in turn into doclDs.It puts the anchor text into the forward index, associated with the doclD that the anchor points to.It also generates a database of links which are pairs of doclDs.The links database is used to compute PageRanks for all the documents. The sorter takes the barrels,which are sorted by doclD(this is a simplification,see Section 4.2.5,and resorts them by wordID to generate the inverted index.This is done in place so that little temporary space is needed for this operation.The sorter also produces a list of wordIDs and offsets into the inverted index.A program called DumpLexicon takes this list together with the lexicon produced by the indexer and generates a new lexicon to be used by the searcher.The searcher is run by a web server and uses the lexicon built by DumpLexicon together with the inverted index and the PageRanks to answer queries. 4.2 Major Data Structures Google's data structures are optimized so that a large document collection can be crawled,indexed,and searched with little cost.Although,CPUs and bulk input output rates have improved dramatically over the years,a disk seek still requires about 10 ms to complete.Google is designed to avoid disk seeks whenever possible,and this has had a considerable influence on the design of the data structures. 4.2.1 BigFiles BigFiles are virtual files spanning multiple file systems and are addressable by 64 bit integers.The allocation among multiple file systems is handled automatically.The BigFiles package also handles allocation and deallocation of file descriptors,since the operating systems do not provide enough for our needs.BigFiles also support rudimentary compression options. 4.2.2 Repository The repository contains the full Repository:53.5 GB=147.8 GB uncompressed HTML of every web page.Each page is compressed using zlib(see sync length compressed packet sync length compressed packet RFC1950.The choice of compression technique is a Packet(stored compressed in repository) tradeoff between speed and docid ecode urllen pagelen url page compression ratio.We chose zlib's speed over a significant improvement in compression Figure 2.Repository Data Structure offered by bzip.The compression rate of bzip was approximately 4 to 1 on the repository as compared to zlib's 3 to 1 compression.In the repository,the documents are stored one after the other and are prefixed by doclD,lenath,and URL as can be seen in Fiqure 2.The repository http://www7.scu.edu.au/programme/fullpapers/1921/com1921.htm 9The URLresolver reads the anchors file and converts relative URLs into absolute URLs and in turn into docIDs. It puts the anchor text into the forward index, associated with the docID that the anchor points to. It also generates a database of links which are pairs of docIDs. The links database is used to compute PageRanks for all the documents. The sorter takes the barrels, which are sorted by docID (this is a simplification, see Section 4.2.5), and resorts them by wordID to generate the inverted index. This is done in place so that little temporary space is needed for this operation. The sorter also produces a list of wordIDs and offsets into the inverted index. A program called DumpLexicon takes this list together with the lexicon produced by the indexer and generates a new lexicon to be used by the searcher. The searcher is run by a web server and uses the lexicon built by DumpLexicon together with the inverted index and the PageRanks to answer queries. 4.2 Major Data Structures Google's data structures are optimized so that a large document collection can be crawled, indexed, and searched with little cost. Although, CPUs and bulk input output rates have improved dramatically over the years, a disk seek still requires about 10 ms to complete. Google is designed to avoid disk seeks whenever possible, and this has had a considerable influence on the design of the data structures. 4.2.1 BigFiles BigFiles are virtual files spanning multiple file systems and are addressable by 64 bit integers. The allocation among multiple file systems is handled automatically. The BigFiles package also handles allocation and deallocation of file descriptors, since the operating systems do not provide enough for our needs. BigFiles also support rudimentary compression options. 4.2.2 Repository Figure 2. Repository Data Structure The repository contains the full HTML of every web page. Each page is compressed using zlib (see RFC1950). The choice of compression technique is a tradeoff between speed and compression ratio. We chose zlib's speed over a significant improvement in compression offered by bzip. The compression rate of bzip was approximately 4 to 1 on the repository as compared to zlib's 3 to 1 compression. In the repository, the documents are stored one after the other and are prefixed by docID, length, and URL as can be seen in Figure 2. The repository requires no other data structures to be used in order to access it. This helps with data consistency and makes development much easier; we can rebuild all the other data structures from only the repository and a file which lists crawler errors. http://www7.scu.edu.au/programme/fullpapers/1921/com1921.htm 9