正在加载图片...

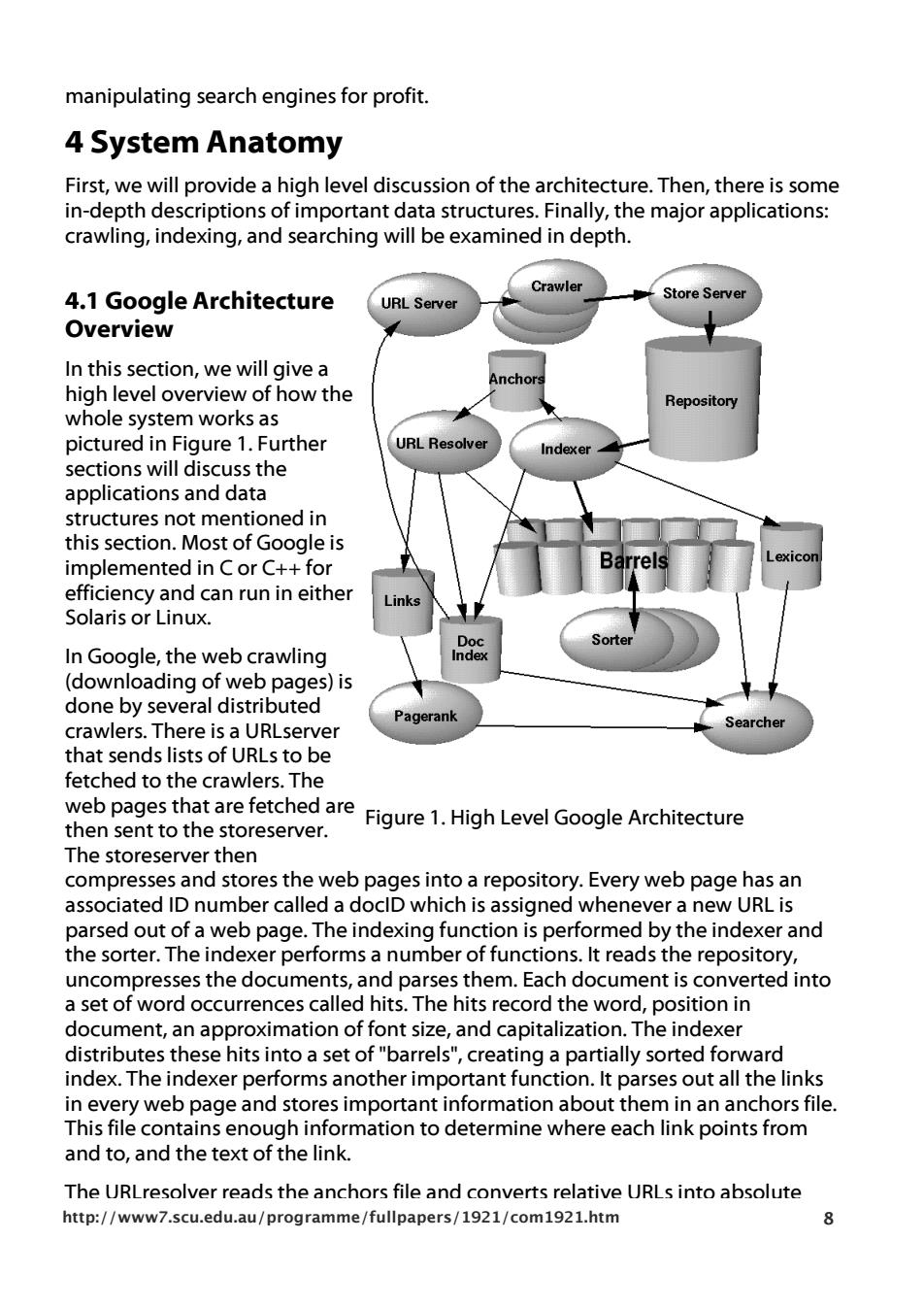

manipulating search engines for profit. 4 System Anatomy First,we will provide a high level discussion of the architecture.Then,there is some in-depth descriptions of important data structures.Finally,the major applications: crawling,indexing,and searching will be examined in depth. Crawler 4.1 Google Architecture URL Server Store Server Overview In this section,we will give a high level overview of how the Repository whole system works as pictured in Figure 1.Further URL Resolver Indexer sections will discuss the applications and data structures not mentioned in this section.Most of Google is arrel Lexicon implemented in C or C++for efficiency and can run in either Links Solaris or Linux. Doc Sorter In Google,the web crawling Index (downloading of web pages)is done by several distributed Pagerank crawlers.There is a URLserver Searcher that sends lists of URLs to be fetched to the crawlers.The web pages that are fetched are Figure 1.High Level Googe Architecture then sent to the storeserver. The storeserver then compresses and stores the web pages into a repository.Every web page has an associated ID number called a doclD which is assigned whenever a new URL is parsed out of a web page.The indexing function is performed by the indexer and the sorter.The indexer performs a number of functions.It reads the repository, uncompresses the documents,and parses them.Each document is converted into a set of word occurrences called hits.The hits record the word,position in document,an approximation of font size,and capitalization.The indexer distributes these hits into a set of "barrels",creating a partially sorted forward index.The indexer performs another important function.It parses out all the links in every web page and stores important information about them in an anchors file. This file contains enough information to determine where each link points from and to,and the text of the link. The URLresolver reads the anchors file and converts relative URLs into absolute http://www7.scu.edu.au/programme/fullpapers/1921/com1921.htm 8Another big difference between the web and traditional well controlled collections is that there is virtually no control over what people can put on the web. Couple this flexibility to publish anything with the enormous influence of search engines to route traffic and companies which deliberately manipulating search engines for profit become a serious problem. This problem that has not been addressed in traditional closed information retrieval systems. Also, it is interesting to note that metadata efforts have largely failed with web search engines, because any text on the page which is not directly represented to the user is abused to manipulate search engines. There are even numerous companies which specialize in manipulating search engines for profit. 4 System Anatomy First, we will provide a high level discussion of the architecture. Then, there is some in-depth descriptions of important data structures. Finally, the major applications: crawling, indexing, and searching will be examined in depth. Figure 1. High Level Google Architecture 4.1 Google Architecture Overview In this section, we will give a high level overview of how the whole system works as pictured in Figure 1. Further sections will discuss the applications and data structures not mentioned in this section. Most of Google is implemented in C or C++ for efficiency and can run in either Solaris or Linux. In Google, the web crawling (downloading of web pages) is done by several distributed crawlers. There is a URLserver that sends lists of URLs to be fetched to the crawlers. The web pages that are fetched are then sent to the storeserver. The storeserver then compresses and stores the web pages into a repository. Every web page has an associated ID number called a docID which is assigned whenever a new URL is parsed out of a web page. The indexing function is performed by the indexer and the sorter. The indexer performs a number of functions. It reads the repository, uncompresses the documents, and parses them. Each document is converted into a set of word occurrences called hits. The hits record the word, position in document, an approximation of font size, and capitalization. The indexer distributes these hits into a set of "barrels", creating a partially sorted forward index. The indexer performs another important function. It parses out all the links in every web page and stores important information about them in an anchors file. This file contains enough information to determine where each link points from and to, and the text of the link. The URLresolver reads the anchors file and converts relative URLs into absolute URLs and in turn into docIDs. It puts the anchor text into the forward index, associated with the docID that the anchor points to. It also generates a database of links which are pairs of docIDs. The links database is used to compute PageRanks for all the documents. http://www7.scu.edu.au/programme/fullpapers/1921/com1921.htm 8