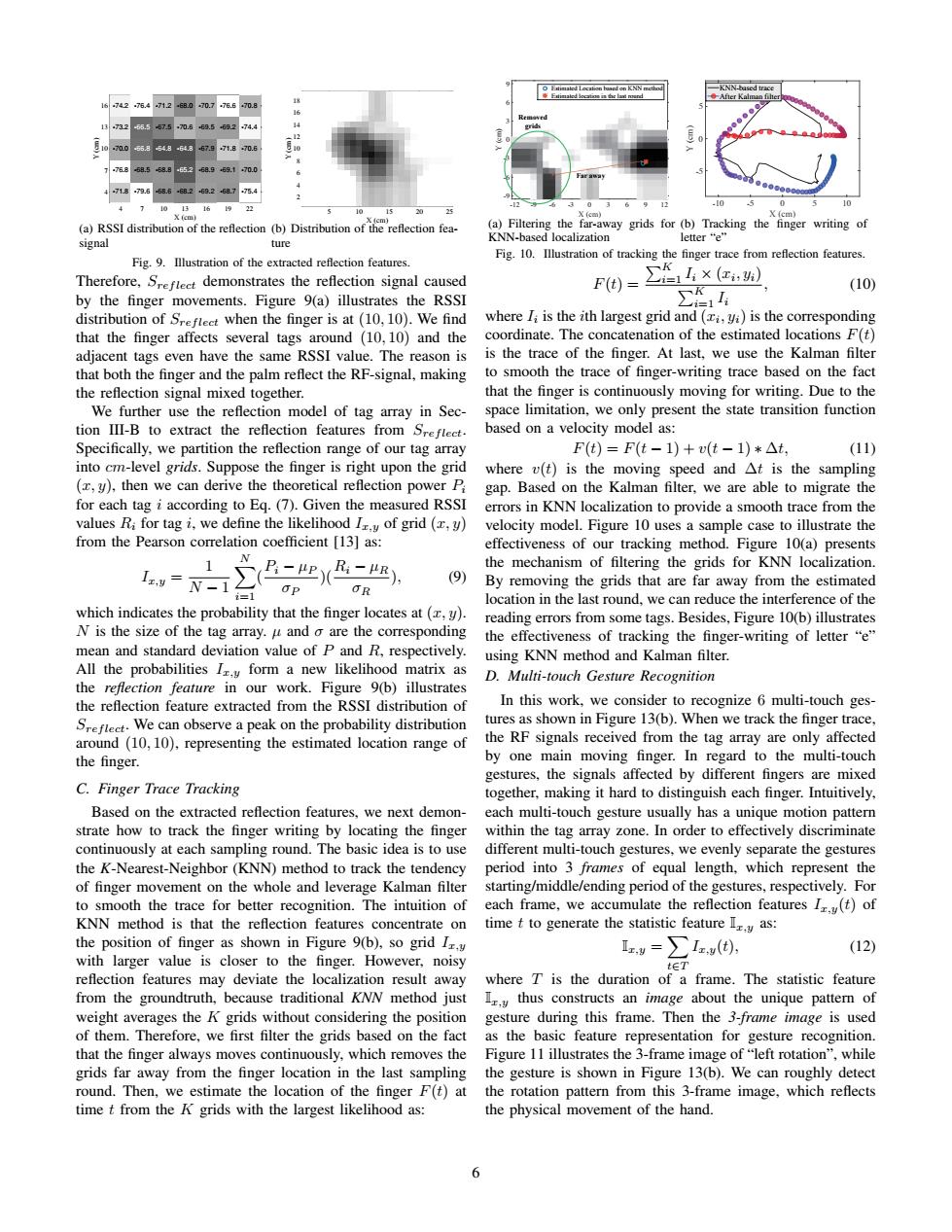

Fig. 9. Illustration of the extracted reflection features: (a) RSSI distribution of the reflection signal; (b) distribution of the reflection feature. Both panels are plotted over X (cm) and Y (cm).

Therefore, $S_{reflect}$ represents the reflection signal caused by the finger movements. Figure 9(a) illustrates the RSSI distribution of $S_{reflect}$ when the finger is at (10, 10). We find that the finger affects several tags around (10, 10), and some adjacent tags even have the same RSSI value. The reason is that both the finger and the palm reflect the RF signal, so their reflections are mixed together.

We further use the reflection model of the tag array in Section III-B to extract the reflection features from $S_{reflect}$. Specifically, we partition the reflection range of our tag array into cm-level grids.
Suppose the finger is right above the grid $(x, y)$; then we can derive the theoretical reflection power $P_i$ for each tag $i$ according to Eq. (7). Given the measured RSSI value $R_i$ for tag $i$, we define the likelihood $I_{x,y}$ of grid $(x, y)$ as the Pearson correlation coefficient [13]:

$$I_{x,y} = \frac{1}{N-1} \sum_{i=1}^{N} \left(\frac{P_i - \mu_P}{\sigma_P}\right)\left(\frac{R_i - \mu_R}{\sigma_R}\right), \tag{9}$$

which indicates how likely the finger is to be located at $(x, y)$. Here $N$ is the size of the tag array, and $\mu_P$, $\sigma_P$ and $\mu_R$, $\sigma_R$ are the means and standard deviations of $P$ and $R$, respectively. All the likelihoods $I_{x,y}$ form a likelihood matrix, which serves as the reflection feature in our work. Figure 9(b) illustrates the reflection feature extracted from the RSSI distribution of $S_{reflect}$. We can observe a peak in the distribution around (10, 10), which represents the estimated location range of the finger.

C. Finger Trace Tracking

Based on the extracted reflection features, we next demonstrate how to track the finger writing by locating the finger continuously at each sampling round. The basic idea is to use the K-Nearest-Neighbor (KNN) method to track the overall tendency of the finger movement and to leverage a Kalman filter to smooth the trace for better recognition. The intuition behind the KNN method is that the reflection features concentrate on the position of the finger, as shown in Figure 9(b), so a grid with a larger $I_{x,y}$ is closer to the finger. However, noisy reflection features may pull the localization result away from the ground truth, because the traditional KNN method simply takes a weighted average of the K grids without considering their positions. Therefore, we first filter the grids based on the fact that the finger always moves continuously, which removes the grids far away from the finger location in the last sampling round.
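The likelihood of Eq. (9) and the continuity filter described above can be sketched in a few lines of NumPy. This is a minimal illustration under stated assumptions, not the authors' implementation: the function names `likelihood_matrix` and `filter_far_grids` and the threshold `max_jump_cm` are hypothetical, the theoretical powers of Eq. (7) are assumed to be precomputed and passed in, and masking removed grids with negative infinity is one possible choice.

```python
import numpy as np


def likelihood_matrix(theoretical_power, measured_rssi):
    """Pearson correlation of Eq. (9) between P and R for every grid.

    theoretical_power: array of shape (H, W, N); entry [y, x, i] is the
        theoretical reflection power P_i of tag i when the finger is above
        grid (x, y). It is assumed to come from Eq. (7), which is not
        reproduced here.
    measured_rssi: array of shape (N,) holding the measured values R_i.
    Returns an (H, W) matrix of likelihoods I_{x,y}.
    """
    p = np.asarray(theoretical_power, dtype=float)
    r = np.asarray(measured_rssi, dtype=float)
    n = r.shape[0]
    # Standardize with the sample standard deviation (ddof=1) so that the
    # 1 / (N - 1) factor of Eq. (9) yields the Pearson coefficient.
    p_z = (p - p.mean(axis=-1, keepdims=True)) / p.std(axis=-1, ddof=1, keepdims=True)
    r_z = (r - r.mean()) / r.std(ddof=1)
    return (p_z * r_z).sum(axis=-1) / (n - 1)


def filter_far_grids(likelihood, grid_x, grid_y, last_location, max_jump_cm):
    """Mask grids farther than max_jump_cm from the previous estimate.

    grid_x, grid_y: (H, W) coordinate arrays in cm (e.g. from np.meshgrid).
    last_location: (x, y) estimated in the last sampling round.
    max_jump_cm: hypothetical threshold, not a value given in the text.
    Removed grids are set to -inf so a later top-K selection skips them.
    """
    dist = np.hypot(grid_x - last_location[0], grid_y - last_location[1])
    return np.where(dist <= max_jump_cm, likelihood, -np.inf)
```

A caller would evaluate `likelihood_matrix` once per sampling round and pass the result, together with the previous location estimate, to `filter_far_grids` before selecting the K grids with the largest likelihood.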
Then, we estimate the location of the finger $F(t)$ at time $t$ from the K grids with the largest likelihood as:

$$F(t) = \frac{\sum_{i=1}^{K} I_i \times (x_i, y_i)}{\sum_{i=1}^{K} I_i}, \tag{10}$$

where $I_i$ is the $i$th largest likelihood value and $(x_i, y_i)$ is the coordinate of the corresponding grid. The concatenation of the estimated locations $F(t)$ is the trace of the finger. Finally, we use the Kalman filter to smooth the finger-writing trace, based on the fact that the finger moves continuously while writing. Due to the space limitation, we only present the state transition function based on a velocity model:

$$F(t) = F(t-1) + v(t-1) \cdot \Delta t, \tag{11}$$

where $v(t)$ is the moving speed and $\Delta t$ is the sampling interval. With the Kalman filter, we are able to mitigate the errors in KNN localization and obtain a smooth trace from the velocity model.

Figure 10 uses a sample case to illustrate the effectiveness of our tracking method. Figure 10(a) presents the mechanism of filtering the grids for KNN localization. By removing the grids that are far away from the estimated location in the last round, we can reduce the interference of the reading errors from some tags. Besides, Figure 10(b) illustrates the effectiveness of tracking the finger writing of the letter "e" using the KNN method and the Kalman filter.

Fig. 10. Illustration of tracking the finger trace from reflection features: (a) filtering the far-away grids for KNN-based localization (legend: estimated location based on KNN method, estimated location in the last round, removed grids); (b) tracking the finger writing of letter "e" (legend: KNN-based trace, after Kalman filter). Both panels are plotted over X (cm) and Y (cm).

D. Multi-touch Gesture Recognition

In this work, we consider recognizing 6 multi-touch gestures, as shown in Figure 13(b). When we track the finger trace, the RF signals received from the tag array are affected by only one main moving finger.
For multi-touch gestures, the signals affected by different fingers are mixed together, making it hard to distinguish each finger. Intuitively, each multi-touch gesture usually has a unique motion pattern within the tag array zone. In order to effectively discriminate between different multi-touch gestures, we evenly divide the gesture period into 3 frames of equal length, which represent the starting, middle, and ending periods of the gesture, respectively. For each frame, we accumulate the reflection features $I_{x,y}(t)$ at each time $t$ to generate the statistic feature $I_{x,y}$ as:

$$I_{x,y} = \sum_{t \in T} I_{x,y}(t), \tag{12}$$

where $T$ is the duration of a frame. The statistic feature $I_{x,y}$ thus forms an image of the unique pattern of the gesture during this frame. The 3-frame image is then used as the basic feature representation for gesture recognition. Figure 11 illustrates the 3-frame image of "left rotation"; the gesture itself is shown in Figure 13(b). We can roughly detect the rotation pattern from this 3-frame image, which reflects the physical movement of the hand.
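The frame accumulation of Eq. (12) can be sketched as follows. This is an illustrative sketch rather than the authors' code: the function name `three_frame_image` is hypothetical, the per-round likelihood matrices are assumed to be stacked into one array, and `np.array_split` is used so that a gesture whose length is not divisible by 3 still yields frames of nearly equal length.

```python
import numpy as np


def three_frame_image(likelihood_seq, num_frames=3):
    """Accumulate per-round reflection features into frame images (Eq. 12).

    likelihood_seq: array of shape (T, H, W); entry [t] is the likelihood
        matrix I_{x,y}(t) of sampling round t within one gesture.
    num_frames: number of frames; the text uses 3 (start, middle, end).
    Returns an array of shape (num_frames, H, W), one summed image per frame.
    """
    seq = np.asarray(likelihood_seq, dtype=float)
    if seq.shape[0] < num_frames:
        raise ValueError("need at least one sampling round per frame")
    # Split the gesture period along the time axis into consecutive frames.
    frames = np.array_split(seq, num_frames, axis=0)
    # Sum the likelihood matrices inside each frame, as in Eq. (12).
    return np.stack([frame.sum(axis=0) for frame in frames])
```

The resulting stack can be passed to any image-style classifier; the text does not fix one at this point, so none is assumed here.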