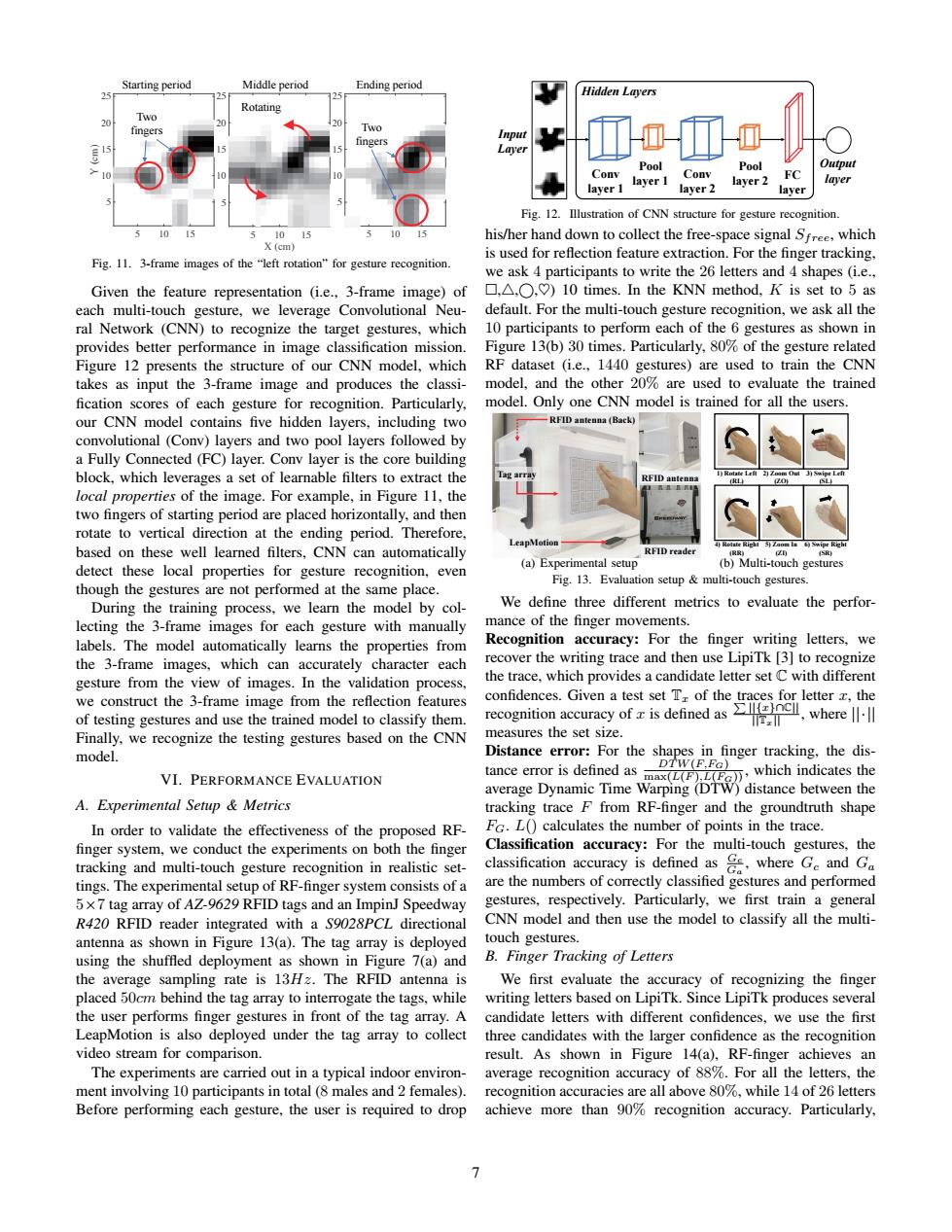

Fig. 11. 3-frame images of the “left rotation” for gesture recognition. Panels: starting period (two fingers), middle period (rotating), ending period (two fingers); axes: X (cm), Y (cm).

Given the feature representation (i.e., the 3-frame image) of each multi-touch gesture, we leverage a Convolutional Neural Network (CNN) to recognize the target gestures, which provides better performance in image classification tasks. Figure 12 presents the structure of our CNN model, which takes the 3-frame image as input and produces the classification scores of each gesture for recognition. In particular, our CNN model contains five hidden layers: two convolutional (Conv) layers and two pool layers, followed by a Fully Connected (FC) layer. The Conv layer is the core building block, which leverages a set of learnable filters to extract the local properties of the image. For example, in Figure 11, the two fingers are placed horizontally in the starting period and then rotate to the vertical direction by the ending period. Therefore, based on these well-learned filters, the CNN can automatically detect these local properties for gesture recognition, even though the gestures are not performed at the same place.

During the training process, we learn the model by collecting the 3-frame images for each gesture with manual labels. The model automatically learns the properties from the 3-frame images, which can accurately characterize each gesture from the view of images. In the validation process, we construct the 3-frame image from the reflection features of the testing gestures and use the trained model to classify them. Finally, we recognize the testing gestures based on the CNN model.

VI. PERFORMANCE EVALUATION

A. Experimental Setup & Metrics

In order to validate the effectiveness of the proposed RF-finger system, we conduct experiments on both finger tracking and multi-touch gesture recognition in realistic settings. The experimental setup of the RF-finger system consists of a 5×7 tag array of AZ-9629 RFID tags and an Impinj Speedway R420 RFID reader integrated with an S9028PCL directional antenna, as shown in Figure 13(a).
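
As a rough aid for the CNN of Figure 12 that is evaluated in this section, the sketch below traces tensor shapes through a Conv-Pool-Conv-Pool-FC-Output stack. The paper does not state the input resolution, kernel sizes, filter counts, or FC width, so the 28×28 input, 3×3 kernels, 8 and 16 filters, 64 FC units, and the choice to treat the three frames as three input channels are all illustrative assumptions; only the layer order and the 6 gesture classes come from the text.

```python
# Shape trace for a Conv-Pool-Conv-Pool-FC-Output stack.
# All sizes (28x28 input, 3x3 kernels, 8/16 filters, 64 FC units) are
# illustrative assumptions; the source does not specify them.


def conv_out(size, kernel, stride=1, padding=0):
    """Output size of a convolution along one spatial axis."""
    return (size + 2 * padding - kernel) // stride + 1


def pool_out(size, window):
    """Output size of non-overlapping pooling along one spatial axis."""
    return size // window


def trace_shapes(channels=3, height=28, width=28, num_classes=6):
    """Return ([(layer name, output shape)], values fed to the FC layer)."""
    shapes = [("input", (channels, height, width))]
    for index, filters in ((1, 8), (2, 16)):
        # Conv layer: 3x3 kernel, stride 1, no padding (assumed).
        height, width = conv_out(height, 3), conv_out(width, 3)
        channels = filters
        shapes.append((f"conv{index}", (channels, height, width)))
        # Pool layer: 2x2 window (assumed); channel count is unchanged.
        height, width = pool_out(height, 2), pool_out(width, 2)
        shapes.append((f"pool{index}", (channels, height, width)))
    flattened = channels * height * width
    shapes.append(("fc", (64,)))               # hidden FC layer (assumed width)
    shapes.append(("output", (num_classes,)))  # one score per gesture
    return shapes, flattened


if __name__ == "__main__":
    layer_shapes, fc_inputs = trace_shapes()
    for name, shape in layer_shapes:
        print(f"{name:>6}: {shape}")
    print("values fed to the FC layer:", fc_inputs)
```

With these assumed sizes, the second pool layer outputs 16 feature maps of 5×5, so 400 values feed the FC layer, and the output layer yields one score per gesture.
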
The tag array is deployed using the shuffled deployment shown in Figure 7(a), and the average sampling rate is 13 Hz. The RFID antenna is placed 50 cm behind the tag array to interrogate the tags, while the user performs finger gestures in front of the tag array. A LeapMotion is also deployed under the tag array to collect a video stream for comparison.

The experiments are carried out in a typical indoor environment involving 10 participants in total (8 males and 2 females). Before performing each gesture, the user is required to drop his/her hand down to collect the free-space signal $S_{free}$, which is used for reflection feature extraction. For the finger tracking, we ask 4 participants to write the 26 letters and 4 shapes (i.e., □, △, ○, ♥) 10 times. In the KNN method, K is set to 5 by default. For the multi-touch gesture recognition, we ask all 10 participants to perform each of the 6 gestures shown in Figure 13(b) 30 times. In particular, 80% of the gesture-related RF dataset (i.e., 1440 gestures) is used to train the CNN model, and the other 20% is used to evaluate the trained model. Only one CNN model is trained for all the users.

Fig. 12. Illustration of CNN structure for gesture recognition. Layers: Input layer; hidden layers (Conv layer 1, Pool layer 1, Conv layer 2, Pool layer 2, FC layer); Output layer.

Fig. 13. Evaluation setup & multi-touch gestures. (a) Experimental setup: tag array, LeapMotion, RFID antenna (back), RFID reader. (b) Multi-touch gestures: 1) Rotate Left (RL), 2) Zoom Out (ZO), 3) Swipe Left (SL), 4) Rotate Right (RR), 5) Zoom In (ZI), 6) Swipe Right (SR).

We define three different metrics to evaluate the performance of the finger movements.

Recognition accuracy: For the finger writing letters, we recover the writing trace and then use LipiTk [3] to recognize the trace, which provides a candidate letter set C with different confidences.
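
To make the candidate set concrete, the sketch below assumes a recognizer that returns (letter, confidence) pairs for each trace. This output format, the function names, and the sample data are hypothetical and are not LipiTk's actual interface. It counts how often the true letter lands among the k most confident candidates, with k = 3 mirroring the three candidates kept in Section VI-B.

```python
def top_k_candidates(scored_letters, k=3):
    """Keep the k letters with the highest confidence as the candidate set."""
    ranked = sorted(scored_letters, key=lambda pair: pair[1], reverse=True)
    return {letter for letter, _ in ranked[:k]}


def hit_rate(true_letter, recognizer_outputs, k=3):
    """Fraction of traces whose candidate set contains true_letter."""
    if not recognizer_outputs:
        raise ValueError("need at least one trace")
    hits = sum(
        1
        for scored_letters in recognizer_outputs
        if true_letter in top_k_candidates(scored_letters, k)
    )
    return hits / len(recognizer_outputs)


# Hypothetical recognizer outputs for four traces of the letter "a".
outputs_for_a = [
    [("a", 0.7), ("o", 0.2), ("d", 0.1)],
    [("o", 0.5), ("a", 0.3), ("q", 0.2)],
    [("u", 0.6), ("v", 0.3), ("n", 0.1)],
    [("d", 0.4), ("q", 0.3), ("o", 0.2), ("a", 0.1)],
]

if __name__ == "__main__":
    print(hit_rate("a", outputs_for_a))
```

In this made-up data the true letter is among the top three candidates for two of the four traces, so the hit rate is 0.5.
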
Given a test set $T_x$ of the traces for letter $x$, the recognition accuracy of $x$ is defined as $\frac{\sum \lVert \{x\} \cap C \rVert}{\lVert T_x \rVert}$, where $\lVert \cdot \rVert$ measures the set size.

Distance error: For the shapes in finger tracking, the distance error is defined as $\frac{DTW(F, F_G)}{\max(L(F), L(F_G))}$, which indicates the average Dynamic Time Warping (DTW) distance between the tracking trace $F$ from RF-finger and the ground-truth shape $F_G$. $L(\cdot)$ calculates the number of points in the trace.

Classification accuracy: For the multi-touch gestures, the classification accuracy is defined as $\frac{G_c}{G_a}$, where $G_c$ and $G_a$ are the numbers of correctly classified gestures and performed gestures, respectively. In particular, we first train a general CNN model and then use the model to classify all the multi-touch gestures.

B. Finger Tracking of Letters

We first evaluate the accuracy of recognizing the finger writing letters based on LipiTk. Since LipiTk produces several candidate letters with different confidences, we use the three candidates with the highest confidence as the recognition result. As shown in Figure 14(a), RF-finger achieves an average recognition accuracy of 88%. For all the letters, the recognition accuracies are above 80%, while 14 of the 26 letters achieve more than 90% recognition accuracy. Particularly,