正在加载图片...

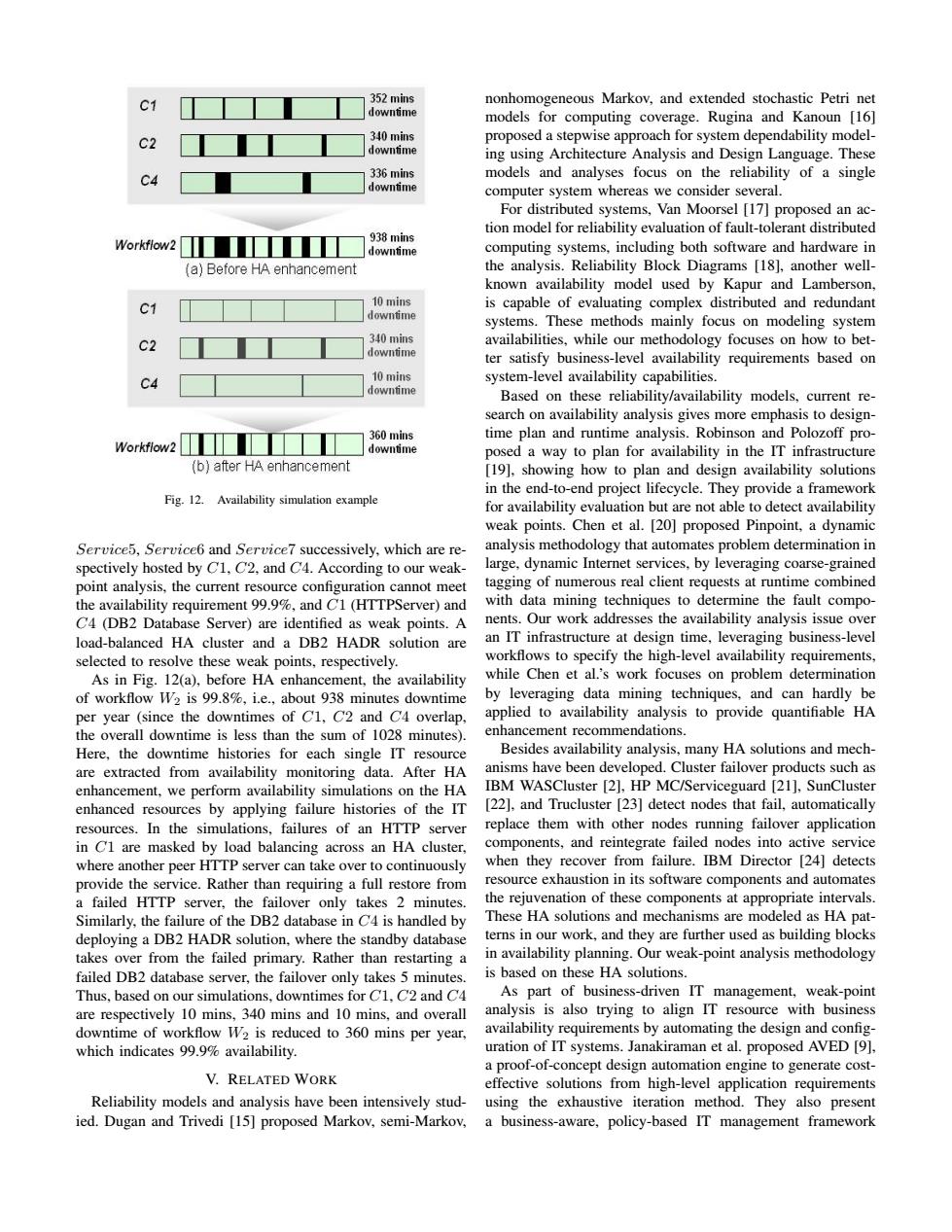

352 mins nonhomogeneous Markov,and extended stochastic Petri net downtime models for computing coverage.Rugina and Kanoun [16] 340 mins proposed a stepwise approach for system dependability model- downtime ing using Architecture Analysis and Design Language.These 336 mins models and analyses focus on the reliability of a single downtime computer system whereas we consider several. For distributed systems,Van Moorsel [17]proposed an ac- tion model for reliability evaluation of fault-tolerant distributed Workflow2 938 mins downtime computing systems,including both software and hardware in (a)Before HA enhancement the analysis.Reliability Block Diagrams [18],another well- known availability model used by Kapur and Lamberson, 10 mins is capable of evaluating complex distributed and redundant downtime systems.These methods mainly focus on modeling system C2 340 mins availabilities,while our methodology focuses on how to bet- downtime ter satisfy business-level availability requirements based on 10 mins system-level availability capabilities. downtime Based on these reliability/availability models.current re- search on availability analysis gives more emphasis to design- 360 mins time plan and runtime analysis.Robinson and Polozoff pro- Workflow2☐ downtime posed a way to plan for availability in the IT infrastructure (b)after HA enhancement [19],showing how to plan and design availability solutions in the end-to-end project lifecycle.They provide a framework Fig.12.Availability simulation example for availability evaluation but are not able to detect availability weak points.Chen et al.[20]proposed Pinpoint,a dynamic Service5,Service6 and Service7 successively,which are re- analysis methodology that automates problem determination in spectively hosted by C1,C2,and C4.According to our weak- large,dynamic Internet services,by leveraging coarse-grained point analysis,the current resource configuration cannot meet tagging of numerous real client requests at runtime combined the availability requirement 99.9%,and C1 (HTTPServer)and with data mining techniques to determine the fault compo- C4 (DB2 Database Server)are identified as weak points.A nents.Our work addresses the availability analysis issue over load-balanced HA cluster and a DB2 HADR solution are an IT infrastructure at design time,leveraging business-level selected to resolve these weak points,respectively. workflows to specify the high-level availability requirements, As in Fig.12(a),before HA enhancement,the availability while Chen et al.'s work focuses on problem determination of workflow W2 is 99.8%,i.e.,about 938 minutes downtime by leveraging data mining techniques,and can hardly be per year (since the downtimes of C1,C2 and C4 overlap, applied to availability analysis to provide quantifiable HA the overall downtime is less than the sum of 1028 minutes). enhancement recommendations. Here,the downtime histories for each single IT resource Besides availability analysis,many HA solutions and mech- are extracted from availability monitoring data.After HA anisms have been developed.Cluster failover products such as enhancement,we perform availability simulations on the HA IBM WASCluster [2],HP MC/Serviceguard [21],SunCluster enhanced resources by applying failure histories of the IT [22],and Trucluster [23]detect nodes that fail,automatically resources.In the simulations,failures of an HTTP server replace them with other nodes running failover application in Cl are masked by load balancing across an HA cluster, components,and reintegrate failed nodes into active service where another peer HTTP server can take over to continuously when they recover from failure.IBM Director [24]detects provide the service.Rather than requiring a full restore from resource exhaustion in its software components and automates a failed HTTP server,the failover only takes 2 minutes. the rejuvenation of these components at appropriate intervals. Similarly,the failure of the DB2 database in C4 is handled by These HA solutions and mechanisms are modeled as HA pat- deploying a DB2 HADR solution,where the standby database terns in our work,and they are further used as building blocks takes over from the failed primary.Rather than restarting a in availability planning.Our weak-point analysis methodology failed DB2 database server,the failover only takes 5 minutes. is based on these HA solutions. Thus,based on our simulations,downtimes for C1,C2 and C4 As part of business-driven IT management,weak-point are respectively 10 mins,340 mins and 10 mins,and overall analysis is also trying to align IT resource with business downtime of workflow W2 is reduced to 360 mins per year, availability requirements by automating the design and config- which indicates 99.9%availability. uration of IT systems.Janakiraman et al.proposed AVED [9], a proof-of-concept design automation engine to generate cost- V.RELATED WORK effective solutions from high-level application requirements Reliability models and analysis have been intensively stud- using the exhaustive iteration method.They also present ied.Dugan and Trivedi [15]proposed Markov,semi-Markov,a business-aware,policy-based IT management frameworkFig. 12. Availability simulation example Service5, Service6 and Service7 successively, which are respectively hosted by C1, C2, and C4. According to our weakpoint analysis, the current resource configuration cannot meet the availability requirement 99.9%, and C1 (HTTPServer) and C4 (DB2 Database Server) are identified as weak points. A load-balanced HA cluster and a DB2 HADR solution are selected to resolve these weak points, respectively. As in Fig. 12(a), before HA enhancement, the availability of workflow W2 is 99.8%, i.e., about 938 minutes downtime per year (since the downtimes of C1, C2 and C4 overlap, the overall downtime is less than the sum of 1028 minutes). Here, the downtime histories for each single IT resource are extracted from availability monitoring data. After HA enhancement, we perform availability simulations on the HA enhanced resources by applying failure histories of the IT resources. In the simulations, failures of an HTTP server in C1 are masked by load balancing across an HA cluster, where another peer HTTP server can take over to continuously provide the service. Rather than requiring a full restore from a failed HTTP server, the failover only takes 2 minutes. Similarly, the failure of the DB2 database in C4 is handled by deploying a DB2 HADR solution, where the standby database takes over from the failed primary. Rather than restarting a failed DB2 database server, the failover only takes 5 minutes. Thus, based on our simulations, downtimes for C1, C2 and C4 are respectively 10 mins, 340 mins and 10 mins, and overall downtime of workflow W2 is reduced to 360 mins per year, which indicates 99.9% availability. V. RELATED WORK Reliability models and analysis have been intensively studied. Dugan and Trivedi [15] proposed Markov, semi-Markov, nonhomogeneous Markov, and extended stochastic Petri net models for computing coverage. Rugina and Kanoun [16] proposed a stepwise approach for system dependability modeling using Architecture Analysis and Design Language. These models and analyses focus on the reliability of a single computer system whereas we consider several. For distributed systems, Van Moorsel [17] proposed an action model for reliability evaluation of fault-tolerant distributed computing systems, including both software and hardware in the analysis. Reliability Block Diagrams [18], another wellknown availability model used by Kapur and Lamberson, is capable of evaluating complex distributed and redundant systems. These methods mainly focus on modeling system availabilities, while our methodology focuses on how to better satisfy business-level availability requirements based on system-level availability capabilities. Based on these reliability/availability models, current research on availability analysis gives more emphasis to designtime plan and runtime analysis. Robinson and Polozoff proposed a way to plan for availability in the IT infrastructure [19], showing how to plan and design availability solutions in the end-to-end project lifecycle. They provide a framework for availability evaluation but are not able to detect availability weak points. Chen et al. [20] proposed Pinpoint, a dynamic analysis methodology that automates problem determination in large, dynamic Internet services, by leveraging coarse-grained tagging of numerous real client requests at runtime combined with data mining techniques to determine the fault components. Our work addresses the availability analysis issue over an IT infrastructure at design time, leveraging business-level workflows to specify the high-level availability requirements, while Chen et al.’s work focuses on problem determination by leveraging data mining techniques, and can hardly be applied to availability analysis to provide quantifiable HA enhancement recommendations. Besides availability analysis, many HA solutions and mechanisms have been developed. Cluster failover products such as IBM WASCluster [2], HP MC/Serviceguard [21], SunCluster [22], and Trucluster [23] detect nodes that fail, automatically replace them with other nodes running failover application components, and reintegrate failed nodes into active service when they recover from failure. IBM Director [24] detects resource exhaustion in its software components and automates the rejuvenation of these components at appropriate intervals. These HA solutions and mechanisms are modeled as HA patterns in our work, and they are further used as building blocks in availability planning. Our weak-point analysis methodology is based on these HA solutions. As part of business-driven IT management, weak-point analysis is also trying to align IT resource with business availability requirements by automating the design and configuration of IT systems. Janakiraman et al. proposed AVED [9], a proof-of-concept design automation engine to generate costeffective solutions from high-level application requirements using the exhaustive iteration method. They also present a business-aware, policy-based IT management framework