
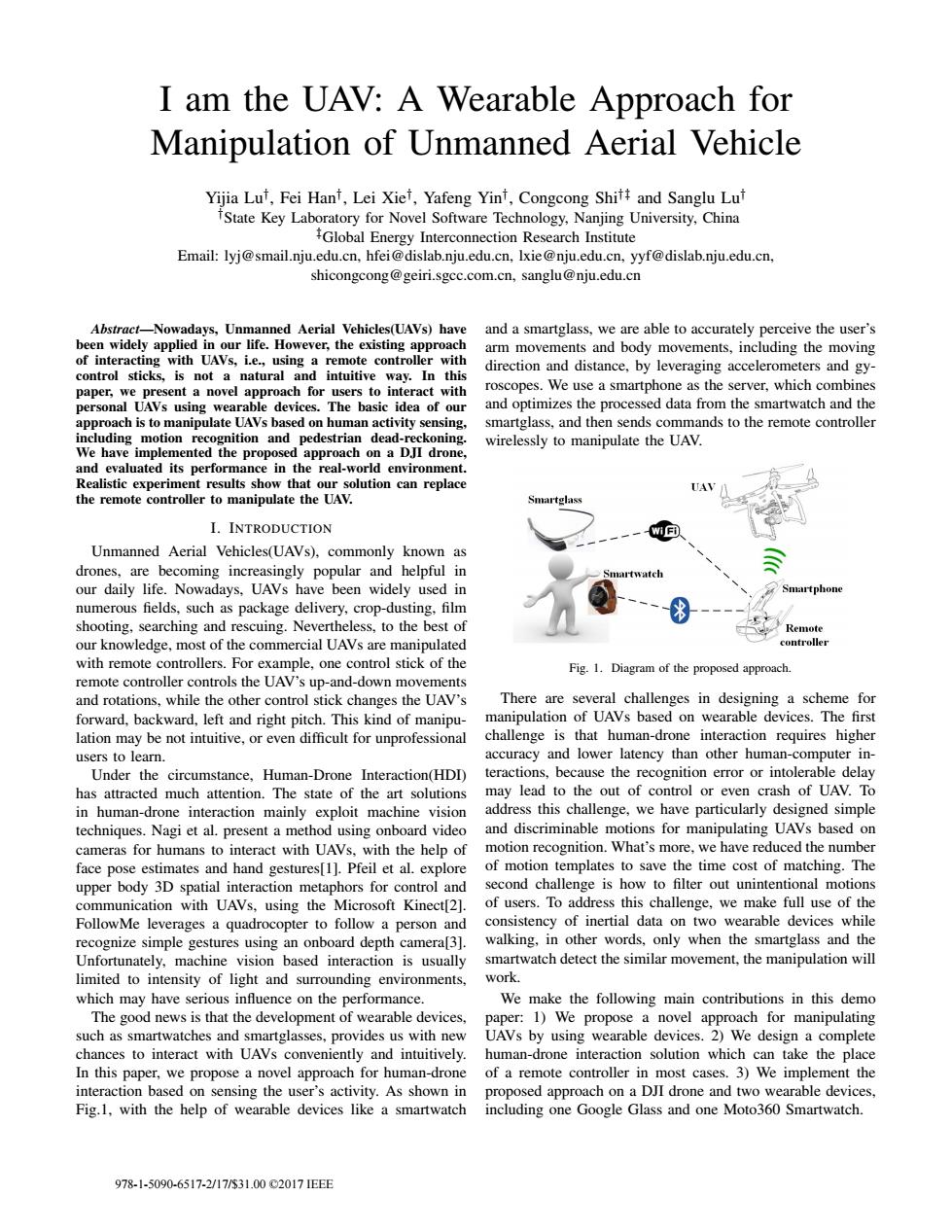

I am the UAV: A Wearable Approach for Manipulation of Unmanned Aerial Vehicle

Yijia Lu†, Fei Han†, Lei Xie†, Yafeng Yin†, Congcong Shi†‡ and Sanglu Lu†

†State Key Laboratory for Novel Software Technology, Nanjing University, China

‡Global Energy Interconnection Research Institute

Email: lyj@smail.nju.edu.cn, hfei@dislab.nju.edu.cn, lxie@nju.edu.cn, yyf@dislab.nju.edu.cn, shicongcong@geiri.sgcc.com.cn, sanglu@nju.edu.cn

Abstract—Nowadays, Unmanned Aerial Vehicles (UAVs) have been widely applied in everyday life. However, the existing approach to interacting with UAVs, i.e., using a remote controller with control sticks, is neither natural nor intuitive.
In this paper, we present a novel approach that lets users interact with personal UAVs through wearable devices. The basic idea of our approach is to manipulate UAVs based on human activity sensing, including motion recognition and pedestrian dead-reckoning. We have implemented the proposed approach on a DJI drone and evaluated its performance in a real-world environment. Real-world experimental results show that our solution can replace the remote controller for manipulating the UAV.

I. INTRODUCTION

Unmanned Aerial Vehicles (UAVs), commonly known as drones, are becoming increasingly popular and useful in daily life. Nowadays, UAVs are widely used in numerous fields, such as package delivery, crop-dusting, film shooting, and search and rescue. Nevertheless, to the best of our knowledge, most commercial UAVs are manipulated with remote controllers. For example, one control stick of the remote controller controls the UAV's up-and-down movements and rotations, while the other control stick tilts the UAV forward, backward, left, and right. This kind of manipulation may not be intuitive, and can even be difficult for non-professional users to learn.

Under these circumstances, Human-Drone Interaction (HDI) has attracted much attention. State-of-the-art solutions in human-drone interaction mainly exploit machine vision techniques. Nagi et al. present a method that uses onboard video cameras to let humans interact with UAVs through face pose estimates and hand gestures [1]. Pfeil et al. explore upper-body 3D spatial interaction metaphors for controlling and communicating with UAVs using the Microsoft Kinect [2]. FollowMe uses a quadrocopter that follows a person and recognizes simple gestures with an onboard depth camera [3]. Unfortunately, machine-vision-based interaction is usually sensitive to lighting conditions and the surrounding environment, which can seriously degrade its performance.
The good news is that the development of wearable devices, such as smartwatches and smartglasses, provides new opportunities to interact with UAVs conveniently and intuitively. In this paper, we propose a novel approach for human-drone interaction based on sensing the user's activity. As shown in Fig. 1, with wearable devices such as a smartwatch and a smartglass, we are able to accurately perceive the user's arm and body movements, including moving direction and distance, by leveraging accelerometers and gyroscopes. We use a smartphone as the server, which combines and optimizes the processed data from the smartwatch and the smartglass, and then wirelessly sends commands to the remote controller to manipulate the UAV.

Fig. 1. Diagram of the proposed approach.

There are several challenges in designing a scheme for manipulating UAVs with wearable devices. The first challenge is that human-drone interaction requires higher accuracy and lower latency than other human-computer interactions, because recognition errors or intolerable delays may cause the UAV to go out of control or even crash. To address this challenge, we have designed simple and easily distinguishable motions for manipulating UAVs based on motion recognition. Moreover, we have reduced the number of motion templates to save the time cost of template matching. The second challenge is how to filter out users' unintentional motions. To address this challenge, we make full use of the consistency of the inertial data from the two wearable devices while the user is walking; in other words, a manipulation takes effect only when the smartglass and the smartwatch detect similar movements.

We make the following main contributions in this demo paper: 1) We propose a novel approach for manipulating UAVs by using wearable devices. 2) We design a complete human-drone interaction solution that can take the place of a remote controller in most cases.
3) We implement the proposed approach on a DJI drone and two wearable devices, namely a Google Glass and a Moto360 Smartwatch.

978-1-5090-6517-2/17/$31.00 ©2017 IEEE
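The second challenge in the introduction, filtering out unintentional motions by acting only when the smartglass and the smartwatch agree, can be illustrated with a small server-side sketch that also turns agreed-upon steps into a walking distance in the style of pedestrian dead-reckoning. This is not the authors' implementation. It assumes both devices stream accelerometer magnitudes (in m/s²) at a common, hypothetical rate of 50 Hz, detects steps with a simple peak threshold, treats a walk as intentional only if the two step counts differ by at most one, and converts steps to distance with a fixed stride length. All names and constants (`detect_steps`, `consistent_walk`, `WalkCommand`, `STEP_THRESHOLD`, `STEP_LENGTH`, and so on) are hypothetical.

```python
from dataclasses import dataclass
from typing import List, Optional

# Hypothetical parameters chosen for illustration; not taken from the paper.
STEP_THRESHOLD = 11.0    # m/s^2; a peak above this counts as a step
MIN_GAP = 15             # minimum samples between steps (0.3 s at an assumed 50 Hz)
STEP_LENGTH = 0.7        # meters per step, an assumed constant stride length
MAX_STEP_MISMATCH = 1    # allowed difference in step counts between the devices


def detect_steps(magnitudes: List[float]) -> List[int]:
    """Return sample indices of step peaks in an acceleration-magnitude trace."""
    peaks: List[int] = []
    last = -MIN_GAP  # lets the very first peak pass the spacing check
    for i in range(1, len(magnitudes) - 1):
        is_local_max = magnitudes[i - 1] < magnitudes[i] >= magnitudes[i + 1]
        if is_local_max and magnitudes[i] > STEP_THRESHOLD and i - last >= MIN_GAP:
            peaks.append(i)
            last = i
    return peaks


@dataclass
class WalkCommand:
    distance_m: float  # how far the UAV should move, mirroring the user's walk


def consistent_walk(glass: List[float], watch: List[float]) -> Optional[WalkCommand]:
    """Return a WalkCommand only if both devices agree that the user walked."""
    glass_steps = detect_steps(glass)
    watch_steps = detect_steps(watch)
    if not glass_steps or not watch_steps:
        return None  # at least one device saw no walking
    if abs(len(glass_steps) - len(watch_steps)) > MAX_STEP_MISMATCH:
        return None  # the devices disagree: treat it as an unintentional motion
    steps = min(len(glass_steps), len(watch_steps))  # conservative step count
    return WalkCommand(distance_m=steps * STEP_LENGTH)


if __name__ == "__main__":
    # Synthetic trace: one peak every 30 samples (0.6 s at the assumed 50 Hz).
    walking = ([9.8] * 10 + [12.0] + [9.8] * 19) * 3
    still = [9.8] * 90  # a device that detected no steps
    print(consistent_walk(walking, walking))
    print(consistent_walk(walking, still))  # None
```

Taking the smaller of the two step counts is a conservative choice: when the devices disagree slightly, the UAV moves the shorter distance. A practical system would also need to align the two streams in time and estimate the walking direction from the gyroscope, which this sketch omits.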