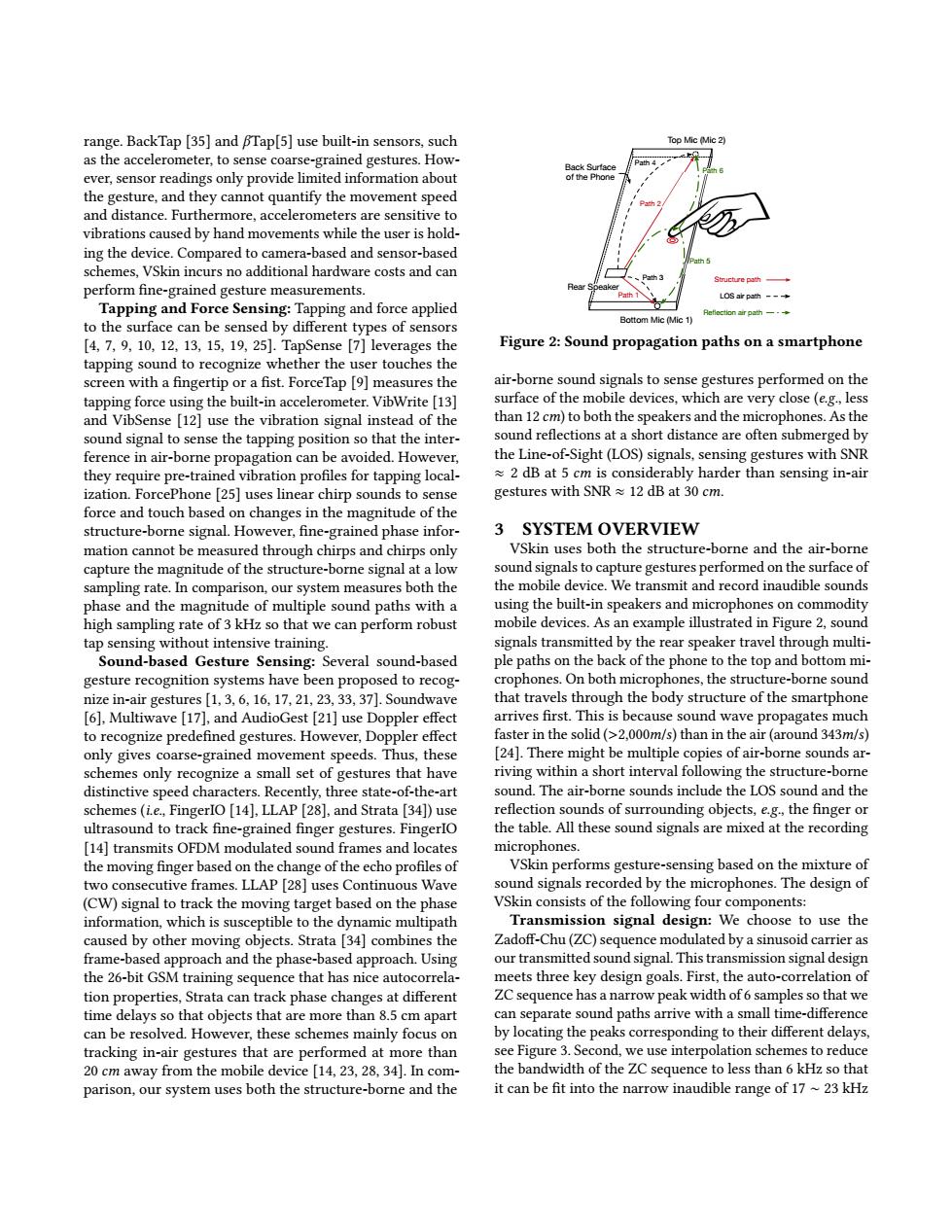

range. BackTap [35] and βTap [5] use built-in sensors, such as the accelerometer, to sense coarse-grained gestures. However, sensor readings only provide limited information about the gesture, and they cannot quantify the movement speed and distance. Furthermore, accelerometers are sensitive to vibrations caused by hand movements while the user is holding the device.
Compared to camera-based and sensor-based schemes, VSkin incurs no additional hardware costs and can perform fine-grained gesture measurements.

Tapping and Force Sensing: Tapping and force applied to the surface can be sensed by different types of sensors [4, 7, 9, 10, 12, 13, 15, 19, 25]. TapSense [7] leverages the tapping sound to recognize whether the user touches the screen with a fingertip or a fist. ForceTap [9] measures the tapping force using the built-in accelerometer. VibWrite [13] and VibSense [12] use the vibration signal instead of the sound signal to sense the tapping position so that the interference in air-borne propagation can be avoided. However, they require pre-trained vibration profiles for tapping localization. ForcePhone [25] uses linear chirp sounds to sense force and touch based on changes in the magnitude of the structure-borne signal. However, fine-grained phase information cannot be measured through chirps, and chirps only capture the magnitude of the structure-borne signal at a low sampling rate. In comparison, our system measures both the phase and the magnitude of multiple sound paths at a high sampling rate of 3 kHz so that we can perform robust tap sensing without intensive training.

Sound-based Gesture Sensing: Several sound-based gesture recognition systems have been proposed to recognize in-air gestures [1, 3, 6, 16, 17, 21, 23, 33, 37]. Soundwave [6], Multiwave [17], and AudioGest [21] use the Doppler effect to recognize predefined gestures. However, the Doppler effect only gives coarse-grained movement speeds. Thus, these schemes only recognize a small set of gestures that have distinctive speed characteristics. Recently, three state-of-the-art schemes (i.e., FingerIO [14], LLAP [28], and Strata [34]) use ultrasound to track fine-grained finger gestures. FingerIO [14] transmits OFDM-modulated sound frames and locates the moving finger based on the change of the echo profiles of two consecutive frames.
LLAP [28] uses a Continuous Wave (CW) signal to track the moving target based on the phase information, which is susceptible to the dynamic multipath caused by other moving objects. Strata [34] combines the frame-based approach and the phase-based approach. Using the 26-bit GSM training sequence, which has good autocorrelation properties, Strata can track phase changes at different time delays so that objects that are more than 8.5 cm apart can be resolved. However, these schemes mainly focus on tracking in-air gestures that are performed more than 20 cm away from the mobile device [14, 23, 28, 34]. In comparison, our system uses both the structure-borne and the air-borne sound signals to sense gestures performed on the surface of the mobile devices, which are very close (e.g., less than 12 cm) to both the speakers and the microphones. As the sound reflections at a short distance are often submerged by the Line-of-Sight (LOS) signals, sensing gestures with SNR ≈ 2 dB at 5 cm is considerably harder than sensing in-air gestures with SNR ≈ 12 dB at 30 cm.

Figure 2: Sound propagation paths on a smartphone. (Labels: Top Mic (Mic 2), Bottom Mic (Mic 1), Rear Speaker, and Paths 1 to 6 on the back surface of the phone; legend: structure path, LOS air path, reflection air path.)

3 SYSTEM OVERVIEW

VSkin uses both the structure-borne and the air-borne sound signals to capture gestures performed on the surface of the mobile device. We transmit and record inaudible sounds using the built-in speakers and microphones on commodity mobile devices. As illustrated in Figure 2, sound signals transmitted by the rear speaker travel through multiple paths on the back of the phone to the top and bottom microphones. On both microphones, the structure-borne sound that travels through the body structure of the smartphone arrives first. This is because sound waves propagate much faster in solids (>2,000 m/s) than in air (around 343 m/s) [24].
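To make the arrival order concrete, the following minimal sketch computes the propagation delay of a structure-borne and an air-borne copy over a path of the same length. The 12 cm path length echoes the "less than 12 cm" distance mentioned above. The 48 kHz sampling rate and the helper names `arrival_delay` and `delay_in_samples` are illustrative assumptions, not values or functions from the system design, and 2,000 m/s is only the lower bound quoted for solids.

```python
# Illustrative sketch: propagation delay of sound over a short path.
SPEED_SOLID_M_S = 2000.0   # lower bound for solids quoted in the text
SPEED_AIR_M_S = 343.0      # approximate speed of sound in air
SAMPLE_RATE_HZ = 48_000    # assumed audio sampling rate (not from the text)


def arrival_delay(distance_m: float, speed_m_s: float) -> float:
    """Return the one-way propagation delay in seconds."""
    if distance_m < 0 or speed_m_s <= 0:
        raise ValueError("distance must be non-negative and speed positive")
    return distance_m / speed_m_s


def delay_in_samples(delay_s: float, sample_rate_hz: float = SAMPLE_RATE_HZ) -> float:
    """Convert a delay in seconds to a (fractional) number of audio samples."""
    return delay_s * sample_rate_hz


if __name__ == "__main__":
    path_m = 0.12  # hypothetical speaker-to-microphone distance
    for name, speed in (("structure", SPEED_SOLID_M_S), ("air", SPEED_AIR_M_S)):
        d = arrival_delay(path_m, speed)
        print(f"{name:9s}: {d * 1e6:6.1f} us, {delay_in_samples(d):5.2f} samples")
```

Under these assumptions, the structure-borne copy arrives within about 60 µs (roughly 3 samples), while the air-borne copy needs about 350 µs (roughly 17 samples). Real paths differ in length and material, so these numbers only indicate the order of magnitude of the gap.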
There might be multiple copies of air-borne sounds arriving within a short interval following the structure-borne sound. The air-borne sounds include the LOS sound and the sounds reflected by surrounding objects, e.g., the finger or the table. All these sound signals are mixed at the recording microphones.

VSkin performs gesture sensing based on the mixture of sound signals recorded by the microphones. The design of VSkin consists of the following four components:

Transmission signal design: We choose to use the Zadoff-Chu (ZC) sequence modulated by a sinusoid carrier as our transmitted sound signal. This transmission signal design meets three key design goals. First, the auto-correlation of the ZC sequence has a narrow peak width of 6 samples, so that we can separate sound paths that arrive with a small time difference by locating the peaks corresponding to their different delays (see Figure 3). Second, we use interpolation schemes to reduce the bandwidth of the ZC sequence to less than 6 kHz so that it fits into the narrow inaudible range of 17 to 23 kHz