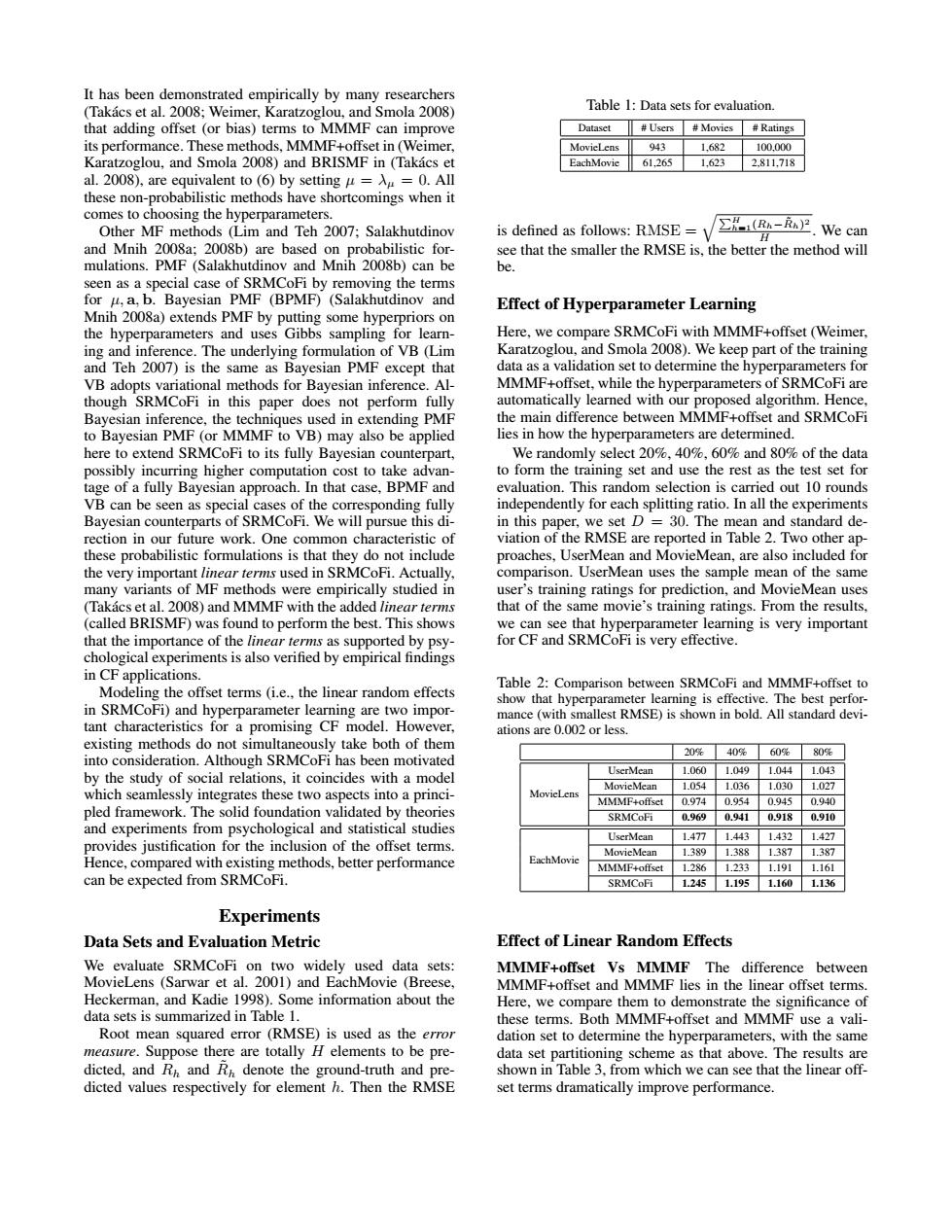

It has been demonstrated empirically by many researchers (Takács et al. 2008; Weimer, Karatzoglou, and Smola 2008) that adding offset (or bias) terms to MMMF can improve its performance. These methods, MMMF+offset in (Weimer, Karatzoglou, and Smola 2008) and BRISMF in (Takács et al. 2008), are equivalent to (6) by setting µ = λµ = 0. All these non-probabilistic methods have shortcomings when it comes to choosing the hyperparameters.

Other MF methods (Lim and Teh 2007; Salakhutdinov and Mnih 2008a; 2008b) are based on probabilistic formulations. PMF (Salakhutdinov and Mnih 2008b) can be seen as a special case of SRMCoFi by removing the terms for µ, a, b. Bayesian PMF (BPMF) (Salakhutdinov and Mnih 2008a) extends PMF by putting some hyperpriors on the hyperparameters and uses Gibbs sampling for learning and inference. The underlying formulation of VB (Lim and Teh 2007) is the same as Bayesian PMF except that VB adopts variational methods for Bayesian inference. Although SRMCoFi in this paper does not perform fully Bayesian inference, the techniques used in extending PMF to Bayesian PMF (or MMMF to VB) may also be applied here to extend SRMCoFi to its fully Bayesian counterpart, possibly incurring higher computation cost to take advantage of a fully Bayesian approach. In that case, BPMF and VB can be seen as special cases of the corresponding fully Bayesian counterparts of SRMCoFi. We will pursue this direction in our future work.

One common characteristic of these probabilistic formulations is that they do not include the very important linear terms used in SRMCoFi. Actually, many variants of MF methods were empirically studied in (Takács et al. 2008) and MMMF with the added linear terms (called BRISMF) was found to perform the best. This shows that the importance of the linear terms as supported by psychological experiments is also verified by empirical findings in CF applications.

Modeling the offset terms (i.e., the linear random effects in SRMCoFi) and hyperparameter learning are two important characteristics for a promising CF model. However, existing methods do not simultaneously take both of them into consideration.
Although SRMCoFi has been motivated by the study of social relations, it coincides with a model which seamlessly integrates these two aspects into a principled framework. The solid foundation validated by theories and experiments from psychological and statistical studies provides justification for the inclusion of the offset terms. Hence, compared with existing methods, better performance can be expected from SRMCoFi.

Experiments

Data Sets and Evaluation Metric

We evaluate SRMCoFi on two widely used data sets: MovieLens (Sarwar et al. 2001) and EachMovie (Breese, Heckerman, and Kadie 1998). Some information about the data sets is summarized in Table 1.

Table 1: Data sets for evaluation.

| Dataset | # Users | # Movies | # Ratings |
|---|---|---|---|
| MovieLens | 943 | 1,682 | 100,000 |
| EachMovie | 61,265 | 1,623 | 2,811,718 |

Root mean squared error (RMSE) is used as the error measure. Suppose there are $H$ elements in total to be predicted, and $R_h$ and $\tilde{R}_h$ denote the ground-truth and predicted values, respectively, for element $h$. Then the RMSE is defined as follows:

$$\mathrm{RMSE} = \sqrt{\frac{\sum_{h=1}^{H} (R_h - \tilde{R}_h)^2}{H}}.$$

The smaller the RMSE, the better the method performs.

Effect of Hyperparameter Learning

Here, we compare SRMCoFi with MMMF+offset (Weimer, Karatzoglou, and Smola 2008). We keep part of the training data as a validation set to determine the hyperparameters for MMMF+offset, while the hyperparameters of SRMCoFi are automatically learned with our proposed algorithm. Hence, the main difference between MMMF+offset and SRMCoFi lies in how the hyperparameters are determined.

We randomly select 20%, 40%, 60% and 80% of the data to form the training set and use the rest as the test set for evaluation. This random selection is repeated independently for 10 rounds at each splitting ratio. In all the experiments in this paper, we set D = 30. The mean and standard deviation of the RMSE are reported in Table 2. Two other approaches, UserMean and MovieMean, are also included for comparison.
UserMean uses the sample mean of the same user’s training ratings for prediction, and MovieMean uses that of the same movie’s training ratings. The results show that SRMCoFi achieves a lower RMSE than MMMF+offset at every splitting ratio on both data sets, which indicates that hyperparameter learning is very important for CF and that SRMCoFi is very effective.

Table 2: Comparison between SRMCoFi and MMMF+offset to show that hyperparameter learning is effective. The best performance (with smallest RMSE) is shown in bold. All standard deviations are 0.002 or less.

| Data set | Method | 20% | 40% | 60% | 80% |
|---|---|---|---|---|---|
| MovieLens | UserMean | 1.060 | 1.049 | 1.044 | 1.043 |
| MovieLens | MovieMean | 1.054 | 1.036 | 1.030 | 1.027 |
| MovieLens | MMMF+offset | 0.974 | 0.954 | 0.945 | 0.940 |
| MovieLens | SRMCoFi | **0.969** | **0.941** | **0.918** | **0.910** |
| EachMovie | UserMean | 1.477 | 1.443 | 1.432 | 1.427 |
| EachMovie | MovieMean | 1.389 | 1.388 | 1.387 | 1.387 |
| EachMovie | MMMF+offset | 1.286 | 1.233 | 1.191 | 1.161 |
| EachMovie | SRMCoFi | **1.245** | **1.195** | **1.160** | **1.136** |

Effect of Linear Random Effects

MMMF+offset vs. MMMF

The difference between MMMF+offset and MMMF lies in the linear offset terms. Here, we compare them to demonstrate the significance of these terms. Both MMMF+offset and MMMF use a validation set to determine the hyperparameters, with the same data set partitioning scheme as that above. The results are shown in Table 3, from which we can see that the linear offset terms dramatically improve performance.
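
The evaluation protocol described in this section (RMSE, the UserMean and MovieMean baselines, and repeated random splits) can be sketched in Python as follows. This is a minimal illustration rather than the code used in the experiments: the toy ratings, the function names, the fallback to the global training mean for users or movies absent from the training split, and the use of the sample standard deviation are all assumptions that the text does not specify.

```python
import math
import random
from collections import defaultdict
from statistics import mean, stdev


def rmse(truth, pred):
    """Root mean squared error over H paired ground-truth/predicted values."""
    if len(truth) != len(pred) or not truth:
        raise ValueError("truth and pred must be non-empty and equally long")
    return math.sqrt(sum((r - p) ** 2 for r, p in zip(truth, pred)) / len(truth))


def mean_baseline(train, test, key):
    """Predict each test rating by the training mean of its user (key=0)
    or of its movie (key=1).

    Assumption: users/movies unseen in training get the global training mean.
    """
    groups = defaultdict(list)
    for row in train:
        groups[row[key]].append(row[2])
    means = {k: mean(v) for k, v in groups.items()}
    global_mean = mean(r for _, _, r in train)
    return [means.get(row[key], global_mean) for row in test]


def evaluate(ratings, train_fraction, rounds=10, seed=0):
    """Mean and sample standard deviation of baseline RMSE over random splits."""
    rng = random.Random(seed)
    scores = {"UserMean": [], "MovieMean": []}
    for _ in range(rounds):
        shuffled = ratings[:]
        rng.shuffle(shuffled)
        cut = int(train_fraction * len(shuffled))
        train, test = shuffled[:cut], shuffled[cut:]
        truth = [r for _, _, r in test]
        scores["UserMean"].append(rmse(truth, mean_baseline(train, test, 0)))
        scores["MovieMean"].append(rmse(truth, mean_baseline(train, test, 1)))
    return {name: (mean(v), stdev(v)) for name, v in scores.items()}


# Toy (user, movie, rating) triples, made up for illustration only.
toy = [(u, m, 1 + (u * 3 + m * 2) % 5) for u in range(20) for m in range(15)]
for fraction in (0.2, 0.4, 0.6, 0.8):
    print(fraction, evaluate(toy, fraction))
```

With real data, `ratings` would hold the (user, movie, rating) triples from MovieLens or EachMovie, and the predictions of any learned model can be scored with the same `rmse` function.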