
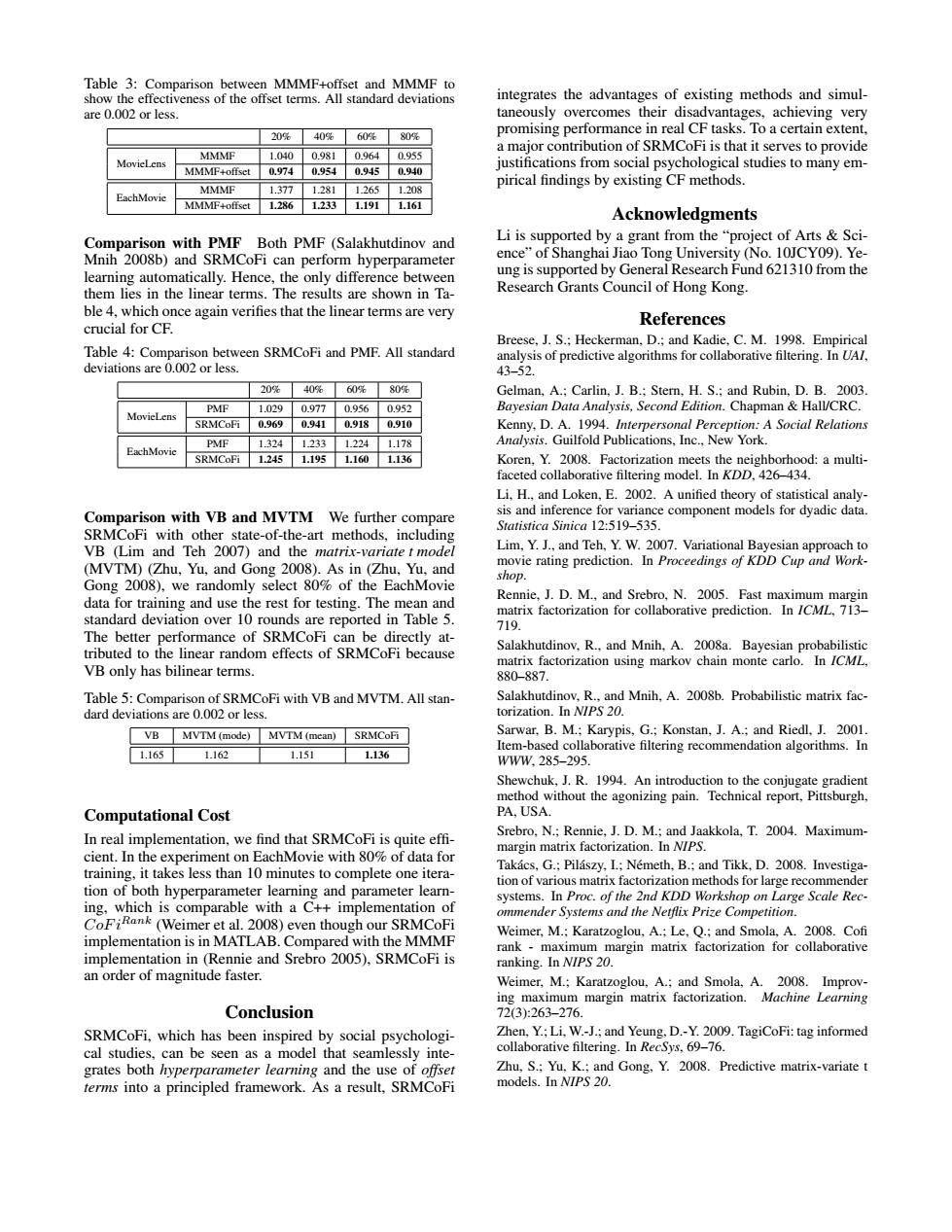

Table 3: Comparison between MMMF+offset and MMMF to show the effectiveness of the offset terms. All standard deviations are 0.002 or less.

| Dataset | Method | 20% | 40% | 60% | 80% |
|---|---|---|---|---|---|
| MovieLens | MMMF | 1.040 | 0.981 | 0.964 | 0.955 |
| MovieLens | MMMF+offset | 0.974 | 0.954 | 0.945 | 0.940 |
| EachMovie | MMMF | 1.377 | 1.281 | 1.265 | 1.208 |
| EachMovie | MMMF+offset | 1.286 | 1.233 | 1.191 | 1.161 |

Comparison with PMF

Both PMF (Salakhutdinov and Mnih 2008b) and SRMCoFi can perform hyperparameter learning automatically. Hence, the only difference between them lies in the linear terms. The results are shown in Table 4, which once again confirms that the linear terms are crucial for CF.

Table 4: Comparison between SRMCoFi and PMF. All standard deviations are 0.002 or less.

| Dataset | Method | 20% | 40% | 60% | 80% |
|---|---|---|---|---|---|
| MovieLens | PMF | 1.029 | 0.977 | 0.956 | 0.952 |
| MovieLens | SRMCoFi | 0.969 | 0.941 | 0.918 | 0.910 |
| EachMovie | PMF | 1.324 | 1.233 | 1.224 | 1.178 |
| EachMovie | SRMCoFi | 1.245 | 1.195 | 1.160 | 1.136 |

Comparison with VB and MVTM

We further compare SRMCoFi with other state-of-the-art methods, including VB (Lim and Teh 2007) and the matrix-variate t model (MVTM) (Zhu, Yu, and Gong 2008). As in (Zhu, Yu, and Gong 2008), we randomly select 80% of the EachMovie data for training and use the rest for testing. The mean and standard deviation over 10 rounds are reported in Table 5. The better performance of SRMCoFi can be directly attributed to its linear random effects, because VB only has bilinear terms.

Table 5: Comparison of SRMCoFi with VB and MVTM. All standard deviations are 0.002 or less.

| VB | MVTM (mode) | MVTM (mean) | SRMCoFi |
|---|---|---|---|
| 1.165 | 1.162 | 1.151 | 1.136 |

Computational Cost

In practice, we find that SRMCoFi is quite efficient. In the experiment on EachMovie with 80% of the data for training, it takes less than 10 minutes to complete one iteration of both hyperparameter learning and parameter learning, which is comparable with a C++ implementation of CoFiRank (Weimer et al. 2008) even though our SRMCoFi implementation is in MATLAB. Compared with the MMMF implementation in (Rennie and Srebro 2005), SRMCoFi is an order of magnitude faster.

Conclusion

SRMCoFi, which has been inspired by social psychological studies, can be seen as a model that seamlessly integrates both hyperparameter learning and the use of offset terms into a principled framework. As a result, SRMCoFi combines the advantages of existing methods while overcoming their disadvantages, achieving very promising performance in real CF tasks. To a certain extent, a major contribution of SRMCoFi is that it provides justifications from social psychological studies for many empirical findings by existing CF methods.
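The evaluation protocol described for Table 5 (a random 80%/20% split of the ratings, repeated for 10 rounds, reporting the mean and standard deviation) can be sketched as follows. This is only an illustration under stated assumptions: the error metric is assumed here to be RMSE, the function names `repeated_holdout` and `offset_baseline` are hypothetical, and the stand-in predictor is a simple global-mean-plus-offsets baseline, not SRMCoFi or any of the compared methods.

```python
import numpy as np

def offset_baseline(train, test):
    """Hypothetical stand-in model: global mean plus user and item offsets.

    Rows of `train` and `test` are (user_id, item_id, rating).
    """
    mu = train[:, 2].mean()
    n_users = int(max(train[:, 0].max(), test[:, 0].max())) + 1
    n_items = int(max(train[:, 1].max(), test[:, 1].max())) + 1
    users = train[:, 0].astype(int)
    items = train[:, 1].astype(int)
    resid = train[:, 2] - mu
    # Per-user mean residual (0 for users unseen in training).
    b = np.bincount(users, weights=resid, minlength=n_users)
    b /= np.maximum(np.bincount(users, minlength=n_users), 1)
    # Per-item mean residual after removing the user offsets.
    c = np.bincount(items, weights=resid - b[users], minlength=n_items)
    c /= np.maximum(np.bincount(items, minlength=n_items), 1)
    return mu + b[test[:, 0].astype(int)] + c[test[:, 1].astype(int)]

def repeated_holdout(ratings, fit_predict, train_frac=0.8, rounds=10, seed=0):
    """Mean and (population) std of test RMSE over random train/test splits."""
    rng = np.random.default_rng(seed)
    n = len(ratings)
    n_train = int(round(train_frac * n))
    scores = []
    for _ in range(rounds):
        perm = rng.permutation(n)
        train, test = ratings[perm[:n_train]], ratings[perm[n_train:]]
        pred = fit_predict(train, test)
        scores.append(np.sqrt(np.mean((pred - test[:, 2]) ** 2)))
    scores = np.array(scores)
    return scores.mean(), scores.std()

# Usage on synthetic ratings (not MovieLens or EachMovie data).
rng = np.random.default_rng(1)
n = 2000
ratings = np.column_stack([
    rng.integers(0, 50, n),   # user ids
    rng.integers(0, 40, n),   # item ids
    rng.integers(1, 6, n),    # ratings in 1..5
]).astype(float)
mean_rmse, std_rmse = repeated_holdout(ratings, offset_baseline)
print(f"RMSE: {mean_rmse:.3f} +/- {std_rmse:.3f}")
```

Any model can be plugged in through the `fit_predict` argument, so the same split-and-repeat loop would apply to each method being compared.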
Acknowledgments

Li is supported by a grant from the “project of Arts & Science” of Shanghai Jiao Tong University (No. 10JCY09). Yeung is supported by General Research Fund 621310 from the Research Grants Council of Hong Kong.

References

Breese, J. S.; Heckerman, D.; and Kadie, C. M. 1998. Empirical analysis of predictive algorithms for collaborative filtering. In UAI, 43–52.

Gelman, A.; Carlin, J. B.; Stern, H. S.; and Rubin, D. B. 2003. Bayesian Data Analysis, Second Edition. Chapman & Hall/CRC.

Kenny, D. A. 1994. Interpersonal Perception: A Social Relations Analysis. Guilford Publications, Inc., New York.

Koren, Y. 2008. Factorization meets the neighborhood: a multifaceted collaborative filtering model. In KDD, 426–434.

Li, H., and Loken, E. 2002. A unified theory of statistical analysis and inference for variance component models for dyadic data. Statistica Sinica 12:519–535.

Lim, Y. J., and Teh, Y. W. 2007. Variational Bayesian approach to movie rating prediction. In Proceedings of KDD Cup and Workshop.

Rennie, J. D. M., and Srebro, N. 2005. Fast maximum margin matrix factorization for collaborative prediction. In ICML, 713–719.

Salakhutdinov, R., and Mnih, A. 2008a. Bayesian probabilistic matrix factorization using Markov chain Monte Carlo. In ICML, 880–887.

Salakhutdinov, R., and Mnih, A. 2008b. Probabilistic matrix factorization. In NIPS 20.

Sarwar, B. M.; Karypis, G.; Konstan, J. A.; and Riedl, J. 2001. Item-based collaborative filtering recommendation algorithms. In WWW, 285–295.

Shewchuk, J. R. 1994. An introduction to the conjugate gradient method without the agonizing pain. Technical report, Pittsburgh, PA, USA.

Srebro, N.; Rennie, J. D. M.; and Jaakkola, T. 2004. Maximum-margin matrix factorization. In NIPS.

Takács, G.; Pilászy, I.; Németh, B.; and Tikk, D. 2008. Investigation of various matrix factorization methods for large recommender systems. In Proc. of the 2nd KDD Workshop on Large Scale Recommender Systems and the Netflix Prize Competition.

Weimer, M.; Karatzoglou, A.; Le, Q.; and Smola, A. 2008. Cofi rank - maximum margin matrix factorization for collaborative ranking. In NIPS 20.

Weimer, M.; Karatzoglou, A.; and Smola, A. 2008. Improving maximum margin matrix factorization. Machine Learning 72(3):263–276.

Zhen, Y.; Li, W.-J.; and Yeung, D.-Y. 2009. TagiCoFi: tag informed collaborative filtering. In RecSys, 69–76.

Zhu, S.; Yu, K.; and Gong, Y. 2008. Predictive matrix-variate t models. In NIPS 20.