正在加载图片...

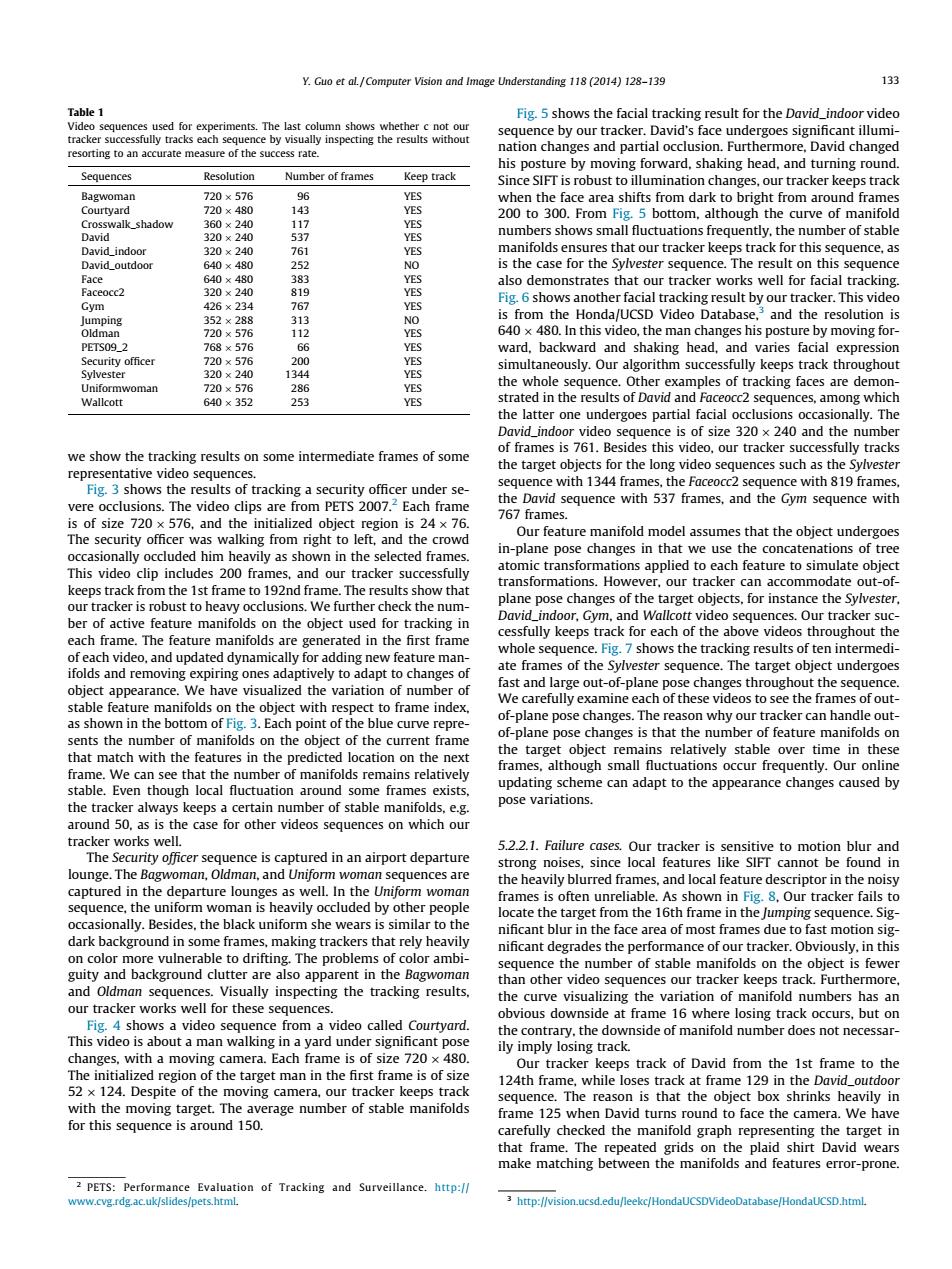

Y.Guo et al/Computer Vision and Image Understanding 118(2014)128-139 133 Table 1 Fig.5 shows the facial tracking result for the David_indoor video Video sequences used for experiments.The last column shows whether c not our sequence by our tracker.David's face undergoes significant illumi- tracker successfully tracks each sequence by visually inspecting the results without resorting to an accurate measure of the success rate. nation changes and partial occlusion.Furthermore,David changed his posture by moving forward,shaking head,and turning round Sequences Resolution Number of frames Keep track Since SIFT is robust to illumination changes,our tracker keeps track Bagwoman 720×576 96 YES when the face area shifts from dark to bright from around frames Courtyard 720×480 143 YES 200 to 300.From Fig.5 bottom,although the curve of manifold Crosswalk_shadow 360×240 117 YES David 320×240 537 YES numbers shows small fluctuations frequently,the number of stable David_indoor 320×240 761 YES manifolds ensures that our tracker keeps track for this sequence,as David_outdoor 640×480 252 NO is the case for the Sylvester sequence.The result on this sequence Face 640×480 383 YES also demonstrates that our tracker works well for facial tracking. Faceocc2 320×240 819 YES Fig.6 shows another facial tracking result by our tracker.This video Gym 426×234 767 YES Jumping 352×288 313 NO is from the Honda/UCSD Video Database, and the resolution is oldman 720×576 112 YES 640 x 480.In this video,the man changes his posture by moving for- PETS09_2 768×576 66 YES ward,backward and shaking head,and varies facial expression Security officer 720×576 200 VES simultaneously.Our algorithm successfully keeps track throughout Sylvester 320×240 1344 YES Uniformwoman 720×576 286 YES the whole sequence.Other examples of tracking faces are demon- Wallcott 640×352 253 VES strated in the results of David and Faceocc2 sequences,among which the latter one undergoes partial facial occlusions occasionally.The David_indoor video sequence is of size 320 x 240 and the number of frames is 761.Besides this video,our tracker successfully tracks we show the tracking results on some intermediate frames of some the target objects for the long video sequences such as the Sylvester representative video sequences. Fig.3 shows the results of tracking a security officer under se- sequence with 1344 frames,the Faceocc2 sequence with 819 frames vere occlusions.The video clips are from PETS 2007.2 Each frame the David sequence with 537 frames,and the Gym sequence with 767 frames. is of size 720 x 576,and the initialized object region is 24 x 76. The security officer was walking from right to left,and the crowd Our feature manifold model assumes that the object undergoes occasionally occluded him heavily as shown in the selected frames. in-plane pose changes in that we use the concatenations of tree This video clip includes 200 frames,and our tracker successfully atomic transformations applied to each feature to simulate object transformations.However,our tracker can accommodate out-of- keeps track from the 1st frame to 192nd frame.The results show that our tracker is robust to heavy occlusions.We further check the num- plane pose changes of the target objects,for instance the Sylvester. ber of active feature manifolds on the object used for tracking in David_indoor,Gym,and Wallcott video sequences.Our tracker suc- each frame.The feature manifolds are generated in the first frame cessfully keeps track for each of the above videos throughout the whole sequence.Fig.7 shows the tracking results of ten intermedi- of each video,and updated dynamically for adding new feature man- ifolds and removing expiring ones adaptively to adapt to changes of ate frames of the Sylvester sequence.The target object undergoes object appearance.We have visualized the variation of number of fast and large out-of-plane pose changes throughout the sequence. We carefully examine each of these videos to see the frames of out- stable feature manifolds on the object with respect to frame index, as shown in the bottom of Fig.3.Each point of the blue curve repre- of-plane pose changes.The reason why our tracker can handle out- sents the number of manifolds on the object of the current frame of-plane pose changes is that the number of feature manifolds on that match with the features in the predicted location on the next the target object remains relatively stable over time in these frame.We can see that the number of manifolds remains relatively frames,although small fluctuations occur frequently.Our online stable.Even though local fluctuation around some frames exists. updating scheme can adapt to the appearance changes caused by pose variations. the tracker always keeps a certain number of stable manifolds,e.g. around 50,as is the case for other videos sequences on which our tracker works well. 5.2.2.1.Failure cases.Our tracker is sensitive to motion blur and The Security officer sequence is captured in an airport departure strong noises,since local features like SIFT cannot be found in lounge.The Bagwoman,Oldman,and Uniform woman sequences are the heavily blurred frames,and local feature descriptor in the noisy captured in the departure lounges as well.In the Uniform woman frames is often unreliable.As shown in Fig.8,Our tracker fails to sequence,the uniform woman is heavily occluded by other people locate the target from the 16th frame in the Jumping sequence.Sig- occasionally.Besides,the black uniform she wears is similar to the nificant blur in the face area of most frames due to fast motion sig- dark background in some frames,making trackers that rely heavily nificant degrades the performance of our tracker.Obviously,in this on color more vulnerable to drifting.The problems of color ambi- sequence the number of stable manifolds on the object is fewer guity and background clutter are also apparent in the Bagwoman than other video sequences our tracker keeps track.Furthermore and Oldman sequences.Visually inspecting the tracking results, the curve visualizing the variation of manifold numbers has an our tracker works well for these sequences. obvious downside at frame 16 where losing track occurs,but on Fig.4 shows a video sequence from a video called Courtyard. the contrary,the downside of manifold number does not necessar- This video is about a man walking in a yard under significant pose ily imply losing track. changes,with a moving camera.Each frame is of size 720 x 480. Our tracker keeps track of David from the 1st frame to the The initialized region of the target man in the first frame is of size 124th frame,while loses track at frame 129 in the David_outdoor 52 x 124.Despite of the moving camera,our tracker keeps track sequence.The reason is that the object box shrinks heavily in with the moving target.The average number of stable manifolds frame 125 when David turns round to face the camera.We have for this sequence is around 150. carefully checked the manifold graph representing the target in that frame.The repeated grids on the plaid shirt David wears make matching between the manifolds and features error-prone. 2 PETS:Performance Evaluation of Tracking and Surveillance.http:// www.cvg.rdg.ac.uk/slides/pets.htmL. http://vision.ucsd.edu/leekc/HondaUCSDVideoDatabase/HondaUCSD.html.we show the tracking results on some intermediate frames of some representative video sequences. Fig. 3 shows the results of tracking a security officer under severe occlusions. The video clips are from PETS 2007.2 Each frame is of size 720 576, and the initialized object region is 24 76. The security officer was walking from right to left, and the crowd occasionally occluded him heavily as shown in the selected frames. This video clip includes 200 frames, and our tracker successfully keeps track from the 1st frame to 192nd frame. The results show that our tracker is robust to heavy occlusions. We further check the number of active feature manifolds on the object used for tracking in each frame. The feature manifolds are generated in the first frame of each video, and updated dynamically for adding new feature manifolds and removing expiring ones adaptively to adapt to changes of object appearance. We have visualized the variation of number of stable feature manifolds on the object with respect to frame index, as shown in the bottom of Fig. 3. Each point of the blue curve represents the number of manifolds on the object of the current frame that match with the features in the predicted location on the next frame. We can see that the number of manifolds remains relatively stable. Even though local fluctuation around some frames exists, the tracker always keeps a certain number of stable manifolds, e.g. around 50, as is the case for other videos sequences on which our tracker works well. The Security officer sequence is captured in an airport departure lounge. The Bagwoman, Oldman, and Uniform woman sequences are captured in the departure lounges as well. In the Uniform woman sequence, the uniform woman is heavily occluded by other people occasionally. Besides, the black uniform she wears is similar to the dark background in some frames, making trackers that rely heavily on color more vulnerable to drifting. The problems of color ambiguity and background clutter are also apparent in the Bagwoman and Oldman sequences. Visually inspecting the tracking results, our tracker works well for these sequences. Fig. 4 shows a video sequence from a video called Courtyard. This video is about a man walking in a yard under significant pose changes, with a moving camera. Each frame is of size 720 480. The initialized region of the target man in the first frame is of size 52 124. Despite of the moving camera, our tracker keeps track with the moving target. The average number of stable manifolds for this sequence is around 150. Fig. 5 shows the facial tracking result for the David_indoor video sequence by our tracker. David’s face undergoes significant illumination changes and partial occlusion. Furthermore, David changed his posture by moving forward, shaking head, and turning round. Since SIFT is robust to illumination changes, our tracker keeps track when the face area shifts from dark to bright from around frames 200 to 300. From Fig. 5 bottom, although the curve of manifold numbers shows small fluctuations frequently, the number of stable manifolds ensures that our tracker keeps track for this sequence, as is the case for the Sylvester sequence. The result on this sequence also demonstrates that our tracker works well for facial tracking. Fig. 6 shows another facial tracking result by our tracker. This video is from the Honda/UCSD Video Database,3 and the resolution is 640 480. In this video, the man changes his posture by moving forward, backward and shaking head, and varies facial expression simultaneously. Our algorithm successfully keeps track throughout the whole sequence. Other examples of tracking faces are demonstrated in the results of David and Faceocc2 sequences, among which the latter one undergoes partial facial occlusions occasionally. The David_indoor video sequence is of size 320 240 and the number of frames is 761. Besides this video, our tracker successfully tracks the target objects for the long video sequences such as the Sylvester sequence with 1344 frames, the Faceocc2 sequence with 819 frames, the David sequence with 537 frames, and the Gym sequence with 767 frames. Our feature manifold model assumes that the object undergoes in-plane pose changes in that we use the concatenations of tree atomic transformations applied to each feature to simulate object transformations. However, our tracker can accommodate out-ofplane pose changes of the target objects, for instance the Sylvester, David_indoor, Gym, and Wallcott video sequences. Our tracker successfully keeps track for each of the above videos throughout the whole sequence. Fig. 7 shows the tracking results of ten intermediate frames of the Sylvester sequence. The target object undergoes fast and large out-of-plane pose changes throughout the sequence. We carefully examine each of these videos to see the frames of outof-plane pose changes. The reason why our tracker can handle outof-plane pose changes is that the number of feature manifolds on the target object remains relatively stable over time in these frames, although small fluctuations occur frequently. Our online updating scheme can adapt to the appearance changes caused by pose variations. 5.2.2.1. Failure cases. Our tracker is sensitive to motion blur and strong noises, since local features like SIFT cannot be found in the heavily blurred frames, and local feature descriptor in the noisy frames is often unreliable. As shown in Fig. 8, Our tracker fails to locate the target from the 16th frame in the Jumping sequence. Significant blur in the face area of most frames due to fast motion significant degrades the performance of our tracker. Obviously, in this sequence the number of stable manifolds on the object is fewer than other video sequences our tracker keeps track. Furthermore, the curve visualizing the variation of manifold numbers has an obvious downside at frame 16 where losing track occurs, but on the contrary, the downside of manifold number does not necessarily imply losing track. Our tracker keeps track of David from the 1st frame to the 124th frame, while loses track at frame 129 in the David_outdoor sequence. The reason is that the object box shrinks heavily in frame 125 when David turns round to face the camera. We have carefully checked the manifold graph representing the target in that frame. The repeated grids on the plaid shirt David wears make matching between the manifolds and features error-prone. Table 1 Video sequences used for experiments. The last column shows whether c not our tracker successfully tracks each sequence by visually inspecting the results without resorting to an accurate measure of the success rate. Sequences Resolution Number of frames Keep track Bagwoman 720 576 96 YES Courtyard 720 480 143 YES Crosswalk_shadow 360 240 117 YES David 320 240 537 YES David_indoor 320 240 761 YES David_outdoor 640 480 252 NO Face 640 480 383 YES Faceocc2 320 240 819 YES Gym 426 234 767 YES Jumping 352 288 313 NO Oldman 720 576 112 YES PETS09_2 768 576 66 YES Security officer 720 576 200 YES Sylvester 320 240 1344 YES Uniformwoman 720 576 286 YES Wallcott 640 352 253 YES 2 PETS: Performance Evaluation of Tracking and Surveillance. http:// www.cvg.rdg.ac.uk/slides/pets.html. 3 http://vision.ucsd.edu/leekc/HondaUCSDVideoDatabase/HondaUCSD.html. Y. Guo et al. / Computer Vision and Image Understanding 118 (2014) 128–139 133