
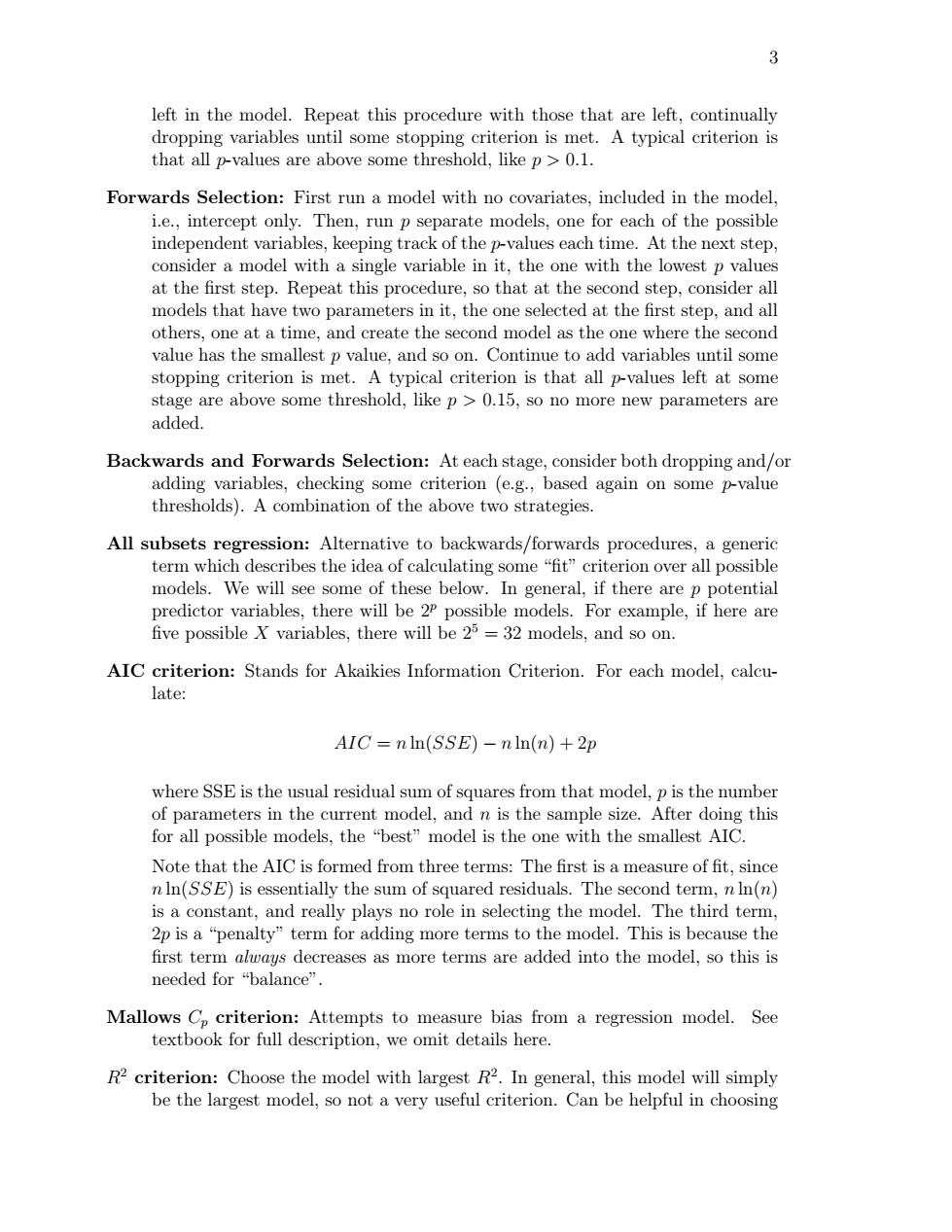

3 left in the model. Repeat this procedure with those that are left, continually dropping variables until some stopping criterion is met. A typical criterion is that all remaining p-values are below some threshold, like p < 0.1, so that no more variables are dropped.

Forwards Selection: First fit a model with no covariates, i.e., intercept only. Then fit p separate models, one for each of the possible independent variables, keeping track of the p-values each time. At the next step, consider the model with a single variable in it, the one with the lowest p-value at the first step. Repeat this procedure: at the second step, consider all models with two variables, the one selected at the first step plus each of the others, one at a time, and take as the second model the one in which the newly added variable has the smallest p-value, and so on. Continue to add variables until some stopping criterion is met. A typical criterion is that all p-values left at some stage are above some threshold, like p > 0.15, so no more new variables are added.

Backwards and Forwards Selection: At each stage, consider both dropping and adding variables, checking some criterion (e.g., again based on p-value thresholds). This is a combination of the above two strategies.
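The forward and backward procedures can be sketched in Python as follows. This is a minimal illustration under stated assumptions, not a reference implementation: it assumes NumPy is available, the function names (`design`, `ols_pvalues`, `forward_select`, `backward_eliminate`) are hypothetical, and, to stay self-contained, it approximates the t-test p-values with a normal tail probability, which is reasonable only when n is large relative to the number of parameters (statistical software would use the exact t distribution). The default thresholds, 0.15 for forward and 0.1 for backward, follow the examples in the text.

```python
import math

import numpy as np


def design(X, cols):
    """Hypothetical helper: intercept column plus the chosen predictor columns."""
    return np.column_stack([np.ones(len(X))] + [X[:, j] for j in cols])


def ols_pvalues(D, y):
    """Fit OLS on design matrix D and return approximate two-sided p-values,
    one per coefficient (intercept first)."""
    n, k = D.shape
    beta, *_ = np.linalg.lstsq(D, y, rcond=None)
    resid = y - D @ beta
    sigma2 = resid @ resid / (n - k)              # residual variance estimate
    se = np.sqrt(np.diag(sigma2 * np.linalg.inv(D.T @ D)))
    t = beta / se
    # Assumption: normal approximation to the t distribution (fine for large n - k).
    return [math.erfc(abs(ti) / math.sqrt(2)) for ti in t]


def forward_select(X, y, threshold=0.15):
    """Add, one at a time, the variable whose new coefficient has the smallest p-value."""
    selected, remaining = [], list(range(X.shape[1]))
    while remaining:
        # The candidate j is the last column, so its p-value is the last entry.
        pvals = {j: ols_pvalues(design(X, selected + [j]), y)[-1] for j in remaining}
        best = min(pvals, key=pvals.get)
        if pvals[best] > threshold:               # all candidates above threshold: stop
            break
        selected.append(best)
        remaining.remove(best)
    return selected


def backward_eliminate(X, y, threshold=0.1):
    """Start from the full model and repeatedly drop the variable with the largest p-value."""
    selected = list(range(X.shape[1]))
    while selected:
        pvals = ols_pvalues(design(X, selected), y)[1:]   # skip the intercept
        worst = max(range(len(selected)), key=lambda i: pvals[i])
        if pvals[worst] < threshold:              # all remaining below threshold: stop
            break
        del selected[worst]
    return selected


# Simulated data: only columns 0 and 1 actually affect y.
rng = np.random.default_rng(0)
X = rng.normal(size=(200, 5))
y = 1.0 + 3.0 * X[:, 0] - 2.0 * X[:, 1] + rng.normal(size=200)
print("forward: ", forward_select(X, y))
print("backward:", backward_eliminate(X, y))
```

Because the thresholds are arbitrary, an irrelevant column can occasionally pass them by chance, so the two procedures need not return exactly the same set of variables.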
All subsets regression: An alternative to backwards/forwards procedures; a generic term describing the idea of calculating some “fit” criterion over all possible models. We will see some of these below. In general, if there are $p$ potential predictor variables, there will be $2^p$ possible models, since each variable is either in or out of the model. For example, if there are five possible X variables, there will be $2^5 = 32$ models, and so on.

AIC criterion: Stands for Akaike's Information Criterion. For each model, calculate

$$\text{AIC} = n\ln(\text{SSE}) - n\ln(n) + 2p,$$

where SSE is the usual residual sum of squares from that model, $p$ is the number of parameters in the current model, and $n$ is the sample size. After doing this for all possible models, the “best” model is the one with the smallest AIC. Note that the AIC is formed from three terms. The first is a measure of fit: $n\ln(\text{SSE})$ increases with the residual sum of squares, so better-fitting models give smaller values. The second term, $n\ln(n)$, is a constant for a given data set and plays no role in selecting the model. The third term, $2p$, is a “penalty” for adding more terms to the model. It is needed for “balance” because the first term never increases (and typically decreases) as more terms are added to the model.
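To make all subsets regression with the AIC concrete, here is a sketch that enumerates every subset of $k$ candidate predictors ($2^k$ models, including the intercept-only model) and scores each with AIC = n ln(SSE) − n ln(n) + 2p. It assumes NumPy; `aic` and `all_subsets_aic` are hypothetical names, $k$ is used for the number of candidate predictors to avoid a clash with the $p$ in the AIC, and $p$ is taken to count every coefficient including the intercept.

```python
import itertools
import math

import numpy as np


def aic(D, y):
    """AIC = n ln(SSE) - n ln(n) + 2p, with p = number of columns of D (intercept included)."""
    n, p = D.shape
    beta, *_ = np.linalg.lstsq(D, y, rcond=None)
    sse = float(np.sum((y - D @ beta) ** 2))
    return n * math.log(sse) - n * math.log(n) + 2 * p


def all_subsets_aic(X, y):
    """Score every subset of predictor columns and return (AIC, cols) pairs, best first."""
    n, k = X.shape
    scores = []
    for size in range(k + 1):
        for cols in itertools.combinations(range(k), size):
            D = np.column_stack([np.ones(n)] + [X[:, j] for j in cols])
            scores.append((aic(D, y), cols))
    scores.sort()                                 # smallest AIC first
    return scores


# Simulated data: only columns 0 and 2 actually affect y.
rng = np.random.default_rng(1)
X = rng.normal(size=(100, 4))
y = 2.0 + 1.5 * X[:, 0] - 1.0 * X[:, 2] + rng.normal(size=100)
ranked = all_subsets_aic(X, y)
print(len(ranked))                                # 16 models: 2**4
best_aic, best_cols = ranked[0]
print(best_cols, round(best_aic, 2))
```

Counting the intercept in $p$ (or not) shifts every model's AIC by the same amount, so the ranking is unchanged. The number of fits doubles with each extra predictor, which is why exhaustive search becomes impractical when there are many candidates.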
Mallows $C_p$ criterion: Attempts to measure the bias of a regression model. See the textbook for a full description; we omit the details here.

$R^2$ criterion: Choose the model with the largest $R^2$. In general, this will simply be the largest model, since $R^2$ never decreases when variables are added, so it is not a very useful criterion. Can be helpful in choosing