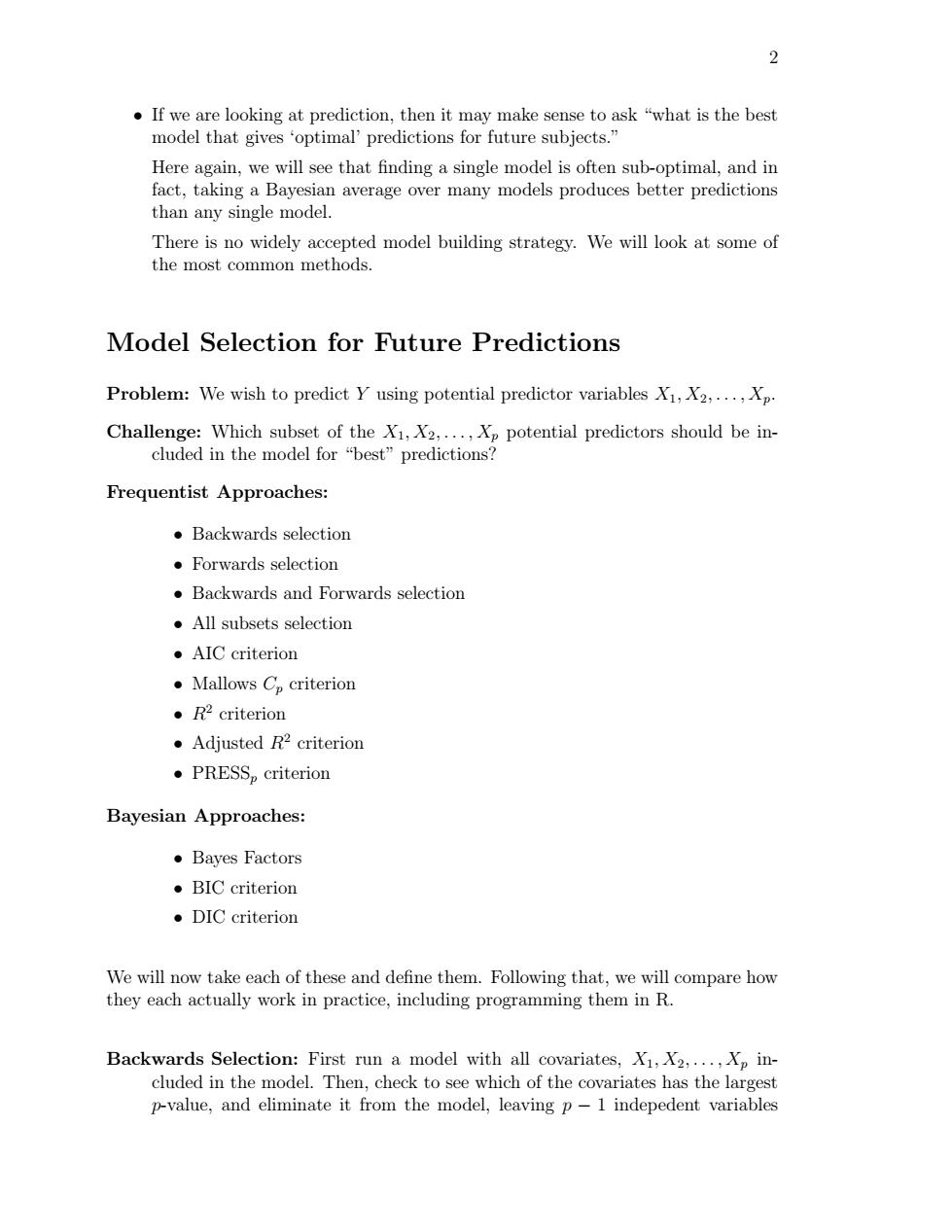

• If we are looking at prediction, then it may make sense to ask, "What is the best model that gives 'optimal' predictions for future subjects?" Here again, we will see that finding a single model is often sub-optimal, and in fact, taking a Bayesian average over many models produces better predictions than any single model.

There is no widely accepted model building strategy. We will look at some of the most common methods.

Model Selection for Future Predictions

Problem: We wish to predict Y using potential predictor variables X1, X2, ..., Xp.

Challenge: Which subset of the X1, X2, ..., Xp potential predictors should be included in the model for "best" predictions?
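To get a feel for the size of this challenge, it helps to count the candidate models. As a rough sketch, assume main effects only (no interactions or transformations) and an intercept that is always included; both are assumptions made here for illustration. Each of the p predictors is then either in or out of the model, so summing over the number k of included predictors gives

$$
\#\{\text{candidate models}\} \;=\; \sum_{k=0}^{p} \binom{p}{k} \;=\; 2^{p}.
$$

For example, p = 10 already gives 2^10 = 1,024 candidate models, and p = 20 gives 2^20 = 1,048,576. Allowing interactions or transformed predictors would enlarge the count further.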
Frequentist Approaches:

• Backwards selection
• Forwards selection
• Backwards and Forwards selection
• All subsets selection
• AIC criterion
• Mallows Cp criterion
• R² criterion
• Adjusted R² criterion
• PRESSp criterion

Bayesian Approaches:

• Bayes Factors
• BIC criterion
• DIC criterion

We will now define each of these in turn. Following that, we will compare how each works in practice, including programming them in R.

Backwards Selection: First run a model with all covariates, X1, X2, ..., Xp, included in the model. Then check which of the covariates has the largest p-value, and eliminate it from the model, leaving p − 1 independent variables