正在加载图片...

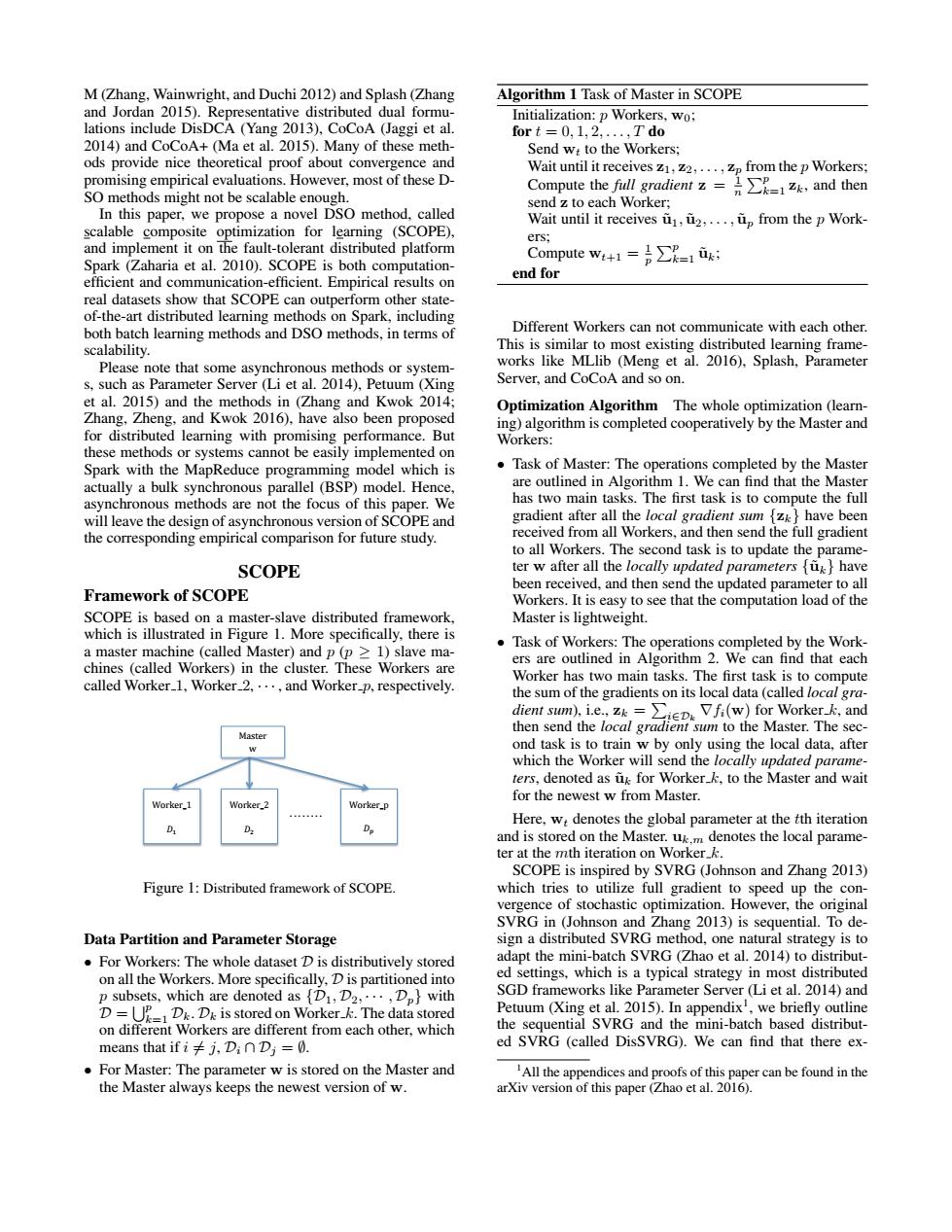

M(Zhang,Wainwright,and Duchi 2012)and Splash(Zhang Algorithm 1 Task of Master in SCOPE and Jordan 2015).Representative distributed dual formu- Initialization:p Workers,wo; lations include DisDCA (Yang 2013),CoCoA (Jaggi et al. fort =0,1,2,....T do 2014)and CoCoA+(Ma et al.2015).Many of these meth- Send w:to the Workers; ods provide nice theoretical proof about convergence and Wait until it receives z1,Z2,...,p from the p Workers; promising empirical evaluations.However,most of these D- SO methods might not be scalable enough. Compute the full&radient.=是∑-1zk,and then send z to each Worker; In this paper,we propose a novel DSO method,called Wait until it receives u,u2,...,p from the p Work- scalable composite optimization for learning (SCOPE), ers; and implement it on the fault-tolerant distributed platform Spark(Zaharia et al.2010).SCOPE is both computation- Compute w+1=号∑R=1a: end for efficient and communication-efficient.Empirical results on real datasets show that SCOPE can outperform other state- of-the-art distributed learning methods on Spark,including both batch learning methods and DSO methods,in terms of Different Workers can not communicate with each other. scalability. This is similar to most existing distributed learning frame- Please note that some asynchronous methods or system- works like MLlib (Meng et al.2016),Splash,Parameter s.such as Parameter Server (Li et al.2014),Petuum (Xing Server,and CoCoA and so on. et al.2015)and the methods in (Zhang and Kwok 2014; Optimization Algorithm The whole optimization (learn- Zhang,Zheng,and Kwok 2016).have also been proposed ing)algorithm is completed cooperatively by the Master and for distributed learning with promising performance.But Workers: these methods or systems cannot be easily implemented on Spark with the MapReduce programming model which is Task of Master:The operations completed by the Master actually a bulk synchronous parallel (BSP)model.Hence, are outlined in Algorithm 1.We can find that the Master asynchronous methods are not the focus of this paper.We has two main tasks.The first task is to compute the full will leave the design of asynchronous version of SCOPE and gradient after all the local gradient sum [zk}have been the corresponding empirical comparison for future study. received from all Workers,and then send the full gradient to all Workers.The second task is to update the parame- SCOPE ter w after all the locally updated parameters u have Framework of SCOPE been received,and then send the updated parameter to all Workers.It is easy to see that the computation load of the SCOPE is based on a master-slave distributed framework, Master is lightweight. which is illustrated in Figure 1.More specifically,there is a master machine (called Master)and p(p 1)slave ma- Task of Workers:The operations completed by the Work- ers are outlined in Algorithm 2.We can find that each chines (called Workers)in the cluster.These Workers are called Worker_1,Worker_2....,and Worker-p,respectively. Worker has two main tasks.The first task is to compute the sum of the gradients on its local data (called local gra- dient sum).i.e..=Vfi(w)for Worker k,and then send the local gradient 'sum to the Master.The sec- Master ond task is to train w by only using the local data,after which the Worker will send the locally updated parame- ters,denoted as uk for Workerk,to the Master and wait for the newest w from Master. Here,w:denotes the global parameter at the tth iteration and is stored on the Master.uk.m denotes the local parame- ter at the mth iteration on Worker k. SCOPE is inspired by SVRG (Johnson and Zhang 2013) Figure 1:Distributed framework of SCOPE. which tries to utilize full gradient to speed up the con- vergence of stochastic optimization.However,the original SVRG in (Johnson and Zhang 2013)is sequential.To de- Data Partition and Parameter Storage sign a distributed SVRG method,one natural strategy is to For Workers:The whole dataset D is distributively stored adapt the mini-batch SVRG (Zhao et al.2014)to distribut- on all the Workers.More specifically,D is partitioned into ed settings,which is a typical strategy in most distributed p subsets,which are denoted as [D1,D2,..,Dp}with SGD frameworks like Parameter Server (Li et al.2014)and D=D&.De is stored on Worker-k.The data stored Petuum (Xing et al.2015).In appendix',we briefly outline on different Workers are different from each other,which the sequential SVRG and the mini-batch based distribut- means that if i j,DinDj=0. ed SVRG(called DisSVRG).We can find that there ex- For Master:The parameter w is stored on the Master and All the appendices and proofs of this paper can be found in the the Master always keeps the newest version of w. arXiv version of this paper(Zhao et al.2016).M (Zhang, Wainwright, and Duchi 2012) and Splash (Zhang and Jordan 2015). Representative distributed dual formulations include DisDCA (Yang 2013), CoCoA (Jaggi et al. 2014) and CoCoA+ (Ma et al. 2015). Many of these methods provide nice theoretical proof about convergence and promising empirical evaluations. However, most of these DSO methods might not be scalable enough. In this paper, we propose a novel DSO method, called scalable composite optimization for learning (SCOPE), and implement it on the fault-tolerant distributed platform Spark (Zaharia et al. 2010). SCOPE is both computationefficient and communication-efficient. Empirical results on real datasets show that SCOPE can outperform other stateof-the-art distributed learning methods on Spark, including both batch learning methods and DSO methods, in terms of scalability. Please note that some asynchronous methods or systems, such as Parameter Server (Li et al. 2014), Petuum (Xing et al. 2015) and the methods in (Zhang and Kwok 2014; Zhang, Zheng, and Kwok 2016), have also been proposed for distributed learning with promising performance. But these methods or systems cannot be easily implemented on Spark with the MapReduce programming model which is actually a bulk synchronous parallel (BSP) model. Hence, asynchronous methods are not the focus of this paper. We will leave the design of asynchronous version of SCOPE and the corresponding empirical comparison for future study. SCOPE Framework of SCOPE SCOPE is based on a master-slave distributed framework, which is illustrated in Figure 1. More specifically, there is a master machine (called Master) and p (p ≥ 1) slave machines (called Workers) in the cluster. These Workers are called Worker 1, Worker 2, · · · , and Worker p, respectively. Master w Worker_1 �! . . . . . . . . Worker_p �! Worker_2 �! Figure 1: Distributed framework of SCOPE. Data Partition and Parameter Storage • For Workers: The whole dataset D is distributively stored on all the Workers. More specifically, D is partitioned into p subsets, which are denoted as {D1, D2, · · · , Dp} with D = Sp k=1 Dk. Dk is stored on Worker k. The data stored on different Workers are different from each other, which means that if i 6= j, Di ∩ Dj = ∅. • For Master: The parameter w is stored on the Master and the Master always keeps the newest version of w. Algorithm 1 Task of Master in SCOPE Initialization: p Workers, w0; for t = 0, 1, 2, . . . , T do Send wt to the Workers; Wait until it receives z1, z2, . . . , zp from the p Workers; Compute the full gradient z = 1 n Pp k=1 zk, and then send z to each Worker; Wait until it receives u˜1, u˜2, . . . , u˜p from the p Workers; Compute wt+1 = 1 p Pp k=1 u˜k; end for Different Workers can not communicate with each other. This is similar to most existing distributed learning frameworks like MLlib (Meng et al. 2016), Splash, Parameter Server, and CoCoA and so on. Optimization Algorithm The whole optimization (learning) algorithm is completed cooperatively by the Master and Workers: • Task of Master: The operations completed by the Master are outlined in Algorithm 1. We can find that the Master has two main tasks. The first task is to compute the full gradient after all the local gradient sum {zk} have been received from all Workers, and then send the full gradient to all Workers. The second task is to update the parameter w after all the locally updated parameters {u˜k} have been received, and then send the updated parameter to all Workers. It is easy to see that the computation load of the Master is lightweight. • Task of Workers: The operations completed by the Workers are outlined in Algorithm 2. We can find that each Worker has two main tasks. The first task is to compute the sum of the gradients on its local data (called local gradient sum), i.e., zk = P i∈Dk ∇fi(w) for Worker k, and then send the local gradient sum to the Master. The second task is to train w by only using the local data, after which the Worker will send the locally updated parameters, denoted as u˜k for Worker k, to the Master and wait for the newest w from Master. Here, wt denotes the global parameter at the tth iteration and is stored on the Master. uk,m denotes the local parameter at the mth iteration on Worker k. SCOPE is inspired by SVRG (Johnson and Zhang 2013) which tries to utilize full gradient to speed up the convergence of stochastic optimization. However, the original SVRG in (Johnson and Zhang 2013) is sequential. To design a distributed SVRG method, one natural strategy is to adapt the mini-batch SVRG (Zhao et al. 2014) to distributed settings, which is a typical strategy in most distributed SGD frameworks like Parameter Server (Li et al. 2014) and Petuum (Xing et al. 2015). In appendix1 , we briefly outline the sequential SVRG and the mini-batch based distributed SVRG (called DisSVRG). We can find that there ex- 1All the appendices and proofs of this paper can be found in the arXiv version of this paper (Zhao et al. 2016)