正在加载图片...

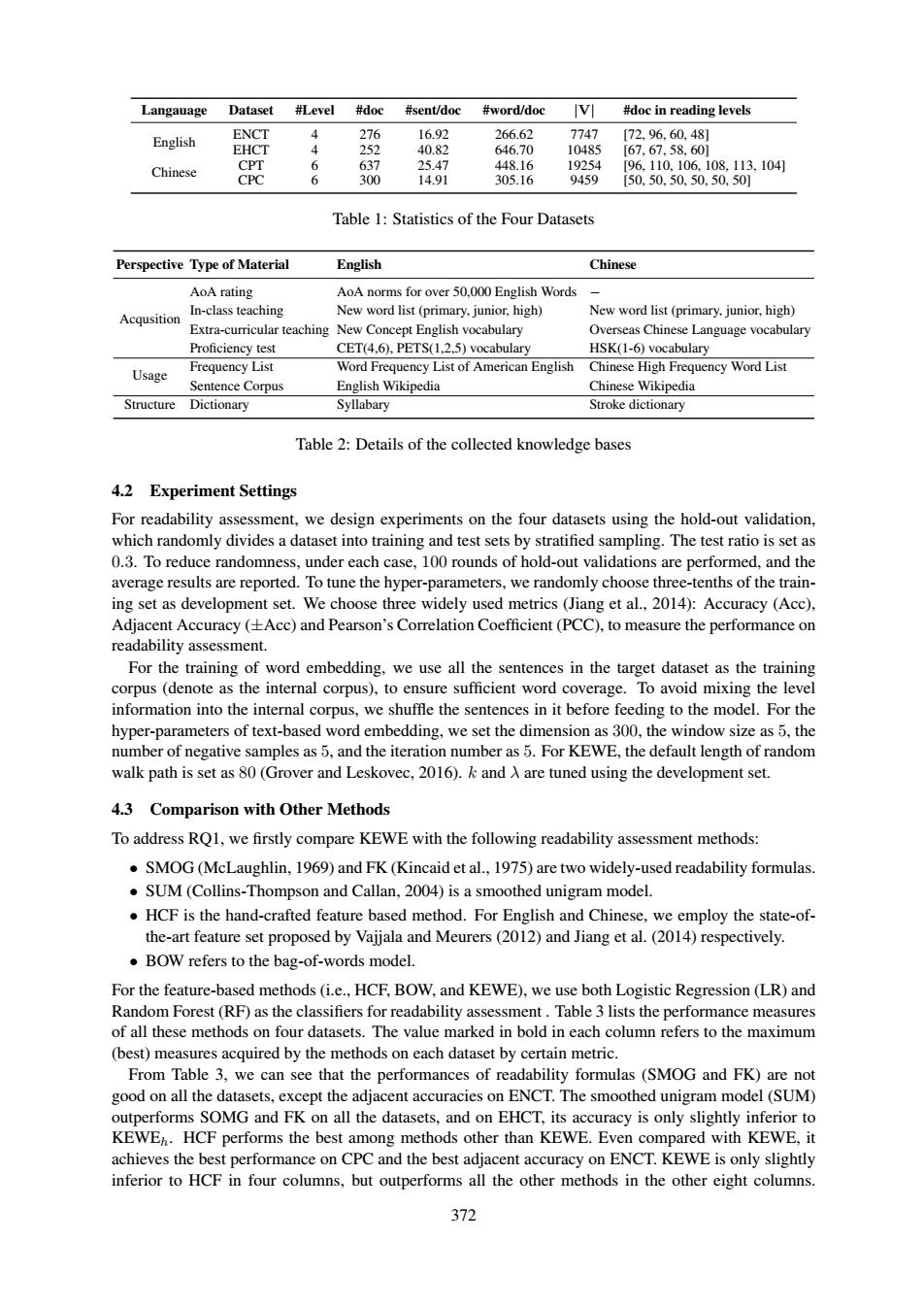

Langauage Dataset #Level #doc #sent/doc #word/doc V #doc in reading levels ENCT 4 276 16.92 7747 English 266.62 [72,96.60,48 EHCT 252 40.82 646.70 10485 [67,67,58.60] Chinese CPT 6 637 25.47 448.16 19254 96,110,106.108.113,104] CPC 6 3 14.91 305.16 9459 [50.50.50.50.50.50] Table 1:Statistics of the Four Datasets Perspective Type of Material English Chinese AoA rating AoA norms for over 50,000 English Words In-class teaching New word list (primary,junior,high) New word list(primary,junior,high) Acqusition Extra-curricular teaching New Concept English vocabulary Overseas Chinese Language vocabulary Proficiency test CET(4,6),PETS(1,2,5)vocabulary HSK(1-6)vocabulary Frequency List Usage Word Frequency List of American English Chinese High Frequency Word List Sentence Corpus English Wikipedia Chinese Wikipedia Structure Dictionary Syllabary Stroke dictionary Table 2:Details of the collected knowledge bases 4.2 Experiment Settings For readability assessment,we design experiments on the four datasets using the hold-out validation, which randomly divides a dataset into training and test sets by stratified sampling.The test ratio is set as 0.3.To reduce randomness,under each case,100 rounds of hold-out validations are performed,and the average results are reported.To tune the hyper-parameters,we randomly choose three-tenths of the train- ing set as development set.We choose three widely used metrics (Jiang et al.,2014):Accuracy (Acc), Adjacent Accuracy (+Acc)and Pearson's Correlation Coefficient(PCC),to measure the performance on readability assessment. For the training of word embedding,we use all the sentences in the target dataset as the training corpus (denote as the internal corpus),to ensure sufficient word coverage.To avoid mixing the level information into the internal corpus,we shuffle the sentences in it before feeding to the model.For the hyper-parameters of text-based word embedding,we set the dimension as 300,the window size as 5,the number of negative samples as 5,and the iteration number as 5.For KEWE,the default length of random walk path is set as 80(Grover and Leskovec,2016).k and A are tuned using the development set. 4.3 Comparison with Other Methods To address RQ1,we firstly compare KEWE with the following readability assessment methods: SMOG(McLaughlin,1969)and FK(Kincaid et al.,1975)are two widely-used readability formulas. .SUM (Collins-Thompson and Callan,2004)is a smoothed unigram model. HCF is the hand-crafted feature based method.For English and Chinese,we employ the state-of- the-art feature set proposed by Vajjala and Meurers(2012)and Jiang et al.(2014)respectively. BOW refers to the bag-of-words model. For the feature-based methods(i.e.,HCF,BOW,and KEWE),we use both Logistic Regression(LR)and Random Forest(RF)as the classifiers for readability assessment.Table 3 lists the performance measures of all these methods on four datasets.The value marked in bold in each column refers to the maximum (best)measures acquired by the methods on each dataset by certain metric. From Table 3,we can see that the performances of readability formulas (SMOG and FK)are not good on all the datasets,except the adjacent accuracies on ENCT.The smoothed unigram model(SUM) outperforms SOMG and FK on all the datasets,and on EHCT,its accuracy is only slightly inferior to KEWEh.HCF performs the best among methods other than KEWE.Even compared with KEWE,it achieves the best performance on CPC and the best adjacent accuracy on ENCT.KEWE is only slightly inferior to HCF in four columns,but outperforms all the other methods in the other eight columns 372372 Langauage Dataset #Level #doc #sent/doc #word/doc |V| #doc in reading levels English ENCT 4 276 16.92 266.62 7747 [72, 96, 60, 48] EHCT 4 252 40.82 646.70 10485 [67, 67, 58, 60] Chinese CPT 6 637 25.47 448.16 19254 [96, 110, 106, 108, 113, 104] CPC 6 300 14.91 305.16 9459 [50, 50, 50, 50, 50, 50] Table 1: Statistics of the Four Datasets Perspective Type of Material English Chinese Acqusition AoA rating AoA norms for over 50,000 English Words − In-class teaching New word list (primary, junior, high) New word list (primary, junior, high) Extra-curricular teaching New Concept English vocabulary Overseas Chinese Language vocabulary Proficiency test CET(4,6), PETS(1,2,5) vocabulary HSK(1-6) vocabulary Usage Frequency List Word Frequency List of American English Chinese High Frequency Word List Sentence Corpus English Wikipedia Chinese Wikipedia Structure Dictionary Syllabary Stroke dictionary Table 2: Details of the collected knowledge bases 4.2 Experiment Settings For readability assessment, we design experiments on the four datasets using the hold-out validation, which randomly divides a dataset into training and test sets by stratified sampling. The test ratio is set as 0.3. To reduce randomness, under each case, 100 rounds of hold-out validations are performed, and the average results are reported. To tune the hyper-parameters, we randomly choose three-tenths of the training set as development set. We choose three widely used metrics (Jiang et al., 2014): Accuracy (Acc), Adjacent Accuracy (±Acc) and Pearson’s Correlation Coefficient (PCC), to measure the performance on readability assessment. For the training of word embedding, we use all the sentences in the target dataset as the training corpus (denote as the internal corpus), to ensure sufficient word coverage. To avoid mixing the level information into the internal corpus, we shuffle the sentences in it before feeding to the model. For the hyper-parameters of text-based word embedding, we set the dimension as 300, the window size as 5, the number of negative samples as 5, and the iteration number as 5. For KEWE, the default length of random walk path is set as 80 (Grover and Leskovec, 2016). k and λ are tuned using the development set. 4.3 Comparison with Other Methods To address RQ1, we firstly compare KEWE with the following readability assessment methods: • SMOG (McLaughlin, 1969) and FK (Kincaid et al., 1975) are two widely-used readability formulas. • SUM (Collins-Thompson and Callan, 2004) is a smoothed unigram model. • HCF is the hand-crafted feature based method. For English and Chinese, we employ the state-ofthe-art feature set proposed by Vajjala and Meurers (2012) and Jiang et al. (2014) respectively. • BOW refers to the bag-of-words model. For the feature-based methods (i.e., HCF, BOW, and KEWE), we use both Logistic Regression (LR) and Random Forest (RF) as the classifiers for readability assessment . Table 3 lists the performance measures of all these methods on four datasets. The value marked in bold in each column refers to the maximum (best) measures acquired by the methods on each dataset by certain metric. From Table 3, we can see that the performances of readability formulas (SMOG and FK) are not good on all the datasets, except the adjacent accuracies on ENCT. The smoothed unigram model (SUM) outperforms SOMG and FK on all the datasets, and on EHCT, its accuracy is only slightly inferior to KEWEh. HCF performs the best among methods other than KEWE. Even compared with KEWE, it achieves the best performance on CPC and the best adjacent accuracy on ENCT. KEWE is only slightly inferior to HCF in four columns, but outperforms all the other methods in the other eight columns