正在加载图片...

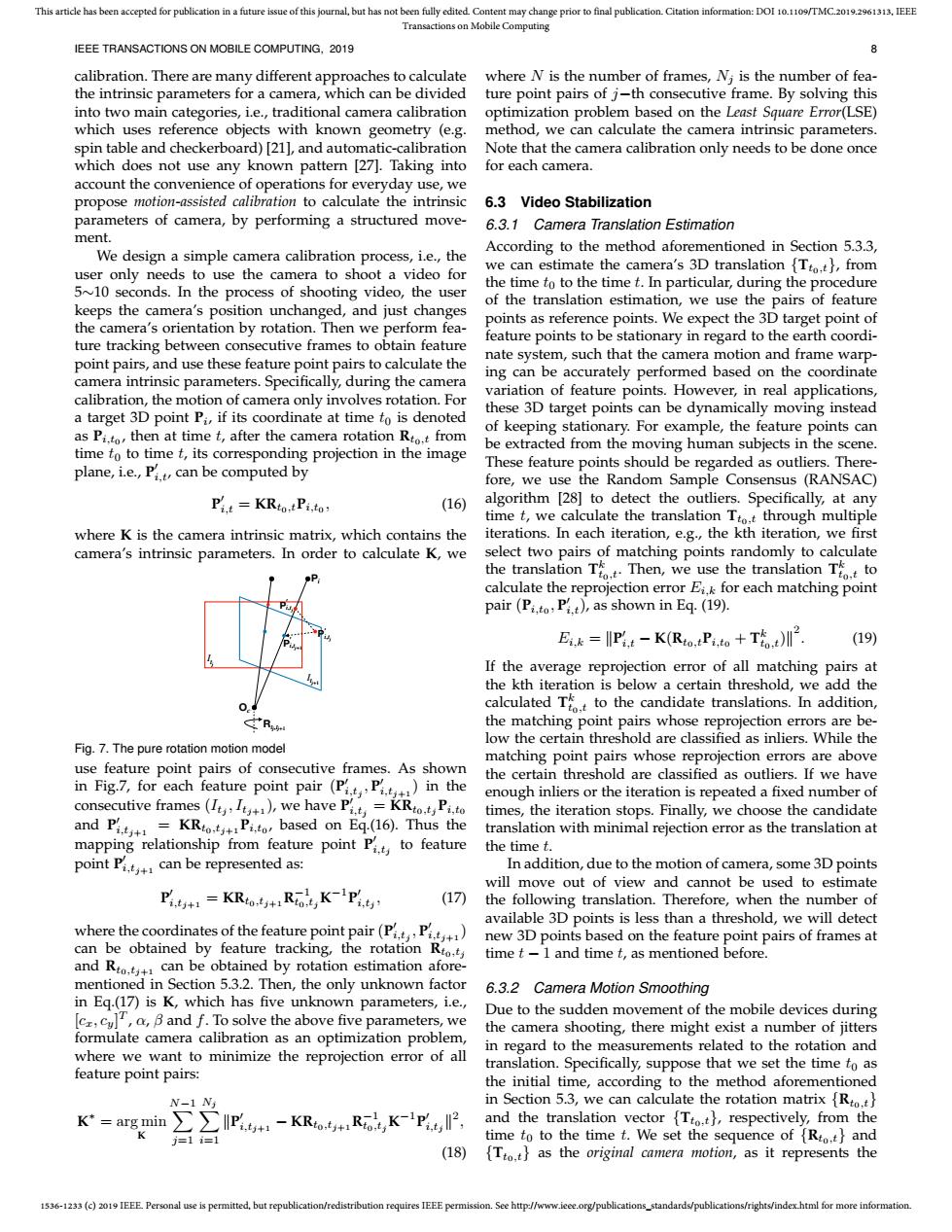

This article has been accepted for publication in a future issue of this journal,but has not been fully edited.Content may change prior to final publication.Citation information:DOI 10.1109/TMC.2019.2961313.IEEE Transactions on Mobile Computing IEEE TRANSACTIONS ON MOBILE COMPUTING,2019 calibration.There are many different approaches to calculate where N is the number of frames,Ni is the number of fea- the intrinsic parameters for a camera,which can be divided ture point pairs of j-th consecutive frame.By solving this into two main categories,ie.,traditional camera calibration optimization problem based on the Least Square Error(LSE) which uses reference objects with known geometry (e.g. method,we can calculate the camera intrinsic parameters. spin table and checkerboard)[21],and automatic-calibration Note that the camera calibration only needs to be done once which does not use any known pattern [27].Taking into for each camera. account the convenience of operations for everyday use,we propose motion-assisted calibration to calculate the intrinsic 6.3 Video Stabilization parameters of camera,by performing a structured move- 6.3.1 Camera Translation Estimation ment. According to the method aforementioned in Section 5.3.3, We design a simple camera calibration process,i.e.,the user only needs to use the camera to shoot a video for we can estimate the camera's 3D translation [Tto.t},from 5~10 seconds.In the process of shooting video,the user the time toto the time t.In particular,during the procedure of the translation estimation,we use the pairs of feature keeps the camera's position unchanged,and just changes points as reference points.We expect the 3D target point of the camera's orientation by rotation.Then we perform fea- ture tracking between consecutive frames to obtain feature feature points to be stationary in regard to the earth coordi- nate system,such that the camera motion and frame warp- point pairs,and use these feature point pairs to calculate the camera intrinsic parameters.Specifically,during the camera ing can be accurately performed based on the coordinate calibration,the motion of camera only involves rotation.For variation of feature points.However,in real applications, a target 3D point Pi,if its coordinate at time to is denoted these 3D target points can be dynamically moving instead as Pi.to,then at time t,after the camera rotation Rto.t from of keeping stationary.For example,the feature points can time to to time t,its corresponding projection in the image be extracted from the moving human subjects in the scene. plane,ie.,Pit,can be computed by These feature points should be regarded as outliers.There- fore,we use the Random Sample Consensus (RANSAC) Pit KRto.tPi.to (16) algorithm [28 to detect the outliers.Specifically,at any time t,we calculate the translation Tto.t through multiple where K is the camera intrinsic matrix,which contains the iterations.In each iteration,e.g.,the kth iteration,we first camera's intrinsic parameters.In order to calculate K,we select two pairs of matching points randomly to calculate the translation T Then,we use the translation Tt to calculate the reprojection error Ei.k for each matching point pair (Pi,to,Pi.t),as shown in Eq.(19). Ei=Pit -K(Rto.Pito+T) (19) If the average reprojection error of all matching pairs at the kth iteration is below a certain threshold,we add the calculated Tt to the candidate translations.In addition, the matching point pairs whose reprojection errors are be- low the certain threshold are classified as inliers.While the Fig.7.The pure rotation motion model matching point pairs whose reprojection errors are above use feature point pairs of consecutive frames.As shown the certain threshold are classified as outliers.If we have in Fig.7,for each feature point pair (PP)in the enough inliers or the iteration is repeated a fixed number of consecutive frames (ItI),we have Pi.t=KRto.t,Pi.to times,the iteration stops.Finally,we choose the candidate and P=KRPito based on Eq-(16).Thus the translation with minimal rejection error as the translation at mapping relationship from feature point Pit,to feature the time t. point Pi.t can be represented as: In addition,due to the motion of camera,some 3D points will move out of view and cannot be used to estimate 卫t+=KRto.t+Ro,K-l卫 (17 the following translation.Therefore,when the number of where the coordinates of the feature point pair(PP) available 3D points is less than a threshold,we will detect can be obtained by feature tracking,the rotation Rto.t new 3D points based on the feature point pairs of frames at time t-1 and time t,as mentioned before. and Ro.can be obtained by rotation estimation afore- mentioned in Section 5.3.2.Then,the only unknown factor 6.3.2 Camera Motion Smoothing in Eq.(17)is K,which has five unknown parameters,i.e., [cr,cul,a,B and f.To solve the above five parameters,we Due to the sudden movement of the mobile devices during the camera shooting,there might exist a number of jitters formulate camera calibration as an optimization problem, in regard to the measurements related to the rotation and where we want to minimize the reprojection error of all translation.Specifically,suppose that we set the time to as feature point pairs: the initial time,according to the method aforementioned N-1N; in Section 5.3,we can calculate the rotation matrix [Rto.t} K*=argmin∑∑IP+1-KRo+Ro,K-lP2, and the translation vector [Tto.t},respectively,from the j=1=1 time to to the time t.We set the sequence of {Rto.t}and (18)[Tto.t}as the original camera motion,as it represents the 1536-1233(c)2019 IEEE Personal use is permitted,but republication/redistribution requires IEEE permission.See http://www.ieee.org/publications_standards/publications/rights/index.html for more information.1536-1233 (c) 2019 IEEE. Personal use is permitted, but republication/redistribution requires IEEE permission. See http://www.ieee.org/publications_standards/publications/rights/index.html for more information. This article has been accepted for publication in a future issue of this journal, but has not been fully edited. Content may change prior to final publication. Citation information: DOI 10.1109/TMC.2019.2961313, IEEE Transactions on Mobile Computing IEEE TRANSACTIONS ON MOBILE COMPUTING, 2019 8 calibration. There are many different approaches to calculate the intrinsic parameters for a camera, which can be divided into two main categories, i.e., traditional camera calibration which uses reference objects with known geometry (e.g. spin table and checkerboard) [21], and automatic-calibration which does not use any known pattern [27]. Taking into account the convenience of operations for everyday use, we propose motion-assisted calibration to calculate the intrinsic parameters of camera, by performing a structured movement. We design a simple camera calibration process, i.e., the user only needs to use the camera to shoot a video for 5∼10 seconds. In the process of shooting video, the user keeps the camera’s position unchanged, and just changes the camera’s orientation by rotation. Then we perform feature tracking between consecutive frames to obtain feature point pairs, and use these feature point pairs to calculate the camera intrinsic parameters. Specifically, during the camera calibration, the motion of camera only involves rotation. For a target 3D point Pi , if its coordinate at time t0 is denoted as Pi,t0 , then at time t, after the camera rotation Rt0,t from time t0 to time t, its corresponding projection in the image plane, i.e., P 0 i,t, can be computed by P 0 i,t = KRt0,tPi,t0 , (16) where K is the camera intrinsic matrix, which contains the camera’s intrinsic parameters. In order to calculate K, we Oc Rtj ,tj+1 Pi P ′ i,tj P ′ i,tj+1 Itj Itj+1 P ′ i,tj Fig. 7. The pure rotation motion model use feature point pairs of consecutive frames. As shown in Fig.7, for each feature point pair (P 0 i,tj , P 0 i,tj+1 ) in the consecutive frames (Itj , Itj+1 ), we have P 0 i,tj = KRt0,tjPi,t0 and P 0 i,tj+1 = KRt0,tj+1 Pi,t0 , based on Eq.(16). Thus the mapping relationship from feature point P 0 i,tj to feature point P 0 i,tj+1 can be represented as: P 0 i,tj+1 = KRt0,tj+1R −1 t0,tjK −1P 0 i,tj , (17) where the coordinates of the feature point pair (P 0 i,tj , P 0 i,tj+1 ) can be obtained by feature tracking, the rotation Rt0,tj and Rt0,tj+1 can be obtained by rotation estimation aforementioned in Section 5.3.2. Then, the only unknown factor in Eq.(17) is K, which has five unknown parameters, i.e., [cx, cy] T , α, β and f. To solve the above five parameters, we formulate camera calibration as an optimization problem, where we want to minimize the reprojection error of all feature point pairs: K ∗ = arg min K N X−1 j=1 X Nj i=1 kP 0 i,tj+1 − KRt0,tj+1R −1 t0,tjK −1P 0 i,tj k 2 , (18) where N is the number of frames, Nj is the number of feature point pairs of j−th consecutive frame. By solving this optimization problem based on the Least Square Error(LSE) method, we can calculate the camera intrinsic parameters. Note that the camera calibration only needs to be done once for each camera. 6.3 Video Stabilization 6.3.1 Camera Translation Estimation According to the method aforementioned in Section 5.3.3, we can estimate the camera’s 3D translation {Tt0,t}, from the time t0 to the time t. In particular, during the procedure of the translation estimation, we use the pairs of feature points as reference points. We expect the 3D target point of feature points to be stationary in regard to the earth coordinate system, such that the camera motion and frame warping can be accurately performed based on the coordinate variation of feature points. However, in real applications, these 3D target points can be dynamically moving instead of keeping stationary. For example, the feature points can be extracted from the moving human subjects in the scene. These feature points should be regarded as outliers. Therefore, we use the Random Sample Consensus (RANSAC) algorithm [28] to detect the outliers. Specifically, at any time t, we calculate the translation Tt0,t through multiple iterations. In each iteration, e.g., the kth iteration, we first select two pairs of matching points randomly to calculate the translation T k t0,t. Then, we use the translation T k t0,t to calculate the reprojection error Ei,k for each matching point pair (Pi,t0 , P 0 i,t), as shown in Eq. (19). Ei,k = kP 0 i,t − K(Rt0,tPi,t0 + T k t0,t)k 2 . (19) If the average reprojection error of all matching pairs at the kth iteration is below a certain threshold, we add the calculated T k t0,t to the candidate translations. In addition, the matching point pairs whose reprojection errors are below the certain threshold are classified as inliers. While the matching point pairs whose reprojection errors are above the certain threshold are classified as outliers. If we have enough inliers or the iteration is repeated a fixed number of times, the iteration stops. Finally, we choose the candidate translation with minimal rejection error as the translation at the time t. In addition, due to the motion of camera, some 3D points will move out of view and cannot be used to estimate the following translation. Therefore, when the number of available 3D points is less than a threshold, we will detect new 3D points based on the feature point pairs of frames at time t − 1 and time t, as mentioned before. 6.3.2 Camera Motion Smoothing Due to the sudden movement of the mobile devices during the camera shooting, there might exist a number of jitters in regard to the measurements related to the rotation and translation. Specifically, suppose that we set the time t0 as the initial time, according to the method aforementioned in Section 5.3, we can calculate the rotation matrix {Rt0,t} and the translation vector {Tt0,t}, respectively, from the time t0 to the time t. We set the sequence of {Rt0,t} and {Tt0,t} as the original camera motion, as it represents the