正在加载图片...

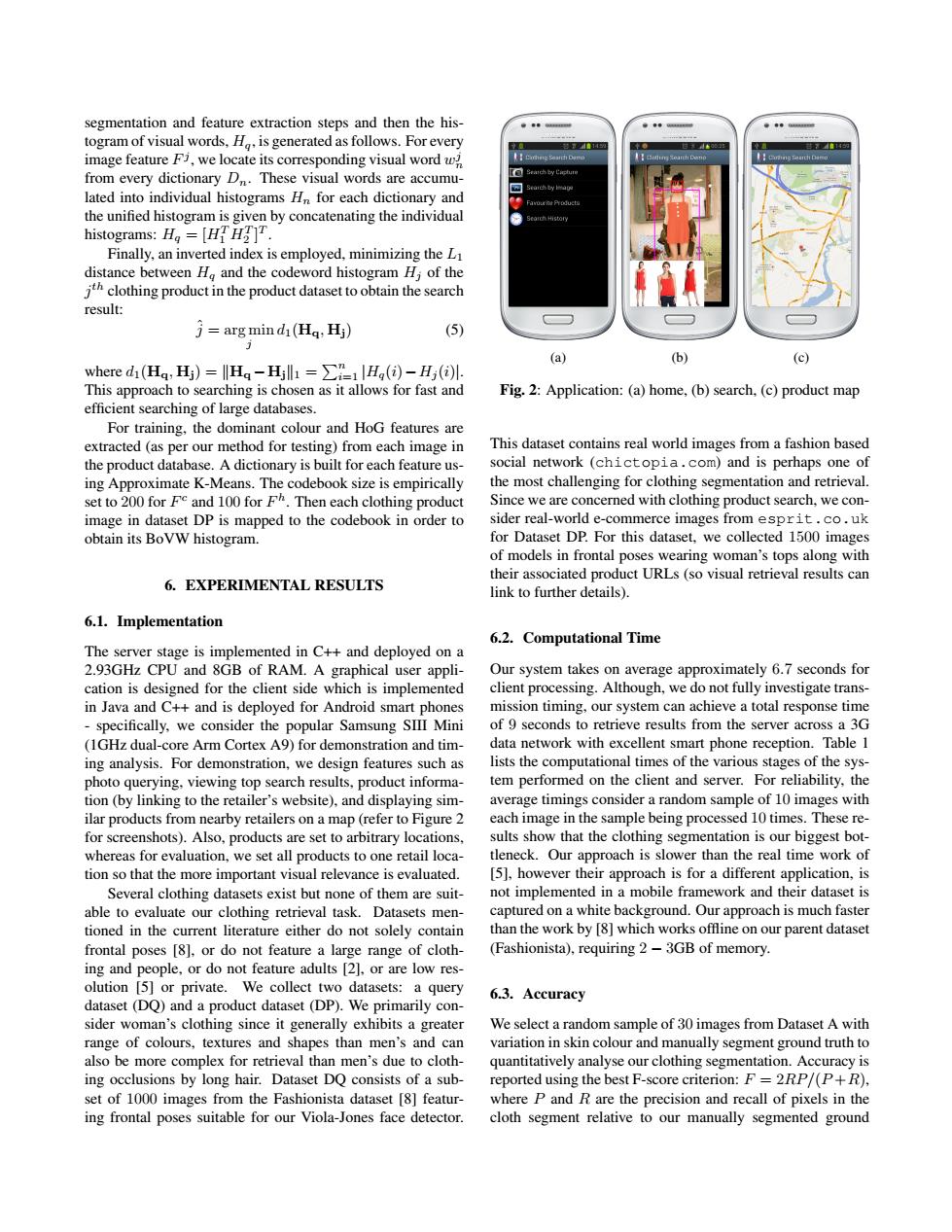

segmentation and feature extraction steps and then the his- togram of visual words,H.is generated as follows.For every 起的 image feature Fi,we locate its corresponding visual word w from every dictionary D.These visual words are accumu- lated into individual histograms Hn for each dictionary and the unified histogram is given by concatenating the individual histograms:H=[HH. Finally,an inverted index is employed,minimizing the L distance between Ho and the codeword histogram H;of the hclothing product in the product dataset to obtain the search result: 方=arg mind山(Hg,H) (5) (a) (b) (c) where di(Ha:Hj)=Ha-Hjll1 =H(i)-Hj(i). This approach to searching is chosen as it allows for fast and Fig.2:Application:(a)home,(b)search,(c)product map efficient searching of large databases. For training,the dominant colour and HoG features are extracted (as per our method for testing)from each image in This dataset contains real world images from a fashion based the product database.A dictionary is built for each feature us- social network (chictopia.com)and is perhaps one of ing Approximate K-Means.The codebook size is empirically the most challenging for clothing segmentation and retrieval. set to 200 for Fe and 100 for h.Then each clothing product Since we are concerned with clothing product search,we con- image in dataset DP is mapped to the codebook in order to sider real-world e-commerce images from esprit.co.uk obtain its BoVW histogram. for Dataset DP.For this dataset,we collected 1500 images of models in frontal poses wearing woman's tops along with their associated product URLs (so visual retrieval results can 6.EXPERIMENTAL RESULTS link to further details) 6.1.Implementation 6.2.Computational Time The server stage is implemented in C++and deployed on a 2.93GHz CPU and 8GB of RAM.A graphical user appli- Our system takes on average approximately 6.7 seconds for cation is designed for the client side which is implemented client processing.Although,we do not fully investigate trans- in Java and C++and is deployed for Android smart phones mission timing,our system can achieve a total response time specifically,we consider the popular Samsung SIII Mini of 9 seconds to retrieve results from the server across a 3G (IGHz dual-core Arm Cortex A9)for demonstration and tim- data network with excellent smart phone reception.Table 1 ing analysis.For demonstration,we design features such as lists the computational times of the various stages of the sys- photo querying,viewing top search results,product informa- tem performed on the client and server.For reliability,the tion (by linking to the retailer's website),and displaying sim- average timings consider a random sample of 10 images with ilar products from nearby retailers on a map(refer to Figure 2 each image in the sample being processed 10 times.These re- for screenshots).Also,products are set to arbitrary locations. sults show that the clothing segmentation is our biggest bot- whereas for evaluation,we set all products to one retail loca- tleneck.Our approach is slower than the real time work of tion so that the more important visual relevance is evaluated. [5],however their approach is for a different application,is Several clothing datasets exist but none of them are suit- not implemented in a mobile framework and their dataset is able to evaluate our clothing retrieval task.Datasets men- captured on a white background.Our approach is much faster tioned in the current literature either do not solely contain than the work by [8]which works offline on our parent dataset frontal poses [8],or do not feature a large range of cloth- (Fashionista),requiring 2-3GB of memory. ing and people,or do not feature adults [2].or are low res- olution [5]or private.We collect two datasets:a query 6.3.Accuracy dataset(DQ)and a product dataset(DP).We primarily con- sider woman's clothing since it generally exhibits a greater We select a random sample of 30 images from Dataset A with range of colours,textures and shapes than men's and can variation in skin colour and manually segment ground truth to also be more complex for retrieval than men's due to cloth- quantitatively analyse our clothing segmentation.Accuracy is ing occlusions by long hair.Dataset DQ consists of a sub- reported using the best F-score criterion:F=2RP/(P+R), set of 1000 images from the Fashionista dataset [8]featur- where P and R are the precision and recall of pixels in the ing frontal poses suitable for our Viola-Jones face detector. cloth segment relative to our manually segmented groundsegmentation and feature extraction steps and then the histogram of visual words, Hq, is generated as follows. For every image feature F j , we locate its corresponding visual word w j n from every dictionary Dn. These visual words are accumulated into individual histograms Hn for each dictionary and the unified histogram is given by concatenating the individual histograms: Hq = [HT 1 HT 2 ] T . Finally, an inverted index is employed, minimizing the L1 distance between Hq and the codeword histogram Hj of the j th clothing product in the product dataset to obtain the search result: ˆj = arg min j d1(Hq, Hj) (5) where d1(Hq, Hj) = kHq −Hjk1 = Pn i=1 |Hq(i)−Hj (i)|. This approach to searching is chosen as it allows for fast and efficient searching of large databases. For training, the dominant colour and HoG features are extracted (as per our method for testing) from each image in the product database. A dictionary is built for each feature using Approximate K-Means. The codebook size is empirically set to 200 for F c and 100 for F h . Then each clothing product image in dataset DP is mapped to the codebook in order to obtain its BoVW histogram. 6. EXPERIMENTAL RESULTS 6.1. Implementation The server stage is implemented in C++ and deployed on a 2.93GHz CPU and 8GB of RAM. A graphical user application is designed for the client side which is implemented in Java and C++ and is deployed for Android smart phones - specifically, we consider the popular Samsung SIII Mini (1GHz dual-core Arm Cortex A9) for demonstration and timing analysis. For demonstration, we design features such as photo querying, viewing top search results, product information (by linking to the retailer’s website), and displaying similar products from nearby retailers on a map (refer to Figure 2 for screenshots). Also, products are set to arbitrary locations, whereas for evaluation, we set all products to one retail location so that the more important visual relevance is evaluated. Several clothing datasets exist but none of them are suitable to evaluate our clothing retrieval task. Datasets mentioned in the current literature either do not solely contain frontal poses [8], or do not feature a large range of clothing and people, or do not feature adults [2], or are low resolution [5] or private. We collect two datasets: a query dataset (DQ) and a product dataset (DP). We primarily consider woman’s clothing since it generally exhibits a greater range of colours, textures and shapes than men’s and can also be more complex for retrieval than men’s due to clothing occlusions by long hair. Dataset DQ consists of a subset of 1000 images from the Fashionista dataset [8] featuring frontal poses suitable for our Viola-Jones face detector. (a) (b) (c) Fig. 2: Application: (a) home, (b) search, (c) product map This dataset contains real world images from a fashion based social network (chictopia.com) and is perhaps one of the most challenging for clothing segmentation and retrieval. Since we are concerned with clothing product search, we consider real-world e-commerce images from esprit.co.uk for Dataset DP. For this dataset, we collected 1500 images of models in frontal poses wearing woman’s tops along with their associated product URLs (so visual retrieval results can link to further details). 6.2. Computational Time Our system takes on average approximately 6.7 seconds for client processing. Although, we do not fully investigate transmission timing, our system can achieve a total response time of 9 seconds to retrieve results from the server across a 3G data network with excellent smart phone reception. Table 1 lists the computational times of the various stages of the system performed on the client and server. For reliability, the average timings consider a random sample of 10 images with each image in the sample being processed 10 times. These results show that the clothing segmentation is our biggest bottleneck. Our approach is slower than the real time work of [5], however their approach is for a different application, is not implemented in a mobile framework and their dataset is captured on a white background. Our approach is much faster than the work by [8] which works offline on our parent dataset (Fashionista), requiring 2 − 3GB of memory. 6.3. Accuracy We select a random sample of 30 images from Dataset A with variation in skin colour and manually segment ground truth to quantitatively analyse our clothing segmentation. Accuracy is reported using the best F-score criterion: F = 2RP/(P +R), where P and R are the precision and recall of pixels in the cloth segment relative to our manually segmented ground