正在加载图片...

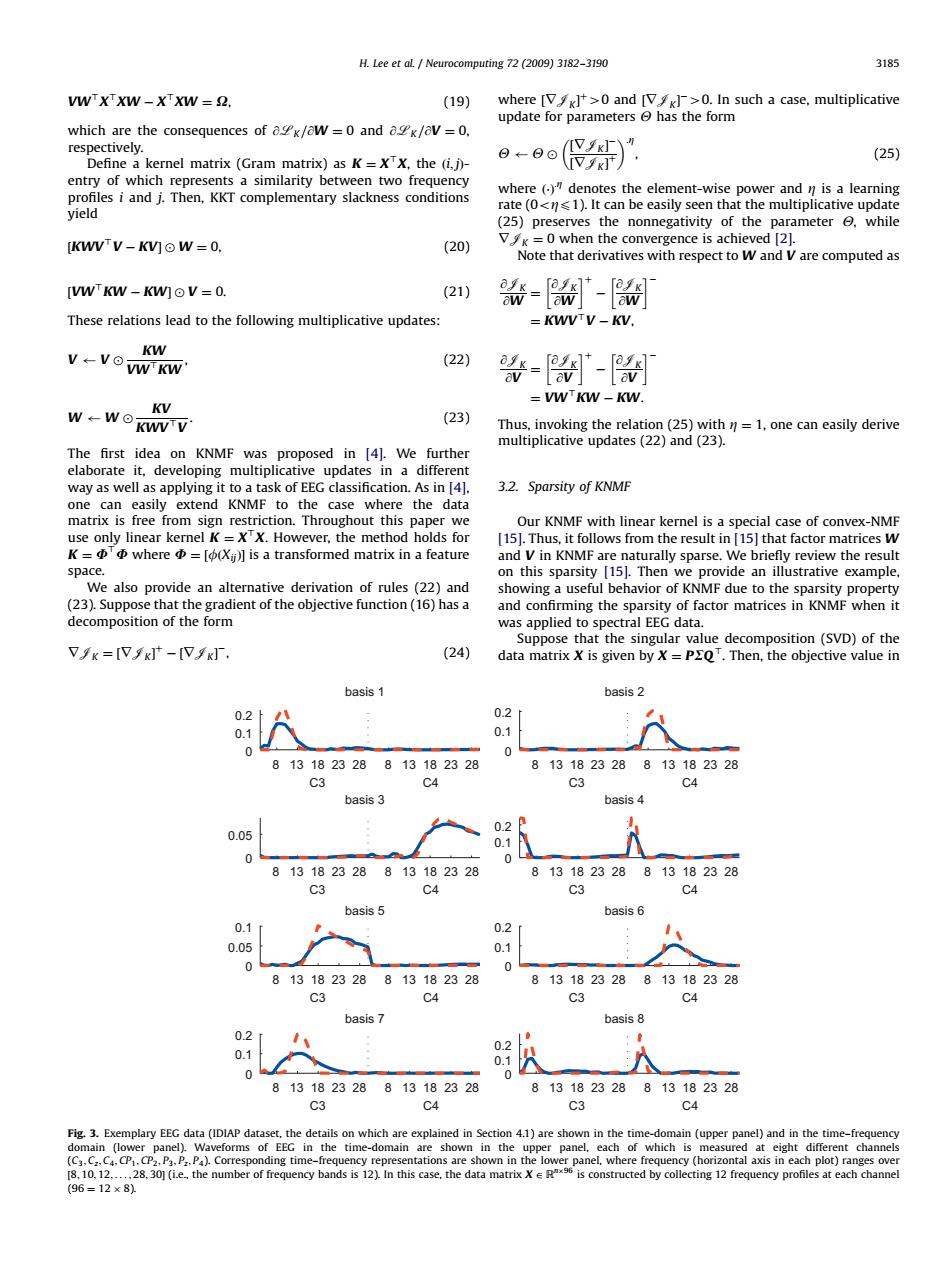

H.Lee et al.Neurocomputing 72 (2009)3182-3190 3185 VWXXW-XXW=2. (19) where []>0 and []>0.In such a case,multiplicative update for parameters has the form which are the consequences of ak/aw =0 and ak/av=0. respectively. ⊙←日⊙ VxJ" (25) Define a kernel matrix (Gram matrix)as K=X'X,the (i,j)- Vx厅)· entry of which represents a similarity between two frequency where ()denotes the element-wise power and n is a learning profiles i and j.Then,KKT complementary slackness conditions yield rate(0<1).It can be easily seen that the multiplicative update (25)preserves the nonnegativity of the parameter while IKWVV-KV]oW=0. (20) VK =0 when the convergence is achieved [2]. Note that derivatives with respect to W and V are computed as IVWKW-KW]oV=0. (21) -”- These relations lead to the following multiplicative updates: KWVTV-KV. V←VOVWKW KW (22) %-- VWKW-KW. W←W⊙ V KWVTV (23) Thus,invoking the relation(25)with n=1,one can easily derive multiplicative updates(22)and(23). The first idea on KNMF was proposed in [4].We further elaborate it,developing multiplicative updates in a different way as well as applying it to a task of EEG classification.As in [4]. 3.2.Sparsity of KNMF one can easily extend KNMF to the case where the data matrix is free from sign restriction.Throughout this paper we Our KNMF with linear kernel is a special case of convex-NMF use only linear kernel K =X'X.However,the method holds for [15].Thus,it follows from the result in [15]that factor matrices W K=ΦΦwhereΦ=[Xg】is a transformed matrix in a feature and V in KNMF are naturally sparse.We briefly review the result space. on this sparsity [15.Then we provide an illustrative example, We also provide an alternative derivation of rules (22)and showing a useful behavior of KNMF due to the sparsity property (23).Suppose that the gradient of the objective function(16)has a and confirming the sparsity of factor matrices in KNMF when it decomposition of the form was applied to spectral EEG data. Suppose that the singular value decomposition(SVD)of the V/K=[Vfx]-[V/x]. (24) data matrix X is given by X=PEQ.Then,the objective value in basis 1 basis 2 0.2 0.2 0.1 0.1 0 0 813182328 813182328 813182328813182328 C3 C4 C3 C4 basis 3 basis 4 0.2 0.05 0.1 0 0 813182328813182328 813182328813182328 C3 C4 C3 C4 basis 5 basis 6 0.1 0.2 0.05 0.1 0 813182328813182328 813182328813182328 C3 C4 c3 basis 7 basis 8 0.2 0.2 0.1 0.1 0 813182328 813182328 813182328813182328 C3 C4 C3 C4 Fig.3.Exemplary EEG data(IDIAP dataset,the details on which are explained in Section 4.1)are shown in the time-domain(upper panel)and in the time-frequency domain (lower panel).Waveforms of EEG in the time-domain are shown in the upper panel,each of which is measured at eight different channels i6S.c28 on the-the ume m2 he文。三ce2anop2aoma (96=12×8)VW>X>XW X>XW ¼ X, (19) which are the consequences of @LK =@W ¼ 0 and @LK =@V ¼ 0, respectively. Define a kernel matrix (Gram matrix) as K ¼ X>X, the ði; jÞ- entry of which represents a similarity between two frequency profiles i and j. Then, KKT complementary slackness conditions yield ½KWV>V KV W ¼ 0, (20) ½VW>KW KW V ¼ 0. (21) These relations lead to the following multiplicative updates: V V KW VW>KW , (22) W W KV KWV>V . (23) The first idea on KNMF was proposed in [4]. We further elaborate it, developing multiplicative updates in a different way as well as applying it to a task of EEG classification. As in [4], one can easily extend KNMF to the case where the data matrix is free from sign restriction. Throughout this paper we use only linear kernel K ¼ X>X. However, the method holds for K ¼ U>U where U ¼ ½fðXijÞ is a transformed matrix in a feature space. We also provide an alternative derivation of rules (22) and (23). Suppose that the gradient of the objective function (16) has a decomposition of the form rJK ¼ ½rJK þ ½rJK , (24) where ½rJK þ40 and ½rJK 40. In such a case, multiplicative update for parameters Y has the form Y Y ½rJK ½rJK þ :Z , (25) where ð Þ:Z denotes the element-wise power and Z is a learning rate (0oZp1). It can be easily seen that the multiplicative update (25) preserves the nonnegativity of the parameter Y, while rJK ¼ 0 when the convergence is achieved [2]. Note that derivatives with respect to W and V are computed as @JK @W ¼ @JK @W þ @JK @W ¼ KWV>V KV, @JK @V ¼ @JK @V þ @JK @V ¼ VW>KW KW. Thus, invoking the relation (25) with Z ¼ 1, one can easily derive multiplicative updates (22) and (23). 3.2. Sparsity of KNMF Our KNMF with linear kernel is a special case of convex-NMF [15]. Thus, it follows from the result in [15] that factor matrices W and V in KNMF are naturally sparse. We briefly review the result on this sparsity [15]. Then we provide an illustrative example, showing a useful behavior of KNMF due to the sparsity property and confirming the sparsity of factor matrices in KNMF when it was applied to spectral EEG data. Suppose that the singular value decomposition (SVD) of the data matrix X is given by X ¼ PRQ >. Then, the objective value in ARTICLE IN PRESS 8 0 0.1 0.2 C3 C4 basis 1 0 0.1 0.2 basis 2 0 0.05 basis 3 0 0.1 0.2 basis 4 0 0.05 0.1 basis 5 0 0.1 0.2 basis 6 0 0.1 0.2 basis 7 0 0.1 0.2 basis 8 13 18 23 28 8 13 18 23 28 8 C3 C4 13 18 23 28 8 13 18 23 28 8 C3 C4 13 18 23 28 8 13 18 23 28 8 C3 C4 13 18 23 28 8 13 18 23 28 8 C3 C4 13 18 23 28 8 13 18 23 28 8 C3 C4 13 18 23 28 8 13 18 23 28 8 C3 C4 13 18 23 28 8 13 18 23 28 8 C3 C4 13 18 23 28 8 13 18 23 28 Fig. 3. Exemplary EEG data (IDIAP dataset, the details on which are explained in Section 4.1) are shown in the time-domain (upper panel) and in the time–frequency domain (lower panel). Waveforms of EEG in the time-domain are shown in the upper panel, each of which is measured at eight different channels (C3; Cz; C4; CP1; CP2; P3; Pz ; P4). Corresponding time–frequency representations are shown in the lower panel, where frequency (horizontal axis in each plot) ranges over ½8; 10; 12; ... ; 28; 30 (i.e., the number of frequency bands is 12). In this case, the data matrix X 2 Rn96 is constructed by collecting 12 frequency profiles at each channel (96 ¼ 12 8). H. Lee et al. / Neurocomputing 72 (2009) 3182–3190 3185�