正在加载图片...

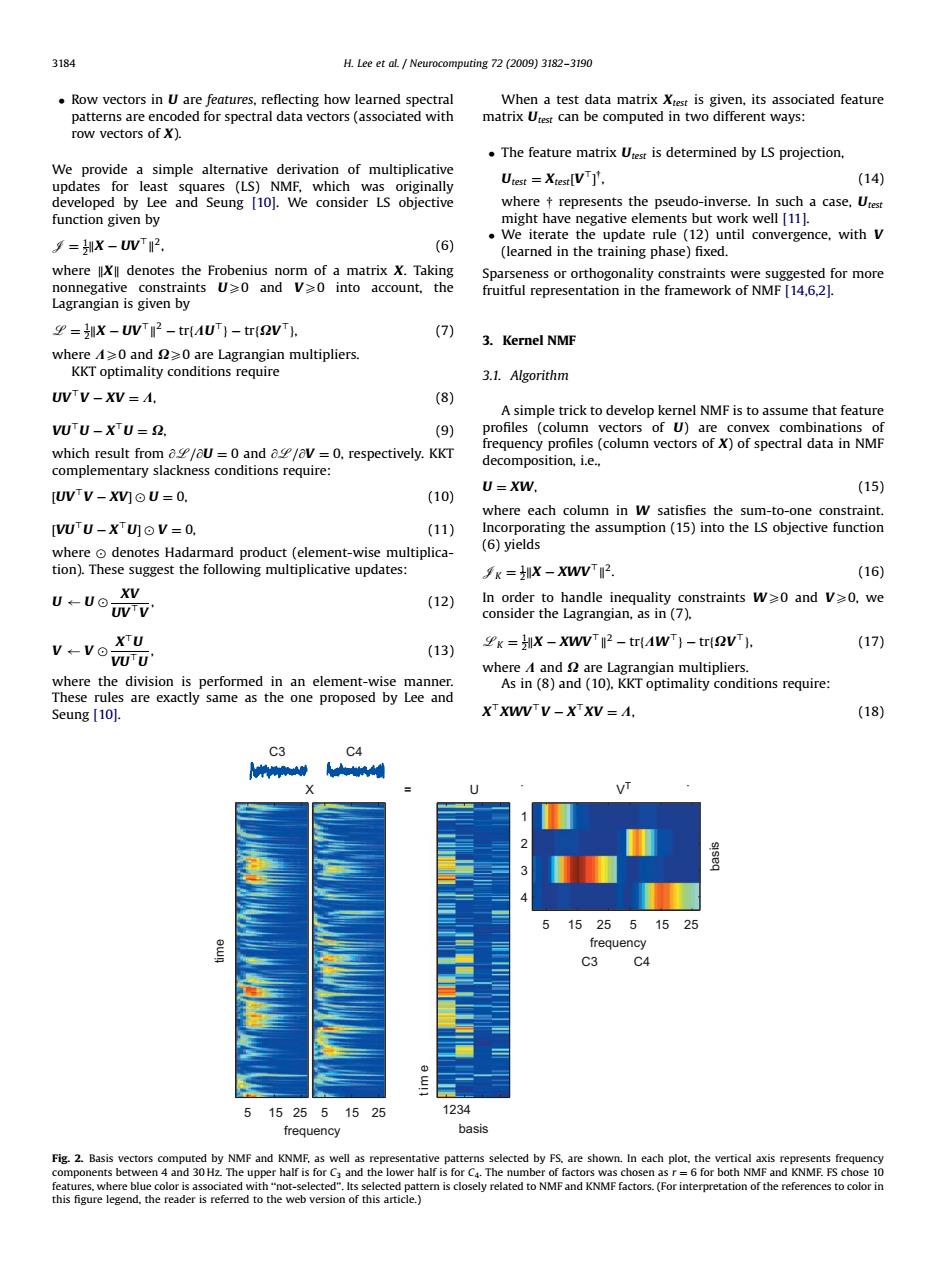

3184 H.Lee et al.Neurocomputing 72 (2009)3182-3190 Row vectors in U are features,reflecting how learned spectral When a test data matrix Xtest is given,its associated feature patterns are encoded for spectral data vectors (associated with matrix Utest can be computed in two different ways: row vectors of X). The feature matrix Utest is determined by LS projection, We provide a simple alternative derivation of multiplicative updates for least squares (LS)NMF,which was originally Utest =Xtest[VT]'. (14) developed by Lee and Seung [10].We consider LS objective where represents the pseudo-inverse.In such a case,Urest function given by might have negative elements but work well [11]. We iterate the update rule (12)until convergence,with V 9=x-UW2, (6) (learned in the training phase)fixed. where X denotes the Frobenius norm of a matrix X.Taking Sparseness or orthogonality constraints were suggested for more nonnegative constraints U20 and V2o into account,the fruitful representation in the framework of NMF [14,6,21. Lagrangian is given by =X-UVT2-tr(AUT)-tr(QVT). (7) 3.Kernel NMF where A≥0and2≥0 are Lagrangian multipliers. KKT optimality conditions require 3.1.Algorithm UVTV-XV=A. (8) A simple trick to develop kernel NMF is to assume that feature VUTU-XTU=2. (9) profiles (column vectors of U)are convex combinations of frequency profiles (column vectors of X)of spectral data in NMF which result from a/aU =0 and a/ov=0,respectively.KKT decomposition,i.e., complementary slackness conditions require: U=XW. (15) UVV-XM⊙U=0. (10) where each column in W satisfies the sum-to-one constraint. VU'U-XUOV=0. (11) Incorporating the assumption (15)into the LS objective function where o denotes Hadarmard product (element-wise multiplica- (6)yields tion).These suggest the following multiplicative updates: =X-XWVT2. (16) U←U⊙ XV (12) In order to handle inequality constraints W>0 and V>0,we UVTV consider the Lagrangian,as in (7). V←Vo: XTU =-XWVT2-tr(AWT)-tr(QVT). (13) (17) VUU where A and are Lagrangian multipliers. where the division is performed in an element-wise manner. As in (8)and (10).KKT optimality conditions require: These rules are exactly same as the one proposed by Lee and Seung [10]. X'XWV'V-X'XV=A. (18) 2 5 152551525 frequency C3 C4 5152551525 1234 frequency basis Fig.2.Basis vectors computed by NMF and KNMF,as well as representative patterns selected by FS.are shown.In each plot,the vertical axis represents frequency components between 4 and 30Hz.The upper half is for Ca and the lower half is for C4.The number of factors was chosen as r=6 for both NMF and KNMF.FS chose 10 features,where blue color is associated with"not-selected".Its selected patter is closely related to NMF and KNMF factors.(For interpretation of the references to color in this figure legend.the reader is referred to the web version of this article.)Row vectors in U are features, reflecting how learned spectral patterns are encoded for spectral data vectors (associated with row vectors of X). We provide a simple alternative derivation of multiplicative updates for least squares (LS) NMF, which was originally developed by Lee and Seung [10]. We consider LS objective function given by J ¼ 1 2kX UV>k2, (6) where kXk denotes the Frobenius norm of a matrix X. Taking nonnegative constraints UX0 and VX0 into account, the Lagrangian is given by L ¼ 1 2kX UV>k2 trfKU>g trfXV>g, (7) where KX0 and XX0 are Lagrangian multipliers. KKT optimality conditions require UV>V XV ¼ K, (8) VU>U X>U ¼ X, (9) which result from @L=@U ¼ 0 and @L=@V ¼ 0, respectively. KKT complementary slackness conditions require: ½UV>V XV U ¼ 0, (10) ½VU>U X>U V ¼ 0, (11) where denotes Hadarmard product (element-wise multiplication). These suggest the following multiplicative updates: U U XV UV>V , (12) V V X>U VU>U , (13) where the division is performed in an element-wise manner. These rules are exactly same as the one proposed by Lee and Seung [10]. When a test data matrix Xtest is given, its associated feature matrix Utest can be computed in two different ways: The feature matrix Utest is determined by LS projection, Utest ¼ Xtest½V> y , (14) where y represents the pseudo-inverse. In such a case, Utest might have negative elements but work well [11]. We iterate the update rule (12) until convergence, with V (learned in the training phase) fixed. Sparseness or orthogonality constraints were suggested for more fruitful representation in the framework of NMF [14,6,2]. 3. Kernel NMF 3.1. Algorithm A simple trick to develop kernel NMF is to assume that feature profiles (column vectors of U) are convex combinations of frequency profiles (column vectors of X) of spectral data in NMF decomposition, i.e., U ¼ XW, (15) where each column in W satisfies the sum-to-one constraint. Incorporating the assumption (15) into the LS objective function (6) yields JK ¼ 1 2kX XWV>k2. (16) In order to handle inequality constraints WX0 and VX0, we consider the Lagrangian, as in (7), LK ¼ 1 2kX XWV>k2 trfKW>g trfXV>g, (17) where K and X are Lagrangian multipliers. As in (8) and (10), KKT optimality conditions require: X>XWV>V X>XV ¼ K, (18) ARTICLE IN PRESS C3 C4 5 5 1234 5 1 2 3 4 X VT time frequency frequency C3 mi t e basis basis =U C4 15 25 5 15 25 15 25 15 25 Fig. 2. Basis vectors computed by NMF and KNMF, as well as representative patterns selected by FS, are shown. In each plot, the vertical axis represents frequency components between 4 and 30 Hz. The upper half is for C3 and the lower half is for C4. The number of factors was chosen as r ¼ 6 for both NMF and KNMF. FS chose 10 features, where blue color is associated with ‘‘not-selected’’. Its selected pattern is closely related to NMF and KNMF factors. (For interpretation of the references to color in this figure legend, the reader is referred to the web version of this article.) 3184 H. Lee et al. / Neurocomputing 72 (2009) 3182–3190���