正在加载图片...

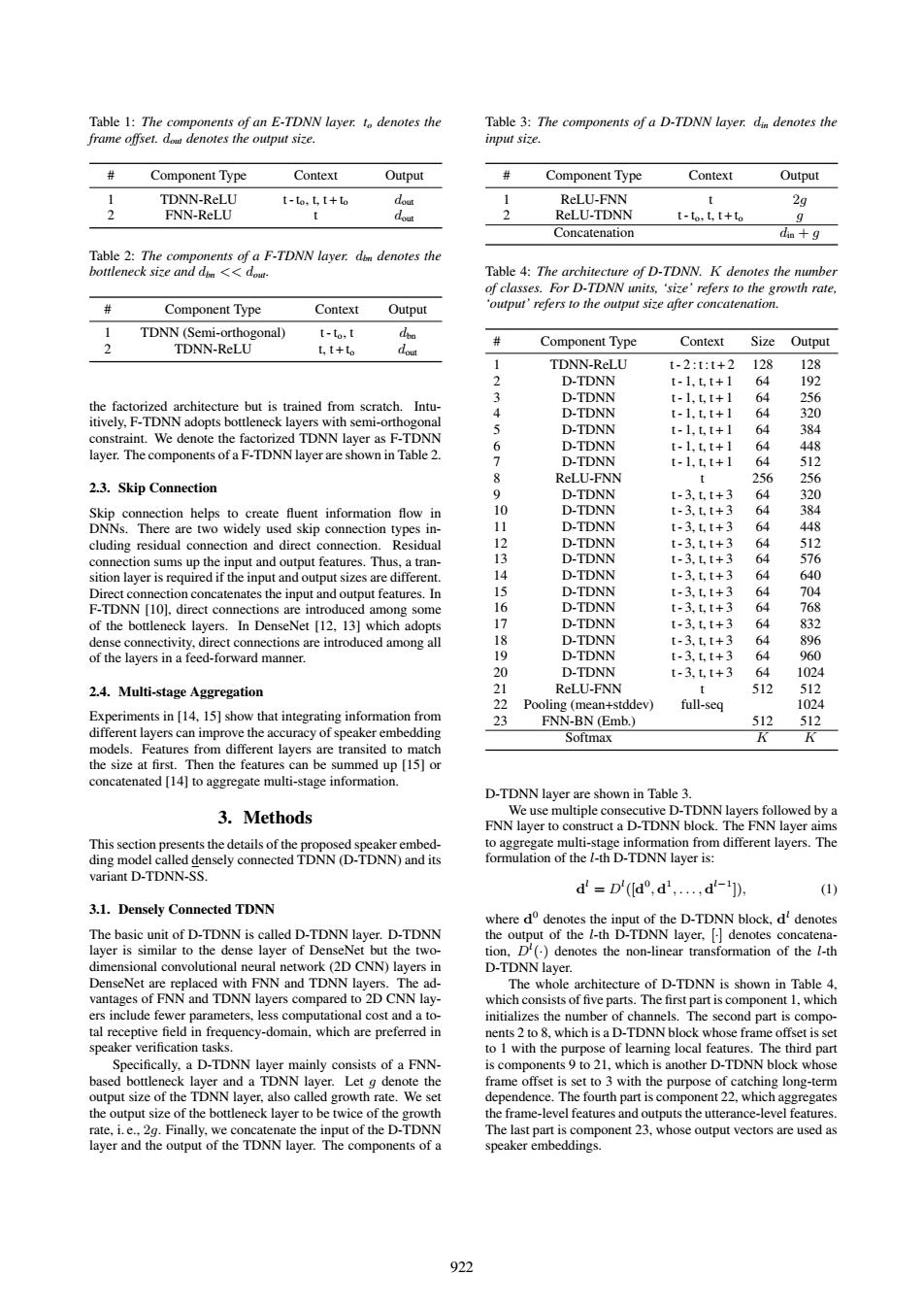

the factorized architecture but is trained from scratch. Intuitively, F-TDNN adopts bottleneck layers with a semi-orthogonal constraint. We denote the factorized TDNN layer as the F-TDNN layer. The components of an F-TDNN layer are shown in Table 2.

Table 1: The components of an E-TDNN layer. $t_o$ denotes the frame offset. $d_{out}$ denotes the output size.

| # | Component Type | Context | Output |
|---|---|---|---|
| 1 | TDNN-ReLU | $t - t_o, t, t + t_o$ | $d_{out}$ |
| 2 | FNN-ReLU | $t$ | $d_{out}$ |

Table 2: The components of an F-TDNN layer. $d_{bn}$ denotes the bottleneck size and $d_{bn} \ll d_{out}$.

| # | Component Type | Context | Output |
|---|---|---|---|
| 1 | TDNN (Semi-orthogonal) | $t - t_o, t$ | $d_{bn}$ |
| 2 | TDNN-ReLU | $t, t + t_o$ | $d_{out}$ |

2.3. Skip Connection

Skip connections help create a smooth information flow in DNNs.
There are two widely used types of skip connections: residual connections and direct connections. A residual connection sums the input and output features, so a transition layer is required if the input and output sizes differ. A direct connection concatenates the input and output features. In F-TDNN [10], direct connections are introduced among some of the bottleneck layers. In DenseNet [12, 13], which adopts dense connectivity, direct connections are introduced among all layers in a feed-forward manner.

2.4. Multi-stage Aggregation

Experiments in [14, 15] show that integrating information from different layers can improve the accuracy of speaker embedding models. The features from different layers are first transformed to match in size. They are then either summed [15] or concatenated [14] to aggregate multi-stage information.

3. Methods

This section presents the details of the proposed speaker embedding model, called densely connected TDNN (D-TDNN), and its variant D-TDNN-SS.

3.1. Densely Connected TDNN

The basic unit of D-TDNN is the D-TDNN layer. A D-TDNN layer is similar to the dense layer of DenseNet. The difference is that the two-dimensional convolutional neural network (2D CNN) layers in DenseNet are replaced with FNN and TDNN layers. Compared to 2D CNN layers, FNN and TDNN layers have fewer parameters, a lower computational cost, and a receptive field that covers the whole frequency domain, all of which are preferred in speaker verification tasks.

Specifically, a D-TDNN layer mainly consists of an FNN-based bottleneck layer and a TDNN layer. Let $g$ denote the output size of the TDNN layer, also called the growth rate. We set the output size of the bottleneck layer to twice the growth rate, i.e., $2g$. Finally, we concatenate the input of the D-TDNN layer and the output of the TDNN layer. The components of a D-TDNN layer are shown in Table 3.

Table 3: The components of a D-TDNN layer. $d_{in}$ denotes the input size.

| # | Component Type | Context | Output |
|---|---|---|---|
| 1 | ReLU-FNN | $t$ | $2g$ |
| 2 | ReLU-TDNN | $t - t_o, t, t + t_o$ | $g$ |
| | Concatenation | | $d_{in} + g$ |

We use multiple consecutive D-TDNN layers followed by an FNN layer to construct a D-TDNN block. The FNN layer aggregates multi-stage information from different layers. The $l$-th D-TDNN layer is formulated as:

$$d^{l} = D^{l}\left([d^{0}, d^{1}, \ldots, d^{l-1}]\right), \tag{1}$$

where $d^{0}$ denotes the input of the D-TDNN block, $d^{l}$ denotes the output of the $l$-th D-TDNN layer, $[\cdot]$ denotes concatenation, and $D^{l}(\cdot)$ denotes the non-linear transformation of the $l$-th D-TDNN layer.

Table 4: The architecture of D-TDNN. K denotes the number of classes. For D-TDNN units, ‘size’ refers to the growth rate and ‘output’ refers to the output size after concatenation.

| # | Component Type | Context | Size | Output |
|---|---|---|---|---|
| 1 | TDNN-ReLU | $t-2:t:t+2$ | 128 | 128 |
| 2 | D-TDNN | $t-1, t, t+1$ | 64 | 192 |
| 3 | D-TDNN | $t-1, t, t+1$ | 64 | 256 |
| 4 | D-TDNN | $t-1, t, t+1$ | 64 | 320 |
| 5 | D-TDNN | $t-1, t, t+1$ | 64 | 384 |
| 6 | D-TDNN | $t-1, t, t+1$ | 64 | 448 |
| 7 | D-TDNN | $t-1, t, t+1$ | 64 | 512 |
| 8 | ReLU-FNN | $t$ | 256 | 256 |
| 9 | D-TDNN | $t-3, t, t+3$ | 64 | 320 |
| 10 | D-TDNN | $t-3, t, t+3$ | 64 | 384 |
| 11 | D-TDNN | $t-3, t, t+3$ | 64 | 448 |
| 12 | D-TDNN | $t-3, t, t+3$ | 64 | 512 |
| 13 | D-TDNN | $t-3, t, t+3$ | 64 | 576 |
| 14 | D-TDNN | $t-3, t, t+3$ | 64 | 640 |
| 15 | D-TDNN | $t-3, t, t+3$ | 64 | 704 |
| 16 | D-TDNN | $t-3, t, t+3$ | 64 | 768 |
| 17 | D-TDNN | $t-3, t, t+3$ | 64 | 832 |
| 18 | D-TDNN | $t-3, t, t+3$ | 64 | 896 |
| 19 | D-TDNN | $t-3, t, t+3$ | 64 | 960 |
| 20 | D-TDNN | $t-3, t, t+3$ | 64 | 1024 |
| 21 | ReLU-FNN | $t$ | 512 | 512 |
| 22 | Pooling (mean+stddev) | full-seq | | 1024 |
| 23 | FNN-BN (Emb.) | | 512 | 512 |
| | Softmax | | K | K |

The whole architecture of D-TDNN is shown in Table 4 and consists of five parts. The first part, component 1, initializes the number of channels. The second part, components 2 to 8, is a D-TDNN block whose frame offset is set to 1 to learn local features.
The third part, components 9 to 21, is another D-TDNN block whose frame offset is set to 3 to capture long-term dependencies. The fourth part, component 22, aggregates the frame-level features and outputs utterance-level features. The last part is component 23, whose output vectors are used as speaker embeddings.
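Each D-TDNN layer appends $g$ new channels to its input (Table 3). As a result, the "Output" column of Table 4 follows from simple arithmetic: after $l$ layers, a block whose input has $d^0$ channels carries $d^0 + l\,g$ channels. The short Python sketch below reproduces that column for both D-TDNN blocks. The function name `dense_block_sizes` is hypothetical and does not come from the paper.

```python
def dense_block_sizes(d0: int, growth: int, num_layers: int) -> list[int]:
    """Concatenated output size after each D-TDNN layer of one block.

    Layer l receives [d^0, d^1, ..., d^{l-1}] (Eq. (1)), i.e. d0 + (l - 1) * growth
    channels, and appends `growth` new channels to them.
    """
    sizes = []
    d_in = d0
    for _ in range(num_layers):
        d_in += growth  # concatenation: d_in -> d_in + g
        sizes.append(d_in)
    return sizes


# Block 1: components 2-7, input from component 1 has 128 channels, g = 64.
print(dense_block_sizes(128, 64, 6))
# [192, 256, 320, 384, 448, 512]

# Block 2: components 9-20, input from component 8 has 256 channels, g = 64.
print(dense_block_sizes(256, 64, 12))
# [320, 384, 448, 512, 576, 640, 704, 768, 832, 896, 960, 1024]
```

The ReLU-FNN layers at components 8 and 21 then map these 512 and 1024 channels to 256 and 512 channels, respectively.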
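To make Table 3 and Eq. (1) concrete, here is a minimal pure-Python sketch of a D-TDNN layer and a D-TDNN block. It is illustrative only and rests on several assumptions that this section does not state:

- weights are random and bias-free, and batch normalization is omitted;
- frames outside the sequence are zero-padded, so every layer keeps the same number of frames, which concatenation needs;
- "ReLU-FNN" and "ReLU-TDNN" are read as a ReLU followed by the layer, mirroring "TDNN-ReLU" in Table 1.

The names `DTDNNLayer`, `DTDNNBlock`, `linear`, and `init_weight` are hypothetical.

```python
import random

Frames = list[list[float]]  # a sequence of T frames, each a feature vector


def relu(v: list[float]) -> list[float]:
    return [max(0.0, x) for x in v]


def linear(v: list[float], weight: list[list[float]]) -> list[float]:
    # Bias-free linear map (assumption): one dot product per output unit.
    return [sum(w * x for w, x in zip(row, v)) for row in weight]


def init_weight(d_out: int, d_in: int, rng: random.Random) -> list[list[float]]:
    # Random weights, for checking shapes only; a real model is trained.
    return [[rng.gauss(0.0, 0.1) for _ in range(d_in)] for _ in range(d_out)]


class DTDNNLayer:
    """Hypothetical D-TDNN layer following Table 3.

    ReLU-FNN (context t, output 2g) -> ReLU-TDNN (context t-o, t, t+o, output g)
    -> concatenation with the layer input (output d_in + g).
    """

    def __init__(self, d_in: int, growth: int, offset: int, rng: random.Random):
        self.offset = offset
        self.fnn = init_weight(2 * growth, d_in, rng)         # bottleneck: 2g
        self.tdnn = init_weight(growth, 3 * 2 * growth, rng)  # 3 spliced frames -> g

    def __call__(self, x: Frames) -> Frames:
        # ReLU-FNN per frame, followed by the ReLU that precedes the TDNN.
        h = [relu(linear(relu(f), self.fnn)) for f in x]
        zero = [0.0] * len(h[0])

        def frame(t: int) -> list[float]:
            # Zero padding outside the sequence keeps T frames (assumption).
            return h[t] if 0 <= t < len(h) else zero

        out = []
        for t, x_t in enumerate(x):
            spliced = frame(t - self.offset) + frame(t) + frame(t + self.offset)
            y_t = linear(spliced, self.tdnn)  # g new channels
            out.append(x_t + y_t)             # list concat = channel concatenation
        return out


class DTDNNBlock:
    """Consecutive D-TDNN layers followed by a ReLU-FNN layer."""

    def __init__(self, d0: int, growth: int, num_layers: int, offset: int,
                 d_out: int, rng: random.Random):
        self.layers = [DTDNNLayer(d0 + i * growth, growth, offset, rng)
                       for i in range(num_layers)]
        self.fnn = init_weight(d_out, d0 + num_layers * growth, rng)

    def __call__(self, x: Frames) -> Frames:
        for layer in self.layers:
            # Each layer appends its output to its input, so layer l
            # receives [d^0, d^1, ..., d^{l-1}] as in Eq. (1).
            x = layer(x)
        return [linear(relu(f), self.fnn) for f in x]


rng = random.Random(0)
frames = [[rng.gauss(0.0, 1.0) for _ in range(16)] for _ in range(10)]
block = DTDNNBlock(d0=16, growth=8, num_layers=3, offset=1, d_out=12, rng=rng)
out = block(frames)
print(len(out), len(out[0]))  # 10 12
```

The sizes here are kept small so the pure-Python loops run quickly. With `d0=128, growth=64, num_layers=6, offset=1, d_out=256`, the same structure would match components 2 to 8 of Table 4, at a much higher cost.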
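Component 22 in Table 4 turns a variable-length sequence of 512-dimensional frame-level features into one 1024-dimensional utterance-level vector. It does so by concatenating the per-dimension mean and standard deviation over all frames. Below is a minimal sketch. It assumes the population standard deviation and a small `eps` added to the variance for numerical stability; neither detail is given in this section. The function name `stats_pooling` is hypothetical.

```python
import math


def stats_pooling(frames: list[list[float]], eps: float = 1e-5) -> list[float]:
    """Mean + standard deviation over the full sequence (context 'full-seq')."""
    num_frames = len(frames)
    dim = len(frames[0])
    mean = [sum(f[i] for f in frames) / num_frames for i in range(dim)]
    # Population variance per dimension (assumption), stabilized by eps.
    var = [sum((f[i] - mean[i]) ** 2 for f in frames) / num_frames for i in range(dim)]
    std = [math.sqrt(v + eps) for v in var]
    return mean + std  # 2 * dim values, e.g. 2 * 512 = 1024


pooled = stats_pooling([[1.0, 2.0], [3.0, 6.0]])
print(pooled[:2])  # [2.0, 4.0]
```

Because the statistics are taken over every frame, the output size does not depend on the utterance length. This is what lets the following FNN-BN layer (component 23) produce a fixed-size speaker embedding.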