正在加载图片...

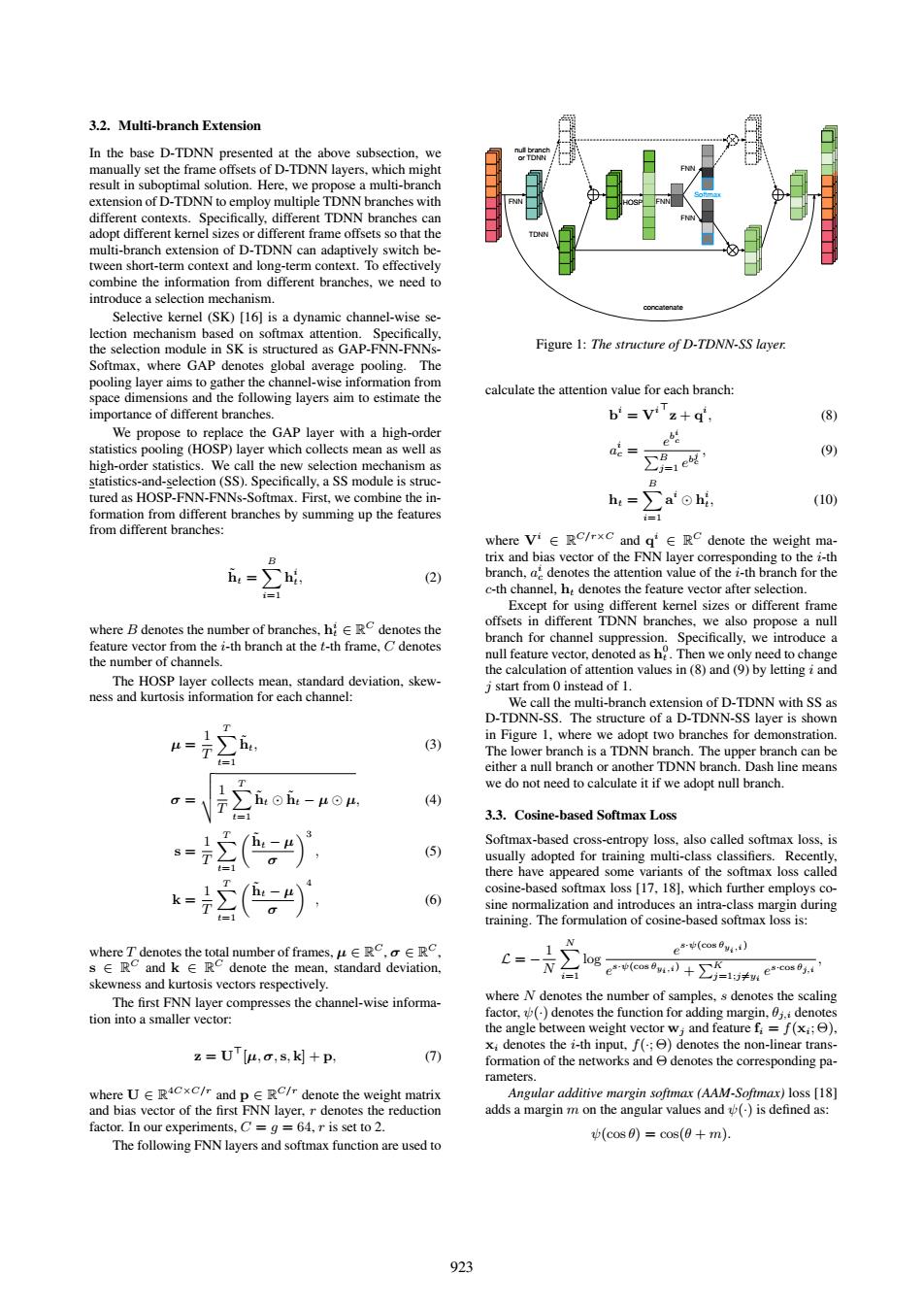

3.2.Multi-branch Extension In the base D-TDNN presented at the above subsection,we manually set the frame offsets of D-TDNN layers,which might result in suboptimal solution.Here,we propose a multi-branch extension of D-TDNN to employ multiple TDNN branches with different contexts.Specifically.different TDNN branches can adopt different kernel sizes or different frame offsets so that the multi-branch extension of D-TDNN can adaptively switch be- tween short-term context and long-term context.To effectively combine the information from different branches,we need to introduce a selection mechanism. con enate Selective kernel (SK)[16]is a dynamic channel-wise se- lection mechanism based on softmax attention.Specifically, the selection module in SK is structured as GAP-FNN-FNNs- Figure 1:The structure of D-TDNN-SS layer. Softmax.where GAP denotes global average pooling.The pooling layer aims to gather the channel-wise information from space dimensions and the following layers aim to estimate the calculate the attention value for each branch: importance of different branches. b=Vz+q. (8) We propose to replace the GAP layer with a high-order statistics pooling(HOSP)layer which collects mean as well as a2= high-order statistics.We call the new selection mechanism as ∑1ek (9) statistics-and-selection(SS).Specifically,a SS module is struc- B tured as HOSP-FNN-FNNs-Softmax.First,we combine the in- h=ahi, (10) formation from different branches by summing up the features =1 from different branches: where Vi RC/rxc and g'RC denote the weight ma- B trix and bias vector of the FNN layer corresponding to the i-th h=hi (2) branch,a denotes the attention value of the i-th branch for the i=1 c-th channel,h,denotes the feature vector after selection. Except for using different kernel sizes or different frame where B denotes the number of branches,hRC denotes the offsets in different TDNN branches,we also propose a null feature vector from the i-th branch at the t-th frame,C denotes branch for channel suppression.Specifically,we introduce a null feature vector,denoted as h.Then we only need to change the number of channels. the calculation of attention values in (8)and (9)by letting i and The HOSP layer collects mean.standard deviation,skew- j start from 0 instead of 1. ness and kurtosis information for each channel: We call the multi-branch extension of D-TDNN with SS as D-TDNN-SS.The structure of a D-TDNN-SS layer is shown =F∑i, in Figure 1,where we adopt two branches for demonstration. (3) The lower branch is a TDNN branch.The upper branch can be either a null branch or another TDNN branch.Dash line means we do not need to calculate it if we adopt null branch. (4) 3.3.Cosine-based Softmax Loss =(色。 Softmax-based cross-entropy loss,also called softmax loss,is (5) usually adopted for training multi-class classifiers.Recently, there have appeared some variants of the softmax loss called cosine-based softmax loss [17,18],which further employs co- (6) sine normalization and introduces an intra-class margin during training.The formulation of cosine-based softmax loss is: where T denotes the total number of frames,uRC.RC, 1 e(co) s E RC and k RC denote the mean,standard deviation, C=- log- i=1 (co)eco. skewness and kurtosis vectors respectively. The first FNN layer compresses the channel-wise informa- where N denotes the number of samples,s denotes the scaling tion into a smaller vector: factor,(denotes the function for adding margin,0j.i denotes the angle between weight vector w;and feature f;=f(x;;) z=U'[u,o,s,k]+P, x;denotes the i-th input,f(;e)denotes the non-linear trans- (7) formation of the networks and denotes the corresponding pa- rameters. where U E RCxC/r and p RC/r denote the weight matrix Angular additive margin softmax (AAM-Softmax)loss [18] and bias vector of the first FNN layer,r denotes the reduction adds a margin m on the angular values and (is defined as: factor.In our experiments,C=g 64,r is set to 2. (cos0)=cos(0+m). The following FNN layers and softmax function are used to 9233.2. Multi-branch Extension In the base D-TDNN presented at the above subsection, we manually set the frame offsets of D-TDNN layers, which might result in suboptimal solution. Here, we propose a multi-branch extension of D-TDNN to employ multiple TDNN branches with different contexts. Specifically, different TDNN branches can adopt different kernel sizes or different frame offsets so that the multi-branch extension of D-TDNN can adaptively switch between short-term context and long-term context. To effectively combine the information from different branches, we need to introduce a selection mechanism. Selective kernel (SK) [16] is a dynamic channel-wise selection mechanism based on softmax attention. Specifically, the selection module in SK is structured as GAP-FNN-FNNsSoftmax, where GAP denotes global average pooling. The pooling layer aims to gather the channel-wise information from space dimensions and the following layers aim to estimate the importance of different branches. We propose to replace the GAP layer with a high-order statistics pooling (HOSP) layer which collects mean as well as high-order statistics. We call the new selection mechanism as statistics-and-selection (SS). Specifically, a SS module is structured as HOSP-FNN-FNNs-Softmax. First, we combine the information from different branches by summing up the features from different branches: h˜t = XB i=1 h i t , (2) where B denotes the number of branches, h i t ∈ R C denotes the feature vector from the i-th branch at the t-th frame, C denotes the number of channels. The HOSP layer collects mean, standard deviation, skewness and kurtosis information for each channel: µ = 1 T XT t=1 h˜t, (3) σ = vuut 1 T XT t=1 h˜t

h˜t − µ

µ, (4) s = 1 T XT t=1 h˜t − µ σ 3 , (5) k = 1 T XT t=1 h˜t − µ σ 4 , (6) where T denotes the total number of frames, µ ∈ R C , σ ∈ R C , s ∈ R C and k ∈ R C denote the mean, standard deviation, skewness and kurtosis vectors respectively. The first FNN layer compresses the channel-wise information into a smaller vector: z = U >[µ, σ, s, k] + p, (7) where U ∈ R 4C×C/r and p ∈ R C/r denote the weight matrix and bias vector of the first FNN layer, r denotes the reduction factor. In our experiments, C = g = 64, r is set to 2. The following FNN layers and softmax function are used to TDNN null branch or TDNN FNN FNN HOSP Softmax FNN concatenate FNN Figure 1: The structure of D-TDNN-SS layer. calculate the attention value for each branch: b i = V i> z + q i , (8) a i c = e b i c PB j=1 e b j c , (9) ht = XB i=1 a i

h i t , (10) where Vi ∈ R C/r×C and q i ∈ R C denote the weight matrix and bias vector of the FNN layer corresponding to the i-th branch, a i c denotes the attention value of the i-th branch for the c-th channel, ht denotes the feature vector after selection. Except for using different kernel sizes or different frame offsets in different TDNN branches, we also propose a null branch for channel suppression. Specifically, we introduce a null feature vector, denoted as h 0 t . Then we only need to change the calculation of attention values in (8) and (9) by letting i and j start from 0 instead of 1. We call the multi-branch extension of D-TDNN with SS as D-TDNN-SS. The structure of a D-TDNN-SS layer is shown in Figure 1, where we adopt two branches for demonstration. The lower branch is a TDNN branch. The upper branch can be either a null branch or another TDNN branch. Dash line means we do not need to calculate it if we adopt null branch. 3.3. Cosine-based Softmax Loss Softmax-based cross-entropy loss, also called softmax loss, is usually adopted for training multi-class classifiers. Recently, there have appeared some variants of the softmax loss called cosine-based softmax loss [17, 18], which further employs cosine normalization and introduces an intra-class margin during training. The formulation of cosine-based softmax loss is: L = − 1 N XN i=1 log e s·ψ(cos θyi ,i) e s·ψ(cos θyi ,i) + PK j=1;j6=yi e s·cos θj,i , where N denotes the number of samples, s denotes the scaling factor, ψ(·) denotes the function for adding margin, θj,i denotes the angle between weight vector wj and feature fi = f(xi; Θ), xi denotes the i-th input, f(·; Θ) denotes the non-linear transformation of the networks and Θ denotes the corresponding parameters. Angular additive margin softmax (AAM-Softmax) loss [18] adds a margin m on the angular values and ψ(·) is defined as: ψ(cos θ) = cos(θ + m). 923