正在加载图片...

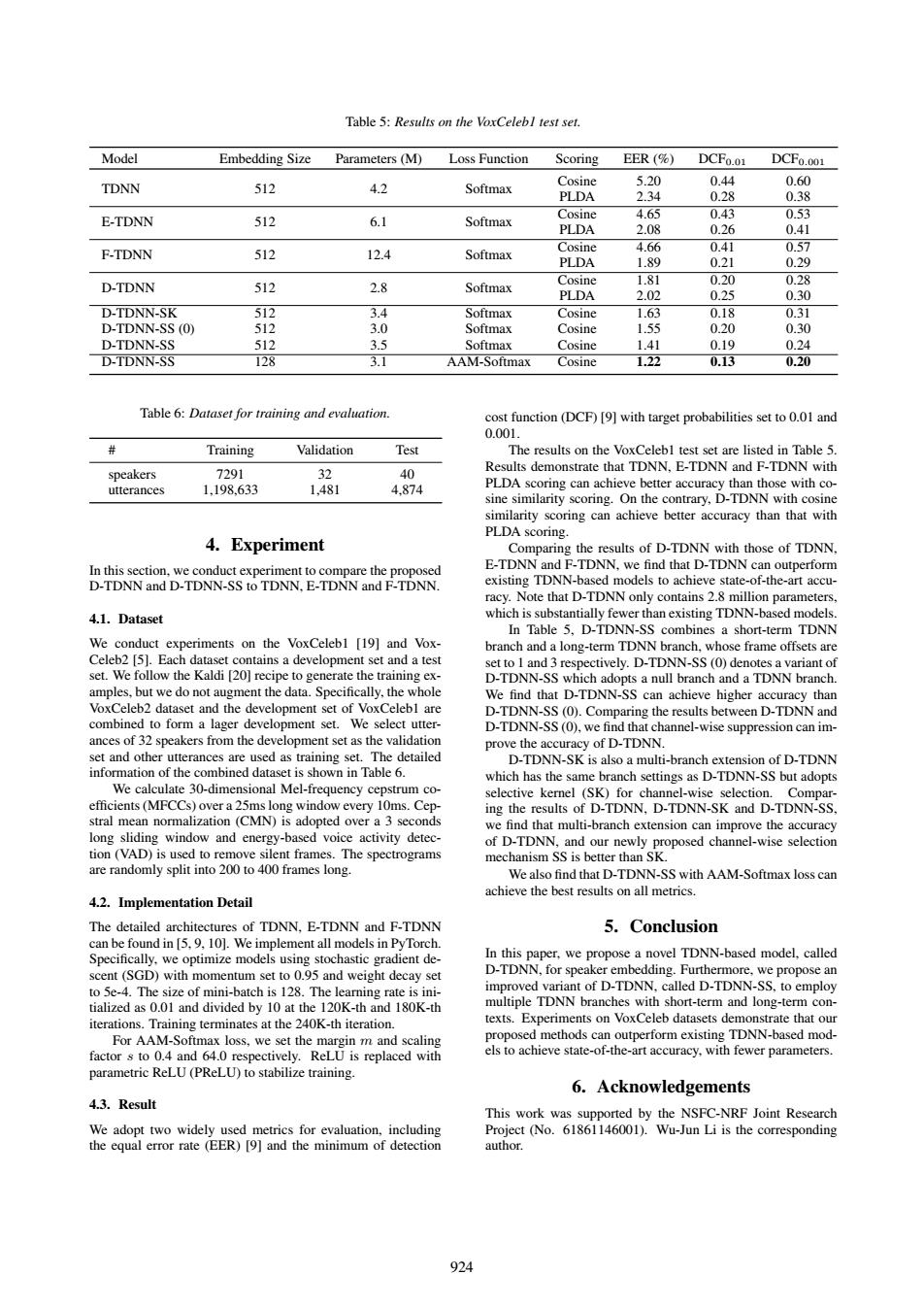

Table 5:Results on the VoxCelebl test set. Model Embedding Size Parameters (M) Loss Function Scoring EER (% DCFo.01 DCFo.001 TDNN 512 Cosine 5.20 0.44 0.60 4.2 Softmax PLDA 2.34 0.28 0.38 E-TDNN 512 6.1 Cosine 4.65 0.43 0.53 Softmax PLDA 2.08 0.26 0.41 F-TDNN 512 12.4 Cosine 4.66 0.41 0.57 Softmax PLDA 1.89 0.21 0.29 D-TDNN 512 2.8 Softmax Cosine 1.81 0.20 0.28 PLDA 2.02 0.25 0.30 D-TDNN-SK 512 3.4 Softmax Cosine 1.63 0.18 0.31 D-TDNN-SS (0) 512 3.0 Softmax Cosine 1.55 0.20 0.30 D-TDNN-SS 512 3.5 Softmax Cosine 1.41 0.19 0.24 D-TDNN-SS 128 3.1 AAM-Softmax Cosine 1.22 0.13 0.20 Table 6:Dataset for training and evaluation. cost function(DCF)[9]with target probabilities set to 0.01 and 0.001. # Training Validation Test The results on the VoxCelebl test set are listed in Table 5 speakers 7291 32 40 Results demonstrate that TDNN,E-TDNN and F-TDNN with utterances 1.198.633 1.481 4.874 PLDA scoring can achieve better accuracy than those with co- sine similarity scoring.On the contrary,D-TDNN with cosine similarity scoring can achieve better accuracy than that with PLDA scoring. 4.Experiment Comparing the results of D-TDNN with those of TDNN In this section,we conduct experiment to compare the proposed E-TDNN and F-TDNN,we find that D-TDNN can outperform D-TDNN and D-TDNN-SS to TDNN.E-TDNN and F-TDNN. existing TDNN-based models to achieve state-of-the-art accu- racy.Note that D-TDNN only contains 2.8 million parameters 4.1.Dataset which is substantially fewer than existing TDNN-based models In Table 5,D-TDNN-SS combines a short-term TDNN We conduct experiments on the VoxCelebl [19]and Vox- branch and a long-term TDNN branch,whose frame offsets are Celeb2 [5].Each dataset contains a development set and a test set to 1 and 3 respectively.D-TDNN-SS (0)denotes a variant of set.We follow the Kaldi [20]recipe to generate the training ex- D-TDNN-SS which adopts a null branch and a TDNN branch amples,but we do not augment the data.Specifically,the whole We find that D-TDNN-SS can achieve higher accuracy than VoxCeleb2 dataset and the development set of VoxCelebl are D-TDNN-SS (0).Comparing the results between D-TDNN and combined to form a lager development set.We select utter- D-TDNN-SS(0),we find that channel-wise suppression can im- ances of 32 speakers from the development set as the validation prove the accuracy of D-TDNN. set and other utterances are used as training set.The detailed D-TDNN-SK is also a multi-branch extension of D-TDNN information of the combined dataset is shown in Table 6. which has the same branch settings as D-TDNN-SS but adopts We calculate 30-dimensional Mel-frequency cepstrum co- selective kernel (SK)for channel-wise selection.Compar- efficients(MFCCs)over a 25ms long window every 10ms.Cep- ing the results of D-TDNN,D-TDNN-SK and D-TDNN-SS stral mean normalization (CMN)is adopted over a 3 seconds we find that multi-branch extension can improve the accuracy long sliding window and energy-based voice activity detec- of D-TDNN,and our newly proposed channel-wise selection tion (VAD)is used to remove silent frames.The spectrograms mechanism SS is better than SK. are randomly split into 200 to 400 frames long. We also find that D-TDNN-SS with AAM-Softmax loss can achieve the best results on all metrics. 4.2.Implementation Detail The detailed architectures of TDNN,E-TDNN and F-TDNN 5.Conclusion can be found in [5,9,10].We implement all models in PyTorch. Specifically,we optimize models using stochastic gradient de- In this paper,we propose a novel TDNN-based model,called scent (SGD)with momentum set to 0.95 and weight decay set D-TDNN,for speaker embedding.Furthermore,we propose an to 5e-4.The size of mini-batch is 128.The learning rate is ini- improved variant of D-TDNN,called D-TDNN-SS,to employ tialized as 0.01 and divided by 10 at the 120K-th and 180K-th multiple TDNN branches with short-term and long-term con- iterations.Training terminates at the 240K-th iteration. texts.Experiments on VoxCeleb datasets demonstrate that our For AAM-Softmax loss,we set the margin m and scaling proposed methods can outperform existing TDNN-based mod- factor s to 0.4 and 64.0 respectively.ReLU is replaced with els to achieve state-of-the-art accuracy,with fewer parameters. parametric ReLU(PReLU)to stabilize training. 6.Acknowledgements 4.3.Result This work was supported by the NSFC-NRF Joint Research We adopt two widely used metrics for evaluation,including Project (No.61861146001).Wu-Jun Li is the corresponding the equal error rate (EER)[9]and the minimum of detection author. 924Table 5: Results on the VoxCeleb1 test set. Model Embedding Size Parameters (M) Loss Function Scoring EER (%) DCF0.01 DCF0.001 TDNN 512 4.2 Softmax Cosine 5.20 0.44 0.60 PLDA 2.34 0.28 0.38 E-TDNN 512 6.1 Softmax Cosine 4.65 0.43 0.53 PLDA 2.08 0.26 0.41 F-TDNN 512 12.4 Softmax Cosine 4.66 0.41 0.57 PLDA 1.89 0.21 0.29 D-TDNN 512 2.8 Softmax Cosine 1.81 0.20 0.28 PLDA 2.02 0.25 0.30 D-TDNN-SK 512 3.4 Softmax Cosine 1.63 0.18 0.31 D-TDNN-SS (0) 512 3.0 Softmax Cosine 1.55 0.20 0.30 D-TDNN-SS 512 3.5 Softmax Cosine 1.41 0.19 0.24 D-TDNN-SS 128 3.1 AAM-Softmax Cosine 1.22 0.13 0.20 Table 6: Dataset for training and evaluation. # Training Validation Test speakers 7291 32 40 utterances 1,198,633 1,481 4,874 4. Experiment In this section, we conduct experiment to compare the proposed D-TDNN and D-TDNN-SS to TDNN, E-TDNN and F-TDNN. 4.1. Dataset We conduct experiments on the VoxCeleb1 [19] and VoxCeleb2 [5]. Each dataset contains a development set and a test set. We follow the Kaldi [20] recipe to generate the training examples, but we do not augment the data. Specifically, the whole VoxCeleb2 dataset and the development set of VoxCeleb1 are combined to form a lager development set. We select utterances of 32 speakers from the development set as the validation set and other utterances are used as training set. The detailed information of the combined dataset is shown in Table 6. We calculate 30-dimensional Mel-frequency cepstrum coefficients (MFCCs) over a 25ms long window every 10ms. Cepstral mean normalization (CMN) is adopted over a 3 seconds long sliding window and energy-based voice activity detection (VAD) is used to remove silent frames. The spectrograms are randomly split into 200 to 400 frames long. 4.2. Implementation Detail The detailed architectures of TDNN, E-TDNN and F-TDNN can be found in [5, 9, 10]. We implement all models in PyTorch. Specifically, we optimize models using stochastic gradient descent (SGD) with momentum set to 0.95 and weight decay set to 5e-4. The size of mini-batch is 128. The learning rate is initialized as 0.01 and divided by 10 at the 120K-th and 180K-th iterations. Training terminates at the 240K-th iteration. For AAM-Softmax loss, we set the margin m and scaling factor s to 0.4 and 64.0 respectively. ReLU is replaced with parametric ReLU (PReLU) to stabilize training. 4.3. Result We adopt two widely used metrics for evaluation, including the equal error rate (EER) [9] and the minimum of detection cost function (DCF) [9] with target probabilities set to 0.01 and 0.001. The results on the VoxCeleb1 test set are listed in Table 5. Results demonstrate that TDNN, E-TDNN and F-TDNN with PLDA scoring can achieve better accuracy than those with cosine similarity scoring. On the contrary, D-TDNN with cosine similarity scoring can achieve better accuracy than that with PLDA scoring. Comparing the results of D-TDNN with those of TDNN, E-TDNN and F-TDNN, we find that D-TDNN can outperform existing TDNN-based models to achieve state-of-the-art accuracy. Note that D-TDNN only contains 2.8 million parameters, which is substantially fewer than existing TDNN-based models. In Table 5, D-TDNN-SS combines a short-term TDNN branch and a long-term TDNN branch, whose frame offsets are set to 1 and 3 respectively. D-TDNN-SS (0) denotes a variant of D-TDNN-SS which adopts a null branch and a TDNN branch. We find that D-TDNN-SS can achieve higher accuracy than D-TDNN-SS (0). Comparing the results between D-TDNN and D-TDNN-SS (0), we find that channel-wise suppression can improve the accuracy of D-TDNN. D-TDNN-SK is also a multi-branch extension of D-TDNN which has the same branch settings as D-TDNN-SS but adopts selective kernel (SK) for channel-wise selection. Comparing the results of D-TDNN, D-TDNN-SK and D-TDNN-SS, we find that multi-branch extension can improve the accuracy of D-TDNN, and our newly proposed channel-wise selection mechanism SS is better than SK. We also find that D-TDNN-SS with AAM-Softmax loss can achieve the best results on all metrics. 5. Conclusion In this paper, we propose a novel TDNN-based model, called D-TDNN, for speaker embedding. Furthermore, we propose an improved variant of D-TDNN, called D-TDNN-SS, to employ multiple TDNN branches with short-term and long-term contexts. Experiments on VoxCeleb datasets demonstrate that our proposed methods can outperform existing TDNN-based models to achieve state-of-the-art accuracy, with fewer parameters. 6. Acknowledgements This work was supported by the NSFC-NRF Joint Research Project (No. 61861146001). Wu-Jun Li is the corresponding author. 924