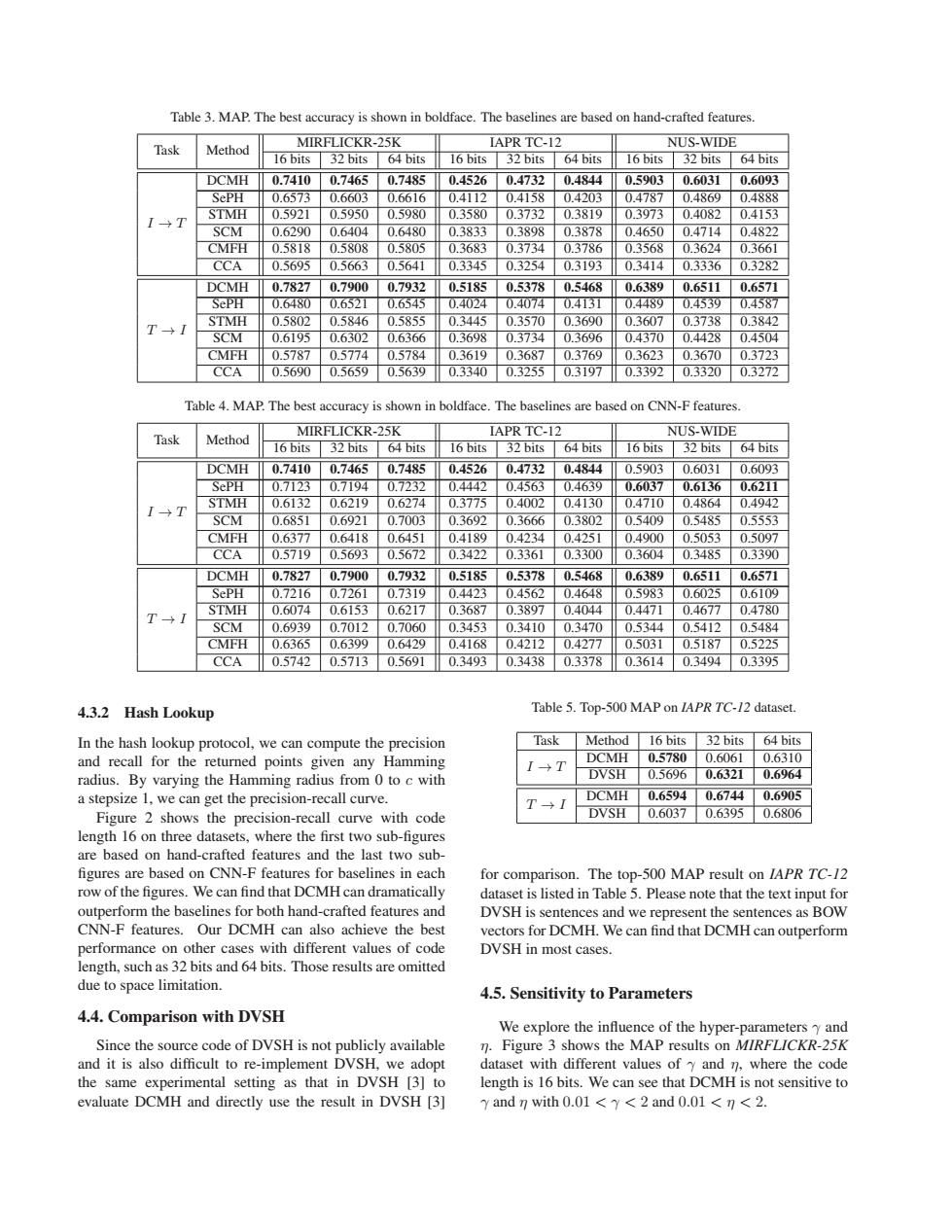

Table 3. MAP. The best accuracy is shown in boldface. The baselines are based on hand-crafted features.

| Task | Method | MIRFLICKR-25K (16 bits) | MIRFLICKR-25K (32 bits) | MIRFLICKR-25K (64 bits) | IAPR TC-12 (16 bits) | IAPR TC-12 (32 bits) | IAPR TC-12 (64 bits) | NUS-WIDE (16 bits) | NUS-WIDE (32 bits) | NUS-WIDE (64 bits) |
|---|---|---|---|---|---|---|---|---|---|---|
| I → T | DCMH | **0.7410** | **0.7465** | **0.7485** | **0.4526** | **0.4732** | **0.4844** | **0.5903** | **0.6031** | **0.6093** |
| | SePH | 0.6573 | 0.6603 | 0.6616 | 0.4112 | 0.4158 | 0.4203 | 0.4787 | 0.4869 | 0.4888 |
| | STMH | 0.5921 | 0.5950 | 0.5980 | 0.3580 | 0.3732 | 0.3819 | 0.3973 | 0.4082 | 0.4153 |
| | SCM | 0.6290 | 0.6404 | 0.6480 | 0.3833 | 0.3898 | 0.3878 | 0.4650 | 0.4714 | 0.4822 |
| | CMFH | 0.5818 | 0.5808 | 0.5805 | 0.3683 | 0.3734 | 0.3786 | 0.3568 | 0.3624 | 0.3661 |
| | CCA | 0.5695 | 0.5663 | 0.5641 | 0.3345 | 0.3254 | 0.3193 | 0.3414 | 0.3336 | 0.3282 |
| T → I | DCMH | **0.7827** | **0.7900** | **0.7932** | **0.5185** | **0.5378** | **0.5468** | **0.6389** | **0.6511** | **0.6571** |
| | SePH | 0.6480 | 0.6521 | 0.6545 | 0.4024 | 0.4074 | 0.4131 | 0.4489 | 0.4539 | 0.4587 |
| | STMH | 0.5802 | 0.5846 | 0.5855 | 0.3445 | 0.3570 | 0.3690 | 0.3607 | 0.3738 | 0.3842 |
| | SCM | 0.6195 | 0.6302 | 0.6366 | 0.3698 | 0.3734 | 0.3696 | 0.4370 | 0.4428 | 0.4504 |
| | CMFH | 0.5787 | 0.5774 | 0.5784 | 0.3619 | 0.3687 | 0.3769 | 0.3623 | 0.3670 | 0.3723 |
| | CCA | 0.5690 | 0.5659 | 0.5639 | 0.3340 | 0.3255 | 0.3197 | 0.3392 | 0.3320 | 0.3272 |

Table 4. MAP. The best accuracy is shown in boldface. The baselines are based on CNN-F features.

| Task | Method | MIRFLICKR-25K (16 bits) | MIRFLICKR-25K (32 bits) | MIRFLICKR-25K (64 bits) | IAPR TC-12 (16 bits) | IAPR TC-12 (32 bits) | IAPR TC-12 (64 bits) | NUS-WIDE (16 bits) | NUS-WIDE (32 bits) | NUS-WIDE (64 bits) |
|---|---|---|---|---|---|---|---|---|---|---|
| I → T | DCMH | **0.7410** | **0.7465** | **0.7485** | **0.4526** | **0.4732** | **0.4844** | 0.5903 | 0.6031 | 0.6093 |
| | SePH | 0.7123 | 0.7194 | 0.7232 | 0.4442 | 0.4563 | 0.4639 | **0.6037** | **0.6136** | **0.6211** |
| | STMH | 0.6132 | 0.6219 | 0.6274 | 0.3775 | 0.4002 | 0.4130 | 0.4710 | 0.4864 | 0.4942 |
| | SCM | 0.6851 | 0.6921 | 0.7003 | 0.3692 | 0.3666 | 0.3802 | 0.5409 | 0.5485 | 0.5553 |
| | CMFH | 0.6377 | 0.6418 | 0.6451 | 0.4189 | 0.4234 | 0.4251 | 0.4900 | 0.5053 | 0.5097 |
| | CCA | 0.5719 | 0.5693 | 0.5672 | 0.3422 | 0.3361 | 0.3300 | 0.3604 | 0.3485 | 0.3390 |
| T → I | DCMH | **0.7827** | **0.7900** | **0.7932** | **0.5185** | **0.5378** | **0.5468** | **0.6389** | **0.6511** | **0.6571** |
| | SePH | 0.7216 | 0.7261 | 0.7319 | 0.4423 | 0.4562 | 0.4648 | 0.5983 | 0.6025 | 0.6109 |
| | STMH | 0.6074 | 0.6153 | 0.6217 | 0.3687 | 0.3897 | 0.4044 | 0.4471 | 0.4677 | 0.4780 |
| | SCM | 0.6939 | 0.7012 | 0.7060 | 0.3453 | 0.3410 | 0.3470 | 0.5344 | 0.5412 | 0.5484 |
| | CMFH | 0.6365 | 0.6399 | 0.6429 | 0.4168 | 0.4212 | 0.4277 | 0.5031 | 0.5187 | 0.5225 |
| | CCA | 0.5742 | 0.5713 | 0.5691 | 0.3493 | 0.3438 | 0.3378 | 0.3614 | 0.3494 | 0.3395 |

4.3.2 Hash Lookup

In the hash lookup protocol, we can compute the precision and recall for the returned points given any Hamming radius. By varying the Hamming radius from 0 to c with a step size of 1, we can get the precision-recall curve.

Figure 2 shows the precision-recall curves with code length 16 on the three datasets, where, in each row of the figure, the first two sub-figures use hand-crafted features for the baselines and the last two sub-figures use CNN-F features. DCMH dramatically outperforms the baselines for both hand-crafted features and CNN-F features. DCMH also achieves the best performance for other code lengths, such as 32 bits and 64 bits. Those results are omitted due to space limitations.

4.4. Comparison with DVSH

Since the source code of DVSH is not publicly available and DVSH is also difficult to re-implement, we adopt the same experimental setting as that in DVSH [3] to evaluate DCMH and directly use the results reported in DVSH [3] for comparison. The top-500 MAP results on the IAPR TC-12 dataset are listed in Table 5. Please note that the text input for DVSH is sentences, whereas we represent the sentences as BOW vectors for DCMH. DCMH outperforms DVSH in most cases (four of the six settings in Table 5).

Table 5. Top-500 MAP on IAPR TC-12 dataset.

| Task | Method | 16 bits | 32 bits | 64 bits |
|---|---|---|---|---|
| I → T | DCMH | 0.5780 | 0.6061 | 0.6310 |
| | DVSH | 0.5696 | 0.6321 | 0.6964 |
| T → I | DCMH | 0.6594 | 0.6744 | 0.6905 |
| | DVSH | 0.6037 | 0.6395 | 0.6806 |

4.5. Sensitivity to Parameters

We explore the influence of the hyper-parameters γ and η. Figure 3 shows the MAP results on the MIRFLICKR-25K dataset with different values of γ and η, where the code length is 16 bits. We can see that DCMH is not sensitive to γ and η when 0.01 < γ < 2 and 0.01 < η < 2.
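
The hash lookup protocol of Section 4.3.2 can be illustrated with a short NumPy sketch. This is not the authors' evaluation code. The function names `hamming_distances` and `pr_curve_hash_lookup`, the representation of codes as {-1, +1} vectors, the reading of c as the code length, the averaging of precision and recall over queries, and the convention that a query returning no points contributes a precision of 0 are all assumptions made here for illustration.

```python
import numpy as np


def hamming_distances(query_codes, db_codes):
    """Pairwise Hamming distances between {-1, +1} codes of length c."""
    q = np.asarray(query_codes, dtype=np.int32)
    d = np.asarray(db_codes, dtype=np.int32)
    c = q.shape[1]
    # For {-1, +1} codes, the inner product equals c - 2 * distance.
    return (c - q @ d.T) // 2


def pr_curve_hash_lookup(query_codes, db_codes, relevant):
    """Mean precision and recall for each Hamming radius 0, 1, ..., c.

    relevant[i, j] is True when database point j is a ground-truth
    neighbour of query i (hypothetical input format).
    """
    relevant = np.asarray(relevant, dtype=bool)
    c = np.asarray(query_codes).shape[1]
    dist = hamming_distances(query_codes, db_codes)
    n_relevant = relevant.sum(axis=1)
    n_queries = dist.shape[0]

    precision, recall = [], []
    for radius in range(c + 1):
        returned = dist <= radius                  # points inside the Hamming ball
        hits = (returned & relevant).sum(axis=1)   # relevant points among them
        n_returned = returned.sum(axis=1)
        # Assumed convention: an empty return set gives precision 0.
        p = np.divide(hits, n_returned,
                      out=np.zeros(n_queries), where=n_returned > 0)
        r = np.divide(hits, n_relevant,
                      out=np.zeros(n_queries), where=n_relevant > 0)
        precision.append(p.mean())
        recall.append(r.mean())
    return np.array(precision), np.array(recall)


# Toy usage: one 4-bit query against three database codes.
query = np.array([[1, 1, 1, 1]])
database = np.array([[1, 1, 1, 1], [1, 1, 1, -1], [-1, -1, -1, -1]])
ground_truth = np.array([[True, False, True]])
precision, recall = pr_curve_hash_lookup(query, database, ground_truth)
```

Plotting `precision` against `recall` gives one curve of the kind shown in Figure 2. Recall is non-decreasing in the radius because each larger Hamming ball contains the smaller ones.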