正在加载图片...

Advanced Review If A is diagonally dominant by rows,that is, U have nonnegative elements.Moreover,the growth factor pn=1.More importantly,for such matrices it i=1:n can be onger componentwi all ~3u or a is diagonally dominant by columns(that is.AT is diagonally dominant by rows)then it is safe not to use ALGORITHMS interchanges:the LU factorization without pivoting In principle,GE is computationally straightforward. exist Dn< It can be expressed in pseudocode as follows: li->D.then for k=1:n-1 have bandwidth p (=0 fori>j+p and=0 for forj=k:n fori=k+1:n preserve tor U that in m张=ak/akk =a-mka 0 end end end Here, BOX1 SPARSE MATRICES the upper t anglhrfacorUandthedemi of L are A matrix is sparse if it has a sufficiently large number of zero entries that it is worth taking There are 3!ways of ordering the three nested ne" GE ng the matrix and in loops in this pseudocode but not all matrix it can produce fill-in,which occurs when a ncy for computer imp de zero entry becomes nonzero.Depending on th f GE such those in kefs 16.17 a sparse matrix with and the LINPACK package -the inner loop tra- he columns of A,which matches t e order i etw ous tecr ues are nd Thran stor from the 1980s onwards led to the need to modif an issue,these techniques must be implementations of GE in order to maintain good effi y:ow the oo up into piec (or sparse GE to be successfully applied to extremely can arge matrice an te t atment tha lgorithm by writing ory or computation time for G tosolve Ax =b bec tive we must resort to []-[ae][69] m ally x[] A matrix is totally nonnegative if the determi (bywhatever means),solve suc then it has an LU factorization A=LU in which L and -L21U12, and a U are totally nonnegative,so that in particular L and S to obtain 1 and U.Th com 234 2011 John Wiley Sons.Inc Volume 3.Mayllune 2011 Advanced Review wires.wiley.com/compstats If A is diagonally dominant by rows, that is, n j=1 j

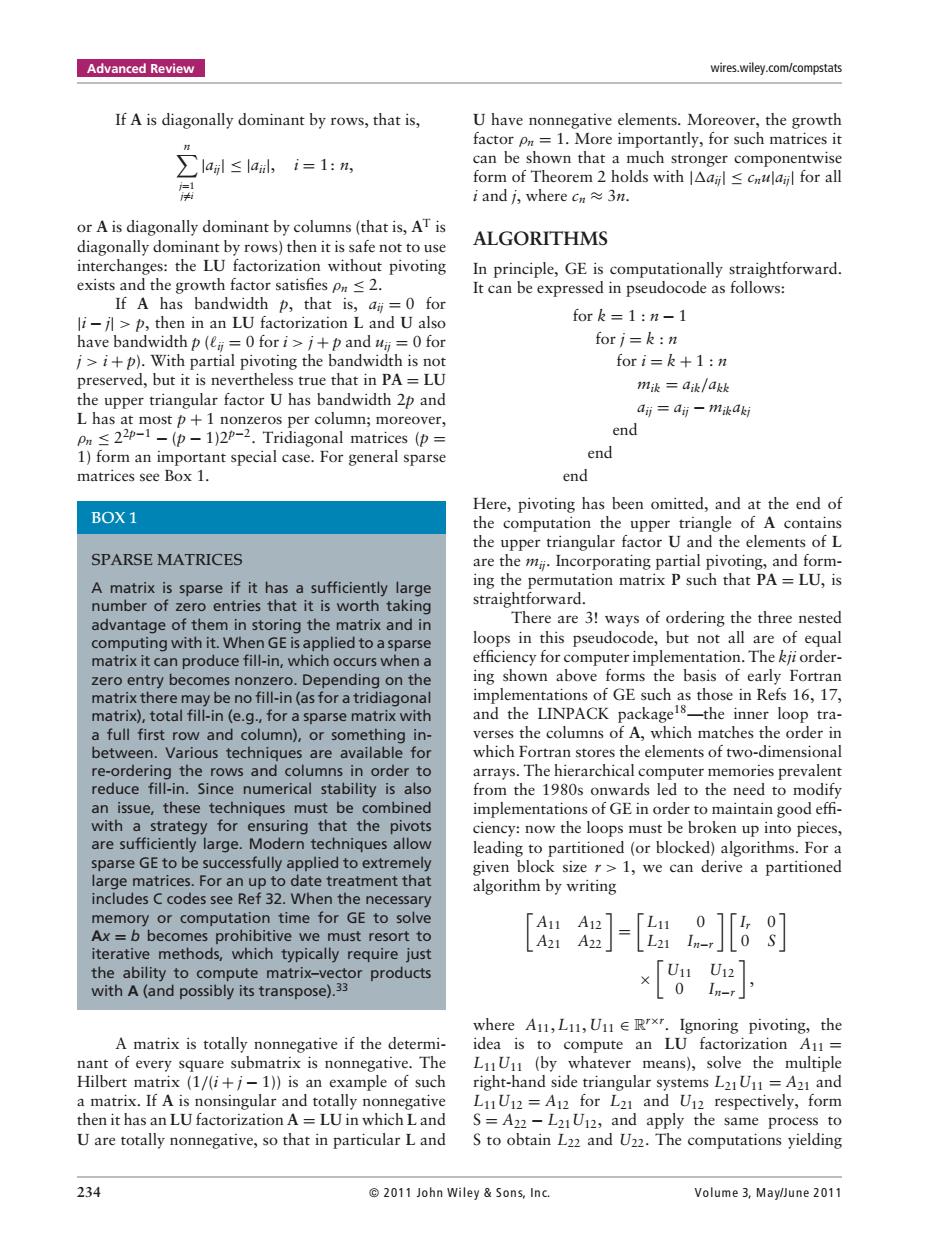

=i |aij|≤|aii|, i = 1: n, or A is diagonally dominant by columns (that is, AT is diagonally dominant by rows) then it is safe not to use interchanges: the LU factorization without pivoting exists and the growth factor satisfies ρn ≤ 2. If A has bandwidth p, that is, aij = 0 for |i − j| > p, then in an LU factorization L and U also have bandwidth p (ij = 0 for i > j + p and uij = 0 for j > i + p). With partial pivoting the bandwidth is not preserved, but it is nevertheless true that in PA = LU the upper triangular factor U has bandwidth 2p and L has at most p + 1 nonzeros per column; moreover, ρn ≤ 22p−1 − (p − 1)2p−2. Tridiagonal matrices (p = 1) form an important special case. For general sparse matrices see Box 1. BOX 1 SPARSE MATRICES A matrix is sparse if it has a sufficiently large number of zero entries that it is worth taking advantage of them in storing the matrix and in computing with it. When GE is applied to a sparse matrix it can produce fill-in, which occurs when a zero entry becomes nonzero. Depending on the matrix there may be no fill-in (as for a tridiagonal matrix), total fill-in (e.g., for a sparse matrix with a full first row and column), or something inbetween. Various techniques are available for re-ordering the rows and columns in order to reduce fill-in. Since numerical stability is also an issue, these techniques must be combined with a strategy for ensuring that the pivots are sufficiently large. Modern techniques allow sparse GE to be successfully applied to extremely large matrices. For an up to date treatment that includes C codes see Ref 32. When the necessary memory or computation time for GE to solve Ax = b becomes prohibitive we must resort to iterative methods, which typically require just the ability to compute matrix–vector products with A (and possibly its transpose).33 A matrix is totally nonnegative if the determinant of every square submatrix is nonnegative. The Hilbert matrix (1/(i + j − 1)) is an example of such a matrix. If A is nonsingular and totally nonnegative then it has an LU factorization A = LU in which L and U are totally nonnegative, so that in particular L and U have nonnegative elements. Moreover, the growth factor ρn = 1. More importantly, for such matrices it can be shown that a much stronger componentwise form of Theorem 2 holds with |aij| ≤ cnu|aij| for all i and j, where cn ≈ 3n. ALGORITHMS In principle, GE is computationally straightforward. It can be expressed in pseudocode as follows: for k = 1 : n − 1 for j = k : n for i = k + 1 : n mik = aik/akk aij = aij − mikakj end end end Here, pivoting has been omitted, and at the end of the computation the upper triangle of A contains the upper triangular factor U and the elements of L are the mij. Incorporating partial pivoting, and forming the permutation matrix P such that PA = LU, is straightforward. There are 3! ways of ordering the three nested loops in this pseudocode, but not all are of equal efficiency for computer implementation. The kji ordering shown above forms the basis of early Fortran implementations of GE such as those in Refs 16, 17, and the LINPACK package18—the inner loop traverses the columns of A, which matches the order in which Fortran stores the elements of two-dimensional arrays. The hierarchical computer memories prevalent from the 1980s onwards led to the need to modify implementations of GE in order to maintain good effi- ciency: now the loops must be broken up into pieces, leading to partitioned (or blocked) algorithms. For a given block size r > 1, we can derive a partitioned algorithm by writing A11 A12 A21 A22 = L11 0 L21 In−r Ir 0 0 S × U11 U12 0 In−r , where A11,L11, U11 ∈ Rr×r . Ignoring pivoting, the idea is to compute an LU factorization A11 = L11U11 (by whatever means), solve the multiple right-hand side triangular systems L21U11 = A21 and L11U12 = A12 for L21 and U12 respectively, form S = A22 − L21U12, and apply the same process to S to obtain L22 and U22. The computations yielding 234 2011 John Wiley & Son s, In c. Volume 3, May/June 2011�